Artificial Intelligence (AI) isn’t always about complex models or massive datasets. Sometimes, it’s about how you ask the questions. Imagine training a new hire—provide them with a few strong examples, and they quickly grasp the concept. That’s the essence of Few-Shot Prompting. Instead of presenting an AI with zero or just one example, you offer several—enough to demonstrate the format and intent.

This straightforward method helps the model understand the task and respond in the expected form. It improves clarity and accuracy in tasks such as summarization, translation, and data analysis. Few-shot prompting turns clear examples into an effective way of communicating with AI.

The Mechanics of Few-Shot Prompting

Few-shot prompting is more akin to coaching than engineering. You’re not reprogramming a language model or delving into its layers—you’re simply guiding it with a few examples. It’s like instructing a new team member by providing a few solid references. No additional training or system overhaul required—just clear guidance.

Here’s how it works: you provide the model with a few examples of inputs paired with the expected outputs. Then, you introduce a new input and let the model follow the established pattern. For instance, if you’re translating English into French, you’d provide a couple of English sentences followed by their French equivalents. Once the model recognizes the structure, it continues the pattern. It’s not learning French; it’s identifying and mimicking the format—similar to completing a melody after hearing the opening notes.
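The translation case above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed format: the function name `build_few_shot_prompt`, the `English:`/`French:` labels, and the sample sentences are all assumptions made for the example. The sketch only builds the prompt text; sending it to a model is left out because that depends on the API you use.

```python
def build_few_shot_prompt(examples, new_input,
                          source_label="English", target_label="French"):
    """Join (input, output) pairs into one prompt that ends with an open slot."""
    blocks = [
        f"{source_label}: {src}\n{target_label}: {tgt}"
        for src, tgt in examples
    ]
    # The final block repeats the pattern but leaves the output empty,
    # so the natural continuation is the missing translation.
    blocks.append(f"{source_label}: {new_input}\n{target_label}:")
    return "\n\n".join(blocks)


# Hypothetical example pairs, chosen only to show the pattern.
examples = [
    ("Good morning.", "Bonjour."),
    ("Where is the train station?", "Où est la gare ?"),
    ("Thank you very much.", "Merci beaucoup."),
]

prompt = build_few_shot_prompt(examples, "I would like a coffee.")
print(prompt)
```

The prompt ends on a bare `French:` label, which is what invites the model to continue the established pattern rather than start something new.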

Modern language models are designed to be context-aware. They treat everything in the prompt as a continuous sequence, predicting the next token based on the preceding text. By providing a few strong examples, you’re effectively steering what the model predicts next.

However, there’s a limit: every model has a fixed context window, and your examples share it with the actual request. Too many or overly lengthy examples can consume the space needed for the new input and the model’s answer. This is why clarity and brevity are crucial.
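One way to respect that constraint is to keep adding examples only while they fit a budget. The sketch below assumes a hypothetical helper, `estimate_tokens`, that simply counts whitespace-separated words. Real tokenizers count differently, so treat the numbers as a rough stand-in and swap in your model's own tokenizer in practice.

```python
def estimate_tokens(text):
    # Assumption: word count as a crude proxy for tokens.
    # Actual token counts depend on the model's tokenizer.
    return len(text.split())


def select_examples(examples, query, budget):
    """Keep examples in order until adding another would exceed the budget."""
    used = estimate_tokens(query)
    kept = []
    for example in examples:
        cost = estimate_tokens(example)
        if used + cost > budget:
            break
        kept.append(example)
        used += cost
    return kept


# Hypothetical sentiment examples, used only for illustration.
examples = [
    "Review: Great battery life. Sentiment: positive",
    "Review: Screen cracked in a week. Sentiment: negative",
    "Review: It works as described, nothing more. Sentiment: neutral",
]
query = "Review: Fast shipping and easy setup. Sentiment:"

kept = select_examples(examples, query, budget=25)
print(len(kept), "examples fit the budget")
```

Putting the strongest examples first matters here, since anything past the cutoff is dropped.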

Few-shot prompting is particularly useful when labeled data is scarce, the task is challenging to define, or a quick, flexible model interaction is desired. In many situations, better prompts yield better results, with no fine-tuning necessary.

Advantages and Limitations in Real-World Use

The primary advantage of few-shot prompting is its flexibility. You don’t need to retrain or fine-tune a model for task-specific performance. Instead, you guide the model solely through context. This makes it ideal for rapid prototyping, executing custom tasks, and generating language on-the-fly. You can create product descriptions, classify support tickets, extract data from text, or even simulate role-based conversations with minimal setup.

Another significant benefit is that few-shot prompting generally produces more consistent results than zero-shot prompting. Without context, the model often makes guesses that don’t align with your intended format. By offering a few examples, you give the model a template to follow. This is especially useful for tasks like generating code snippets, answering questions in a specific style, or formatting chatbot responses.

However, few-shot prompting has its challenges. For instance, you’re still working with a model that hasn’t genuinely “learned” your task. It’s imitating a pattern, not permanently adapting its behavior. This leaves room for drift: if your examples aren’t clear or consistent, the model may stray from the intended format. Additionally, complex tasks may be too intricate to capture with a few examples. For deeper reasoning or nuanced decision-making, prompt-only approaches might not suffice.

Another limitation is token length. With long-form input or multi-step reasoning, the examples, the input, and the expected output may not all fit in a single prompt. In such cases, breaking the task into smaller parts or using multi-turn prompting becomes essential.
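Breaking a task into smaller parts can be as simple as splitting the input and reusing the same examples for each piece. The following is a rough sketch under stated assumptions: the helper names, the `Text:`/`Summary:` labels, and the word-based chunk size are all hypothetical, and splitting on word counts ignores sentence boundaries that a real pipeline would probably want to respect.

```python
def split_into_chunks(text, max_words):
    """Split text into consecutive pieces of at most max_words words."""
    words = text.split()
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]


def prompts_for_long_input(few_shot_header, text, max_words):
    """Build one prompt per chunk, each starting with the same examples."""
    return [
        f"{few_shot_header}\n\nText: {chunk}\nSummary:"
        for chunk in split_into_chunks(text, max_words)
    ]


# A single hypothetical example serves as the shared header.
header = (
    "Text: The meeting moved to Friday at noon.\n"
    "Summary: Meeting is now Friday noon."
)
long_text = "word " * 25  # placeholder standing in for a long document

prompts = prompts_for_long_input(header, long_text, max_words=10)
print(len(prompts), "prompts built")
```

Each prompt can then be sent separately, with the partial results combined in a final step.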

Despite these constraints, few-shot prompting remains a powerful technique due to its simplicity. You don’t need a custom-built model, just good examples, making this method incredibly efficient for developers, researchers, and product teams.

How Does Few-Shot Prompting Compare with Other Techniques?

When comparing prompting strategies, it’s helpful to view them as points along a spectrum.

Zero-shot prompting assumes the model already comprehends the task. You instruct it without providing examples. While efficient, this often results in vague or inconsistent outputs, especially for unfamiliar tasks.

One-shot prompting offers a single example before the main request. This slightly improves performance, particularly with a strong example, but lacks sufficient pattern variety for the model to generalize.

Few-shot prompting, on the other hand, provides just enough variety to establish a reliable pattern. The model can infer structure, tone, and logic. It’s not as extensive as fine-tuning, which involves training on thousands of examples and requires a custom training pipeline. However, it’s more effective than zero-shot prompting, which leaves too much to chance.
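Seen in code, zero-shot, one-shot, and few-shot prompts differ only in how many examples sit between the instruction and the new input. The sketch below is illustrative: the `make_prompt` function, the ticket categories, and the sample tickets are assumptions made for the example rather than a standard format.

```python
def make_prompt(instruction, examples, new_input, shots):
    """Build a prompt using the first `shots` examples (0 means zero-shot)."""
    parts = [instruction]
    for text, label in examples[:shots]:
        parts.append(f"Ticket: {text}\nCategory: {label}")
    # The new input always comes last, with its category left open.
    parts.append(f"Ticket: {new_input}\nCategory:")
    return "\n\n".join(parts)


instruction = "Classify each support ticket as billing, technical, or account."
# Hypothetical labeled tickets, used only to illustrate the pattern.
examples = [
    ("I was charged twice this month.", "billing"),
    ("The app crashes when I open settings.", "technical"),
    ("How do I change my email address?", "account"),
]
new_ticket = "My invoice shows the wrong amount."

zero_shot = make_prompt(instruction, examples, new_ticket, shots=0)
one_shot = make_prompt(instruction, examples, new_ticket, shots=1)
few_shot = make_prompt(instruction, examples, new_ticket, shots=3)

print(few_shot)
```

Covering each category once in the few-shot version gives the model the variety that a single example cannot.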

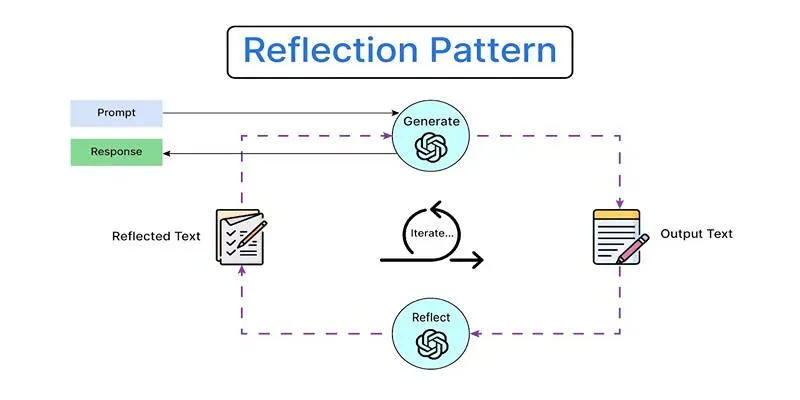

There’s also chain-of-thought prompting, which sometimes overlaps with few-shot techniques. In this method, each example includes the reasoning process leading to the final answer. This is particularly effective for complex tasks like math or logic problems. When combined with few-shot prompting, it enhances both performance and transparency.
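Combining the two might look like the sketch below, where each example carries its reasoning as well as its answer. The `Q:`/`Reasoning:`/`Answer:` labels, the function name, and the arithmetic problems are assumptions chosen for illustration; other layouts may work just as well.

```python
def build_cot_prompt(examples, question):
    """Build a few-shot prompt whose examples include worked reasoning."""
    blocks = [
        f"Q: {q}\nReasoning: {reasoning}\nAnswer: {answer}"
        for q, reasoning, answer in examples
    ]
    # End on an open "Reasoning:" so the model writes its steps
    # before committing to an answer.
    blocks.append(f"Q: {question}\nReasoning:")
    return "\n\n".join(blocks)


# Hypothetical worked examples.
examples = [
    (
        "A box holds 4 pens. How many pens are in 3 boxes?",
        "Each box has 4 pens, and 3 boxes means 3 * 4 = 12.",
        "12",
    ),
    (
        "Sam had 10 apples and gave away 3. How many are left?",
        "Starting with 10 and removing 3 gives 10 - 3 = 7.",
        "7",
    ),
]

cot_prompt = build_cot_prompt(
    examples,
    "A train has 5 cars with 20 seats each. How many seats in total?",
)
print(cot_prompt)
```

Because the reasoning appears in the output, it is easier to see where an answer went wrong.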

While few-shot prompting isn’t a universal solution, it often hits the sweet spot: low effort, high impact. It’s particularly effective when speed is needed, there’s no time for dataset curation, or when experimenting with model behavior in different contexts.

Conclusion

Few-shot prompting demonstrates that a handful of good examples can significantly guide AI behavior. Instead of complex training or extensive coding, it relies on simple, well-structured prompts to instruct models. This approach mirrors human learning—with just a few cues, we understand and apply patterns. It’s fast, efficient, and incredibly useful across many AI tasks. As language models become more advanced, the way we prompt them is increasingly important. Few-shot prompting isn’t just a shortcut—it’s a practical strategy for smarter, smoother, and more predictable AI interactions.
