In Natural Language Processing (NLP), lemmatization reduces words to their base dictionary forms, known as lemmas, while preserving their meaning in context. Unlike stemming, which strips suffixes by rule and can produce non-words, lemmatization outputs valid dictionary words. This article explains how lemmatization works, compares it with stemming, discusses implementation challenges, and surveys practical applications and tools.

The Role of Lemmatization in NLP

Human language is inherently complex, with words appearing in various forms depending on tense, number, and grammatical function. For machines to comprehend text effectively, a standardized format of these word variations is essential. Lemmatization simplifies words to their fundamental forms, allowing algorithms to process “running,” “ran,” and “runs” as the same term, “run.” This technique significantly enhances the performance of NLP models in tasks such as document classification, chatbots, and semantic search.

How Lemmatization Works: A Step-by-Step Process

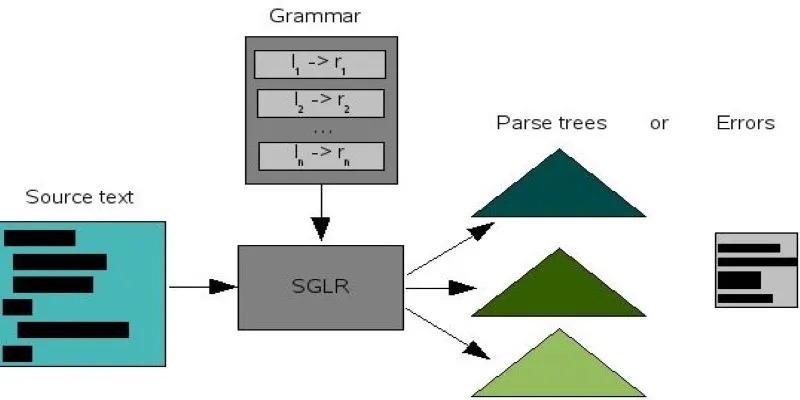

Lemmatization goes beyond basic rule-based truncation: it combines several stages of linguistic analysis, described below.

Morphological Analysis:

Words are broken down into morphological components to identify their root elements, prefixes, and suffixes. For instance, “unhappiness” is divided into “un-” (prefix), “happy” (root), and “-ness” (suffix).
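
The decomposition above can be sketched in a few lines of Python. This is a minimal illustration, not how production analyzers work: the affix lists, the root lexicon, and the function names `restore_root` and `segment` are all hypothetical and cover only a handful of English words.

```python
# Toy morphological segmenter. The affix lists and root lexicon are
# hypothetical and cover only a handful of English words.
PREFIXES = ("un", "re", "dis")
SUFFIXES = ("ness", "ment", "ing", "ed")
ROOTS = {"happy", "kind", "agree", "play"}


def restore_root(stem):
    """Return the dictionary root for a stem, undoing the y -> i spelling change."""
    if stem in ROOTS:
        return stem
    if stem.endswith("i") and stem[:-1] + "y" in ROOTS:
        return stem[:-1] + "y"
    return None


def segment(word):
    """Split a word into (prefix, root, suffix); absent parts are empty strings."""
    word = word.lower()
    for prefix in ("",) + PREFIXES:
        if not word.startswith(prefix):
            continue
        rest = word[len(prefix):]
        for suffix in ("",) + SUFFIXES:
            if suffix and not rest.endswith(suffix):
                continue
            stem = rest[: len(rest) - len(suffix)]
            root = restore_root(stem)
            if root is not None:
                return prefix, root, suffix
    # No analysis found: treat the whole word as the root.
    return "", word, ""


print(segment("unhappiness"))  # ('un', 'happy', 'ness')
print(segment("replayed"))     # ('re', 'play', 'ed')
```

Checking every candidate root against a lexicon is what keeps the segmenter from inventing roots such as “happi.”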

Part-of-Speech (POS) Tagging:

The system analyzes each word to determine its function—such as noun, verb, adjective, or adverb—since lemmas may vary based on context. For example, “saw” can be a verb with the lemma “see” or a noun with the lemma “saw.”
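
To illustrate why the POS tag matters, the sketch below keys a lemma table on the pair (word, tag). The table contents, the tag names, and the `lemmatize` helper are assumptions made for this example; a real system would obtain the tag from a trained tagger.

```python
# Hypothetical lookup table keyed by (surface form, POS tag).
LEMMAS = {
    ("saw", "VERB"): "see",
    ("saw", "NOUN"): "saw",
    ("leaves", "VERB"): "leave",
    ("leaves", "NOUN"): "leaf",
}


def lemmatize(word, pos):
    """Return the lemma for a word given its POS tag; unknown pairs pass through."""
    return LEMMAS.get((word.lower(), pos), word.lower())


print(lemmatize("saw", "VERB"))  # see
print(lemmatize("saw", "NOUN"))  # saw
```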

Contextual Understanding:

The lemmatizer uses the surrounding text to resolve ambiguous words. For example, “the bat flew” indicates an animal, while “he swung the bat” refers to a piece of sports equipment.
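
One simple way to approximate this step is to count how many cue words for each sense appear in the sentence. The sketch below assumes a hand-written cue list and a hypothetical `disambiguate` function; practical systems typically rely on statistical or neural models instead.

```python
# Hypothetical cue words for two senses of "bat".
SENSE_CUES = {
    "animal": {"flew", "cave", "wings", "nocturnal"},
    "sports equipment": {"swung", "ball", "hit", "baseball"},
}


def disambiguate(sentence):
    """Pick the sense whose cue words overlap most with the sentence."""
    tokens = {token.strip(".,!?").lower() for token in sentence.split()}
    scores = {sense: len(cues & tokens) for sense, cues in SENSE_CUES.items()}
    best = max(scores, key=scores.get)
    # Return None when no cue word appears at all.
    return best if scores[best] > 0 else None


print(disambiguate("The bat flew out of the cave."))  # animal
print(disambiguate("He swung the bat."))              # sports equipment
```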

Dictionary Lookup:

Finally, the algorithm looks up the word, together with its POS tag, in a lexical database such as WordNet to retrieve the base form (lemma).
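
A lookup of this kind can be sketched as an exception list for irregular forms followed by suffix rules whose results are accepted only if they appear in the lexicon. The approach is loosely modeled on how WordNet-style lookups are usually described; the lexicon, the exception list, the rules, and the `lookup_lemma` name are hypothetical and limited to a few verbs.

```python
# Tiny hypothetical lexicon, exception list, and suffix rules for verbs.
LEXICON = {"run", "make", "jump", "study"}
EXCEPTIONS = {"ran": "run", "made": "make"}
RULES = [("ies", "y"), ("ing", "e"), ("ing", ""), ("ed", "e"), ("ed", ""), ("s", "")]


def lookup_lemma(word):
    """Return a lemma found in LEXICON, or None when nothing matches."""
    word = word.lower()
    if word in LEXICON:          # already a base form
        return word
    if word in EXCEPTIONS:       # irregular forms are listed explicitly
        return EXCEPTIONS[word]
    for suffix, replacement in RULES:
        if word.endswith(suffix):
            candidate = word[: -len(suffix)] + replacement
            if candidate in LEXICON:   # accept only real dictionary entries
                return candidate
    return None


for form in ["ran", "making", "jumped", "studies", "runs"]:
    print(form, "->", lookup_lemma(form))
```

Validating each candidate against the lexicon is the step that separates this lookup from plain suffix stripping.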

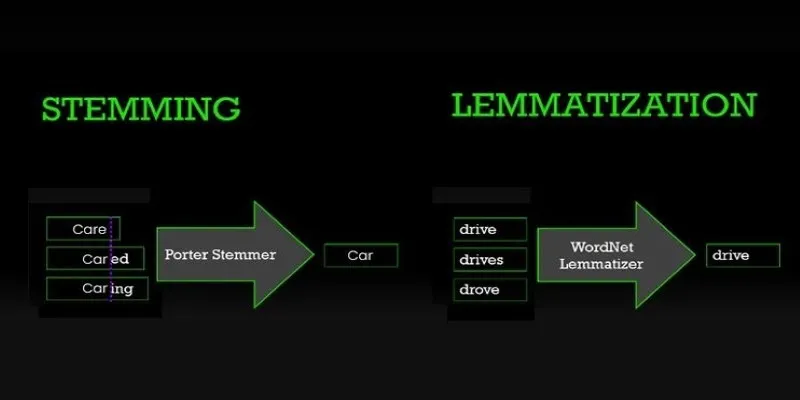

Lemmatization vs. Stemming: Key Differences

While both techniques aim to normalize text, lemmatization and stemming differ significantly in their methods and results.

- Stemming: Involves removing word endings using predefined rules, often resulting in non-dictionary words. For example, “jumping” becomes “jump,” but “happily” might turn into “happili,” which is nonsensical.

- Lemmatization: Utilizes context and grammatical analysis to produce valid dictionary terms. “Studies” becomes “study,” and “geese” becomes “goose,” while an adverb such as “happily” is typically kept as the valid word “happily” rather than truncated.

- Stemming is faster but less accurate, suitable for indexing, while lemmatization provides better semantic accuracy, ideal for precise tasks like chatbots or sentiment analysis.
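
The contrast can be made concrete with a small sketch. The stemmer below is a deliberately crude stand-in, not the Porter algorithm, and the lemma table is hypothetical; both exist only to show how rule-only stripping differs from a dictionary lookup.

```python
# A deliberately crude stemmer (not the Porter algorithm) next to a toy
# lookup-based lemmatizer. Both the rules and the table are hypothetical.
def crude_stem(word):
    """Strip or rewrite a few endings by rule without checking any dictionary."""
    word = word.lower()
    if word.endswith("ing") and len(word) > 5:
        return word[:-3]
    if word.endswith("y") and len(word) > 3:
        return word[:-1] + "i"   # y -> i rewrite, similar in spirit to classic stemmers
    return word


LEMMA_TABLE = {"jumping": "jump", "geese": "goose"}


def toy_lemmatize(word):
    """Look the word up in a table of known forms; unknown words pass through."""
    return LEMMA_TABLE.get(word.lower(), word.lower())


print(crude_stem("jumping"))     # jump
print(crude_stem("happily"))     # happili
print(crude_stem("geese"))       # geese
print(toy_lemmatize("jumping"))  # jump
print(toy_lemmatize("geese"))    # goose
```

The stemmer never consults a dictionary, so it can emit “happili” and cannot relate “geese” to “goose”; the lookup handles the irregular plural but only for forms it knows.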

Advantages of Lemmatization

Enhanced Semantic Accuracy:

Lemmatization lets NLP models recognize relationships between word forms, such as “better” being the comparative form of “good,” which improves tasks like sentiment analysis.

Improved Search Engine Performance:

Lemmatization allows search engines to retrieve all relevant documents containing “run” or “ran” when users search for “running shoes.”
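
A minimal sketch of this idea indexes documents by lemma and lemmatizes the query the same way. The lemma table, the sample documents, and the function names are assumptions made for illustration; a real search engine would use a full lemmatizer and ranking on top.

```python
from collections import defaultdict

# Hypothetical lemma table; a real system would use a full lemmatizer.
LEMMAS = {"running": "run", "ran": "run", "runs": "run", "shoes": "shoe"}


def lemmatize(token):
    """Map a token to its lemma; unknown tokens pass through unchanged."""
    return LEMMAS.get(token, token)


def tokenize(text):
    """Lowercase the text and split on whitespace, dropping edge punctuation."""
    return [token.strip(".,!?").lower() for token in text.split()]


def build_index(documents):
    """Map each lemma to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for token in tokenize(text):
            index[lemmatize(token)].add(doc_id)
    return index


def search(index, query):
    """Return ids of documents sharing at least one lemma with the query."""
    hits = set()
    for token in tokenize(query):
        hits |= index.get(lemmatize(token), set())
    return sorted(hits)


docs = {
    1: "She ran five miles yesterday.",
    2: "Best shoes for a morning run.",
    3: "A recipe for lemon cake.",
}
index = build_index(docs)
print(search(index, "running shoes"))  # [1, 2]
```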

Reduced Data Noise:

Consolidating word variants into a single form streamlines datasets, reducing duplication and enhancing machine learning processes.
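
The effect on vocabulary size can be shown with a short count. The token list, the lemma table, and the `vocabulary_sizes` helper below are hypothetical.

```python
from collections import Counter

# Hypothetical lemma table covering the sample tokens below.
LEMMAS = {"loved": "love", "loving": "love", "loves": "love",
          "ran": "run", "running": "run"}


def vocabulary_sizes(tokens):
    """Return (distinct raw forms, distinct lemmas, lemma counts) for a token list."""
    raw_counts = Counter(tokens)
    lemma_counts = Counter(LEMMAS.get(token, token) for token in tokens)
    return len(raw_counts), len(lemma_counts), dict(lemma_counts)


tokens = ["love", "loved", "loving", "run", "ran", "running", "loves"]
print(vocabulary_sizes(tokens))  # (7, 2, {'love': 4, 'run': 3})
```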

Support for Multilingual Applications:

Effective lemmatization systems handle morphologically rich languages, such as Finnish and Arabic, where a single word can take many inflected forms.

Challenges in Lemmatization

Computational Complexity:

POS tagging and dictionary lookups add processing time compared with simple stemming, which can matter for large corpora or latency-sensitive applications.

Language-Specific Limitations:

While English lemmatizers are well-developed, tools for low-resource languages may lack accuracy due to limited lexical data.

Ambiguity Resolution:

Advanced processing is required to distinguish meanings in words like “lead” (to guide) versus “lead” (a metal), with potential for errors.

Integration with Modern NLP Models:

Subword tokenization in models like BERT reduces the need for explicit lemmatization, but lemmatization remains valuable for rule-based applications and for making results interpretable to humans.

Applications of Lemmatization Across Industries

Healthcare:

Lemmatization aids in interpreting patient statements, like “My head hurts” and “I’ve had a headache,” for consistent diagnostic input.

E-Commerce:

Online platforms enhance recommendation systems by linking terms like “wireless headphones” and “headphone wireless.”

Legal Tech:

Lemmatized legal terms help document analysis tools identify related concepts, such as “termination” and “terminate,” in contracts.

Social Media Monitoring:

Brands track consumer sentiment by converting keywords like “love,” “loved,” and “loving” into their base forms to analyze opinion trends.

Machine Translation:

Lemmatization helps translation software match inflected word forms to their dictionary entries, improving accuracy at the phrase level.

Tools and Libraries for Lemmatization

NLTK (Python):

WordNetLemmatizer treats every word as a noun unless told otherwise, so explicit POS tags are needed to transform words like “better” (adjective) into “good” and “running” (verb) into “run.”

spaCy:

spaCy assigns part-of-speech tags automatically as part of its processing pipeline and uses them to lemmatize each token efficiently.

Stanford CoreNLP:

This Java-based toolkit provides enterprise-grade lemmatization for academic and business applications across various languages.

Gensim:

While primarily a topic-modeling library, Gensim is commonly paired with spaCy or NLTK, which handle text preprocessing steps such as lemmatization.

Future of Lemmatization in AI

As NLP models grow in complexity, lemmatization is likely to remain relevant in several areas:

- Explainability: Translating model outputs into human-readable terms.

- Hybrid frameworks: Combining rule-based lemmatization with deep learning for domain-specific text understanding, such as in medicine and law.

- Underrepresented languages: Improving lemmatizers through multilingual transformers and transfer learning methods.

Conclusion

Lemmatization improves the accuracy and consistency of NLP systems by normalizing the many surface forms of natural language. A working knowledge of lemmatization techniques is valuable for data scientists and developers involved in language model development, search algorithm enhancement, and chatbot creation. As AI becomes part of daily operations, lemmatization remains one of the tools that make human-machine interaction more reliable.
