Phones, hospitals, and banks all use artificial intelligence (AI) to make decisions faster. However, many people do not understand how AI works, which can lead to misinterpretation and mistrust. This is where explainable artificial intelligence (XAI) comes in. XAI is a set of methods that show how an AI system reaches its decisions, in terms that people can follow.

XAI is useful in sectors such as law, business, and healthcare, where people need to be able to trust the outcomes. With XAI, users can see how an AI system works, which makes it possible to catch errors and improve the system. Developers can identify weaknesses and build better models. For everyday technology users, that transparency makes AI tools easier to trust.

What Is AI and Why Is It Hard to Understand?

AI systems learn from data: they identify patterns and use them to make predictions and decisions. While AI can outperform humans in speed and, on some tasks, accuracy, understanding how it arrives at a decision can be challenging. Complex models such as deep neural networks operate as “black boxes,” concealing the reasoning behind their decisions. This lack of transparency can raise doubts about the reliability of AI.

Like a student who gives the correct answer without showing the work, an AI system that gives no reasoning may not be believed even when it is right. This is where explainable AI helps: it makes the reasoning behind AI decisions visible, which improves trust and understanding.

What Is Explainable AI (XAI)?

Explainable artificial intelligence (XAI) aims to demystify AI decision-making by showing the reasoning behind a model's outputs. It makes AI systems more credible by shedding light on how they operate, which matters most in sectors like law, healthcare, and finance, where trust is paramount. XAI offers two main types of explanations:

- Global Explanation: Offers a comprehensive view of how AI makes decisions, providing users with an understanding of the overall model operation.

- Local Explanation: Focuses on explaining specific AI decisions, clarifying why a particular choice was made in a given context.
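The difference between the two types can be sketched on a very small example. The code below is a minimal illustration, assuming a hypothetical linear scoring model; the feature names, weights, and applicant data are all made up for the example. For a linear model, the local explanation of one prediction is each feature's weighted contribution, and a simple global explanation is the average size of those contributions across a dataset.

```python
# Hypothetical linear scoring model: score = BIAS + sum(weight * feature).
# All names and numbers here are invented for illustration.
WEIGHTS = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
BIAS = 0.1

# Small made-up dataset of (already scaled) feature values.
APPLICANTS = [
    {"income": 1.0, "debt": 0.5, "years_employed": 2.0},
    {"income": -0.5, "debt": 1.5, "years_employed": 0.0},
    {"income": 0.5, "debt": -1.0, "years_employed": 1.0},
]


def predict(x):
    """Score one applicant with the linear model."""
    return BIAS + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)


def local_explanation(x):
    """Local: how much each feature pushed THIS prediction up or down."""
    return {name: WEIGHTS[name] * x[name] for name in WEIGHTS}


def global_explanation(rows):
    """Global: mean absolute contribution of each feature over all rows."""
    return {
        name: sum(abs(WEIGHTS[name] * row[name]) for row in rows) / len(rows)
        for name in WEIGHTS
    }


if __name__ == "__main__":
    applicant = APPLICANTS[1]
    print("score:", predict(applicant))
    print("local:", local_explanation(applicant))
    print("global:", global_explanation(APPLICANTS))
```

For the second applicant, the local explanation shows that debt is what lowered the score, while the global explanation shows that debt is also the most influential feature across the whole dataset. Real models are rarely this simple, which is why dedicated techniques exist for producing both kinds of explanation.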

Why Explainable AI Is Important

Explainable artificial intelligence is important for several reasons:

- Builds Trust: Transparent systems instill confidence. Understanding AI processes fosters trust, encouraging widespread adoption.

- Supports Fairness: XAI helps reveal biases in AI decisions so they can be corrected, supporting fair treatment for all users.

- Helps Meet Legal Requirements: XAI enables compliance with regulations by providing clear documentation of AI decision-making processes.

- Improves Models: XAI helps developers identify and correct errors, which improves system performance and reliability.

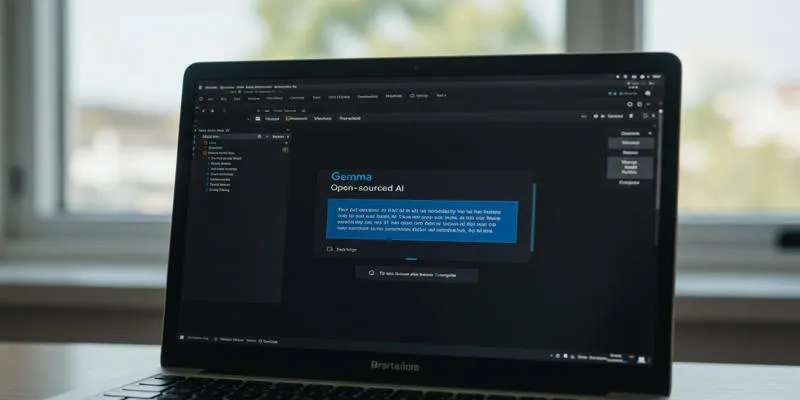

How Explainable AI Works

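Explainable artificial intelligence uses several techniques to make complex models easier to understand. Feature importance ranks the inputs by how much they influence predictions. LIME (Local Interpretable Model-agnostic Explanations) approximates the model around a single prediction with a simpler, interpretable one. SHAP (SHapley Additive exPlanations) assigns each feature a share of a prediction based on Shapley values. Decision trees are interpretable by design, since each prediction follows a readable path of rules.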
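To make the idea behind SHAP more concrete, here is a minimal sketch of the Shapley values it is built on. It does not use the SHAP library. It assumes a hypothetical three-feature model and an all-zero baseline, and it computes exact Shapley values by trying every combination of features, which is only practical for a handful of features. The model, feature names, and baseline are invented for the example.

```python
from itertools import combinations
from math import factorial

# Hypothetical baseline input that stands for "feature not known".
BASELINE = {"age": 0.0, "income": 0.0, "tenure": 0.0}


def model(x):
    """Made-up model with an interaction term; it ignores 'tenure'."""
    return 2.0 * x["age"] + 1.0 * x["income"] + 0.5 * x["age"] * x["income"]


def value(instance, coalition):
    """Model output when only the features in `coalition` are revealed."""
    mixed = {
        name: (instance[name] if name in coalition else BASELINE[name])
        for name in BASELINE
    }
    return model(mixed)


def shapley_values(instance):
    """Exact Shapley values by enumerating every subset of other features."""
    names = list(BASELINE)
    n = len(names)
    result = {}
    for name in names:
        others = [other for other in names if other != name]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley formula.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_feature = value(instance, set(subset) | {name})
                without_feature = value(instance, set(subset))
                total += weight * (with_feature - without_feature)
        result[name] = total
    return result


if __name__ == "__main__":
    instance = {"age": 1.0, "income": 2.0, "tenure": 5.0}
    print(shapley_values(instance))
    print("prediction minus baseline:", model(instance) - model(BASELINE))
```

The values add up to the difference between the prediction and the baseline output, and the feature the model ignores receives a value of zero. Practical tools approximate this calculation, because the number of feature combinations grows exponentially with the number of features.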

When Should You Use Explainable AI?

XAI is particularly important in fields where AI decisions significantly affect individuals’ lives, such as finance and healthcare. It builds trust, supports compliance with legal standards, and helps with troubleshooting and model refinement, which in turn improves system performance.

Conclusion

Explainable artificial intelligence (XAI) is vital for understanding how AI makes decisions. It fosters confidence, supports fairness, and promotes transparency across sectors like law, banking, and healthcare. By helping users understand AI decisions, XAI strengthens trust, compliance, and system performance, making it an indispensable tool in the age of AI.
