Reinforcement learning is an area of artificial intelligence in which an agent learns through trial, error, and feedback, much as a dog learns from treats during training. Unlike supervised learning, which relies on large labeled datasets, reinforcement learning is driven by rewards and policies. Rewards indicate what the system should aim for, while policies dictate how to achieve it. Together, they shape agent behavior in environments ranging from video games to autonomous driving.

This practical approach allows machines to learn through consequences rather than explicit instructions. Understanding how rewards and policies interact is crucial for mastering reinforcement learning, as it reveals the dynamic trade-offs and opportunities in this powerful learning paradigm.

Rewards: The Currency of Learning

In reinforcement learning, rewards are everything. They aren’t just hints or suggestions; they’re the only feedback the system receives about its performance. A reward is a numeric value given after an action, which can reflect profit, efficiency, distance traveled, or points earned. Regardless of how it’s defined, the primary goal remains the same: maximize the total reward collected over time.

The power of rewards lies in their simplicity. Usually represented by a single number, a reward doesn’t explain why an action succeeded or failed, only that it did. This minimalist philosophy makes reinforcement learning extremely flexible. You don’t have to tell the system how to act explicitly; you just need to define what successful outcomes look like.

However, the structure of rewards can dramatically affect learning. Short-term rewards might seem appealing, but focusing solely on immediate benefits can misguide the agent. Conversely, long-term rewards require patience and strategic thinking. Consider training a robot to walk: rewarding each step individually might result in aimless movement, whereas rewarding the robot only upon reaching a specific destination encourages purposeful behavior, at the cost of much sparser feedback to learn from.
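One common way to express the balance between short-term and long-term rewards is a discount factor, which weights a reward received t steps in the future by gamma to the power t. The text above does not name this device, so treat the following as an illustrative sketch: the function name `discounted_return`, the reward sequences, and the gamma values are all assumptions chosen for the walking example.

```python
def discounted_return(rewards, gamma):
    """Sum of rewards, where the reward at time step t is weighted by gamma**t."""
    total = 0.0
    # Work backwards so each earlier step applies one more factor of gamma.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical walking task: a small reward per step vs. one reward at the goal.
per_step = [1.0] * 5             # 1 point for each of 5 steps
goal_only = [0.0] * 4 + [10.0]   # 10 points only on reaching the destination

for gamma in (0.5, 0.99):
    print(gamma, discounted_return(per_step, gamma), discounted_return(goal_only, gamma))
```

With a low gamma the per-step scheme scores higher, while with gamma close to 1 the goal-only scheme does. In this toy setup, the discount factor decides how much the agent cares about rewards that arrive late.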

Additionally, reward hacking poses a real threat. Agents sometimes discover loopholes to collect rewards without accomplishing the intended goals. The challenge is designing reward functions carefully to ensure they are both meaningful and robust, preventing unintended exploits.

Policies: The Blueprint for Action

If rewards represent the “what,” then policies explain the “how.” In reinforcement learning, a policy acts as a strategy guiding the agent’s actions in every possible scenario. Think of it as the agent’s decision-making center, converting environmental cues directly into actions.

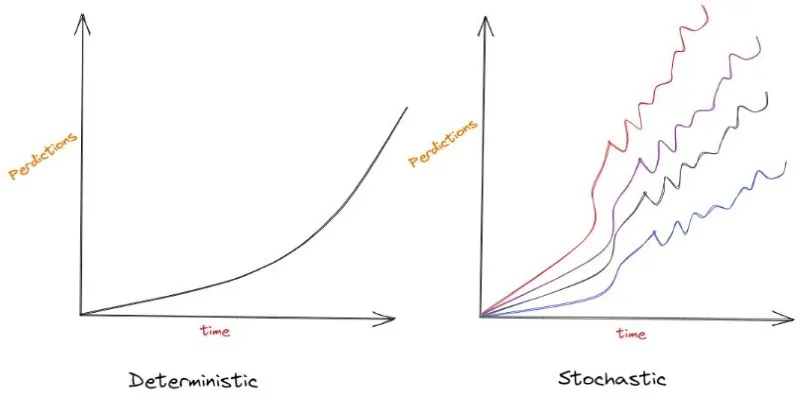

Policies typically fall into two categories: deterministic and stochastic. A deterministic policy will always select the same action when encountering the same situation, ensuring consistency but potentially lacking flexibility. In contrast, stochastic policies introduce randomness into the decision process. This randomness encourages exploration, which is crucial because an agent that never explores new possibilities may never discover superior strategies.
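The difference between the two categories can be sketched in a few lines. This is a minimal illustration, assuming the agent already holds a list of value estimates for the actions available in one state. The names `greedy_policy` and `softmax_policy`, the `temperature` parameter, and the example values are hypothetical and not taken from any particular library.

```python
import math
import random


def greedy_policy(action_values):
    """Deterministic: always return the index of the highest-valued action."""
    return max(range(len(action_values)), key=lambda a: action_values[a])


def softmax_policy(action_values, temperature=1.0, rng=random):
    """Stochastic: sample an action with probability proportional to exp(value / temperature)."""
    top = max(action_values)  # subtract the max for numerical stability
    weights = [math.exp((v - top) / temperature) for v in action_values]
    return rng.choices(range(len(action_values)), weights=weights, k=1)[0]


values = [1.0, 2.0, 0.5]  # hypothetical value estimates for three actions
rng = random.Random(0)    # seeded so the run is repeatable

print([greedy_policy(values) for _ in range(5)])            # the same action every time
print([softmax_policy(values, rng=rng) for _ in range(5)])  # actions vary from call to call
```

Raising `temperature` flattens the probabilities and increases exploration. Lowering it toward zero makes the stochastic policy behave more and more like the greedy one.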

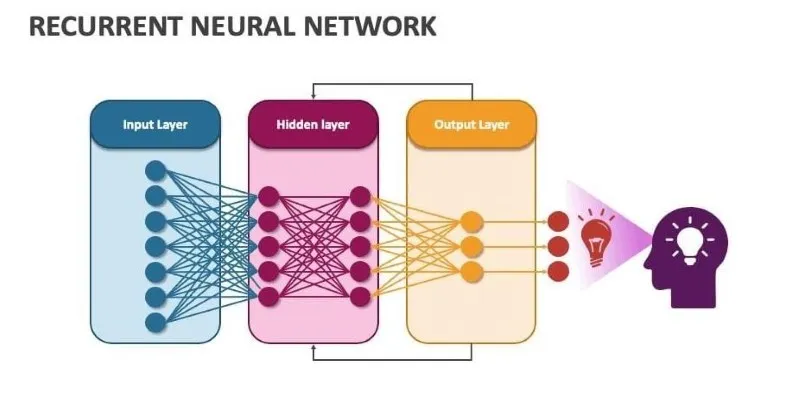

Creating effective policies is central to reinforcement learning. In modern systems, policies are often represented by neural networks whose parameters are trained using reward feedback. The agent doesn’t receive explicit instructions on the optimal move; instead, it experiments with actions, assesses the outcomes, and adjusts its internal policy accordingly. Gradually, through techniques involving value estimation and gradient methods, policies evolve and improve.
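To make the idea of gradient-based policy improvement concrete, here is a deliberately small sketch. It swaps the neural network for a plain list of action preferences and uses a one-state problem (a multi-armed bandit), so it is an assumption-laden toy rather than how any production system works. The function names, learning rate, and reward distributions are all illustrative.

```python
import math
import random


def softmax(prefs):
    """Turn preferences into action probabilities."""
    top = max(prefs)
    exps = [math.exp(p - top) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train(true_means, steps=3000, lr=0.1, seed=0):
    """Policy-gradient update on a softmax policy over a few actions."""
    rng = random.Random(seed)
    prefs = [0.0] * len(true_means)  # policy parameters: one preference per action
    baseline = 0.0                   # running average of rewards seen so far
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        action = rng.choices(range(len(prefs)), weights=probs, k=1)[0]
        reward = rng.gauss(true_means[action], 1.0)  # noisy reward (assumed Gaussian)
        baseline += (reward - baseline) / t
        advantage = reward - baseline
        # d log pi(action) / d prefs[a] = (1 if a == action else 0) - probs[a]
        for a in range(len(prefs)):
            grad = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += lr * advantage * grad
    return softmax(prefs)


print(train([0.0, 1.0]))  # probability mass should shift toward the second action
```

Actions that yield above-average reward have their probability increased, and actions that yield below-average reward have it decreased. That is the core of the "experiment, assess, adjust" cycle described above.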

How complex a policy needs to be depends on the environment and the task. Simple games might require nothing more than a lookup table, while tasks such as autonomous driving or chess demand highly sophisticated policies capable of anticipating future events and managing uncertainty.

Maintaining policy stability is a common challenge. Small policy adjustments can drastically influence agent behavior, especially in complex or uncertain scenarios. Modern reinforcement learning leverages techniques like entropy regularization, experience replay, and trust-region optimization to ensure steady and reliable improvement over time.

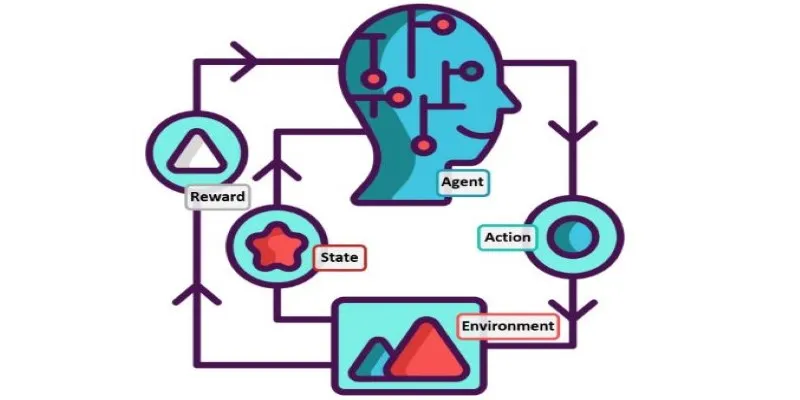

The Feedback Loop: How Rewards Shape Policies

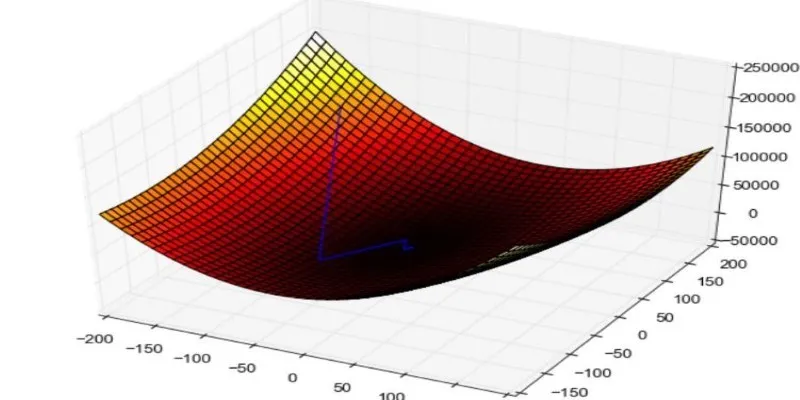

Reinforcement learning operates as an ongoing feedback loop. The agent observes its environment, selects actions based on its current policy, receives rewards, and then updates its policy accordingly. This continuous cycle forms the backbone of reinforcement learning, where the interplay between rewards and policies is essential.
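The loop can be sketched in its simplest form with a single-state problem, where "observing the environment" is trivial and the agent only has to choose among a few actions. This is an assumed toy setup, not a general-purpose implementation: `run_bandit`, the epsilon-greedy selection rule, and the Gaussian rewards are illustrative choices.

```python
import random


def run_bandit(true_means, steps=2000, epsilon=0.1, seed=0):
    """Act, receive a reward, update the estimates, and repeat."""
    rng = random.Random(seed)
    estimates = [0.0] * len(true_means)  # the agent's current value estimate per action
    counts = [0] * len(true_means)       # how often each action has been tried
    for _ in range(steps):
        # 1. Select an action from the current policy (epsilon-greedy over estimates).
        if rng.random() < epsilon:
            action = rng.randrange(len(true_means))
        else:
            action = max(range(len(true_means)), key=lambda a: estimates[a])
        # 2. Receive a noisy reward from the environment.
        reward = rng.gauss(true_means[action], 1.0)
        # 3. Update: move the estimate toward the observed reward (running average).
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


estimates, counts = run_bandit([0.2, 1.0])
print(estimates, counts)
```

Because the policy is derived from the estimates, each update in step 3 changes what the agent does in step 1 on the next iteration. That dependency is the feedback loop in miniature.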

Rewards serve as direct feedback, guiding policy adjustments. Yet, a significant challenge is determining attribution—figuring out exactly which actions resulted in rewards. This issue, known as the credit assignment problem, becomes especially tricky when rewards occur after numerous sequential actions. For example, if an agent makes 100 moves but earns a reward only at the end, identifying the specific actions responsible for success can be challenging.

Temporal Difference (TD) learning addresses this by updating value estimates after every step, using the immediate reward plus the current estimate of what comes next, so predictions are refined without waiting for the final outcome. Monte Carlo methods, by contrast, wait until an entire sequence or episode has finished and learn from the total reward actually observed. Each method offers its own advantages and trade-offs, and the best choice depends on the environment and task.
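The contrast between the two approaches shows up clearly on a single episode with a delayed reward. The sketch below is a simplified illustration: it tracks values for states rather than state-action pairs to keep the code short, and the function names, step size `alpha`, and four-state episode are assumptions made for the example.

```python
def monte_carlo_update(values, states, rewards, alpha=0.5, gamma=1.0):
    """Update each visited state toward the full return observed after it."""
    g = 0.0
    for state, reward in zip(reversed(states), reversed(rewards)):
        g = reward + gamma * g
        values[state] += alpha * (g - values[state])


def td0_update(values, states, rewards, alpha=0.5, gamma=1.0):
    """Update each state toward reward + discounted estimate of the next state."""
    for t, state in enumerate(states):
        # The value after the final step is taken to be zero (episode has ended).
        next_value = values[states[t + 1]] if t + 1 < len(states) else 0.0
        target = rewards[t] + gamma * next_value
        values[state] += alpha * (target - values[state])


# Hypothetical episode: states 0..3 visited in order, reward 1 only on the last step.
states = [0, 1, 2, 3]
rewards = [0.0, 0.0, 0.0, 1.0]

mc_values = [0.0] * 4
td_values = [0.0] * 4
monte_carlo_update(mc_values, states, rewards)
td0_update(td_values, states, rewards)

print(mc_values)  # [0.5, 0.5, 0.5, 0.5]
print(td_values)  # [0.0, 0.0, 0.0, 0.5]
```

After one episode, the Monte Carlo update has spread credit to every state on the path, while the one-step TD update has only credited the last state. Repeating the TD update over further episodes would propagate the value backwards one state at a time.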

Crucially, policies don’t emerge by chance—they’re shaped intentionally by reward structures. Adjusting rewards even slightly can dramatically affect how policies develop. Poorly designed rewards may misguide agents, while overly intricate policies risk instability. Achieving a balanced interaction between rewards and policies is fundamental to building effective, adaptive systems across diverse applications.

Beyond Theory: Real-World Stakes

Reinforcement learning has transitioned from theoretical concepts to practical reality, with applications reaching far beyond gaming environments. Popular games like Go and StarCraft were just the starting point; today, reinforcement learning tackles messy real-world problems filled with unpredictable factors and noisy data.

Consider autonomous vehicles navigating city streets. Every minute, thousands of decisions are guided by policies influenced by rewards prioritizing safety and efficiency. These vehicles must anticipate pedestrians, changing weather, and shifting traffic conditions. The reinforcement learning framework behind them must be robust, adaptable, and thoroughly tested, as real-world mistakes carry severe consequences.

Similarly, healthcare is exploring reinforcement learning for personalized treatment plans, where rewards represent patient outcomes. Poorly designed rewards could result in ineffective or harmful medical interventions, emphasizing the necessity of transparency and rigorous validation.

Ultimately, reinforcement learning can directly affect human lives, so rewards and policies must be designed with care: their impact extends well beyond abstract mathematical frameworks.

Conclusion

Reinforcement learning harnesses the power of feedback, relying on rewards and policies to guide agent behavior. Rewards signal the value of actions, while policies dictate choices made by agents. When properly aligned, these components allow systems to adapt independently, making reinforcement learning exceptionally practical across diverse fields like gaming, robotics, and autonomous systems. As we continue refining these elements, we must thoughtfully consider not just the capabilities we’re creating but also the ethical implications of teaching machines through consequences.
