Neural networks might seem complex, but they are essentially computational models loosely inspired by how the human brain processes information. At their core, they are designed to recognize patterns, make decisions, and learn from examples. Today, they power many smart systems we use daily, from voice assistants to medical imaging devices.

Their impact stems from the interplay of structure and function, creating robust learning systems. While the terminology may appear technical, the basic concept is simple: connect artificial neurons in layers and allow them to learn through data exposure and feedback over time.

The Building Blocks of Neural Networks

A neural network comprises units called neurons, organized into layers. It starts with an input layer that accepts data, such as images, numbers, or text. This data passes through hidden layers, where most computations occur. Each neuron in a hidden layer computes a weighted sum of its inputs, adds a bias term, and applies an activation function to the result, which determines the signal it passes forward.
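As a minimal sketch of what a single neuron computes, the snippet below uses a sigmoid activation. The function names, input values, weights, and bias are illustrative assumptions, not taken from any particular library or model.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

# Hypothetical values chosen only for illustration.
output = neuron(inputs=[0.5, -1.0, 2.0], weights=[0.4, 0.3, -0.2], bias=0.1)
print(output)
```

A layer is simply many such neurons reading the same inputs, each with its own weights and bias.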

The final layer, the output layer, produces predictions, such as a label for an image or a product recommendation. Weights control the strength of connections, while activation functions introduce non-linearity, enabling the network to model relationships that a purely linear model cannot. Without non-linear activations, any stack of layers would collapse into a single linear transformation, no more expressive than one layer.
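The role of the activation function can be checked with a small experiment. The sketch below, with hypothetical weight matrices `W1` and `W2`, suggests that two layers without an activation behave exactly like one combined linear layer, while inserting a ReLU between them lets the same weights compute something non-linear (here, the absolute difference of the two inputs).

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def relu(vector):
    """Non-linear activation: negative values become zero."""
    return [max(0.0, v) for v in vector]

# Hypothetical weights for a 2-input, 2-hidden, 1-output network (no biases).
W1 = [[1.0, -1.0], [-1.0, 1.0]]
W2 = [[1.0, 1.0]]
x = [2.0, 3.0]

stacked_linear = matvec(W2, matvec(W1, x))   # two layers, no activation
collapsed = matvec(matmul(W2, W1), x)        # one equivalent linear layer
with_relu = matvec(W2, relu(matvec(W1, x)))  # same weights, ReLU in between

print(stacked_linear, collapsed, with_relu)
```

The first two results always match, whatever the input, which is why depth alone adds nothing without a non-linearity.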

The structure and function of neural networks allow them to address non-linear problems that are difficult to capture with hand-written rules. Instead of following hardcoded rules, they develop internal logic through training, adjusting weights to improve accuracy over time.

Training: How Neural Networks Learn

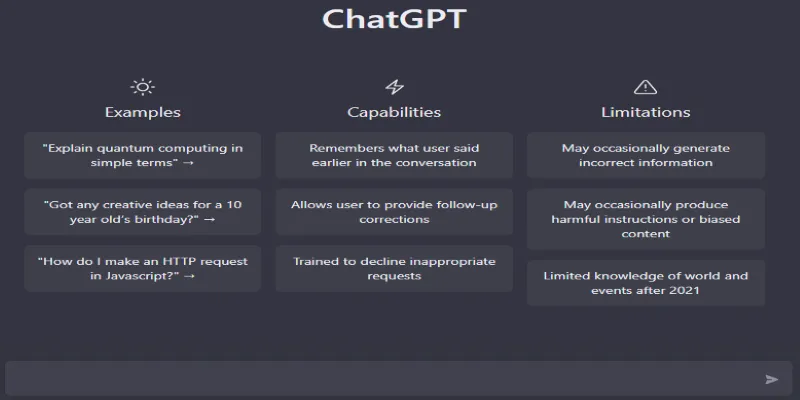

Neural networks aren’t inherently smart; they need training. Training involves feeding the network examples, measuring the error between its output and the correct answer with a loss function, and using an algorithm known as backpropagation to work out how much each weight contributed to that error. An optimizer such as gradient descent then adjusts the weights to reduce it.

This adjustment occurs over many cycles, gradually narrowing the gap between prediction and reality. Through this process, the system learns general patterns, not just specific answers, enabling it to handle new data. This distinction separates memorization from generalization.
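The training cycle described above can be sketched for a very small network. The example below is an illustration under stated assumptions, not a production recipe: it uses a hypothetical 2-2-1 network (tanh hidden units, sigmoid output), a squared-error loss, hand-picked starting weights, a learning rate of 0.5, and the XOR problem as toy data. `gradients` applies the chain rule backward from the loss to every weight, which is the core of backpropagation, and `sgd_step` moves each weight a small step against its gradient.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(p, x):
    """Return the hidden activations and the network output for one input."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(p["W1"], p["b1"])]
    output = sigmoid(sum(w * h for w, h in zip(p["W2"], hidden)) + p["b2"])
    return hidden, output

def gradients(p, x, y):
    """Backpropagation: gradient of the squared error (output - y)**2."""
    hidden, output = forward(p, x)
    # Error signal at the output neuron (derivative of loss and sigmoid).
    d_out = 2 * (output - y) * output * (1 - output)
    # Push the error signal back through W2 and the tanh derivative.
    d_hidden = [d_out * w * (1 - h * h) for w, h in zip(p["W2"], hidden)]
    return {
        "W2": [d_out * h for h in hidden],
        "b2": d_out,
        "W1": [[dh * xi for xi in x] for dh in d_hidden],
        "b1": d_hidden,
    }

def sgd_step(p, x, y, lr):
    """Move every parameter a small step against its gradient."""
    g = gradients(p, x, y)
    p["W2"] = [w - lr * gw for w, gw in zip(p["W2"], g["W2"])]
    p["b2"] -= lr * g["b2"]
    p["W1"] = [[w - lr * gw for w, gw in zip(row, grow)]
               for row, grow in zip(p["W1"], g["W1"])]
    p["b1"] = [b - lr * gb for b, gb in zip(p["b1"], g["b1"])]

def mean_loss(p, data):
    return sum((forward(p, x)[1] - y) ** 2 for x, y in data) / len(data)

# Toy data (XOR) and hand-picked starting weights, both assumptions.
xor_data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
            ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
params = {"W1": [[0.5, -0.4], [0.3, 0.8]], "b1": [0.1, -0.1],
          "W2": [0.7, -0.6], "b2": 0.05}

loss_before = mean_loss(params, xor_data)
for epoch in range(5000):
    for x, y in xor_data:
        sgd_step(params, x, y, lr=0.5)
loss_after = mean_loss(params, xor_data)
print(f"loss before: {loss_before:.4f}, after: {loss_after:.4f}")
```

Real frameworks compute these gradients automatically; writing them by hand here is only meant to show what "identifies the mistake and adjusts" amounts to.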

However, networks can overfit, performing well on the data they were trained on but struggling with new inputs. To counter this, developers employ techniques like dropout (temporarily disabling a random subset of neurons on each training step) or regularization (adding a penalty on large weights to discourage overly complex models). These strategies reduce the network’s reliance on any single neuron or weight, improving its ability to generalize.
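Both techniques are short to express in code. The sketch below assumes the common "inverted dropout" variant, which rescales the surviving activations so their expected value stays the same, and an L2 penalty as the form of regularization; the function names and values are hypothetical.

```python
import random

def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero each activation with probability drop_prob
    during training and rescale the survivors; do nothing at inference."""
    if not training or drop_prob == 0.0:
        return list(activations)
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]

def l2_penalty(weights, strength):
    """Extra loss term that grows with the squared size of the weights."""
    return strength * sum(w * w for w in weights)

rng = random.Random(0)  # seeded only to make the illustration repeatable
hidden = [0.2, 0.9, 0.5, 0.7]

train_out = dropout(hidden, drop_prob=0.5, rng=rng)                 # some zeros
eval_out = dropout(hidden, drop_prob=0.5, rng=rng, training=False)  # unchanged
penalty = l2_penalty([0.5, -1.0, 2.0], strength=0.01)

print(train_out, eval_out, penalty)
```

During training the penalty would be added to the loss, so the optimizer is nudged toward smaller weights while it reduces the prediction error.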

The key is how well the network adapts to the unknown. This is the ultimate test of its structure and function—whether it can apply its experience to new, unpredictable challenges.

Function in Real-World Applications

Neural networks operate quietly behind many technologies we use daily. They filter spam from your email, assist in autocompleting messages, and help doctors diagnose conditions through medical imaging. Their strength lies in adaptability, processing images, text, audio, and numerical data effectively.

In finance, neural networks detect fraud by identifying unusual transaction patterns that might elude human detection. In autonomous vehicles, they recognize road signs, detect pedestrians, and make driving decisions. In entertainment, they power recommendation engines, suggesting shows or music based on your habits and history.

Different types of neural networks address various needs. Convolutional neural networks (CNNs) are well suited to image recognition tasks, sliding small filters across an image to detect features like edges, shapes, and textures. Recurrent neural networks (RNNs) are suited for sequential data, such as speech or time series. Transformers, a newer architecture, have reshaped how machines understand and generate human language by using attention mechanisms to relate words across long text spans.
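To make the idea of scanning for edges concrete, here is a minimal sketch of the sliding-filter operation at the heart of a CNN layer. The tiny image and the hand-written vertical-edge kernel are assumptions for illustration; in a trained CNN the kernel values are learned rather than chosen by hand.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and take the
    sum of element-wise products at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

# A 3x4 "image": dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical filter that responds where brightness rises from left to right.
vertical_edge = [[-1, 1],
                 [-1, 1]]

feature_map = conv2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The non-zero column in the result marks where the dark-to-bright boundary sits, which is the kind of local feature later layers combine into shapes and textures.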

Despite their differences, all these systems rely on layered architecture and learning principles. Their widespread success demonstrates the effectiveness of neural networks’ structure and function.

The Future and Evolving Designs

Neural networks are rapidly advancing to become more efficient, scalable, and specialized. Early networks were shallow, with few layers. Today, deep neural networks with many layers handle much greater complexity. This evolution, known as deep learning, has unlocked powerful capabilities across various industries.

New developments are pushing boundaries by incorporating brain-inspired hardware. Neuromorphic computing mimics the human brain’s structure and operations, using specialized chips to make neural network computations faster and more energy-efficient. This innovation could allow AI systems to run on smaller devices or make large models more sustainable.

Another exciting development is spiking neural networks, which use time-based signals to better replicate how biological neurons communicate. While still in the early stages, they show potential for tasks requiring quick, low-power responses, such as real-time decision-making.
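One widely used simplified model of a spiking neuron is the leaky integrate-and-fire neuron; the article does not name a specific model, so treat this as an illustrative assumption. In the sketch below, the membrane potential accumulates input, leaks a little at every time step, and emits a spike (then resets) once it crosses a threshold. The parameter values are hypothetical.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Leaky integrate-and-fire: return a list with 1 at each time step
    where the neuron spikes and 0 elsewhere."""
    potential = reset
    spikes = []
    for current in input_current:
        # Old potential decays by the leak factor, then new input is added.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = reset  # fire and start over
        else:
            spikes.append(0)
    return spikes

spikes = simulate_lif([0.5, 0.5, 0.5, 0.0, 0.0, 1.2])
print(spikes)  # [0, 0, 1, 0, 0, 1]
```

Information is carried by when the spikes occur rather than by a continuous output value, which is what makes the approach a candidate for low-power, event-driven hardware.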

Despite these advancements, the core principles of neural networks remain unchanged. Their layered architecture and adaptive learning processes continue to underpin their success. That flexibility lets them adapt to a wide range of applications, and they will likely see even deeper integration into new fields.

Conclusion

Neural networks have transformed how machines understand and interact with the world. They combine a structured arrangement of artificial neurons with functions that enable learning and adaptation. This blend of structure and function gives them broad utility, from diagnosing diseases to optimizing social media feeds. Originating as a concept inspired by the human brain, they now form the backbone of modern AI. While designs continue to evolve, the fundamentals remain: layers that pass data, weights that adjust, and systems that learn through experience. At their best, neural networks don’t just mimic intelligence—they demonstrate it through action.
