Large language models (LLMs) like GPT-4, Gemini (formerly Bard), and Claude are increasingly being integrated into various applications. It’s evident that no single model excels in all areas. Some models are better at providing accurate answers, others excel in creative writing, and some are particularly adept at addressing moral and sensitive topics.

This diversity in model strengths has led to a more intelligent approach: LLM routing. This method dynamically assigns tasks to the most suitable language model based on the type of task, system conditions, or model performance. In this post, we will explore the concept of LLM routing, dissect key strategies, and walk through original Python implementations of these strategies.

## What is LLM Routing?

LLM Routing involves strategically directing different types of requests to the most appropriate LLM. Instead of relying on a single model for all queries, a system determines which model is best for a given task—whether factual, creative, technical, or ethical.

Routing enhances:

- Accuracy and relevance of responses

- Performance and response time

- System scalability and cost-efficiency

## LLM Routing Strategies

There are several approaches to LLM routing. Let’s examine the major ones before diving into coding.

### 1\. Static Routing (Round-Robin)

This is the simplest method, where tasks are distributed in a rotating sequence across available models. It’s easy to implement but doesn’t consider task complexity or model capabilities. This method works well when task volume is uniform and models are equally capable.

### 2\. Dynamic Routing

Routing decisions here are based on real-time conditions, such as current load or model availability. This approach helps balance workload and optimize for speed. Dynamic routing is ideal for high-traffic systems that need to maintain performance under pressure. It adapts automatically to changes in system load, helping to avoid bottlenecks.

### 3\. Model-Aware Routing

This approach uses a profile of each model’s strengths, such as creativity or accuracy, to route tasks accordingly. It offers a more intelligent and performance-driven routing solution. By aligning tasks with specialized models, this strategy improves output quality and user satisfaction. It requires model benchmarking or historical performance data to function effectively.

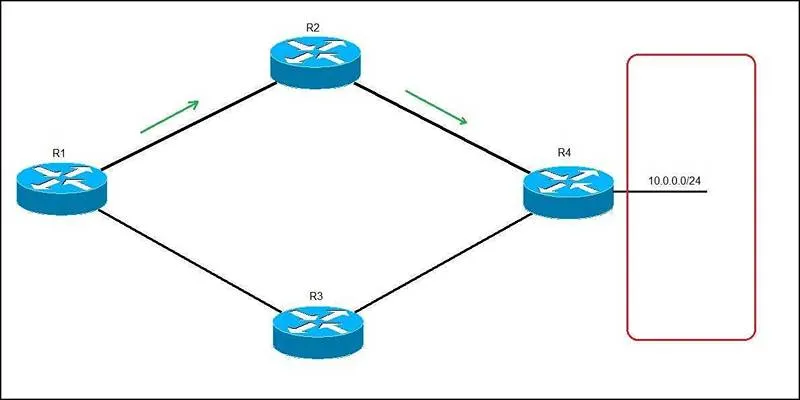

### 4\. Consistent Hashing

Often used in distributed systems, this strategy routes tasks based on a hash value, ensuring consistent routing to the same model. This approach minimizes task redistribution when models are added or removed, making it suitable for scalable environments.

### 5\. Contextual Routing

This advanced technique uses the content or metadata of the task—like topic or tone—to decide which model should handle it. It often involves NLP-based classification or tagging systems to understand the intent behind each input.

## LLM Routing Techniques

Beyond strategies, effective LLM routing relies on several key techniques to ensure accurate and efficient routing decisions.

### 1\. Task Classification

This involves identifying the nature of a request (e.g., creative, technical, factual) using keyword rules or NLP classifiers, enabling targeted model selection.

### 2\. Model Profiling

This technique involves rating models based on strengths like creativity, accuracy, and ethics to match tasks with the most suitable model.

### 3\. Latency Monitoring

This technique tracks response time and model load to support dynamic routing, so tasks are sent to the most responsive model in real time.
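As a rough illustration of latency monitoring, the sketch below keeps an exponential moving average of response time per model and picks the lowest. The `LatencyTracker` class, its `alpha` smoothing factor, and the choice to treat unmeasured models as fastest are all assumptions made for this example, not part of any particular library.

```python
class LatencyTracker:
    """Keeps an exponential moving average (EMA) of latency per model."""

    def __init__(self, alpha=0.3):
        self.alpha = alpha  # weight given to the newest sample (assumed value)
        self.ema = {}       # model name -> smoothed latency in seconds

    def record(self, model, seconds):
        previous = self.ema.get(model)
        if previous is None:
            # First sample for this model: use it as the starting average.
            self.ema[model] = seconds
        else:
            self.ema[model] = self.alpha * seconds + (1 - self.alpha) * previous

    def fastest(self, models):
        # Models with no samples yet count as 0.0 so they get tried at least once.
        return min(models, key=lambda m: self.ema.get(m, 0.0))


tracker = LatencyTracker(alpha=0.5)
tracker.record("GPT-4", 2.0)
tracker.record("GPT-4", 1.0)
tracker.record("Claude", 0.8)
print(tracker.fastest(["GPT-4", "Claude"]))
```

Smoothing keeps a single slow response from immediately pushing a model to the back of the line, at the cost of reacting a little later to real slowdowns.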

### 4\. Weighted Distribution

This technique assigns weights to models based on their performance or capacity, so task allocation stays balanced and cost-efficient.
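One way to sketch weighted distribution is to sample a model for each task with probability proportional to its weight. The `model_weights` values and the `weighted_routing` function below are hypothetical and chosen only for illustration; `random.choices` is the standard-library call doing the sampling.

```python
import random

# Hypothetical weights: a higher number means a larger share of traffic.
model_weights = {"GPT-4": 1, "Gemini": 3, "Claude": 2}

def weighted_routing(tasks, weights=model_weights, rng=random):
    models = list(weights)
    shares = [weights[m] for m in models]
    assignments = []
    for task in tasks:
        # random.choices samples with probability proportional to each weight.
        selected_model = rng.choices(models, weights=shares, k=1)[0]
        assignments.append((task, selected_model))
    return assignments

for task, model in weighted_routing(["Summarize a report", "Draft an email"]):
    print(f"Weighted routing sent task '{task}' to: {model}")
```

With these weights, roughly half of a large batch would land on Gemini, a third on Claude, and a sixth on GPT-4. Passing a seeded `random.Random` as `rng` makes runs repeatable.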

### 5\. Fallback Logic

This technique provides backup model options if the primary model fails, improving reliability and maintaining service quality.
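Fallback logic can be sketched as an ordered chain of models that is tried until one succeeds. Both `call_with_fallback` and the mock `flaky_call` below are hypothetical names invented for this example; a real system would replace the mock with its actual model client and catch narrower exception types.

```python
def call_with_fallback(task, model_chain, call_model):
    """Try each model in order and return (model, response) from the first success."""
    errors = []
    for model in model_chain:
        try:
            return model, call_model(model, task)
        except Exception as exc:  # broad on purpose for this sketch
            errors.append(f"{model}: {exc}")
    raise RuntimeError("All models failed: " + "; ".join(errors))

def flaky_call(model, task):
    # Mock client: pretend the primary model is timing out.
    if model == "GPT-4":
        raise TimeoutError("timed out")
    return f"{model} handled '{task}'"

model, response = call_with_fallback("Summarize a report", ["GPT-4", "Claude"], flaky_call)
print(f"Served by {model}: {response}")
```

Collecting the error from each failed attempt keeps the final exception informative when every model in the chain is unavailable.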

## Python Implementation Examples (Original & Unique)

Let’s explore how to implement each strategy in Python using mock functions for simplicity. All code here is original and crafted specifically for this post.

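### 1\. Static Routing (Round-Robin)

```python
# List of model names shared by all the examples in this post.
language_models = ["GPT-4", "Gemini", "Claude"]

def static_round_robin(tasks):
    index = 0
    total_models = len(language_models)
    for task in tasks:
        # Cycle through the models in a fixed order.
        current_model = language_models[index % total_models]
        print(f"Task: '{task}' is assigned to: {current_model}")
        index += 1
```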

### 2\. Dynamic Routing (Simulated with Randomness)

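```python
import random

def dynamic_routing(tasks):
    # Assumes language_models, the list of model names shared across these examples.
    for task in tasks:
        # A random choice stands in for a real load or latency signal.
        selected_model = random.choice(language_models)
        print(f"Dynamically routed task '{task}' to: {selected_model}")
```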

In a real-world setting, you'd base the choice on metrics like response time, queue length, etc.
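To make that concrete, here is a minimal sketch that routes each task to the model with the shortest queue. The `queue_lengths` snapshot and the `least_loaded_routing` function are hypothetical; in practice the numbers would come from whatever monitoring the system already has.

```python
# Hypothetical snapshot of pending requests per model.
queue_lengths = {"GPT-4": 4, "Gemini": 1, "Claude": 2}

def least_loaded_routing(tasks, queues):
    assignments = []
    for task in tasks:
        # Pick the model with the shortest queue, then count the new task against it.
        selected_model = min(queues, key=queues.get)
        queues[selected_model] += 1
        assignments.append((task, selected_model))
    return assignments

for task, model in least_loaded_routing(["Task A", "Task B", "Task C"], dict(queue_lengths)):
    print(f"Dynamically routed task '{task}' to: {model}")
```

Incrementing the chosen queue after each assignment keeps a burst of tasks from piling onto the model that happened to be idle at the start.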

### 3\. Model-Aware Routing (Based on Strengths)

```python
# Mock scores per model; real values would come from benchmarks or historical data.
model_capabilities = {
    "GPT-4": {"creativity": 90, "accuracy": 85, "ethics": 80},
    "Gemini": {"creativity": 70, "accuracy": 95, "ethics": 75},
    "Claude": {"creativity": 80, "accuracy": 80, "ethics": 95},
}

def model_aware_routing(tasks, focus_area):
    for task in tasks:
        # Choose the model with the highest score in the requested focus area.
        best_model = max(model_capabilities, key=lambda m: model_capabilities[m][focus_area])
        print(f"Task: '{task}' is routed to: {best_model} based on {focus_area}")
```

### 4\. Consistent Hashing

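```python
import hashlib

def consistent_hash(text, total_models):
    # MD5 serves here only as a stable, well-distributed hash, not for security.
    hash_value = hashlib.md5(text.encode()).hexdigest()
    numeric = int(hash_value, 16)
    # Note: modulo mapping is deterministic, but changing total_models remaps
    # most tasks; a hash ring is needed to keep redistribution minimal.
    return numeric % total_models

def consistent_hash_routing(tasks):
    # Assumes language_models, the list of model names shared across these examples.
    for task in tasks:
        idx = consistent_hash(task, len(language_models))
        selected_model = language_models[idx]
        print(f"Consistently routed task '{task}' to: {selected_model}")
```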
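The function above maps a hash to a model with a plain modulo, which always sends the same task to the same model but reshuffles most tasks when the number of models changes. A hash ring is the usual way to get the minimal-redistribution property described earlier. The `HashRing` class below is a minimal sketch written for this post; the class name, the `replicas` count of virtual points, and the `model#i` key format are assumptions, not a reference implementation.

```python
import bisect
import hashlib

def _hash(key):
    # Stable integer hash of a string.
    return int(hashlib.md5(key.encode()).hexdigest(), 16)

class HashRing:
    def __init__(self, models, replicas=100):
        # Each model gets several virtual points on the ring to even out the spread.
        self._ring = sorted(
            (_hash(f"{model}#{i}"), model)
            for model in models
            for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    def route(self, task):
        # Find the first point at or after the task's hash, wrapping past the end.
        idx = bisect.bisect_left(self._points, _hash(task)) % len(self._ring)
        return self._ring[idx][1]

ring = HashRing(["GPT-4", "Gemini", "Claude"])
for task in ["Translate this text", "Summarize a report"]:
    print(f"Ring routed task '{task}' to: {ring.route(task)}")
```

When a model is added to the ring, the only tasks that change owner are the ones that move to the new model; every other task keeps its previous assignment.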

### 5\. Contextual Routing (Based on Task Type)

```python
model_roles = {
    "GPT-4": "technical",
    "Claude": "creative",
    "Gemini": "informative",
}

def classify_task(task):
    # Lowercase first so that "Write" and "How" also match the keyword rules.
    text = task.lower()
    if "write" in text or "story" in text:
        return "creative"
    elif "how" in text or "explain" in text:
        return "technical"
    else:
        return "informative"

def contextual_routing(tasks):
    for task in tasks:
        task_type = classify_task(task)
        # Pick the first model whose role matches the task type.
        selected_model = next(
            (model for model, role in model_roles.items() if role == task_type),
            "Unknown",
        )
        print(f"Contextually routed task '{task}' to: {selected_model} ({task_type})")
```

## Strategy Comparison Table

Strategy | Task Matching | Adaptability | Complexity
---|---|---|---
Static (Round-Robin) | No | No | Low
Dynamic Routing | No | Yes | Medium
Model-Aware Routing | Yes | No | Medium
Consistent Hashing | No | No | Medium
Contextual Routing | Yes | Yes | High

## Conclusion

As AI applications expand in scope and complexity, LLM routing is becoming a necessity rather than an enhancement. It allows systems to scale intelligently, handle tasks efficiently, and provide better user experiences by ensuring the right model handles the right job.

With strategies ranging from simple round-robin to sophisticated contextual routing, each backed by a Python implementation, you now have a foundation to start building multi-model LLM systems that are smarter, faster, and more reliable.
