The way machines “pay attention” has fundamentally transformed AI. In just a few years, we’ve gone from basic chatbots to advanced tools like ChatGPT and AI writing assistants. At the core of this shift are transformers and attention mechanisms. They are not magic, but ideas about how information flows through a model and which parts of the input it prioritizes.

These innovations have significantly enhanced machines’ ability to understand language and context, laying the groundwork for modern AI. If you’ve ever wondered what powers today’s most intelligent models, it all starts with these pivotal concepts.

What Makes Transformers So Different?

Before transformers, most natural language processing models read text sequentially, one word at a time, much as a person does. These were recurrent neural networks (RNNs), which carry a hidden state forward from word to word. RNNs worked, but they had two weaknesses. Each step depends on the one before it, so training could not be parallelized across a sequence. And information from early words tended to fade as the sentence went on, which made long-range connections hard to capture.
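To make the “fading” problem concrete, here is a deliberately minimal sketch: a hypothetical scalar recurrence h_t = w · h_{t-1} + x_t with a fixed weight w. Real RNNs use weight matrices and nonlinearities, so this only illustrates the trend. Feeding in a single 1 at the start and zeros afterwards shows that the first input’s contribution to the final state is w raised to the number of steps that follow it, which shrinks quickly when |w| < 1. The loop also shows why RNNs are hard to parallelize: each step needs the previous state.

```python
def linear_rnn(inputs, w):
    """Toy scalar recurrence h_t = w * h_{t-1} + x_t, starting from h = 0.

    Hypothetical simplification of an RNN: real RNNs use weight matrices
    and a nonlinearity such as tanh. Returns the final hidden state.
    """
    h = 0.0
    for x in inputs:
        # Each step needs the previous state, so steps cannot run in parallel.
        h = w * h + x
    return h


def first_token_influence(seq_len, w):
    """Contribution of the first input to the final state.

    Only the first input is 1 and the rest are 0, so the result
    equals w ** (seq_len - 1).
    """
    inputs = [1.0] + [0.0] * (seq_len - 1)
    return linear_rnn(inputs, w)


for n in (5, 20, 50):
    print(f"sequence length {n:>2}: first-token influence = {first_token_influence(n, w=0.8):.6f}")
```

With w = 0.8, the first word’s influence is already tiny by the fiftieth step, which is the intuition behind RNNs “forgetting” the start of long sentences.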

Transformers changed this. Instead of reading word by word, a transformer takes in the entire sequence at once and processes every position in parallel. This gives the model a view of the whole sentence, letting it relate words to each other directly, even when they are far apart.

This improved both speed and quality. Because the work for every position can run in parallel on modern hardware, models trained much faster, and they captured context more accurately. But the key ingredient is the attention mechanism. Without it, transformers would be just another sophisticated architecture; attention is what gives them their advantage.

Attention Mechanisms: The Real Game Changer

The attention mechanism is simple in principle but powerful in practice. Consider the sentence: “The bird that was sitting on the fence flew away.” To understand “flew,” you have to link it back to “bird,” not “fence.” In other words, you focus on some words more than others. Attention does the same thing: for each word, it scores every other word by relevance and uses those scores to decide which ones to emphasize.
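The scoring-and-emphasizing step can be sketched with a softmax, the function transformers use to turn raw relevance scores into weights that are positive and sum to 1. The scores below for how relevant each word is to “flew” are invented for illustration; a trained model would compute them from learned parameters.

```python
import math


def softmax(scores):
    """Turn raw relevance scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical relevance scores of each word with respect to "flew" (made up, not learned).
words = ["The", "bird", "that", "was", "sitting", "on", "the", "fence", "flew", "away"]
scores = [0.1, 3.0, 0.2, 0.3, 0.8, 0.1, 0.1, 1.0, 1.5, 1.2]

weights = softmax(scores)

# Show the three words that receive the most attention.
for word, w in sorted(zip(words, weights), key=lambda pair: pair[1], reverse=True)[:3]:
    print(f"{word:>8}: {w:.2f}")
```

Because the softmax exponentiates, a modest gap in scores becomes a large gap in weights, so “bird” dominates while “fence” still gets a small share.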

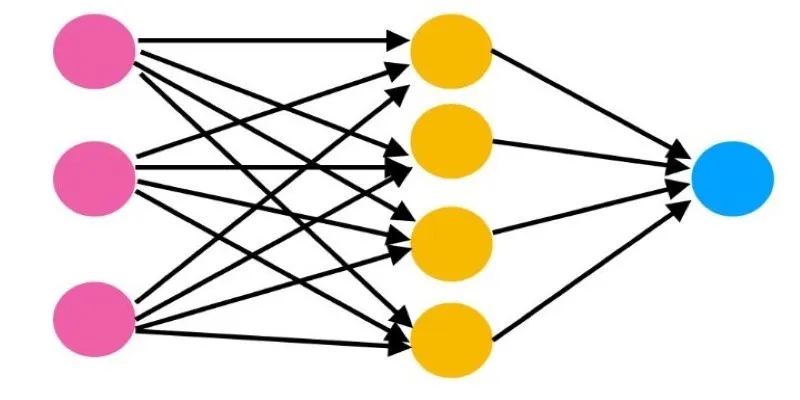

When a transformer processes a sentence, it first splits it into tokens, which may be whole words or pieces of words. For each token, it then calculates how much attention to give every token in the sequence and stores these scores in an attention matrix, with one row per token. Each row tells the model which tokens most influence the meaning of the current one.
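A toy version of the attention matrix: the sketch below splits a sentence on whitespace (a simplification; real models use learned subword tokenizers), assigns each token a hand-picked 2-d vector (hypothetical values), and fills row i with softmax-normalized dot products between token i and every token. Real transformers compute these scores from learned query and key projections, but the shape of the result is the same: an n × n matrix whose rows each sum to 1.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention_matrix(vectors):
    """Row i holds how much token i attends to every token j (each row sums to 1)."""
    return [softmax([dot(q, k) for k in vectors]) for q in vectors]


# Simplified tokenizer: split on whitespace.
tokens = "the bird flew away".split()

# Hypothetical 2-d embeddings, hand-picked for illustration only.
embeddings = {
    "the": [0.1, 0.0],
    "bird": [1.0, 0.5],
    "flew": [0.9, 0.7],
    "away": [0.2, 0.9],
}

A = attention_matrix([embeddings[t] for t in tokens])

for tok, row in zip(tokens, A):
    print(f"{tok:>5}", " ".join(f"{w:.2f}" for w in row))
```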

This is done with three vectors computed for each word: a query, a key, and a value, each produced by its own learned projection. A word’s query is compared against the keys of all the words (typically with a dot product) to measure how well they match. The stronger the match, the more of that word’s value is blended into the result. This is the essence of attention: each word becomes aware of the others, forming a network of influence across the sentence.
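The query-key-value step for a single word can be sketched as follows. It uses scaled dot-product attention, the form introduced in the original transformer paper, where each match score is divided by the square root of the vector size before the softmax. The two-dimensional query, key, and value vectors for “flew,” “bird,” and “fence” are made up for illustration; in a real model they come from learned projections of each word’s embedding.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    Returns the weighted sum of `values` and the attention weights.
    """
    d_k = len(query)
    # Match the query against every key; dividing by sqrt(d_k) keeps scores
    # from growing just because the vectors are longer.
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    weights = softmax(scores)
    # Blend the value vectors: keys that match strongly contribute more of their value.
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return output, weights


# Hypothetical vectors for "flew" attending over ["bird", "fence"].
query_flew = [1.0, 0.0]
keys = [[2.0, 0.0],    # key for "bird": points the same way as the query
        [0.0, 2.0]]    # key for "fence": orthogonal to the query
values = [[1.0, 0.0],  # value carried by "bird"
          [0.0, 1.0]]  # value carried by "fence"

output, weights = attend(query_flew, keys, values)
print("weights:", weights)  # "bird" receives the larger weight
print("output:", output)
```

Because the values here are one-hot, the output simply equals the weights, which makes it easy to see how much of each word’s value was mixed in.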

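Because every token can draw directly on every other token, attention lets models keep track of nuance and structure across long passages. Whether a passage has five words or five hundred, a word near the end can connect to one near the beginning in a single step, instead of relying on information passed along through every word in between.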

Layering, Self-Attention, and Why It Works

In a transformer, attention is applied in layers. Each layer applies attention and passes its output to the next, building up depth. Broadly, lower layers tend to pick up simpler patterns such as grammar, while higher layers tend to capture more abstract relationships such as meaning, tone, and theme.
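Stacking can be sketched by feeding each layer’s output into the next. In this minimal sketch each layer is a single self-attention step with its own randomly initialized (hypothetical, untrained) projection matrices, plus a residual connection that adds the layer’s input back to its output, as standard transformer blocks do. The feed-forward sublayers, layer normalization, and output projections of a real transformer block are left out for brevity.

```python
import math
import random


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention over the rows of X."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    out = []
    for q in Q:
        w = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K])
        out.append([sum(wi * v[j] for wi, v in zip(w, V)) for j in range(len(V[0]))])
    return out


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


def transformer_stack(X, layers):
    """Run X through each layer in order; every layer sees the previous layer's output."""
    for W_q, W_k, W_v in layers:
        attended = self_attention(X, W_q, W_k, W_v)
        # Residual connection: keep the input and add what attention contributed.
        X = [[x + a for x, a in zip(x_row, a_row)] for x_row, a_row in zip(X, attended)]
    return X


rng = random.Random(0)
d_model, n_layers, n_tokens = 4, 3, 5
layers = [tuple(random_matrix(d_model, d_model, rng) for _ in range(3)) for _ in range(n_layers)]
X = [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(n_tokens)]

Y = transformer_stack(X, layers)
print(len(Y), "tokens, each with", len(Y[0]), "features after", n_layers, "layers")
```

The number of tokens and features stays the same from layer to layer, which is what allows layers to be stacked as deeply as needed.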

The attention inside each layer is self-attention: the queries, keys, and values all come from the same sequence, so in its basic form every word attends to every word in it, including itself. It is as if each word consults all the others to work out its role in the sentence. This is how transformers capture the structure of language and the relationships within it.
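What makes it “self” attention is that queries, keys, and values are all projected from the same input. The sketch below computes the full weight matrix for a toy three-token input; the embeddings and projection matrices are hypothetical numbers chosen for illustration, not learned values. Because each token’s own key is among the keys it is compared against, the diagonal of the matrix (a token attending to itself) always receives some weight.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def self_attention_weights(X, W_q, W_k):
    """Attention weights when queries and keys both come from the same input X."""
    Q, K = matmul(X, W_q), matmul(X, W_k)
    d_k = len(K[0])
    return [softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]) for q in Q]


def self_attention(X, W_q, W_k, W_v):
    """Each output row is a weighted mix of value vectors from the same sequence."""
    weights = self_attention_weights(X, W_q, W_k)
    V = matmul(X, W_v)
    return matmul(weights, V), weights


# Three tokens with 3-d embeddings (hypothetical values).
X = [[1.0, 0.0, 1.0],
     [0.0, 1.0, 0.0],
     [1.0, 1.0, 0.0]]

# Hypothetical projection matrices; a real model learns these.
W_q = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
W_k = [[0.5, 0.0], [0.0, 1.0], [0.5, 1.0]]
W_v = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

out, weights = self_attention(X, W_q, W_k, W_v)
for i, row in enumerate(weights):
    print(f"token {i} attends:", " ".join(f"{w:.2f}" for w in row))
```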

Another crucial feature is multi-head attention. Instead of computing attention once, a transformer runs several attention “heads” in parallel, each with its own query, key, and value projections, so each can learn different relationships. One head might track subject-verb agreement while another follows what a pronoun refers to. The heads’ outputs are then combined, giving the model a richer picture of the input.
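Multi-head attention can be sketched by running several independent attention computations and concatenating their results. In this minimal sketch, each of two hypothetical heads has its own randomly initialized (untrained) query, key, and value projections that map 8 features down to 4; the heads’ outputs are concatenated back to 8 features per token and mixed by an output matrix W_o, mirroring the structure of the original transformer. Nothing here is trained, so the heads do not specialize in anything like agreement or pronoun references; that specialization would only emerge through training.

```python
import math
import random


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    weights = [softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]) for q in Q]
    return matmul(weights, V)


def multi_head_attention(X, heads, W_o):
    """heads: one (W_q, W_k, W_v) tuple per head, each projecting d_model -> d_head."""
    # Each head attends independently, with its own projections.
    head_outputs = [attention(matmul(X, W_q), matmul(X, W_k), matmul(X, W_v))
                    for W_q, W_k, W_v in heads]
    # Concatenate the heads' features for each token, then mix them with W_o.
    concat = [[x for h in head_outputs for x in h[i]] for i in range(len(X))]
    return matmul(concat, W_o)


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(42)
d_model, n_heads, n_tokens = 8, 2, 5
d_head = d_model // n_heads
heads = [tuple(random_matrix(d_model, d_head, rng) for _ in range(3)) for _ in range(n_heads)]
W_o = random_matrix(n_heads * d_head, d_model, rng)
X = [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(n_tokens)]

Y = multi_head_attention(X, heads, W_o)
print(len(Y), "tokens x", len(Y[0]), "features")
```

Splitting the feature dimension across heads keeps the total cost roughly the same as a single full-width head while letting each head look at the input differently.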

This design, stacked layers of multi-head self-attention, gives transformers their exceptional flexibility. Whether the task is translating languages, summarizing articles, or generating code, the model relies on the same mechanism to work out meaning and structure.

From BERT to GPT: How Transformers Took Over AI

Once transformers had proven themselves, models like BERT (Bidirectional Encoder Representations from Transformers) emerged. Built on the transformer encoder, BERT reads text bidirectionally: each word’s representation draws on the words both before and after it. This made it strong at tasks such as question answering, sentiment analysis, and other forms of language understanding.

Around the same time came GPT (Generative Pre-trained Transformer), which shifted the focus from understanding text to generating it. Rather than only analyzing text, GPT writes it, predicting the next word one step at a time based on all the words that came before. It is built on a decoder-style transformer, in which each word can attend only to earlier words, a structure well suited to producing coherent and creative text.
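The difference between BERT-style and GPT-style attention comes down to masking. A bidirectional encoder lets every token attend to tokens on both sides, while a decoder like GPT’s applies a causal mask so each token can attend only to itself and earlier tokens, which is what lets it learn next-word prediction without peeking ahead. The sketch below builds both weight matrices for a toy three-token input; the vectors are hypothetical, and setting masked scores to negative infinity before the softmax is one common way to implement the mask.

```python
import math


def softmax(scores):
    # In this sketch every row keeps at least its own position, so the max is finite.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]  # exp(-inf) is 0.0, so masked slots get no weight
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(Q, K, causal):
    """Scaled dot-product attention weights, optionally with a causal mask."""
    d_k = len(K[0])
    rows = []
    for i, q in enumerate(Q):
        scores = []
        for j, k in enumerate(K):
            if causal and j > i:
                scores.append(float("-inf"))  # position j is in the future for token i
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        rows.append(softmax(scores))
    return rows


# Hypothetical query/key vectors for three tokens (the same vectors serve as both).
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

bidirectional = attention_weights(vectors, vectors, causal=False)  # encoder-style (BERT)
causal = attention_weights(vectors, vectors, causal=True)          # decoder-style (GPT)

for name, matrix in (("bidirectional", bidirectional), ("causal", causal)):
    print(name)
    for row in matrix:
        print("  " + " ".join(f"{w:.2f}" for w in row))
```

In the causal matrix everything above the diagonal is zero, so the first token can only attend to itself, while every entry of the bidirectional matrix is positive.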

As the models grew, through GPT-2, GPT-3, and beyond, the power of transformers became hard to deny. Large language models trained on extensive datasets could produce essays, poems, stories, code, and more. The architecture also spread beyond text to images, speech, and other kinds of data, making transformers the Swiss Army knife of AI.

The foundation stayed the same: attention layers stacked inside transformer blocks. What changed was scale. Larger models, more training data, and more computing power dramatically improved output quality.

Today, tools like ChatGPT build on these ideas, using massive transformer models fine-tuned with human feedback to hold natural conversations. None of this progress would have been possible without attention.

Conclusion

Transformers and attention mechanisms have fundamentally transformed AI, enabling machines to understand and generate language with greater accuracy and awareness of context. Attention lets models focus on the most relevant parts of their input, and processing whole sequences in parallel makes them efficient to train at scale. This breakthrough led to large language models like GPT that can handle complex tasks. As AI continues to evolve, transformers are likely to remain central to its progress, driving innovation across many domains.
