Deep learning continues to evolve and is reshaping artificial intelligence systems across many sectors. New methods are reducing error rates, improving accuracy, and making training more efficient. Researchers are exploring new neural network architectures, some loosely inspired by the human brain. These techniques are being applied to complex tasks in language processing, computer vision, and automation, and industries are adopting them to raise productivity, improve efficiency, and drive innovation.

Advanced neural networks can now make fast, reliable decisions in real time. Better generalization means stronger performance on inputs the model never saw during training. Rapid progress is pushing the field toward new levels of accuracy and efficiency, and these emerging techniques mark a new era in artificial intelligence.

The Evolution of Deep Learning Frameworks

Deep learning began with simple neural networks of limited capability and scope. Over time, frameworks evolved to support deeper networks and larger datasets. Frameworks such as TensorFlow and PyTorch changed how models are built and trained, enabling faster experimentation and iteration. More powerful GPUs have accelerated the training of deep networks, in many cases cutting training time from weeks to hours. Reusable modules, such as prebuilt layers, optimizers, and loss functions, streamline coding. By automating complex, error-prone tasks such as gradient computation, modern libraries let developers concentrate on model logic.
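One of the main jobs a framework like PyTorch or TensorFlow automates is computing gradients through reverse-mode automatic differentiation. The sketch below is a hypothetical, scalar-only toy (the `Value` class is our own illustration, not any library's API) that records each operation as it runs and then applies the chain rule backward through the recorded graph. Real frameworks do the same on tensors, with GPU kernels and many more operations.

```python
import math


class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = None  # pushes self.grad to the parents (chain rule)

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def backward():
            # d(a + b)/da = d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = backward
        return out

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,))

        def backward():
            # d tanh(a)/da = 1 - tanh(a)^2
            self.grad += (1 - t * t) * out.grad

        out._backward = backward
        return out

    def backward(self):
        # Topologically sort the graph, then apply the chain rule in reverse.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward is not None:
                node._backward()


# A single neuron: y = tanh(w * x + b)
x, w, b = Value(2.0), Value(0.5), Value(-1.0)
y = (w * x + b).tanh()
y.backward()
print(y.data, w.grad, x.grad, b.grad)  # 0.0 2.0 0.5 1.0
```

The developer only writes the forward computation; the gradients of every input fall out of `backward()`, which is the convenience that lets modern libraries keep the focus on model logic.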

Integration with cloud systems allows training to scale across multiple machines. Collaborative platforms make it easy for research teams to share pretrained models. Interchange formats such as ONNX (Open Neural Network Exchange) allow models built in one framework to be run in another. Better tools lower the barrier to entry for new developers, and more mature software means fewer bugs and performance problems in training pipelines. Together, these developments laid the foundation for current methods, and robust frameworks remain essential for advanced AI research and real-world applications.

Transformers and Attention-Based Architectures

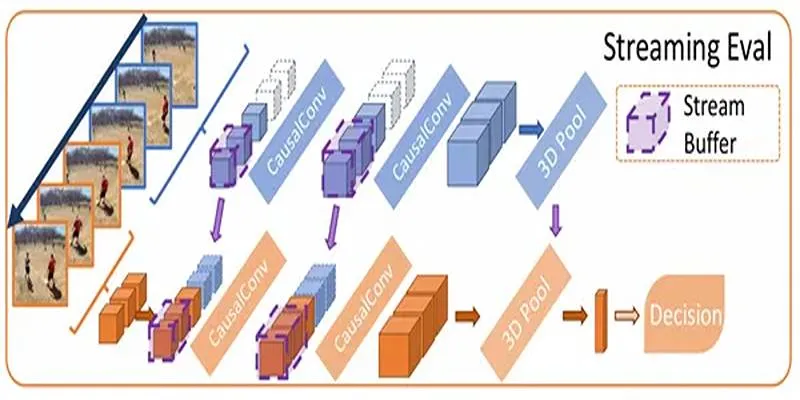

Transformers have changed how machines process sequences such as text, speech, and video. Attention mechanisms let a model weigh the most relevant parts of its input, and transformers often outperform traditional recurrent models. Because a transformer processes all positions of a sequence at once rather than one step at a time, it makes much better use of parallel hardware. Self-attention layers capture long-range dependencies effectively, helping models track context in applications such as translation. Models like GPT and BERT showcase the power of this architecture class, and pretrained transformers can be fine-tuned on small datasets for new tasks.
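Self-attention itself fits in a few lines. The example below is a minimal, single-head version of scaled dot-product attention in plain Python, assuming no masking, no multi-head split, and hand-picked projection matrices; the function names are hypothetical. Each query scores every key in the same pass, which is what lets transformers avoid step-by-step processing.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply an (n x k) list-of-lists matrix by a (k x m) one."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: n token vectors (n x d_model); w_q, w_k, w_v: projections (d_model x d_k).
    Returns the n output vectors and the n x n attention weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_q[0])
    weights = []
    for qi in q:
        # Score this query against every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        weights.append(softmax(scores))
    # Each output is an attention-weighted average of the value vectors.
    out = [[sum(wt * vj[c] for wt, vj in zip(row, v)) for c in range(len(v[0]))]
           for row in weights]
    return out, weights


identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, attn = self_attention(tokens, identity, identity, identity)
for row in attn:
    print([round(p, 3) for p in row])  # each row sums to 1
```

Each row of `attn` is an attention map for one token: the distribution over positions that the token draws information from.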

Applications include chatbots, text summarization, and image captioning. Because transformers parallelize well across GPUs, training time drops significantly, and pretrained models adapt to new tasks with minimal retraining. Attention maps offer some insight into which inputs a model weighs most heavily, although they provide only a partial explanation of its decisions. The success of transformers has also inspired hybrid architectures that incorporate convolutional networks. Companies use these techniques in customer service bots, voice assistants, and search engines, and transformers remain central to new developments in machine learning and AI.

Self-Supervised Learning and Reduced Data Dependency

Labeling data at large scale is time-consuming and costly. Self-supervised learning addresses this by learning structure and patterns directly from unlabeled data. Pretext tasks such as image inpainting (filling in masked regions of an image) or predicting missing words in a sentence supply the training signal. Once pretrained, a model can be fine-tuned with a small number of labeled examples, which speeds expansion into new domains and saves significant time during implementation. Contrastive learning improves representation quality with a similarity-based objective: embeddings of two views of the same example are pulled together, while embeddings of different examples are pushed apart.
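The contrastive objective can be illustrated with a simplified InfoNCE-style loss, the family of objectives used by methods such as SimCLR. This sketch assumes each example has already been encoded into two embedding "views" and treats the other examples in the batch as negatives; it computes only one direction of the loss and uses plain Python lists instead of tensors. The function names are illustrative.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)


def info_nce_loss(anchors, positives, temperature=0.1):
    """Simplified InfoNCE-style contrastive loss.

    anchors[i] and positives[i] embed two augmented views of the same unlabeled
    example; every positives[j] with j != i serves as a negative for anchors[i].
    """
    n = len(anchors)
    total = 0.0
    for i in range(n):
        logits = [cosine(anchors[i], positives[j]) / temperature for j in range(n)]
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        # Cross-entropy where the matching view (index i) is the "correct class".
        total += log_denominator - logits[i]
    return total / n


anchors = [[1.0, 0.0], [0.0, 1.0]]
matched = [[0.9, 0.1], [0.1, 0.9]]     # each view resembles its own anchor
mismatched = [[0.1, 0.9], [0.9, 0.1]]  # views swapped between examples
print(info_nce_loss(anchors, matched) < info_nce_loss(anchors, mismatched))  # True
```

Minimizing this loss needs no labels at all: the only supervision is knowing which two views came from the same example.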

Self-supervised learning loosely resembles the way humans learn by observation, and it helps machines adapt to new environments. The approach is particularly useful in robotics, medical imaging, and natural language processing. Less reliance on labeled data means faster iteration and deployment, which speeds up product development and can improve accuracy where labeled data is scarce. Developers can reuse pretrained models across projects with minimal modification. Self-supervision can also reduce exposure to errors and biases introduced during manual labeling, although models can still absorb biases present in the raw data. These techniques open deep learning to small teams and startups that lack large labeled datasets, since useful models can now be trained largely from raw data.

Sparse Models and Efficient Computation

Sparse models aim to reduce computation and memory use without sacrificing accuracy by activating only the parts of the network needed for a given input, which makes them well suited to low-power and edge devices. Pruning removes unnecessary weights, typically during or after training, producing smaller models that can run faster at inference. Quantization further shrinks models by storing weights at lower numerical precision, for example 8-bit integers instead of 32-bit floating-point numbers. These methods make it practical to run AI on embedded and mobile systems. Mixture-of-experts models route each input to only a few expert subnetworks, speeding up inference while maintaining accuracy.
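Pruning and quantization are easy to demonstrate on a small weight vector. The sketch below assumes unstructured magnitude pruning (zeroing the smallest weights) and symmetric per-tensor 8-bit quantization; real toolkits offer many variants, such as structured pruning, per-channel scales, and calibration, and the helper names here are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.8, -0.05, 0.3, -0.6, 0.01, 0.12, -0.9, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)  # [0.8, 0.0, 0.3, -0.6, 0.0, 0.0, -0.9, 0.0]

q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
print(max(abs(a - b) for a, b in zip(pruned, restored)) <= scale / 2)  # True
```

Because every weight is rounded to the nearest multiple of `scale`, the error per weight is at most half a step, while each stored value takes 8 bits instead of 32.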

Sparse attention patterns in transformers also reduce the memory needed to process long sequences, which benefits edge computing. Lower overhead lets devices such as drones and phones process data in real time. Because fewer parameters take part in each prediction, sparse models can also be easier to inspect. Efficiency gains help reduce AI's energy use and environmental impact, in line with sustainability goals. Lower costs and better scalability are driving industry adoption and may extend AI access beyond cloud systems.
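One common sparse pattern is sliding-window (local) attention, in which each position attends only to neighbors within a fixed window. The sketch below, assuming a window of 64 positions on each side, counts how many query-key scores must be computed and stored compared with dense attention. It illustrates the cost only, not a full attention implementation, and the function names are hypothetical.

```python
def local_attention_keys(i, n, window):
    """Key positions that query i may attend to under a sliding window."""
    return range(max(0, i - window), min(n, i + window + 1))


def score_count(n, window=None):
    """Number of query-key scores needed for a sequence of length n."""
    if window is None:
        return n * n  # dense attention: every query scores every key
    return sum(len(local_attention_keys(i, n, window)) for i in range(n))


for n in (1024, 4096):
    print(n, score_count(n), score_count(n, window=64))
# 1024 1048576 127936
# 4096 16777216 524224
```

With a fixed window, the number of scores grows linearly with sequence length instead of quadratically, which is where the memory savings come from.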

Real-World Applications and Industry Integration

New deep learning techniques are not just theoretical; their practical applications are expanding rapidly. In healthcare, AI helps interpret medical scans, in some tasks approaching expert-level accuracy. In finance, models detect fraud and optimize trading decisions. In retail, neural networks power demand forecasting and personalization. Automotive companies deploy AI vision models in self-driving systems, and manufacturers use deep learning for defect detection.

Robotics systems use real-time feedback loops for better motion control. Content creation tools use AI to speed up editing and media generation. Customer service relies on chatbots powered by attention-based networks. Law enforcement uses image recognition in public safety systems, and in the energy sector AI helps monitor grids and predict maintenance needs. These examples show the significant impact of deploying deep learning. Faster, smaller models enable edge deployment where cloud access is limited. AI is now used in virtually every modern business sector, bridging the gap between theory and practical success.

Conclusion

As new deep learning methods improve performance and adaptability, AI development is accelerating. Breakthroughs in transformers, sparse modeling, and self-supervised learning drive this progress, and fast, accurate models help industries adapt to changing demands. Advanced neural networks are already applied in sectors such as manufacturing, finance, and healthcare, while improvements in training are reducing deployment time and cost. Future AI systems are likely to be smarter, leaner, and more adaptable. Continuous refinement and responsible application will drive the next wave of AI development.
