Over the past few years, language models have evolved to become larger, more complex, and increasingly capable. However, this advancement often comes with rising computational costs and limited accessibility. To address these challenges, researchers have turned to innovative architectures, such as Mixture-of-Experts (MoE) models. One such model gaining significant attention is OLMoE, an open-source language model designed with a flexible expert-based framework to enhance efficiency without compromising performance.

The name OLMoE combines OLMo, the Allen Institute for AI's family of open language models, with MoE: it is an open Mixture-of-Experts language model. It builds on the core principles of MoE to deliver a language model that is both capable and scalable. What sets OLMoE apart is its open-source nature, which makes it available to the broader AI community for research, experimentation, and real-world application.

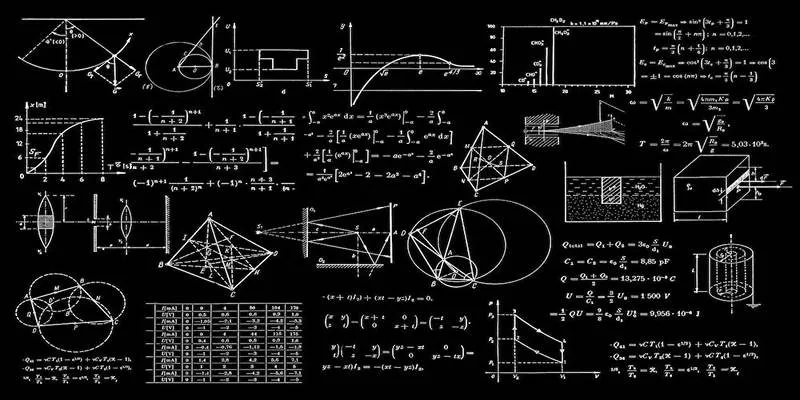

What Is a Mixture-of-Experts Model?

A Mixture-of-Experts model is a type of neural network that contains multiple smaller sub-networks, called experts, each of which processes only part of the input. Instead of sending every input through one massive network, an MoE model dynamically routes each input token to the subset of experts best suited to it.

This design brings a new level of efficiency and specialization. Only a few experts are activated for each token, which sharply reduces the computation needed per token while letting different experts specialize in different kinds of input.
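To make the routing idea concrete, here is a minimal sketch of a top-k gate in plain Python. It assumes a common design in which a router produces one score (logit) per expert, applies a softmax, keeps the k highest-scoring experts, and renormalizes their weights to sum to 1. The function name `top_k_gate` and the example logits are illustrative, not taken from OLMoE's code.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax over one token's router logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_gate(logits: list[float], k: int) -> list[tuple[int, float]]:
    """Return (expert_index, weight) pairs for the k most probable experts.

    Hypothetical helper: the selected weights are renormalized to sum to 1,
    a detail that varies between real MoE implementations.
    """
    probs = softmax(logits)
    # Rank expert indices by probability, highest first, and keep the top k.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


# Router logits for one token over 4 hypothetical experts; activate 2 of them.
print(top_k_gate([0.1, 2.0, -1.0, 1.5], k=2))
```

Here experts 1 and 3 are selected, with weights of roughly 0.62 and 0.38; the other two experts do no work for this token.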

Key Benefits of MoE Architecture

- Lower Computational Cost: Only a fraction of the model is used for each token, reducing the compute required per input.

- Higher Flexibility: Experts can specialize in handling certain types of data or tasks.

- Better Scaling: Total capacity can grow by adding more experts without increasing the computation spent on each token.

- Improved Accuracy: By routing each token to the experts best suited to it, outputs tend to be more precise.

These advantages make mixture-of-experts models ideal for large-scale natural language processing (NLP) tasks, especially when efficiency is critical.
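The cost and scaling claims above come down to simple arithmetic. The sketch below assumes a layer of `n_experts` identically sized feed-forward experts with `params_per_expert` parameters each, of which `k` run for every token; the sizes are hypothetical and chosen only to show the trend.

```python
def moe_layer_params(n_experts: int, k: int, params_per_expert: int) -> tuple[int, int]:
    """Return (total, active-per-token) expert parameters for one MoE layer."""
    total = n_experts * params_per_expert
    active = k * params_per_expert  # only the k routed experts run for a token
    return total, active


# Hypothetical expert size; grow the expert count while keeping k = 2 fixed.
for n in (8, 16, 32):
    total, active = moe_layer_params(n_experts=n, k=2, params_per_expert=1_000_000)
    print(f"{n:>2} experts: total={total:,} active={active:,} ({active / total:.1%})")
```

The active count stays at 2,000,000 while the total grows with the number of experts, which is why per-token compute stays flat as capacity increases. The trade-off is memory: every expert must still be stored, so memory use grows linearly with the expert count.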

What Sets OLMoE Apart?

OLMoE takes the concept of a mixture of experts and combines it with the transparency and accessibility of open-source technology. Built on the OLMo framework, it brings modularity and openness to expert-based language modeling. The primary goal behind OLMoE is to democratize access to high-performing language models by providing a structure that is efficient and easy to modify, retrain, and scale.

Notable Features of OLMoE

- Fully Open-Source: All weights, code, and configurations are freely available.

- Optimized Routing: A gating system determines which experts are activated for each token.

- Lightweight Training: Fewer active parameters per token mean lower training and inference costs.

- Customizability: Developers can create new experts or fine-tune existing ones for specific domains.

By leveraging these capabilities, developers and researchers can deploy language models that are not only faster but also adaptable to their unique needs.

How OLMoE Works

The internal architecture of OLMoE follows that of a standard transformer-based language model, except that the dense feed-forward layer in each transformer block is replaced by an MoE layer that performs dynamic routing. Each token in the input sequence passes through a router, or gating mechanism, which selects the most relevant experts to process it. In the released OLMoE-1B-7B model, each MoE layer has 64 experts and the router activates 8 of them per token, so only about 1B of the model's roughly 7B parameters are used for any given token. This significantly reduces computational overhead compared to a dense model of the same total size.
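The sketch below routes a small batch of tokens through a router with 64 experts and top-8 selection, the configuration reported for OLMoE-1B-7B. Everything else is an assumption for illustration: the router is a toy linear layer with random weights from a seeded generator, the hidden size is tiny, and the names `route` and `dispatch` are hypothetical.

```python
import random
from collections import defaultdict

N_EXPERTS = 64  # experts per MoE layer (as reported for OLMoE-1B-7B)
TOP_K = 8       # experts activated per token
HIDDEN = 16     # toy hidden size for this sketch, not OLMoE's real value

rng = random.Random(0)
# Hypothetical router: one weight vector per expert (a bias-free linear layer).
router_w = [[rng.gauss(0, 1) for _ in range(HIDDEN)] for _ in range(N_EXPERTS)]


def route(hidden: list[float]) -> list[int]:
    """Return the indices of the TOP_K experts with the highest router logits.

    Softmax is monotonic, so ranking raw logits picks the same experts
    as ranking the softmax probabilities.
    """
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in router_w]
    return sorted(range(N_EXPERTS), key=lambda e: logits[e], reverse=True)[:TOP_K]


# Five toy token representations.
tokens = [[rng.gauss(0, 1) for _ in range(HIDDEN)] for _ in range(5)]

# Group tokens by expert: this dispatch table lets each expert
# process only the tokens that were routed to it.
dispatch: dict[int, list[int]] = defaultdict(list)
for t, h in enumerate(tokens):
    for e in route(h):
        dispatch[e].append(t)

print(f"experts used by this batch: {len(dispatch)} of {N_EXPERTS}")
print(f"experts per token: {TOP_K} of {N_EXPERTS} ({TOP_K / N_EXPERTS:.1%})")
```

Each token touches only 8 of the 64 experts (12.5% of the expert computation in that layer), while the batch as a whole may spread across many more experts.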

Steps in the OLMoE Pipeline:

- Tokenization: Input is broken into manageable units (tokens).

- Routing Decision: A gating network assigns tokens to appropriate experts.

- Expert Processing: Each selected expert processes the token independently.

- Aggregation: The selected experts' outputs are combined, weighted by the router's scores, to form the token's final representation.

Because only the selected experts run for each token, the model spends compute only on the parts of the network most relevant to that token, keeping per-token cost close to that of a dense model with a similar number of active parameters.
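The four pipeline steps can be sketched end to end in plain Python. This is a toy under stated assumptions, not OLMoE's implementation: whitespace splitting stands in for a subword tokenizer, embeddings and weights are random, each expert is a single linear map rather than a feed-forward network, and all names are hypothetical.

```python
import math
import random

rng = random.Random(42)
DIM, N_EXPERTS, TOP_K = 4, 6, 2  # toy sizes chosen for readability


def rand_matrix(rows: int, cols: int) -> list[list[float]]:
    """Random weight matrix (hypothetical parameters for the sketch)."""
    return [[rng.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


embeddings: dict[str, list[float]] = {}           # filled lazily per token
router = rand_matrix(N_EXPERTS, DIM)              # one logit per expert
experts = [rand_matrix(DIM, DIM) for _ in range(N_EXPERTS)]


def tokenize(text: str) -> list[str]:
    # 1. Tokenization: whitespace split stands in for a real subword tokenizer.
    return text.lower().split()


def embed(token: str) -> list[float]:
    """Look up (or create) a random embedding for a token."""
    if token not in embeddings:
        embeddings[token] = [rng.gauss(0, 1) for _ in range(DIM)]
    return embeddings[token]


def moe_forward(x: list[float]) -> list[float]:
    # 2. Routing decision: softmax over router logits, keep the top-k experts.
    logits = matvec(router, x)
    m = max(logits)
    probs = [math.exp(z - m) for z in logits]
    total = sum(probs)
    probs = [p / total for p in probs]
    chosen = sorted(range(N_EXPERTS), key=lambda e: probs[e], reverse=True)[:TOP_K]
    norm = sum(probs[e] for e in chosen)

    # 3. Expert processing: each selected expert transforms the token on its own.
    # 4. Aggregation: sum expert outputs weighted by renormalized gate scores.
    out = [0.0] * DIM
    for e in chosen:
        y = matvec(experts[e], x)
        w = probs[e] / norm
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


for tok in tokenize("Experts share the work"):
    print(tok, [round(v, 3) for v in moe_forward(embed(tok))])
```

The printed numbers carry no meaning because the weights are random; the point is the control flow, in which only `TOP_K` of the `N_EXPERTS` expert matrices are multiplied for each token.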

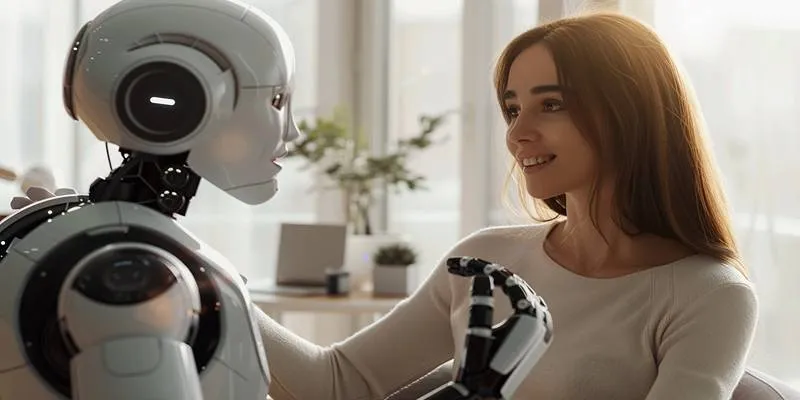

Why Open-Source Language Models Matter

Open-source AI models like OLMoE provide major benefits across both academic and industrial settings. They enable developers to experiment freely, improve transparency, and encourage community contributions.

Advantages of Open-Source AI

- Accessibility: Everyone can use and modify the model without licensing fees.

- Transparency: Users can audit and understand how the model behaves.

- Innovation: Open-source models often evolve faster thanks to community involvement.

- Ethical AI: Public access allows for responsible oversight and fair use practices.

Because OLMoE is open-source, it is more than a model: it is a collaborative platform that can grow through shared knowledge and contributions.

Real-World Applications of OLMoE

OLMoE’s flexibility makes it suitable for a wide variety of NLP tasks across industries. Whether used in a startup chatbot or a large-scale enterprise system, OLMoE delivers efficiency without compromising performance.

Common Use Cases:

- Conversational AI: OLMoE powers intelligent and responsive chatbots.

- Machine Translation: Offers fast and accurate translations for multilingual applications.

- Summarization Tools: Reduces long texts into concise and meaningful summaries.

- Coding Assistants: Helps developers generate or complete code snippets.

- Customer Sentiment Analysis: Identifies emotions and feedback in real time.

Thanks to its modular design, developers can create domain-specific experts that improve accuracy and relevance for each task.

OLMoE’s Role in the Future of AI

As demand for AI solutions grows, there is a clear need for models that are powerful yet sustainable. OLMoE addresses this gap by combining scalability, openness, and intelligence in one package. Its design also supports decentralized development, allowing communities, universities, and independent developers to build and share specialized experts for different use cases.

What the Future Holds:

- More Specialized Experts: Industry-specific expert modules will likely be developed.

- Improved Routing Systems: Smarter gates could further enhance token-to-expert assignments.

- Edge Deployment: OLMoE's low per-token compute makes it a candidate for AI on edge devices, although all of its experts must still fit in memory.

- Collaborative AI Development: Open-source contributions will continue to enrich the model.

This potential makes OLMoE not just a temporary solution but a strong building block for the future of artificial intelligence.

Conclusion

In conclusion, OLMoE stands out as a groundbreaking open-source Mixture-of-Experts language model that brings together efficiency, flexibility, and accessibility. Its expert-based structure allows for faster processing and lower resource consumption without compromising performance. Unlike dense models, OLMoE activates only the experts each token needs, making it well suited to real-world applications across industries. Its open-source nature encourages innovation, transparency, and community-driven improvements. With growing interest in scalable and ethical AI, OLMoE offers a strong foundation for future development.
