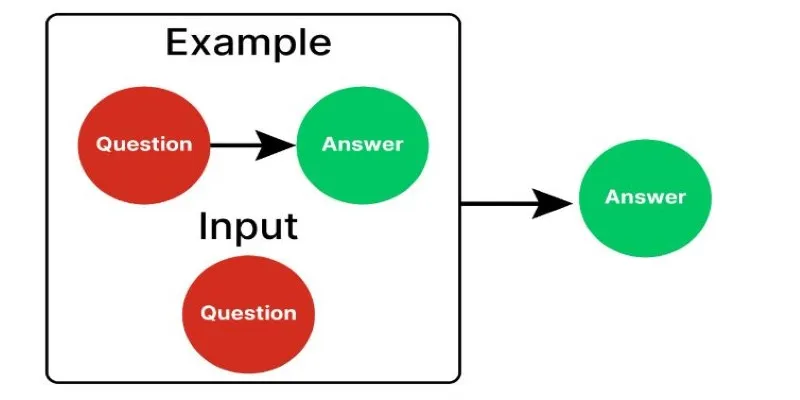

Getting an AI model to follow your lead can feel like giving vague directions to someone new in town. Sometimes, it clicks; sometimes, not so much. That’s where prompting comes in—and more specifically, One-shot Prompting. This approach gives the AI one clear example to guide its output. It’s the middle ground between zero-shot (no example) and few-shot (multiple examples) methods.

Think of it as showing one sketch to explain the whole vibe. It’s simple, fast, and often surprisingly accurate. In this article, we’ll break it all down—what it is, how it works, and why it’s becoming essential in Prompt Engineering.

Understanding the Core Idea Behind One-shot Prompting

At the heart of One-shot Prompting is the idea of clarity through example. You’re not giving the model a lecture; you’re giving it a nudge. One example, paired with the instruction or context, sets the tone for how you want the model to behave. This is useful with large language models such as GPT, which pick up on patterns in the structure of your input and continue them, without any change to the model’s weights.

Let’s say you want AI to translate English into French. In a One-shot Prompt, you might write:

- Translate this:

- English: How are you?

- French: Comment ça va?

- English: Where is the library?

- French:

At this point, the model understands that it should provide the French version of the English sentence using the structure from your single example. You haven’t had to feed it dozens of examples. Just one. But that one is doing a lot of heavy lifting. From that lone instance, the model infers the format, tone, and intent of the task and continues the pattern.
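The translation prompt above can be assembled programmatically. The following is a minimal sketch, not a prescribed implementation: the function name `build_one_shot_prompt` and its parameters are hypothetical, and it only builds the prompt string. Sending it to a model is left out because that depends on the provider you use.

```python
def build_one_shot_prompt(instruction, example_input, example_output, new_input,
                          input_label="English", output_label="French"):
    """Assemble a one-shot prompt: instruction, one worked example, then the new input.

    All names here are illustrative assumptions, not part of any library.
    """
    return (
        f"{instruction}\n\n"
        f"{input_label}: {example_input}\n"
        f"{output_label}: {example_output}\n\n"
        f"{input_label}: {new_input}\n"
        f"{output_label}:"  # left open so the model completes the pattern
    )


prompt = build_one_shot_prompt(
    "Translate this:",
    "How are you?",
    "Comment ça va?",
    "Where is the library?",
)
print(prompt)
```

Ending the prompt on the open `French:` label mirrors the example exactly, which is what lets the model continue the pattern rather than guess at the format.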

This technique is especially useful when the task you’re asking the model to do is something it’s vaguely familiar with but needs a little extra context to do right. With just one example, the AI’s gears begin to turn in the direction you want, often producing more accurate and aligned outputs.

Comparing One-shot Prompting with Other Prompting Techniques

To fully grasp the value of one-shot prompting, one needs to understand how it stacks up against its siblings—zero-shot and few-shot prompting.

Zero-shot prompting is like diving into a conversation cold. You tell the model, “Translate this” or “Write a summary,” and expect it to know what to do without any prior context or examples. It’s impressive when it works but inconsistent if the task is vague or highly specialized.

Few-shot prompting is the overachiever of the bunch. It gives multiple examples before asking the model to continue the pattern. While effective, it leads to longer prompts and higher processing costs, especially with APIs that bill by the number of tokens processed.

One-shot prompting is that balanced middle ground. You’re not overwhelming the model with examples, but you’re also not leaving it in the dark. It works especially well when:

- You want concise prompts.

- You’re working within token limits.

- You need more control over the output than a bare instruction gives you.

From a Prompt Engineering standpoint, one-shot prompting also allows for faster experimentation. You can test how different single examples shape the model’s behavior. This gives creators and developers more flexibility without needing a full dataset every time.
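To make the comparison concrete, here is a rough sketch that builds zero-shot, one-shot, and few-shot versions of the same request and compares their size. The helper `build_prompt` and the `EXAMPLES` list are hypothetical, and the word count is only a crude stand-in for tokens, since real token counts depend on the model’s tokenizer.

```python
# Hypothetical example pairs used purely for illustration.
EXAMPLES = [
    ("How are you?", "Comment ça va?"),
    ("Good morning.", "Bonjour."),
    ("Thank you very much.", "Merci beaucoup."),
]


def build_prompt(new_input, examples=()):
    """Build a prompt with zero, one, or several worked examples."""
    parts = ["Translate English to French."]
    for source, target in examples:
        parts.append(f"English: {source}\nFrench: {target}")
    parts.append(f"English: {new_input}\nFrench:")
    return "\n\n".join(parts)


query = "Where is the library?"
zero_shot = build_prompt(query)                # no example
one_shot = build_prompt(query, EXAMPLES[:1])   # exactly one example
few_shot = build_prompt(query, EXAMPLES)       # several examples

for name, text in [("zero-shot", zero_shot), ("one-shot", one_shot), ("few-shot", few_shot)]:
    # Word count is a rough proxy; actual billing uses tokenizer-specific counts.
    print(f"{name}: {len(text.split())} words")

# Quick experimentation: one prompt variant per candidate example.
one_shot_variants = [build_prompt(query, [example]) for example in EXAMPLES]
```

Swapping which single example goes into the prompt, as `one_shot_variants` does, is a cheap way to see how much the choice of example shapes the output.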

Applications and Use Cases in Real-world AI Tasks

One-shot Prompting is not just a parlor trick—it has serious use in real applications. In fact, many tasks that rely on semi-structured input benefit from this approach.

Let’s consider AI writing assistants. When building templates for emails, social media captions, or even customer replies, a single well-crafted example in the prompt can guide the model to produce consistently styled outputs. You don’t need five examples. One is often enough to convey tone, intent, and structure.

In classification tasks, such as sorting customer reviews into positive and negative, one-shot prompting can show the AI the pattern with a single labeled entry. It doesn’t always beat models fine-tuned for classification, but it works surprisingly well without retraining.
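A sketch of the review-sorting case might look like the following. The example review, the label set, and both function names are assumptions made for illustration. The parser is included because model output is free text and may come back with different casing or trailing punctuation.

```python
LABELS = ("positive", "negative")  # assumed label set for this illustration


def build_review_prompt(review):
    """One labeled review as the single example, followed by the review to classify."""
    return (
        "Classify the review as positive or negative.\n\n"
        "Review: The battery died after two days.\n"
        "Sentiment: negative\n\n"
        f"Review: {review}\n"
        "Sentiment:"
    )


def parse_label(completion):
    """Map raw model text to a known label, or None if it is not recognized."""
    words = completion.strip().lower().split()
    if not words:
        return None
    first = words[0].strip(".,!:;")
    return first if first in LABELS else None


print(build_review_prompt("Arrived early and works perfectly."))
print(parse_label(" Positive."))
```

Returning `None` for anything outside the label set keeps unexpected completions from silently being counted as a valid class.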

Chatbot builders, too, use One-shot Prompting to define behavior. Want a chatbot to act more formal or conversational? Just show it one example of how you want it to respond. This is an efficient method in rapid prototyping where quick behavior changes are necessary.
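For chat-style models, the single example is often supplied as one prior exchange in the conversation. The sketch below assumes a list of role/content dictionaries, a layout that several chat APIs use; the exact schema varies by provider, so treat the field names and the function `build_chat_messages` as assumptions to check against your provider’s documentation.

```python
def build_chat_messages(user_message):
    """Return a conversation with one example exchange that demonstrates a formal tone.

    The role/content layout is an assumed convention, not a specific provider's API.
    """
    return [
        {"role": "system", "content": "You are a customer support assistant."},
        # The one-shot example: a casual question answered in the desired formal style.
        {"role": "user", "content": "hey my order's late"},
        {
            "role": "assistant",
            "content": (
                "I apologize for the delay. Could you please share your order "
                "number so I can look into it?"
            ),
        },
        # The real message the model should answer in the same style.
        {"role": "user", "content": user_message},
    ]


messages = build_chat_messages("why was i charged twice")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Changing the chatbot’s tone then only requires replacing the example assistant reply, which suits the quick behavior changes described above.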

In the broader landscape of Prompt Engineering, One-shot Prompting helps researchers and developers explore new tasks without needing massive labeled datasets. It’s also useful in low-resource settings, where data is scarce, but outputs still need to be intelligent and contextual.

Limitations and Best Practices to Make It Work

No technique in AI is flawless, and One-shot Prompting is no exception. Its effectiveness largely hinges on the strength of the single example provided. If that example is vague, inconsistent, or poorly structured, the model’s output will likely reflect that confusion. The model relies on subtle cues in phrasing, formatting, and structure—so clarity is everything.

Another key limitation is that One-shot Prompting doesn’t teach the model something entirely new. It builds on what the model already knows. If the task falls outside what the model saw in training, the results will be inconsistent or completely off-base. This technique doesn’t replace fine-tuning or training on task-specific data. It’s a shortcut, not a substitute.

To use it effectively, keep your example as clear and specific as possible. Maintain a consistent structure between your example and the new input. Avoid ambiguity. Explain just enough to show intent without overloading the prompt. Experimentation also helps—slight changes in phrasing or punctuation can yield vastly different outputs.

Finally, if you’re deploying this technique in a live system, regularly test and monitor its performance. One-shot prompting works well for lightweight, creative, or semi-structured tasks, but it’s not ideal for high-stakes decisions or critical automation pipelines.
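One lightweight way to test a one-shot prompt is to keep a small set of inputs with known answers and rerun them whenever the prompt changes. The sketch below is only an illustration: `stub_model` is a hypothetical keyword rule standing in for a real model call, and the test cases are made up.

```python
def build_prompt(review):
    """One-shot sentiment prompt (same shape as a single labeled example plus a query)."""
    return (
        "Classify the review as positive or negative.\n\n"
        "Review: The battery died after two days.\n"
        "Sentiment: negative\n\n"
        f"Review: {review}\n"
        "Sentiment:"
    )


def stub_model(prompt):
    """Hypothetical stand-in for a real model call; applies a naive keyword rule."""
    last_review = prompt.rsplit("Review:", 1)[1].lower()
    if "love" in last_review or "great" in last_review:
        return "positive"
    return "negative"


def pass_rate(model, cases):
    """Fraction of cases where the model's answer matches the expected label."""
    hits = sum(
        1 for text, expected in cases
        if model(build_prompt(text)).strip().lower() == expected
    )
    return hits / len(cases)


# Made-up regression cases; the last one is deliberately tricky.
CASES = [
    ("I love this kettle.", "positive"),
    ("Great value for the price.", "positive"),
    ("Stopped working in a week.", "negative"),
    ("Not great, would not buy again.", "negative"),
]

rate = pass_rate(stub_model, CASES)
print(f"pass rate: {rate:.2f}")
```

With the stub, the negated "Not great" case is misclassified, which is exactly the kind of failure a recurring check like this is meant to surface before it reaches users.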

Conclusion

One-shot Prompting proves that sometimes, a single clear example is all it takes to guide AI effectively. It strikes a smart balance between zero-shot simplicity and few-shot complexity, making it ideal for quick tasks, prototyping, and lightweight applications. While it isn’t flawless and depends on the model’s existing knowledge, with the right structure and clarity, it can produce impressive results. As prompt engineering continues to evolve, mastering one-shot techniques will remain a practical and efficient skill for anyone working with AI language models.
