Meta’s Segment Anything Model (SAM) makes image segmentation easier and faster by isolating objects in images from very little input. Unlike traditional models, which must be trained on carefully labeled data for each task, SAM can segment objects it has never seen before from a simple prompt such as a click or a box. That flexibility lets it handle everything from animals to microscopic cells.

SAM’s architecture and zero-shot capabilities make it stand out in computer vision. This article explores its design, applications, and how SAM is setting a new standard for AI-driven image understanding, marking a breakthrough in visual recognition technology.

Why SAM Stands Out Compared to Traditional Models

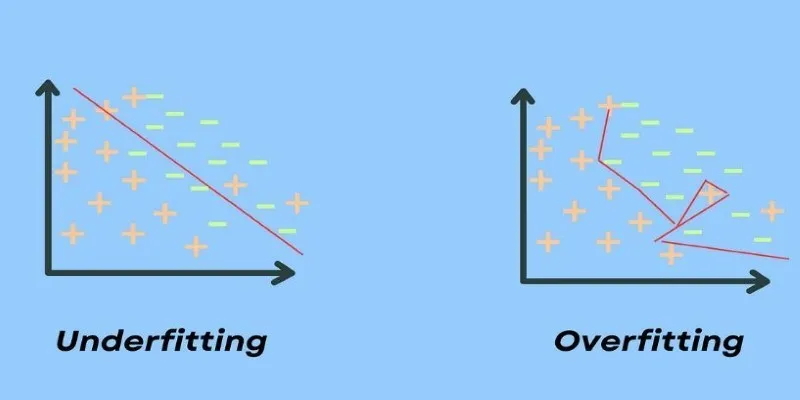

Traditional image segmentation tools typically require training on specific datasets for specific tasks. For example, if you wanted a model to identify different types of fruit, you needed to provide countless labeled images of those fruits. These models were often rigid, tightly coupled to their training data, and unable to generalize well to new tasks or object types without retraining.

SAM changes the game. What makes the Segment Anything Model so groundbreaking is its zero-shot capability—it doesn’t need to be retrained for each new segmentation task. You can give it a new image and a basic prompt (like a click or box), and it will identify what needs to be segmented. This generalization ability is similar to how large language models can write essays or answer questions without being explicitly trained for each specific one.

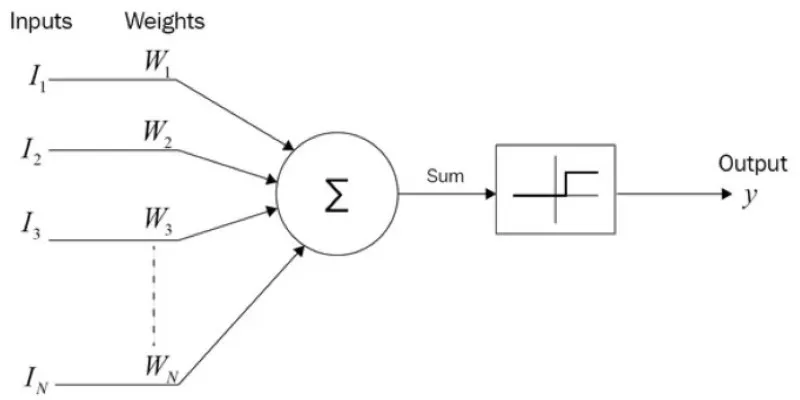

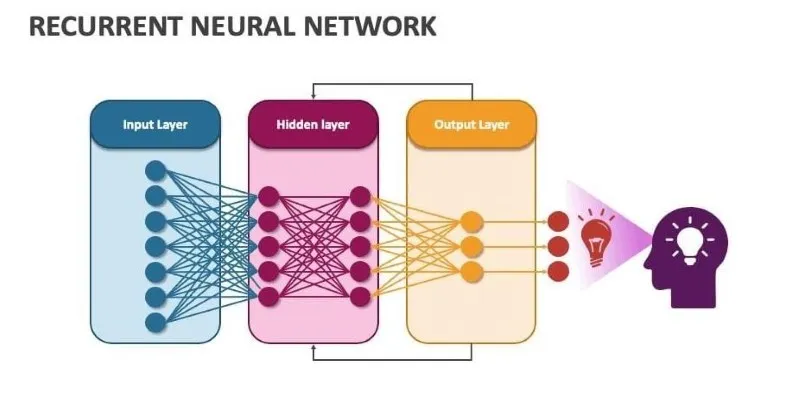

The key to this lies in SAM’s architecture. It uses a powerful image encoder to understand the entire image at a high level and then combines that with a lightweight prompt encoder. Together, these two inputs feed into a mask decoder that produces the segmented area. It’s an intelligent pipeline—first, learn the whole scene, then zoom in on where the user points, and return the result as a segmentation mask.

In technical terms, SAM separates training from use. It is trained once on a massive, diverse dataset that teaches it general segmentation behavior, and that knowledge is then applied to new inputs without further training. This flexibility is rare, and it’s a big reason why Meta’s Segment Anything Model has generated so much buzz.

Under the Hood: How SAM Works

Let’s delve into how the Segment Anything Model operates through its three main components: an image encoder, a prompt encoder, and a mask decoder.

The image encoder processes the entire image and produces an image embedding: a feature map that represents the image’s content in abstract form. This step is resource-intensive but only needs to be done once per image. The embedding is then reused for every prompt on that image, which is what keeps interactive use responsive.
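The encode-once, prompt-many pattern described above can be sketched in a few lines. This is a toy illustration, not SAM's actual code: `ToySegmenter`, its placeholder "encoder" (which simply copies pixel values), and the intensity-threshold "decoder" are all hypothetical stand-ins chosen so the example runs without any model weights.

```python
from typing import List, Optional, Tuple

Image = List[List[float]]  # grayscale image as rows of pixel intensities
Mask = List[List[bool]]


class ToySegmenter:
    """Hypothetical stand-in for a promptable segmenter (not SAM's real API)."""

    def __init__(self) -> None:
        self.encoder_calls = 0
        self._features: Optional[Image] = None

    def set_image(self, image: Image) -> None:
        """Expensive step: run once per image and cache the result."""
        self.encoder_calls += 1
        # Placeholder "encoder": a real model would compute learned features here.
        self._features = [row[:] for row in image]

    def predict(self, point: Tuple[int, int], tol: float = 0.1) -> Mask:
        """Cheap step: reuse the cached features for every new prompt."""
        if self._features is None:
            raise RuntimeError("call set_image() before predict()")
        x, y = point
        ref = self._features[y][x]
        # Toy "decoder": select pixels whose value is close to the clicked one.
        return [[abs(v - ref) <= tol for v in row] for row in self._features]


image = [
    [0.0, 0.0, 0.9, 0.9],
    [0.0, 0.0, 0.9, 0.9],
    [0.5, 0.5, 0.9, 0.9],
]
seg = ToySegmenter()
seg.set_image(image)            # encoder runs once
mask_a = seg.predict((0, 0))    # three different clicks,
mask_b = seg.predict((3, 1))    # all served from the
mask_c = seg.predict((0, 2))    # same cached features
```

After three prompts, `seg.encoder_calls` is still 1, which is the property that makes repeated prompting on one image cheap.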

The prompt encoder is next. Unlike older models that rely solely on image input, SAM accepts interactive prompts. You can click a point, draw a box, or supply a rough mask to guide the segmentation. The prompt encoder translates these instructions into a format the mask decoder can understand.
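To make the idea of a prompt concrete, here is a minimal sketch of how clicks and boxes might be represented as plain numbers before any encoding happens. The class names `PointPrompt` and `BoxPrompt` and the function `encode_prompts` are hypothetical, invented for this illustration. A real prompt encoder goes further and maps these numbers to learned embedding vectors, which this sketch does not attempt.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass(frozen=True)
class PointPrompt:
    x: int
    y: int
    is_foreground: bool = True  # False marks a region to exclude


@dataclass(frozen=True)
class BoxPrompt:
    x0: int
    y0: int
    x1: int
    y1: int


Prompt = Union[PointPrompt, BoxPrompt]


def encode_prompts(prompts: List[Prompt], width: int, height: int) -> Dict[str, list]:
    """Turn prompts into flat numeric lists, rejecting out-of-bounds points."""
    coords: List[Tuple[int, int]] = []
    labels: List[int] = []
    boxes: List[Tuple[int, int, int, int]] = []
    for p in prompts:
        if isinstance(p, PointPrompt):
            if not (0 <= p.x < width and 0 <= p.y < height):
                raise ValueError(f"point {p} lies outside the image")
            coords.append((p.x, p.y))
            labels.append(1 if p.is_foreground else 0)
        else:
            # Normalise so (x0, y0) is always the top-left corner.
            x0, x1 = sorted((p.x0, p.x1))
            y0, y1 = sorted((p.y0, p.y1))
            boxes.append((x0, y0, x1, y1))
    return {"point_coords": coords, "point_labels": labels, "boxes": boxes}


encoded = encode_prompts(
    [
        PointPrompt(120, 80),                        # "this is the object"
        PointPrompt(10, 10, is_foreground=False),    # "this is not"
        BoxPrompt(200, 150, 50, 40),                 # drawn bottom-right to top-left
    ],
    width=640,
    height=480,
)
```

Separating foreground from background clicks is what lets a user refine a result: a second click with `is_foreground=False` tells the model which region to leave out.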

Finally, the mask decoder takes the image representation and the encoded prompt and produces a segmentation mask: a binary image in which each pixel is marked as either belonging to the object of interest or not.
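Because a segmentation mask is just a grid of yes/no values, downstream code can work with it directly. The sketch below assumes a mask stored as nested lists of booleans (real pipelines typically use arrays); the helper names `mask_area`, `mask_bbox`, and `cut_out` are made up for this example.

```python
from typing import List, Optional, Tuple

Mask = List[List[bool]]
Image = List[List[int]]


def mask_area(mask: Mask) -> int:
    """Number of pixels that belong to the object."""
    return sum(cell for row in mask for cell in row)


def mask_bbox(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Tightest inclusive (x0, y0, x1, y1) box around the mask; None if empty."""
    xs = [x for row in mask for x, cell in enumerate(row) if cell]
    ys = [y for y, row in enumerate(mask) if any(row)]
    if not xs:
        return None
    return (min(xs), min(ys), max(xs), max(ys))


def cut_out(image: Image, mask: Mask, background: int = 0) -> Image:
    """Keep object pixels and replace everything else with `background`."""
    return [
        [pix if keep else background for pix, keep in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


image = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
]
mask = [
    [False, True, True, False],
    [False, True, True, False],
    [False, False, False, False],
]

area = mask_area(mask)
bbox = mask_bbox(mask)
isolated = cut_out(image, mask)
```

Here the object covers four pixels, and `cut_out` leaves only those four values in place while zeroing the rest.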

This architecture supports not just one but multiple masks. For a single prompt, it can generate several possible masks, each with a confidence score. This means you get options—and that’s valuable in both casual use and scientific analysis. For example, in medical imaging, it’s helpful to have alternate segmentations to consider, especially in ambiguous cases.
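Choosing among several candidate masks usually comes down to sorting them by score. In Meta's open-source `segment_anything` package, `SamPredictor.predict` called with `multimask_output=True` returns candidate masks alongside their scores. The snippet below does not call that package: the masks and scores are made-up values, and `rank_candidates` is a hypothetical helper that only shows the selection step.

```python
from typing import List, Sequence, Tuple

Mask = List[List[bool]]


def rank_candidates(
    masks: Sequence[Mask], scores: Sequence[float]
) -> List[Tuple[float, Mask]]:
    """Pair each mask with its score and sort from most to least confident."""
    if len(masks) != len(scores):
        raise ValueError("need exactly one score per mask")
    return sorted(zip(scores, masks), key=lambda pair: pair[0], reverse=True)


# Three invented candidates for a single click: a part, the whole object,
# and a larger group that contains it.
part = [[True, False], [False, False]]
whole = [[True, True], [False, False]]
group = [[True, True], [True, True]]

ranked = rank_candidates([part, whole, group], [0.71, 0.93, 0.88])
best_score, best_mask = ranked[0]
```

Keeping the full ranked list, instead of only the top entry, preserves the alternates for cases where a reviewer wants to compare them.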

All of this is fast, too. Once the image embedding has been computed, the prompt encoder and mask decoder are light enough to respond interactively, even in a web browser. SAM can also be integrated into pipelines for video, drone footage, or even augmented reality. The balance of speed, flexibility, and accuracy is part of what makes Meta’s Segment Anything Model a significant advancement.

Real-World Applications and Future Outlook

Meta’s Segment Anything Model (SAM) isn’t just a breakthrough in research—it’s transforming practical applications across industries. In design tools, SAM simplifies complex workflows, allowing users to isolate parts of images without relying on intricate Photoshop techniques.

In biology, SAM helps researchers segment cells, tissues, and organisms in microscope images, speeding up data analysis. Its capabilities also extend to satellite imaging, where segmenting the same area in images taken at different times can help map land use changes, deforestation, and urban growth.

Video is another promising application. Because SAM finds object boundaries quickly from a single prompt, it can be applied frame by frame. SAM itself works on individual images, so following an object over time means linking the per-frame masks with a separate tracking step or using the follow-up SAM 2, which extends promptable segmentation to video. This matters for industries like surveillance, sports analysis, and filmmaking, where extracting objects or people from footage with a few clicks becomes practical.
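One simple way to connect per-frame masks into a track is to match each object to the mask in the next frame that overlaps it most, measured by intersection over union (IoU). This is a generic greedy heuristic offered as an illustration, not a method the article attributes to SAM. The names `iou`, `link_frames`, and `rect`, and the 0.3 threshold, are assumptions made for this sketch.

```python
from typing import Dict, List, Optional, Set, Tuple

Pixels = Set[Tuple[int, int]]  # a mask stored as the set of (x, y) pixels it covers


def iou(a: Pixels, b: Pixels) -> float:
    """Intersection over union of two pixel sets (0.0 if both are empty)."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def link_frames(
    prev: Dict[str, Pixels], current: List[Pixels], min_iou: float = 0.3
) -> Dict[str, Optional[int]]:
    """For each tracked object, pick the current-frame mask that overlaps it most.

    Returns the index into `current`, or None when nothing overlaps enough.
    """
    links: Dict[str, Optional[int]] = {}
    for name, prev_mask in prev.items():
        best_idx, best_iou = None, min_iou
        for idx, mask in enumerate(current):
            overlap = iou(prev_mask, mask)
            if overlap >= best_iou:
                best_idx, best_iou = idx, overlap
        links[name] = best_idx
    return links


def rect(x0: int, y0: int, x1: int, y1: int) -> Pixels:
    """Helper for building rectangular test masks (x1 and y1 are exclusive)."""
    return {(x, y) for x in range(x0, x1) for y in range(y0, y1)}


frame1 = {"player": rect(0, 0, 4, 4), "ball": rect(10, 10, 12, 12)}
frame2 = [rect(11, 10, 13, 12), rect(1, 0, 5, 4)]  # masks arrive in arbitrary order

links = link_frames(frame1, frame2)
```

This greedy matching can assign two objects to the same mask and loses objects that move far between frames, so it suits slow-moving, well-separated objects. Crowded or fast scenes call for a more robust tracker.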

Accessibility also benefits from SAM’s simple prompts. Those with limited fine motor skills can select an object by clicking on it instead of tracing its outline, which helps make AI tools usable by a wider audience.

Looking ahead, SAM’s integration with generative models opens new creative possibilities. For example, SAM can isolate objects and pass them to a text-to-image model for editing or transformation. Its SA-1B dataset, which contains more than 1 billion masks on 11 million images and was the largest segmentation dataset at its release, also holds promise for training future models, spreading SAM’s influence across the computer vision landscape.

While SAM is groundbreaking, it does have limitations, such as struggling in cluttered scenes or unusual lighting. Additionally, it doesn’t “understand” the semantics of the objects it segments. However, these challenges are not roadblocks but rather opportunities for improvement in future iterations.

Ultimately, SAM represents a shift in AI models from rigid, task-specific systems to general, interactive models that adapt to user needs. This marks a significant evolution in how machines understand and process visual data.

Conclusion

The Segment Anything Model marks a significant advancement in AI-driven image segmentation. Meta’s technology simplifies the process, allowing users to segment images with minimal input, making it both powerful and user-friendly. With its versatility across industries like design, biology, and satellite imaging, SAM offers immense real-world potential. While still evolving, it represents a key shift in how AI models interact with users, emphasizing flexibility and accessibility, which will shape the future of image segmentation.
