The Foundation of Modern AI: The McCulloch-Pitts Neuron

In 1943, Warren McCulloch and Walter Pitts introduced the McCulloch-Pitts Neuron, a pioneering concept that laid the groundwork for the field of artificial intelligence (AI). Rather than building machines, they described the logic of neural activity mathematically. Their model introduced the revolutionary idea that basic binary units could mimic logical thought processes.

Though not biologically accurate, this model demonstrated that simple components, when arranged correctly, could perform complex logical functions. This foundational concept continues to influence neural networks today, marking a crucial step in the development of AI and deep learning.

Understanding the McCulloch-Pitts Neuron

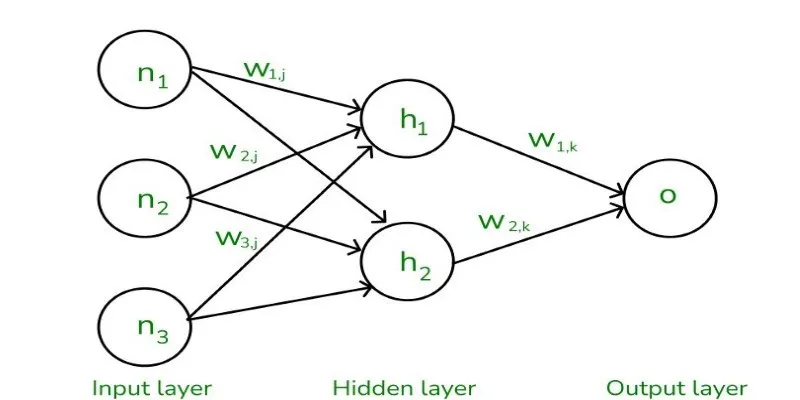

To appreciate what McCulloch and Pitts created, it’s essential to strip away the complexities modern AI has introduced over the decades: set aside learned weights, biases, smooth activation functions, and backpropagation. The McCulloch-Pitts Neuron is a binary threshold unit. Each neuron has a simple task: take several binary inputs (0s and 1s), compare their sum against a fixed threshold, and produce a binary output.

At its core, a McCulloch-Pitts Neuron does the following:

- Receives multiple inputs—each being either a 0 (inactive) or 1 (active).

- Adds these inputs together.

- If the total equals or exceeds a pre-set threshold, the neuron fires (outputs a 1); otherwise, it doesn’t (outputs a 0).
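
The three steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation; the function name `mp_neuron` and its parameters are assumptions made for this example.

```python
from typing import Sequence


def mp_neuron(inputs: Sequence[int], threshold: int) -> int:
    """Fire (return 1) if the number of active inputs reaches the threshold."""
    # Step 1: every input must be binary, either 0 (inactive) or 1 (active).
    if any(x not in (0, 1) for x in inputs):
        raise ValueError("inputs must be binary (0 or 1)")
    # Step 2: add the inputs together.
    total = sum(inputs)
    # Step 3: compare the total against the pre-set threshold.
    return 1 if total >= threshold else 0


print(mp_neuron([1, 0, 1], threshold=2))  # 1: two active inputs reach the threshold
print(mp_neuron([1, 0, 0], threshold=2))  # 0: only one input is active
```

Nothing in this unit is learned: the threshold is chosen by hand, and the choice alone determines which function the neuron computes.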

The beauty lies in the simplicity. This structure allowed McCulloch and Pitts to replicate basic logic gates like AND, OR, and NOT using artificial neurons. When arranged in layers, these neurons could perform any function that a logic circuit could represent. This model was not merely brain-inspired; it was an early digital logic model capable of mimicking computation.

Their influential paper, titled “A Logical Calculus of the Ideas Immanent in Nervous Activity,” argued that neural behavior could be understood through logical operations. That idea shaped everything from early computer design to the earliest concepts of machine learning.

Why Does This Model Still Matter?

In today’s world of GPT, convolutional networks, and reinforcement learning, you might wonder why revisiting something seemingly primitive is important. Just as every skyscraper needs a foundation, the McCulloch-Pitts Neuron serves as the foundation for neural networks.

First, it showed that complex behaviors could emerge from simple units when arranged systematically. This concept is now a staple of not only neural networks but also distributed computing, swarm intelligence, and modular robotics.

Second, the McCulloch-Pitts model played a philosophical role. It bridged the gap between neuroscience and logic, proposing that brain activity could be mathematically described in terms of cause-and-effect logic circuits. This shift was revolutionary, enabling future researchers like Marvin Minsky and John McCarthy to envision machines that could reason, solve problems, and learn.

Even in its limitations, the model taught valuable lessons. Its connections and thresholds were fixed by hand, so it had no mechanism for learning from data. This flaw prompted more flexible models, like Frank Rosenblatt’s perceptron, which introduced adjustable weights and a learning rule. Each limitation of the McCulloch-Pitts model became a stepping stone for deeper insights.

Today, it’s also used as a teaching tool. When newcomers try to understand what a neural network is, starting with this model helps them focus on the core idea: combining simple decisions to reach complex outputs. Before introducing the complications of non-linear activation functions or gradient descent, you introduce logic. You introduce McCulloch and Pitts.

Logical Operations Through a Neural Lens

One of the most impressive achievements of the McCulloch-Pitts neuron is how it reinterpreted logical operations through neural modeling. For instance, take the logical AND gate. To model this with a McCulloch-Pitts neuron, you’d feed it two binary inputs and set the threshold to 2. The neuron then fires (outputs a 1) only when both inputs are 1, which is exactly what an AND gate does.

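The same goes for OR: with the threshold lowered to 1, the neuron fires when at least one of the inputs is active. A NOT gate cannot be built from excitatory inputs alone, because an additional active input can only raise the sum and never switch the output off. The original model handles this with inhibitory inputs: any active inhibitory input prevents the neuron from firing, regardless of its other inputs. A neuron with a threshold of 0 and a single inhibitory input therefore fires only when that input is 0, which is exactly NOT. Even with such a small rule set, the model proved surprisingly adaptable.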
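
As a rough sketch, the three gates can be written as threshold units in Python. The example assumes the inhibition rule from the original 1943 paper, in which any active inhibitory input vetoes firing. The names `mp_neuron`, `gate_and`, `gate_or`, and `gate_not` are illustrative choices, not taken from the paper.

```python
from typing import Sequence


def mp_neuron(excitatory: Sequence[int], threshold: int,
              inhibitory: Sequence[int] = ()) -> int:
    """Threshold unit with absolute inhibition (assumed rule, see lead-in)."""
    # Any active inhibitory input blocks the neuron outright.
    if any(inhibitory):
        return 0
    # Otherwise fire when enough excitatory inputs are active.
    return 1 if sum(excitatory) >= threshold else 0


def gate_and(a: int, b: int) -> int:
    return mp_neuron([a, b], threshold=2)  # both inputs must be active


def gate_or(a: int, b: int) -> int:
    return mp_neuron([a, b], threshold=1)  # one active input is enough


def gate_not(a: int) -> int:
    # Threshold 0 means "fire by default"; the inhibitory input switches it off.
    return mp_neuron([], threshold=0, inhibitory=[a])


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", gate_and(a, b), "OR:", gate_or(a, b))
print("NOT 0:", gate_not(0), "NOT 1:", gate_not(1))
```

The only difference between AND and OR here is the threshold value, which is why the model is often described as threshold logic.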

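What’s even more fascinating is that by combining these basic gates, a network can compute any Boolean function of its inputs, and networks with feedback loops can hold state and behave like finite-state machines. McCulloch and Pitts further argued that such a network, given an external tape for unbounded memory, could compute whatever a Turing machine could. This made the McCulloch-Pitts model not just a metaphor for brain activity but a legitimate model of computation.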
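
To illustrate how combining units extends what they can compute, the sketch below builds XOR from two threshold units. XOR needs more than one unit, because its output must drop back to 0 when both inputs are active. The code assumes the absolute-inhibition rule of the original paper; `mp_neuron` and `xor_network` are hypothetical names for this example.

```python
from typing import Sequence


def mp_neuron(excitatory: Sequence[int], threshold: int,
              inhibitory: Sequence[int] = ()) -> int:
    """Threshold unit where any active inhibitory input blocks firing."""
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= threshold else 0


def xor_network(a: int, b: int) -> int:
    # First layer: detect the case where both inputs are active (AND).
    both = mp_neuron([a, b], threshold=2)
    # Second layer: an OR unit that the AND unit inhibits.
    return mp_neuron([a, b], threshold=1, inhibitory=[both])


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_network(a, b))
```

The same pattern, feeding one unit's output into another as an excitatory or inhibitory input, scales to larger Boolean functions.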

These logical constructs highlight the true potential of this model. It suggests that intelligence—or at least reasoning—doesn’t have to be mystical. It can be mechanized. It can be constructed. That was a radical idea in 1943, and it remains foundational today.

The Transition from McCulloch-Pitts to Modern Neural Networks

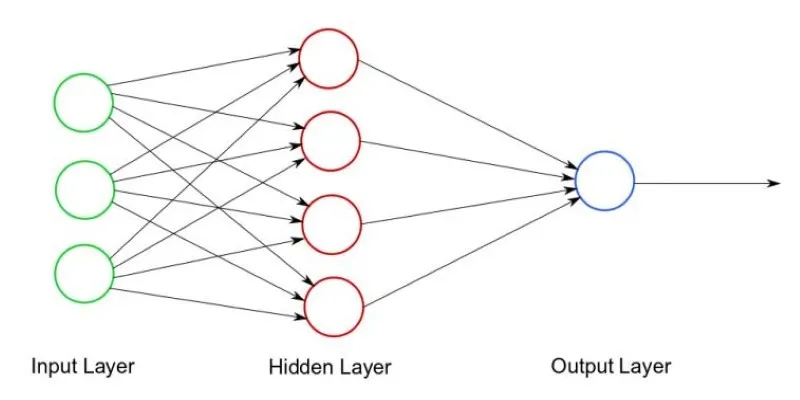

Despite its simplicity, the McCulloch-Pitts neuron laid the foundation for modern neural networks. Early models relied on basic binary inputs and outputs with simple threshold logic. This structure, though groundbreaking at the time, was limited in scope and functionality.

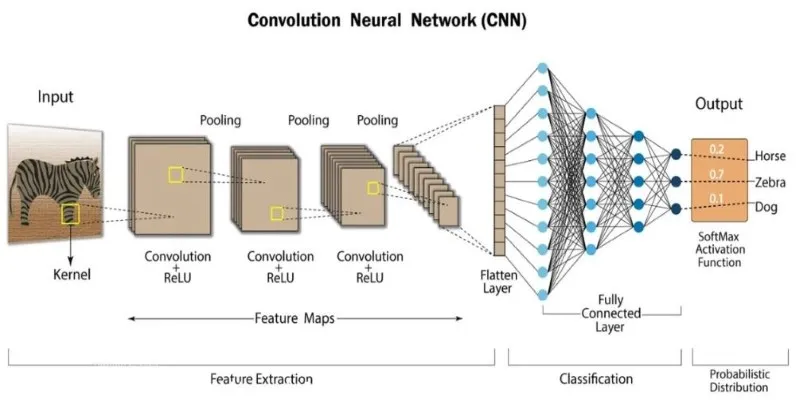

Over time, the need for more complex systems led to the introduction of new concepts, such as weighted connections and non-linear activation functions. These advancements allowed neural networks to handle more intricate patterns in data. The development of learning algorithms, notably backpropagation, enabled networks to adjust their weights based on errors, allowing them to “learn” from data—something the McCulloch-Pitts model lacked.
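
To make the idea of adjusting weights based on errors concrete, here is a minimal sketch of a single weighted threshold unit trained with the classic perceptron learning rule. This is a deliberately simpler stand-in for illustration, not backpropagation. The function names, the learning rate, and the number of epochs are all assumptions for this example.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[int], int]


def predict(weights: Sequence[float], bias: float, x: Sequence[int]) -> int:
    """Weighted threshold unit: fire when the weighted sum plus bias is >= 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total >= 0 else 0


def train_perceptron(samples: List[Sample], epochs: int = 20,
                     lr: float = 1.0) -> Tuple[List[float], float]:
    """Perceptron rule: nudge weights in proportion to the prediction error."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Learn OR from examples instead of hand-picking a threshold.
or_samples: List[Sample] = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train_perceptron(or_samples)
print([predict(weights, bias, x) for x, _ in or_samples])
```

Unlike a McCulloch-Pitts unit, nothing here is set by hand: the weights and bias start at zero and are shaped entirely by the training examples.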

Today’s deep learning models, which can have millions or even billions of parameters, perform sophisticated tasks like image recognition and natural language processing. Yet, despite these advancements, the core idea of using simple units to process information remains rooted in the McCulloch-Pitts neuron, highlighting its enduring influence on AI development.

Conclusion

The McCulloch-Pitts Neuron, though simple, laid the foundation for modern artificial intelligence and neural networks. By demonstrating that logical operations could be modeled using binary inputs and thresholds, it sparked further research and innovations in computation. While its limitations became apparent over time, the model’s ability to represent complex functions through simple units paved the way for the development of more sophisticated learning algorithms, marking a pivotal moment in the evolution of AI.
