Neural networks drive much of today’s artificial intelligence, from voice assistants to self-driving cars. But how do these systems learn and improve over time? The secret lies in backpropagation, a technique that enables AI to refine its predictions by learning from mistakes. Think of it like teaching a child to recognize animals: initially, they might get things wrong, but with correction, they improve.

Backpropagation works in a similar way: it adjusts a neural network’s internal settings, its weights, so that its answers move closer to the right ones. Without it, modern AI would look very different. Let’s look at how the process actually works.

The Core Idea Behind Backpropagation

Backpropagation is the algorithm neural networks use to work out how each internal setting contributed to an error. When a neural network makes a prediction, that prediction is compared with the correct answer. Backpropagation then calculates how the network’s internal settings (its weights) should change to reduce the mistake, and an optimizer such as gradient descent applies those changes so that the next prediction is better. The name “backpropagation” comes from the way errors are propagated backward through the network, from the output layer toward the input layer.

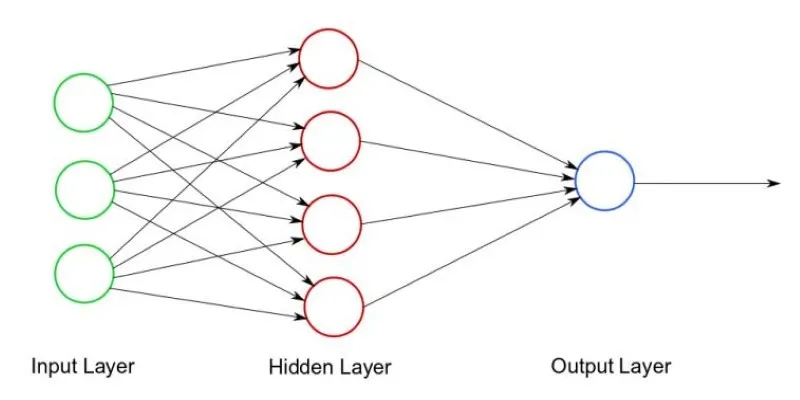

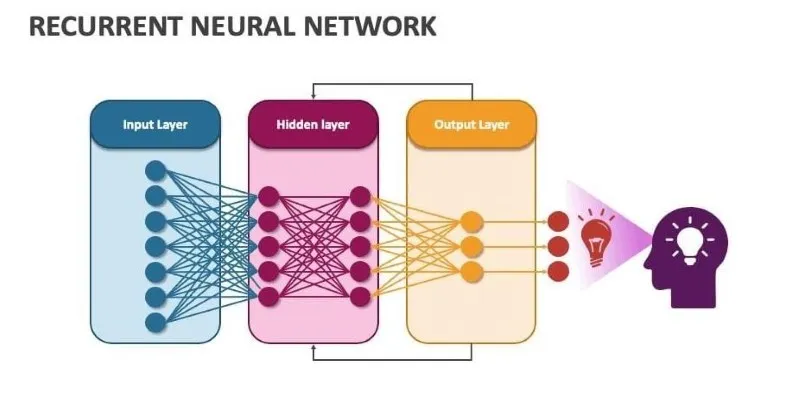

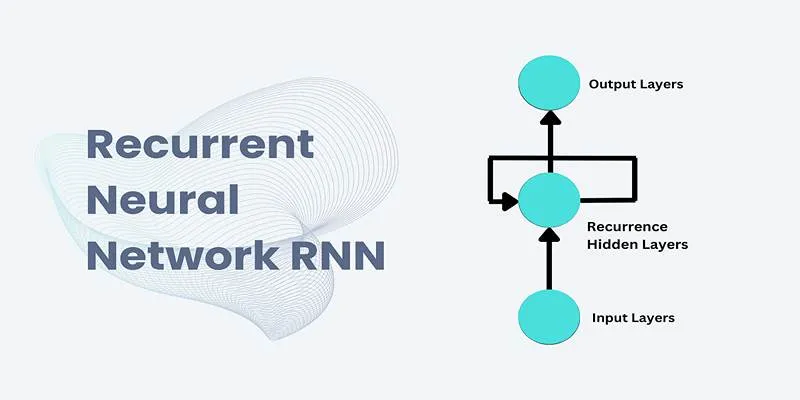

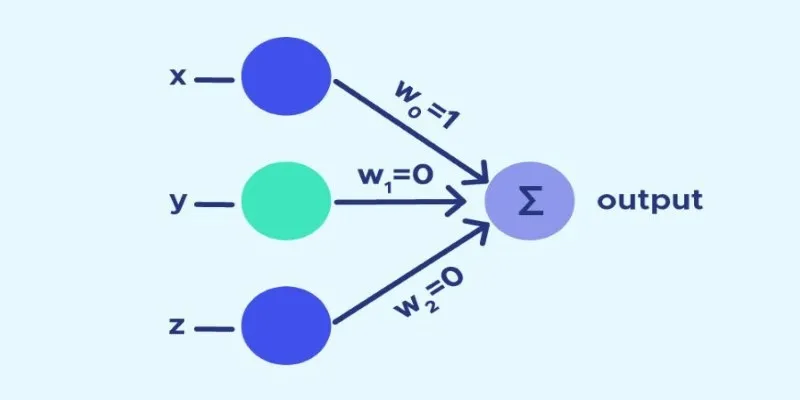

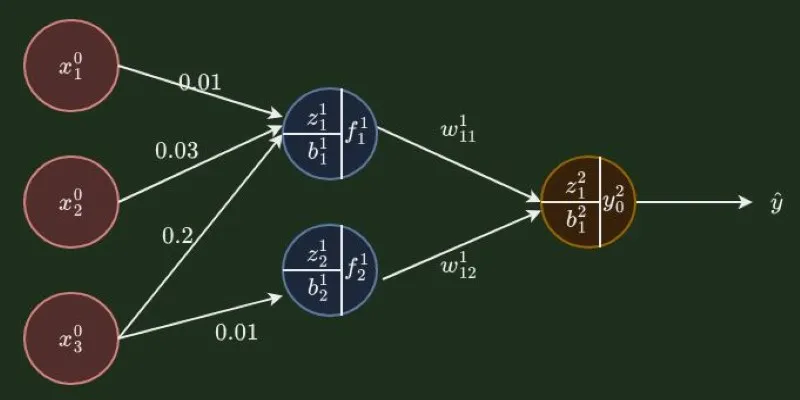

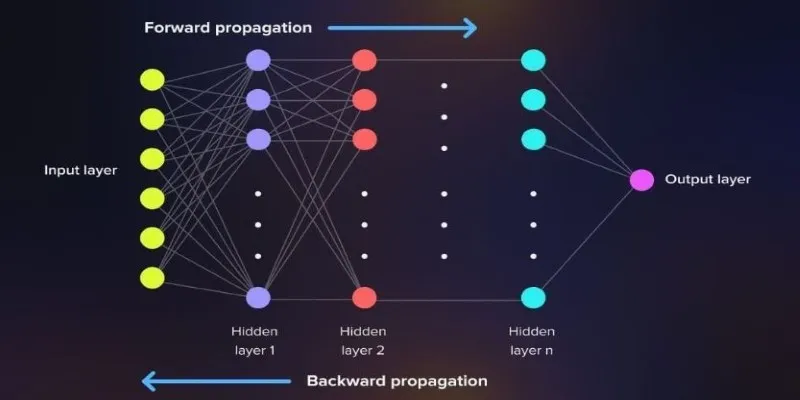

A neural network consists of layers of connected neurons. Input data passes through these layers; at each neuron, the inputs are multiplied by weights, summed together with a bias, and passed through an activation function. The output of the final layer is the network’s prediction. Before training, the weights are typically set at random, so early predictions are often inaccurate. That’s where backpropagation becomes essential.

The network first measures how far its prediction deviates from the correct answer; this quantity is called the error, or loss. Using the chain rule from calculus, the network then works backward from the output layer, calculating how much each weight contributed to that error, and the weights are adjusted layer by layer to reduce it. This process repeats over many examples until the error stops improving meaningfully. Essentially, backpropagation teaches the neural network how to learn from its mistakes.
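To make the chain rule concrete, consider the simplest possible case: a single neuron with one input x, weight w, bias b, a sigmoid activation σ, and a squared-error loss against a target y. These choices are assumptions for illustration; real networks have many neurons and may use other activations and losses.

```latex
z = w x + b, \qquad \hat{y} = \sigma(z), \qquad L = \tfrac{1}{2}\,(\hat{y} - y)^2

\frac{\partial L}{\partial w}
  = \frac{\partial L}{\partial \hat{y}} \cdot \frac{\partial \hat{y}}{\partial z} \cdot \frac{\partial z}{\partial w}
  = (\hat{y} - y)\,\sigma(z)\bigl(1 - \sigma(z)\bigr)\,x

\frac{\partial L}{\partial b}
  = (\hat{y} - y)\,\sigma(z)\bigl(1 - \sigma(z)\bigr)
```

In a deeper network the same idea repeats: the factor ∂L/∂z computed for one layer is reused to compute the gradients of the layer before it, which is why the error is said to flow backward.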

How Backpropagation Works Step by Step

Backpropagation follows a structured process to help neural networks learn from errors and improve their predictions over time.

Forward Pass: Generating an Output

The process begins with a forward pass, in which input data flows through the network layer by layer. Each neuron computes a weighted sum of its inputs, adds a bias, and applies an activation function, passing the result forward until the final output is produced. During training, this output is then compared with the expected result; because it almost never matches exactly, the network corrects itself through backpropagation after every forward pass.
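Here is a minimal sketch of a forward pass in plain Python. The network shape (two inputs, two hidden neurons, one output), the sigmoid activation, the helper names, and the parameter values are all hypothetical, chosen only to show how data flows from one layer to the next.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """One fully connected layer: each neuron takes a weighted sum of
    all inputs, adds its bias, then applies the sigmoid activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(x, params):
    """Forward pass: data flows input -> hidden layer -> output layer."""
    hidden = dense_layer(x, params["W1"], params["b1"])
    return dense_layer(hidden, params["W2"], params["b2"])


# Hypothetical, hand-picked parameters for a 2-input, 2-hidden, 1-output network.
params = {
    "W1": [[0.5, -0.4], [0.3, 0.8]],  # one row of input weights per hidden neuron
    "b1": [0.0, 0.1],
    "W2": [[1.2, -0.7]],              # a single output neuron reading both hidden neurons
    "b2": [0.05],
}

prediction = forward([1.0, 0.0], params)
print(prediction)  # a one-element list holding a value between 0 and 1
```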

Computing the Loss: Measuring the Error

After each forward pass, the network calculates the error by comparing the predicted output with the actual result. This difference is quantified by a loss function, such as mean squared error, which summarizes how far off the prediction was as a single number. The goal of training is to minimize this loss by adjusting the network’s internal parameters.
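Mean squared error is one common loss function for numeric outputs (classification networks often use cross-entropy instead). A minimal sketch, assuming predictions and targets arrive as equal-length lists of numbers; the function name is illustrative:

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences between predicted and true values.

    Squaring makes every term non-negative and penalizes large mistakes
    more heavily than small ones."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(predictions)


print(mean_squared_error([0.9, 0.2], [1.0, 0.0]))  # about 0.025
```

A perfect prediction gives a loss of 0, and the value grows quickly as predictions drift away from the targets.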

Adjusting Weights Using Gradient Descent

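Once the loss is known, the backward pass begins: the network works backward through its layers, using the chain rule to compute the gradient of the loss with respect to every weight, that is, how much a small change in each weight would change the error. An optimization algorithm called gradient descent then nudges each weight a small step in the direction that reduces the loss, with the step size set by a learning rate. Repeated over many examples, these small updates guide the network toward more accurate predictions.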
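The sketch below walks through one training step for a single sigmoid neuron with loss L = 0.5 * (y_hat - y)**2. The function name `train_step`, the learning rate of 0.5, and the single-neuron setup are assumptions for illustration. The backward pass applies the chain rule one factor at a time, and gradient descent then moves each parameter against its gradient.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_step(w, b, x, y, learning_rate=0.5):
    """One forward pass, backward pass, and gradient-descent update.

    Returns the updated (w, b) and the loss measured before the update."""
    # Forward pass
    z = w * x + b
    y_hat = sigmoid(z)
    loss = 0.5 * (y_hat - y) ** 2

    # Backward pass: chain rule, from the loss back to each parameter
    dL_dyhat = y_hat - y              # derivative of 0.5 * (y_hat - y)**2
    dyhat_dz = y_hat * (1.0 - y_hat)  # derivative of the sigmoid
    dL_dz = dL_dyhat * dyhat_dz
    dL_dw = dL_dz * x                 # because dz/dw = x
    dL_db = dL_dz                     # because dz/db = 1

    # Gradient descent: step each parameter against its gradient
    w -= learning_rate * dL_dw
    b -= learning_rate * dL_db
    return w, b, loss


w, b = 0.0, 0.0
for step in range(3):
    w, b, loss = train_step(w, b, x=1.0, y=1.0)
    print(f"step {step}: loss before update = {loss:.4f}")
```

Starting from w = b = 0, the first reported loss is 0.125, and each later step reports a smaller value because the previous update moved the prediction toward the target.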

Iterative Learning and Refinement

Backpropagation is applied repeatedly throughout training. With each training cycle, the network refines its weights, gradually reducing the loss. Over many iterations (one full pass over the training data is called an epoch), it learns to recognize patterns in the data, which ideally allows it to generalize and make accurate predictions on new, unseen inputs.
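The iterative side of training can be sketched by repeating the same kind of update many times. This sketch assumes a toy dataset (the logical AND function), a single two-input sigmoid neuron, full-batch gradient descent with a learning rate of 2.0, and 10,000 epochs; the names and settings are illustrative choices, not recommendations.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Toy dataset (an assumption for illustration): the logical AND function.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]


def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def mean_loss(weights, bias):
    return sum(0.5 * (predict(weights, bias, x) - y) ** 2 for x, y in DATA) / len(DATA)


def train(epochs=10_000, learning_rate=2.0):
    weights, bias = [0.0, 0.0], 0.0
    history = []
    for epoch in range(epochs):
        # Accumulate the gradient averaged over the whole dataset.
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in DATA:
            y_hat = predict(weights, bias, x)
            dL_dz = (y_hat - y) * y_hat * (1.0 - y_hat)  # chain rule through the sigmoid
            for i, xi in enumerate(x):
                grad_w[i] += dL_dz * xi / len(DATA)
            grad_b += dL_dz / len(DATA)
        # One gradient-descent update per epoch.
        weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b
        if epoch % 2_000 == 0:
            history.append(mean_loss(weights, bias))
    return weights, bias, history


weights, bias, history = train()
print(history)  # loss recorded every 2,000 epochs; it should shrink over time
print([round(predict(weights, bias, x)) for x, _ in DATA])
```

Because every update uses the gradient averaged over the whole dataset and the step size is modest, the recorded losses should decrease steadily. With larger models and datasets, practitioners usually update on smaller mini-batches instead.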

Why Backpropagation Is Essential for Neural Networks

Without backpropagation, training deep neural networks would be nearly impossible. This algorithm allows artificial intelligence models to refine their decision-making over time. It is the foundation behind speech recognition, image processing, natural language understanding, and countless other AI applications.

One of backpropagation’s greatest strengths is its efficiency. Instead of adjusting weights blindly or testing each one separately, it computes the gradient for every weight in a single backward pass, at a cost only a small multiple of a forward pass, so it pinpoints where improvements are needed. This leads to faster learning and makes it practical to train deep models on complex tasks that traditional algorithms struggle with.
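A standard way to see what backpropagation saves is gradient checking: compare its gradients with estimates obtained by nudging each weight separately (finite differences). The sketch assumes a single sigmoid neuron without a bias and hypothetical input values. The finite-difference estimate needs two loss evaluations per weight, so its cost grows with the number of weights, while the backpropagated gradient comes from one forward and one backward pass.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss(weights, x, y):
    """Squared-error loss of one sigmoid neuron (bias omitted to keep the sketch short)."""
    y_hat = sigmoid(sum(w * xi for w, xi in zip(weights, x)))
    return 0.5 * (y_hat - y) ** 2


def backprop_gradient(weights, x, y):
    """All partial derivatives from one forward and one backward pass."""
    y_hat = sigmoid(sum(w * xi for w, xi in zip(weights, x)))
    dL_dz = (y_hat - y) * y_hat * (1.0 - y_hat)
    return [dL_dz * xi for xi in x]


def numerical_gradient(weights, x, y, eps=1e-6):
    """Central differences: nudge each weight on its own, costing two
    loss evaluations per weight."""
    grads = []
    for i in range(len(weights)):
        plus = list(weights)
        plus[i] += eps
        minus = list(weights)
        minus[i] -= eps
        grads.append((loss(plus, x, y) - loss(minus, x, y)) / (2 * eps))
    return grads


weights, x, y = [0.2, -0.5, 0.1], [1.0, 2.0, -1.0], 1.0
analytic = backprop_gradient(weights, x, y)
numeric = numerical_gradient(weights, x, y)
print(max(abs(a - n) for a, n in zip(analytic, numeric)))  # should be tiny
```

With three weights the difference in cost is negligible, but a network with millions of weights would need millions of extra loss evaluations per finite-difference estimate, which is why gradient checking is used only for debugging.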

For example, in facial recognition technology, a neural network must pick up subtle differences between faces. Without an effective learning method, the system would struggle to distinguish between similar-looking individuals. Backpropagation allows the network to keep refining its ability to recognize distinctive facial features, typically becoming more accurate as training continues.

Despite its advantages, backpropagation is not without challenges. It requires large amounts of data and computational power, and training deep networks can be time-consuming, especially with limited resources. Additionally, there’s always the risk of overfitting, where the model becomes too specialized in the training data and struggles with new inputs. However, with careful tuning and techniques such as regularization, which discourages overly complex models, these challenges can usually be managed.
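Regularization can be folded directly into the gradient-descent update. One common form, L2 regularization (often called weight decay), adds a penalty proportional to the squared size of the weights, which pulls every weight slightly toward zero on each step. A minimal sketch, with a hypothetical function name and illustrative values for the learning rate and the regularization strength `l2_lambda`:

```python
def sgd_step_with_l2(weights, grads, learning_rate=0.1, l2_lambda=0.01):
    """One gradient-descent step on loss + (l2_lambda / 2) * sum(w ** 2).

    The penalty's gradient is l2_lambda * w, so each weight is pulled
    toward zero in addition to following the loss gradient."""
    return [w - learning_rate * (g + l2_lambda * w) for w, g in zip(weights, grads)]


# With a zero loss gradient, only the decay acts: each weight shrinks by 0.1%.
print(sgd_step_with_l2([1.0, -2.0], [0.0, 0.0]))
```

A larger `l2_lambda` shrinks weights more aggressively, trading some fit on the training data for simpler models that tend to generalize better.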

The Future of Backpropagation and Machine Learning

Backpropagation has long been a fundamental technique in AI, but researchers are continuously seeking ways to improve it. Some are exploring biologically inspired learning methods that mimic how the human brain processes information, aiming to create more adaptive and efficient neural networks. Others focus on optimizing backpropagation itself, working to reduce its computational demands so that AI models can be trained faster and with fewer resources.

Despite emerging alternatives, backpropagation remains essential for deep learning. Its ability to refine predictions and improve accuracy has transformed industries like healthcare, finance, and automation. As AI continues to evolve, backpropagation will likely be enhanced rather than replaced, ensuring that machine learning models become even more efficient and capable. The future may bring hybrid approaches that combine backpropagation with new learning techniques, pushing the boundaries of AI’s potential and making intelligent systems more accessible to a wider range of applications.

Conclusion

Backpropagation in neural networks forms the backbone of modern AI, enabling machines to improve their accuracy through continuous learning. By measuring errors and adjusting internal weights to reduce them, this method turns raw data into useful predictions. While it comes with challenges, its efficiency and effectiveness make it indispensable in deep learning. As technology progresses, backpropagation will likely evolve alongside it, helping AI systems become even more capable.
