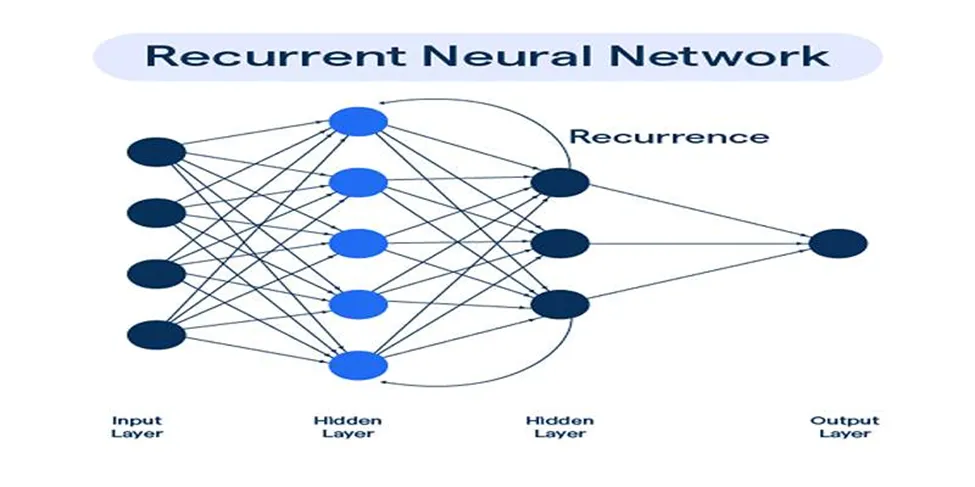

Recurrent Neural Networks (RNNs) are a class of artificial neural networks designed to process sequential data, where elements arrive in a specific order. Unlike traditional feedforward neural networks, RNNs retain information from previous steps, which allows them to detect patterns that unfold over time. This makes them well suited to tasks where the order of the data matters, such as stock market prediction or text generation.

This post explains what RNNs are, how they work, where they are used, and the challenges involved in training them. The goal is to keep the concepts simple so that anyone can follow how RNNs operate and why they matter for processing sequential data.

What is Sequential Data?

Sequential data is information in which the order of elements is crucial. Each element often depends on the elements before it, so the data must be processed in sequence. This type of data is common in many fields, including:

- Text Data: Sentences and paragraphs processed by language models, where word order affects meaning.

- Speech Data: Audio signals, where each sound is interpreted in the context of the sounds that precede it.

- Time-Series Data: Stock prices and weather measurements, where past values influence future values.

For instance, in natural language processing (NLP), understanding a sentence requires knowing how the words relate to one another and how together they convey meaning. RNNs are well suited to such scenarios because they process a sequence one step at a time and carry information from past inputs forward.

The Basics of RNNs: How Do They Work?

RNNs work by applying the same layer repeatedly, once per time step, and passing information from one step to the next. At each time step, the network combines the new input with information carried over from previous steps. Here’s a simplified breakdown of the process:

- Input Layer: Data is fed into the RNN one time step at a time. In speech recognition, each input could be a frame of an audio signal; in text processing, it could be a word or character.

- Hidden State: At each time step, the RNN updates its hidden state based on the current input and the previous hidden state. This hidden state serves as the network’s “memory,” enabling it to remember prior inputs.

- Output Layer: The network then produces an output based on the current hidden state. This output could be a prediction, such as the next word in a sentence or the next value in a stock price series.
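In the common "vanilla" (Elman-style) formulation, the three steps above correspond to two equations. The weight names ($W_{xh}$, $W_{hh}$, $W_{hy}$), the biases, and the choice of $\tanh$ as the activation are standard conventions assumed here, not details given in this post:

$$
\begin{aligned}
h_t &= \tanh\left(W_{xh}\,x_t + W_{hh}\,h_{t-1} + b_h\right) \\
y_t &= W_{hy}\,h_t + b_y
\end{aligned}
$$

Here $x_t$ is the input at time step $t$, $h_{t-1}$ is the previous hidden state (often initialised to zeros), and $y_t$ is the output. The same weights are reused at every time step.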

The defining feature of RNNs is this loop: the same weights are applied at every time step, and the hidden state is carried forward as the network moves through the sequence. This mechanism lets RNNs process data in which the order of elements is critical.
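To make the loop concrete, here is a minimal forward pass of a vanilla RNN in plain Python. It is a sketch under stated assumptions: a tanh activation, a zero initial hidden state, and small random weights. The helper names (`matvec`, `rnn_forward`, `rand_matrix`) are hypothetical, and no training is shown; in practice you would typically use a framework's built-in recurrent layer.

```python
import math
import random

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def rnn_forward(xs, W_xh, W_hh, b_h, W_hy, b_y):
    """Run a vanilla RNN over a sequence of input vectors.

    Returns the list of outputs y_t and the final hidden state.
    The same weights are reused at every time step.
    """
    h = [0.0] * len(b_h)  # assumed initial hidden state h_0 = 0
    ys = []
    for x in xs:
        # h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)
        pre = [a + b + c for a, b, c in zip(matvec(W_xh, x), matvec(W_hh, h), b_h)]
        h = [math.tanh(p) for p in pre]
        # y_t = W_hy h_t + b_y
        ys.append([a + b for a, b in zip(matvec(W_hy, h), b_y)])
    return ys, h

def rand_matrix(rows, cols, rng, scale=0.5):
    """Hypothetical helper: small random weights for illustration only."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
input_size, hidden_size, output_size = 3, 4, 2
W_xh = rand_matrix(hidden_size, input_size, rng)
W_hh = rand_matrix(hidden_size, hidden_size, rng)
W_hy = rand_matrix(output_size, hidden_size, rng)
b_h = [0.0] * hidden_size
b_y = [0.0] * output_size

sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # 3 time steps
outputs, h_final = rnn_forward(sequence, W_xh, W_hh, b_h, W_hy, b_y)
print(len(outputs), len(outputs[0]), len(h_final))  # 3 2 4
```

The network produces one output per time step, and the final hidden state summarises the whole sequence.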

Applications of Recurrent Neural Networks

RNNs are extensively used in several applications due to their ability to handle sequential data. Here are some common uses:

- Natural Language Processing (NLP): RNNs are used for language modeling, machine translation, and text generation. Because text is inherently sequential (words follow a specific order), RNNs are well suited to understanding and generating human language.

- Speech Recognition: Speech data is sequential because audio signals are recorded over time. RNNs can convert speech to text by identifying patterns in audio sequences.

- Time-Series Prediction: RNNs are commonly employed for time-series data prediction, such as stock prices or weather patterns. The model can learn from historical data to forecast future trends.

- Music Generation: RNNs can also be trained to generate music by learning from sequences of musical notes. They can create compositions that mimic existing music styles.
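Several of these applications (next-word prediction, time-series forecasting, music generation) are commonly framed as predicting the next element of a sequence from the elements before it. The sketch below assumes a fixed-size sliding window and shows how a raw sequence can be turned into (input, target) training pairs. `make_windows` is a hypothetical helper, and the prices are made-up illustrative values.

```python
def make_windows(sequence, window):
    """Split a sequence into (input window, next element) training pairs.

    Each input holds `window` consecutive elements and the target is the
    element that immediately follows them.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        (sequence[i:i + window], sequence[i + window])
        for i in range(len(sequence) - window)
    ]

# Time series: predict the next value from the previous three (illustrative numbers).
prices = [101.0, 102.5, 101.8, 103.2, 104.0]
for inputs, target in make_windows(prices, 3):
    print(inputs, "->", target)
# [101.0, 102.5, 101.8] -> 103.2
# [102.5, 101.8, 103.2] -> 104.0

# Text: predict the next character from the previous four.
for inputs, target in make_windows("hello", 4):
    print(repr(inputs), "->", repr(target))
# 'hell' -> 'o'
```

The same function works on lists of numbers and on strings, since both support slicing.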

Key Features of RNNs

RNNs possess several features that make them suitable for processing sequential data:

- Memory: RNNs are unique because they can remember previous inputs in their hidden state, allowing them to understand the context of sequential data.

- Flexibility: RNNs can process sequences of varying lengths, because the same weights are applied at every time step. This makes them applicable to a wide range of data types.

- Dynamic Temporal Behavior: Because the hidden state changes with every new input, RNNs can model how a sequence evolves over time, making them suitable for time-series forecasting and other sequential data tasks.
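The first two features can be seen even in a one-unit RNN. The sketch below assumes scalar weights with illustrative values (`w=0.8`, `u=1.0`, `b=0.0`) and a hypothetical function name, `final_state`. The same three parameters handle sequences of any length, and changing only the first input still changes the final hidden state, which is the "memory" described above.

```python
import math

def final_state(xs, w=0.8, u=1.0, b=0.0):
    """Scalar RNN h_t = tanh(w*h_{t-1} + u*x_t + b); returns h_T.

    The parameters are shared across all time steps, so the
    function accepts sequences of any length.
    """
    h = 0.0  # initial hidden state
    for x in xs:
        h = math.tanh(w * h + u * x + b)
    return h

# Same parameters, different sequence lengths.
print(final_state([0.5, -0.2]))
print(final_state([0.5, -0.2, 0.1, 0.3, -0.4, 0.0, 0.2]))

# Memory: changing only the first input changes the final state.
print(final_state([1.0, 0.0, 0.0]) != final_state([0.0, 0.0, 0.0]))  # True
```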

Challenges in Training RNNs

While RNNs are powerful, they face challenges, particularly with long sequences. Common issues include:

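- Vanishing Gradient Problem: RNNs are trained with backpropagation through time, in which the gradient (used for weight updates) that reaches an early time step is a product of one factor per intervening step. On long sequences, when these factors are small (below 1 in magnitude), the product shrinks toward zero, so the model struggles to learn from the early parts of the sequence. When the factors are large, the opposite can happen: gradients grow very large, which is known as the exploding gradient problem.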

- Long Training Times: RNNs can take a long time to train, especially on large datasets, because each sequence must be processed step by step. A time step cannot be computed until the previous one has finished, which limits parallelism across the sequence.
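The vanishing gradient problem can be observed directly in a one-unit RNN. For $h_t = \tanh(w h_{t-1} + u x_t)$, each step contributes a factor $\partial h_t / \partial h_{t-1} = w\,(1 - h_t^2)$, and backpropagation through time multiplies these factors together. The sketch below assumes scalar weights with illustrative values (`w=0.5`, `u=1.0`) and uses hypothetical function names; it computes that product for increasing sequence lengths.

```python
import math

def rnn_states(h0, xs, w, u):
    """Run a scalar tanh RNN h_t = tanh(w*h_{t-1} + u*x_t); return [h_0, ..., h_T]."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs

def grad_final_wrt_initial(h0, xs, w, u):
    """dh_T/dh_0 via the chain rule (backpropagation through time).

    Each step contributes dh_t/dh_{t-1} = w * (1 - h_t**2), so the
    gradient is the product of T such factors.
    """
    hs = rnn_states(h0, xs, w, u)
    grad = 1.0
    for h in hs[1:]:
        grad *= w * (1.0 - h * h)
    return grad

for T in (5, 20, 50):
    g = grad_final_wrt_initial(0.1, [0.5] * T, w=0.5, u=1.0)
    print(f"T={T:3d}  dh_T/dh_0 = {g:.3e}")
```

Here every factor is at most 0.5, so the gradient shrinks at least as fast as $0.5^T$ and the earliest steps have almost no influence on the weight update.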

Solutions to RNN Challenges

To address these challenges, researchers have developed advanced RNN variants and training techniques, such as:

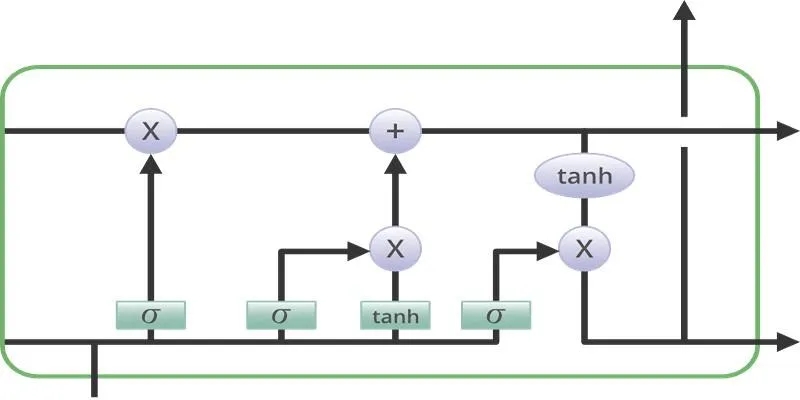

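- Long Short-Term Memory (LSTM): LSTMs are a type of RNN specifically designed to tackle the vanishing gradient problem. Alongside the hidden state, they maintain a cell state controlled by input, forget, and output gates, which decide what information to write, keep, or discard. This lets them retain information over long sequences.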

- Gated Recurrent Units (GRU): GRUs are another RNN variant that, like LSTMs, help retain long-term information and mitigate vanishing gradients. They use only two gates (update and reset) and no separate cell state, so they are simpler than LSTMs while remaining effective for many tasks.

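- Gradient Clipping: Rather than a new architecture, gradient clipping is a training technique used to prevent exploding gradients. When the gradient's norm exceeds a chosen threshold, the gradient is scaled down before the weights are updated, keeping each update at a manageable size.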
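Gradient clipping is often applied to the combined (global) norm of all gradients. The following is a minimal sketch, assuming the gradients have been flattened into a single list of floats and using an illustrative threshold. `clip_by_global_norm` is a hypothetical helper here; deep learning frameworks ship their own versions, such as PyTorch's `torch.nn.utils.clip_grad_norm_`.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so their combined L2 norm is at most max_norm.

    Returns (clipped_grads, original_norm). If the norm is already within
    the limit, the gradients are returned unchanged; otherwise every
    component is multiplied by the same factor, preserving the direction.
    """
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm or total_norm == 0.0:
        return list(grads), total_norm
    scale = max_norm / total_norm
    return [g * scale for g in grads], total_norm

grads = [30.0, -40.0]  # norm = 50, an "exploded" gradient
clipped, norm = clip_by_global_norm(grads, max_norm=5.0)
print(norm, clipped)  # 50.0 [3.0, -4.0]
```

Scaling by a single factor keeps the update pointing in the same direction and only limits its size. Clipping does not help with vanishing gradients, which is why it is often paired with gated architectures such as LSTMs and GRUs.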

Conclusion

Recurrent Neural Networks (RNNs) are essential for processing sequential data, offering powerful capabilities in tasks like natural language processing, speech recognition, and time-series forecasting. Despite challenges like the vanishing gradient problem, advances such as LSTMs and GRUs have made RNNs considerably more effective. Understanding RNNs and their applications is valuable for anyone working with sequential data. By using these networks, you can build systems that take the context of data over time into account and that predict and generate new sequences.
