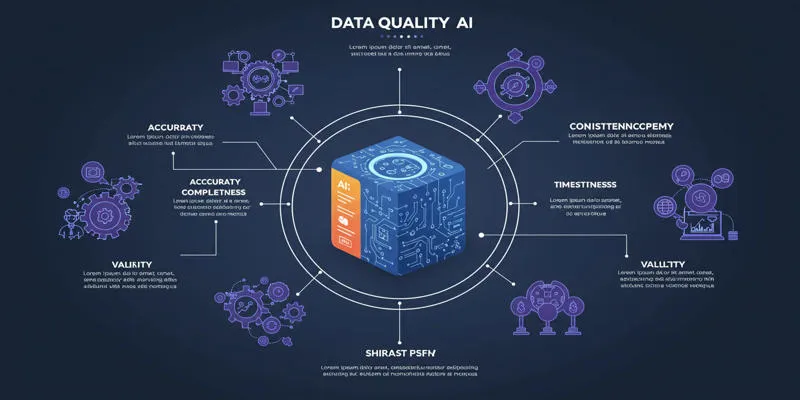

In today’s data-driven world, information is at the heart of decision-making, analytics, and automation. However, raw data is often far from perfect, plagued by inconsistencies, duplications, incorrect formats, and even outright errors. This is where data scrubbing becomes essential.

Data scrubbing is a rigorous and systematic approach that goes beyond basic data cleaning. While cleaning might fix a few typos or formatting errors, scrubbing ensures that data is accurate, consistent, and reliable for analytical or computational use. This comprehensive guide will explore the ins and outs of data scrubbing, its processes, and its significance in maintaining data quality.

Data Scrubbing vs. Data Cleaning: Understanding the Differences

Although the terms are often used interchangeably, data cleaning and data scrubbing differ in scope:

- Data Cleaning: This process addresses minor, obvious issues like spelling errors, misplaced decimal points, or inconsistent capitalization.

- Data Scrubbing: This method includes everything in data cleaning and goes further. It involves logic-based checks, de-duplication, validation, and structural corrections to align the dataset with defined standards.

Think of data cleaning as tidying up a room, while data scrubbing is like a deep cleanse that removes unseen grime.

Key Issues Addressed by Data Scrubbing

During the scrubbing process, several data errors are targeted:

- Inaccuracies: Incorrect or outdated values.

- Duplicates: Repeated entries that skew analysis and inflate data.

- Formatting Issues: Misaligned formats that hinder proper processing.

- Null or Missing Data: Empty fields that must be filled, flagged, or removed.

- Inconsistencies: Conflicting values for the same variable across records.

The goal is to eliminate these errors and ensure every data point adheres to predetermined rules and standards.

Core Steps in the Data Scrubbing Process

Data scrubbing typically involves a series of structured steps:

1. Data Profiling

This step examines the dataset to understand its structure, patterns, and content. Profiling highlights critical issues like excessive null values, unexpected data types, or inconsistent patterns.
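A minimal profiling sketch, using only the Python standard library, might look like the following. The sample records and the `profile` helper are hypothetical and exist only for illustration; the sketch treats `None` and empty strings as missing, which is an assumption rather than a universal rule.

```python
from collections import Counter

# Hypothetical sample records; None and "" stand in for missing values.
records = [
    {"id": 1, "email": "ana@example.com", "age": 34},
    {"id": 2, "email": "", "age": "41"},
    {"id": 3, "email": None, "age": 29},
]


def is_missing(value):
    """Treat None and empty strings as missing (an assumption of this sketch)."""
    return value is None or value == ""


def profile(rows):
    """Summarize missing-value counts and observed Python types per column."""
    columns = sorted({key for row in rows for key in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if not is_missing(v)]
        report[col] = {
            "missing": len(values) - len(present),
            "types": dict(Counter(type(v).__name__ for v in present)),
        }
    return report


report = profile(records)
for col, stats in report.items():
    print(col, stats)
```

Here the mixed `int` and `str` types reported for `age` are the kind of unexpected data type that profiling is meant to surface before any correction is attempted.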

2. Defining Standards

Before cleaning begins, clear rules and data quality metrics are defined. This might include formatting rules for dates, acceptable value ranges, and criteria for identifying duplicates.
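One way to make such standards explicit is to write them down as small, testable rules. The sketch below is illustrative only: the field names (`signup_date`, `age`, `country`), the accepted ranges, and the `DUPLICATE_KEY` setting are assumptions, not standards taken from any particular organization.

```python
import re
from datetime import datetime

ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def is_iso_date(value):
    """True if value is a YYYY-MM-DD string naming a real calendar date."""
    if not isinstance(value, str) or not ISO_DATE.fullmatch(value):
        return False
    try:
        datetime.strptime(value, "%Y-%m-%d")
    except ValueError:
        return False
    return True


# Hypothetical standards: one validity rule per field.
STANDARDS = {
    "signup_date": is_iso_date,
    "age": lambda v: type(v) is int and 0 <= v <= 120,
    "country": lambda v: v in {"US", "CA", "MX"},
}

# Hypothetical duplicate criterion: rows sharing these fields count as duplicates.
DUPLICATE_KEY = ("email",)

print(is_iso_date("2024-02-29"), is_iso_date("02/29/2024"))
```

Keeping the rules in one place means the later detection and validation steps can reuse the same definitions instead of restating them.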

3. Error Detection

Using algorithms or validation scripts, the scrubbing tool scans the dataset for issues based on the defined standards. Errors are flagged for correction or removal.
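As a rough illustration, a validation script can be as simple as a loop that applies each rule to each record and collects the violations. The rules, records, and the `detect_errors` helper below are hypothetical; the email check in particular is deliberately naive.

```python
# Hypothetical rules: each maps a field name to a validity check.
RULES = {
    "age": lambda v: type(v) is int and 0 <= v <= 120,
    "email": lambda v: isinstance(v, str) and "@" in v,  # naive check, for illustration
}

records = [
    {"id": 1, "email": "ana@example.com", "age": 34},
    {"id": 2, "email": "bob.example.com", "age": 41},
    {"id": 3, "email": "cy@example.com", "age": 212},
]


def detect_errors(rows, rules):
    """Return (row_index, field, value) for every rule violation found."""
    flagged = []
    for index, row in enumerate(rows):
        for field, is_valid in rules.items():
            value = row.get(field)
            if not is_valid(value):
                flagged.append((index, field, value))
    return flagged


errors = detect_errors(records, RULES)
for row_index, field, value in errors:
    print(f"row {row_index}: invalid {field!r} value {value!r}")
```

Note that this step only flags problems. Deciding whether to correct or remove each flagged value is left to the next step.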

4. Correction or Removal

Depending on the issue’s severity, flagged data may be corrected, replaced, or deleted. Automated tools often assist in applying these decisions consistently.
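The sketch below shows one possible policy: repair values that can be fixed without guessing, and set aside rows that cannot be repaired. The specific choices (lowercasing emails, converting numeric strings, dropping rows without an `id`) are assumptions for illustration; a real policy depends on the standards defined earlier.

```python
def scrub(rows):
    """Correct values that can be fixed safely; set aside rows that cannot."""
    kept, dropped = [], []
    for row in rows:
        fixed = dict(row)  # work on a copy so the input stays untouched

        # Correct: trim whitespace and lowercase email addresses.
        if isinstance(fixed.get("email"), str):
            fixed["email"] = fixed["email"].strip().lower()

        # Correct: numeric strings such as "34" become integers.
        age = fixed.get("age")
        if isinstance(age, str) and age.strip().isdecimal():
            fixed["age"] = int(age.strip())

        # Remove: a row without an id cannot be traced back, so drop it.
        if fixed.get("id") is None:
            dropped.append(row)
        else:
            kept.append(fixed)
    return kept, dropped


records = [
    {"id": 1, "email": "  Ana@Example.COM ", "age": "34"},
    {"id": None, "email": "x@example.com", "age": 20},
]
kept, dropped = scrub(records)
print(kept)
print(dropped)
```

Returning the dropped rows instead of discarding them silently keeps the removals reviewable.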

5. Final Validation

The clean dataset is checked against the original standards to ensure all corrections have been properly applied. A quality score or error log may be generated for auditing purposes.
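A quality score can be defined in many ways. The sketch below assumes one simple definition, the fraction of field-level checks that pass, and produces an error log alongside it. The rules, sample rows, and the `quality_report` helper are hypothetical.

```python
# Hypothetical rules, standing in for the standards defined earlier.
RULES = {
    "age": lambda v: type(v) is int and 0 <= v <= 120,
    "email": lambda v: isinstance(v, str) and "@" in v,
}


def quality_report(rows, rules):
    """Re-check rows against the rules; return (score, error_log).

    The score is the share of field-level checks that passed, from 0.0 to 1.0.
    """
    error_log = []
    checks = 0
    for index, row in enumerate(rows):
        for field, is_valid in rules.items():
            checks += 1
            value = row.get(field)
            if not is_valid(value):
                error_log.append({"row": index, "field": field, "value": value})
    score = 1.0 if checks == 0 else 1 - len(error_log) / checks
    return score, error_log


scrubbed = [
    {"email": "ana@example.com", "age": 34},
    {"email": "bo@example.com", "age": None},
]
score, error_log = quality_report(scrubbed, RULES)
print(f"quality score: {score:.2f}")
print(error_log)
```

An empty error log and a score of 1.0 would indicate that every check passed. Anything less points back to records that still need attention.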

The Benefits of Data Scrubbing

Data scrubbing does more than tidy up spreadsheets: it directly affects how effectively data can be used. Notable advantages include:

- Improved Accuracy: Correcting errors leads to better analytical outcomes.

- Consistency: Standard formats and values are applied across the dataset.

- Efficiency: Clean data reduces time spent troubleshooting errors later.

- Compliance: Adhering to internal data standards becomes easier.

- Optimization: Removing unnecessary entries makes data lighter and faster to process.

Data Scrubbing Techniques

Data scrubbing involves various techniques, each addressing different data issues. These techniques ensure the dataset is not just clean, but also reliable and ready for use:

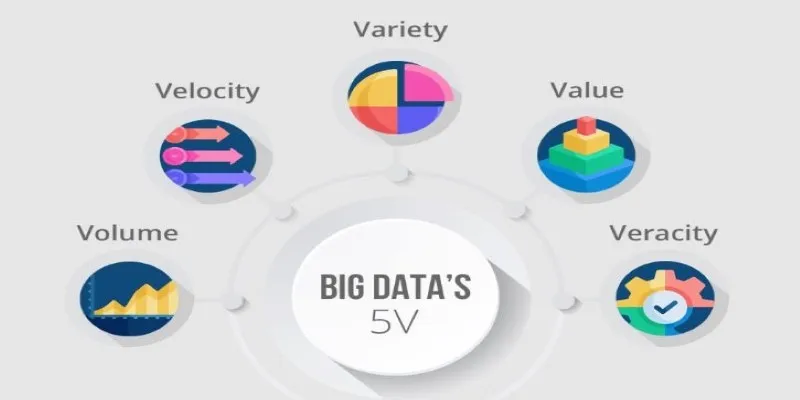

- Standardization: Ensures uniformity in data formats and naming conventions, eliminating confusion and improving grouping and reporting accuracy.

- Deduplication: Identifies and merges or eliminates duplicate entries by comparing key identifiers like names or IDs.

- Field Validation: Ensures data entries meet specific criteria, checking formats for correctness and flagging invalid inputs.

- Outlier Detection: Highlights values that deviate significantly from the norm. These often indicate data entry errors, though some are legitimate extremes that merit review.

- Normalization: Converts values to a common unit or format when data comes from different sources, aligning scale and measurement systems.

These techniques form the core of an effective scrubbing strategy.
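Three of the techniques above (deduplication, outlier detection, and normalization) can be sketched with the standard library alone. Everything here is illustrative: the helper names and sample data are hypothetical, the outlier check uses the median-based "modified z-score" with the commonly cited 3.5 cutoff as one option among several, and the unit table covers only a few mass units.

```python
from statistics import median


def deduplicate(rows, key_fields):
    """Keep the first row for each key, comparing keys case-insensitively."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(str(row.get(f, "")).strip().lower() for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def find_outliers(values, threshold=3.5):
    """Flag values whose modified z-score (median/MAD based) exceeds threshold."""
    center = median(values)
    mad = median([abs(v - center) for v in values])
    if mad == 0:
        return []  # no spread around the median, so nothing can be scored
    return [v for v in values if abs(0.6745 * (v - center) / mad) > threshold]


# Conversion factors to kilograms (1 lb is defined as 0.45359237 kg).
UNIT_TO_KG = {"kg": 1.0, "g": 0.001, "lb": 0.45359237}


def to_kg(amount, unit):
    """Normalize a mass to kilograms; unknown units raise KeyError."""
    return amount * UNIT_TO_KG[unit.strip().lower()]


customers = [
    {"name": "Ana Diaz", "email": "ana@example.com"},
    {"name": "ana diaz ", "email": "ANA@example.com"},
    {"name": "Bo Li", "email": "bo@example.com"},
]
unique_customers = deduplicate(customers, ("email",))
outliers = find_outliers([52, 48, 50, 51, 49, 500])
weight = to_kg(2, "lb")

print(len(unique_customers), outliers, weight)
```

This deduplication keeps the first record and discards later ones. Merging fields from duplicate records, as some tools do, would need an explicit rule for resolving conflicts.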

Manual vs. Automated Data Scrubbing

While small datasets can be manually inspected and fixed, most modern scrubbing tasks use software tools. Manual scrubbing is time-consuming and prone to errors, especially with large datasets.

Automated tools allow users to define validation rules, track changes, and generate reports, handling thousands or millions of records with speed and consistency. Popular platforms include both open-source tools and enterprise-level solutions, offering features like multi-language support and database integration.
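To give a sense of what rule definition and change tracking involve, here is a small sketch that applies user-defined transforms and records every value it changes. It does not reproduce any particular tool; the `apply_with_audit` helper, the transforms, and the sample rows are all hypothetical.

```python
def apply_with_audit(rows, transforms):
    """Apply per-field transforms and log every value that changed."""
    cleaned = []
    change_log = []
    for index, row in enumerate(rows):
        new_row = dict(row)
        for field, transform in transforms.items():
            if field not in new_row:
                continue
            before = new_row[field]
            after = transform(before)
            if after != before:
                change_log.append(
                    {"row": index, "field": field, "before": before, "after": after}
                )
                new_row[field] = after
        cleaned.append(new_row)
    return cleaned, change_log


# Hypothetical user-defined rules: uppercase country codes, collapse extra spaces.
TRANSFORMS = {
    "country": lambda v: v.strip().upper(),
    "name": lambda v: " ".join(v.split()),
}

rows = [
    {"name": "Ana  Diaz", "country": "us"},
    {"name": "Bo Li", "country": "CA"},
]
cleaned, change_log = apply_with_audit(rows, TRANSFORMS)
for entry in change_log:
    print(entry)
```

The change log doubles as a simple report: it shows which rows were modified, by which rule, and what the original values were.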

When to Scrub Your Data

Regular scrubbing should be part of any structured data management workflow. It’s best to perform scrubbing:

- Before importing data into analytics tools.

- When migrating from one system to another.

- After merging data from multiple sources.

- On a scheduled basis (e.g., quarterly or semi-annually).

Even if your data is generated internally, small errors accumulate over time. Periodic scrubbing ensures datasets remain clean and usable long-term.

Conclusion

Data scrubbing is essential for maintaining high-quality, trustworthy datasets. Unlike basic cleaning, it offers a deeper, structured approach to identifying and eliminating errors.

By regularly scrubbing your data, you ensure it meets internal standards, performs well in analytics, and avoids costly mistakes. Clean data is the foundation of smart decision-making, and scrubbing is the tool that keeps it solid.
