AI systems succeed by using high-quality data during training. Quality data leads to reliable forecasts, trustworthy insights, and sound decisions, while poorly maintained information results in faulty outputs and skewed models, potentially damaging reputations. Organizations leveraging AI for innovation must understand and address fundamental data quality issues, as their success hinges on it. This article explores nine crucial data quality problems in AI systems and offers methodical solutions to help users achieve optimal results.

Why Data Quality Matters in AI

The reliability of AI

depends entirely on the quality of data used to build AI systems. AI models

perform poorly when processing low-quality data due to missing information,

incorrect details, biased elements, or outdated characteristics. These issues

illustrate the importance of maintaining high-quality data throughout the AI

development process.

The reliability of AI

depends entirely on the quality of data used to build AI systems. AI models

perform poorly when processing low-quality data due to missing information,

incorrect details, biased elements, or outdated characteristics. These issues

illustrate the importance of maintaining high-quality data throughout the AI

development process.

Organizations must tackle common data quality challenges to optimize AI systems effectively and reduce operational risks.

9 Common Data Quality Issues in AI

1. Incomplete Data

Accurate model training requires all essential information within datasets. Missing data values create gaps that lead to inaccurate predictions, reducing model reliability. For instance, a healthcare AI system needs patient demographic information to avoid generating inaccurate diagnoses.

To ensure complete datasets, establish robust data collection methods. Imputation techniques can fill gaps without distorting results.

2. Inaccurate Data

Inaccurate data arises from errors during the collection process and measurement inaccuracies, leading to invalid outcomes in AI models. This can result in serious issues like financial errors and medical misdiagnoses.

Employ both automated and manual auditing to detect and correct errors in datasets before training sessions.

3. Outdated Data

Data becomes outdated when it no longer reflects current realities, leading decision-makers to base choices on irrelevant information. Using outdated market trends in predictive analytics can result in poor business decisions.

Schedule regular updates for datasets to maintain their relevance. Utilize automatic data stream systems when possible.

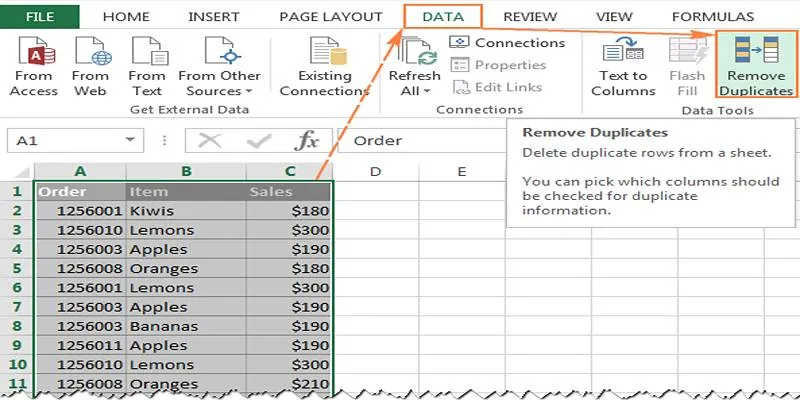

4. Irrelevant or Redundant Data

Data points without meaning or redundant information can confuse learning systems, degrading precision due to speculative elements. Unrelated customer feedback in sentiment analysis can diminish valuable insights.

Use feature selection methods to identify unnecessary variables, followed by information consolidation to create useful data formats.

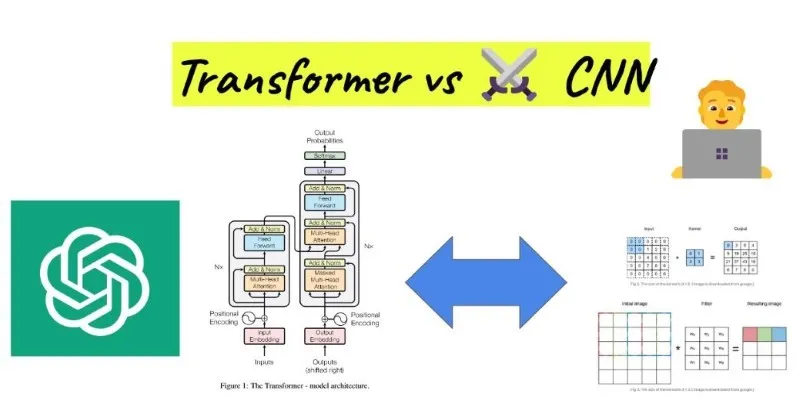

5. Poorly Labeled Data

Supervised learning heavily relies on datasets with specific labels. Labeling mistakes lead to incorrect class assignments, causing algorithms to develop faulty patterns.

Implement professional annotator teams and automated tools with active learning frameworks to achieve high-quality labeled data.

6. Biased Data

Data bias results from unbalanced group distributions in datasets, leading to discriminatory processing patterns. Facial recognition systems, for example, may fail to identify darker-skinned individuals due to racial biases in training data.

Gather training data from diverse populations using multiple demographic sources. Regular bias audits are crucial to uncover potential sources of bias.

7. Data Poisoning

Data poisoning involves malicious activity where attackers introduce faulty data into databases, resulting in biased training outcomes.

Protect against poisoning with anomaly detection systems to monitor unusual dataset patterns during preparation. Regular audits of training data integrity are essential.

8. Synthetic Data Feedback Loops

Synthetic data is increasingly used for dataset expansion, but excessive reliance can create feedback loops, disconnecting models from real-world conditions.

Use synthetic data alongside real data and validate synthetic outputs against real-world observations.

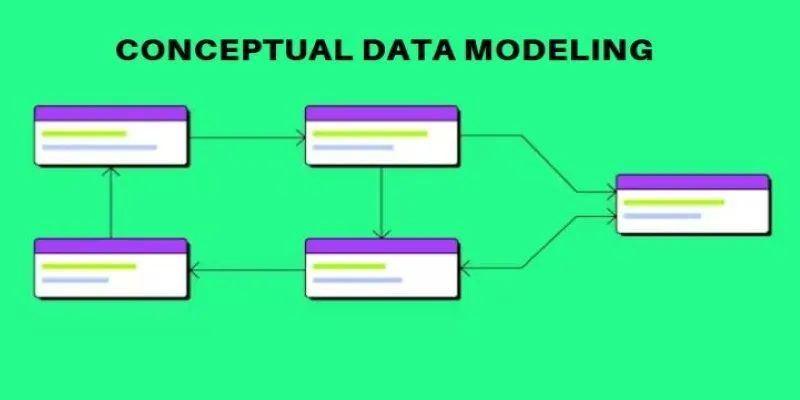

9. Lack of Governance Frameworks

Inconsistent data quality often results from the absence of proper governance frameworks, leading to data separation issues and integration errors.

Develop comprehensive governance policies to unify operational systems across departments and ensure compliance with GDPR and HIPAA standards.

Consequences of Poor Data Quality

- Poor data quality can damage software, harm reputations, and erode trust, causing financial losses.

- Faulty input data in training models results in unpredictable outcomes, impacting organizational decision-making.

- AI behavior that offends or displays bias can provoke public outrage, undermining a company’s market standing.

- Non-compliance with legal standards due to poor governance can lead to regulatory penalties.

Organizations must implement preventive measures throughout the AI lifecycle, from data collection to post-deployment monitoring.

Best Practices for Ensuring High-Quality AI Data

To address common

issues related to substandard data quality, organizations should follow these

best practices:

To address common

issues related to substandard data quality, organizations should follow these

best practices:

1. Establish Clear Standards

Set project-based guidelines to determine high-quality data levels by defining accuracy targets and representativeness parameters.

2. Automate Quality Checks

Enable automated detection mechanisms and validation scripts to identify errors without human intervention.

3. Invest in Diversity

Train data models with datasets from diverse population groups and real-life situations to reduce biases and enhance universal applicability.

4. Implement Governance Policies

Establish standardized processes that ensure adherence to GDPR and HIPAA standards through structured frameworks.

5. Monitor Continuously

Include regular performance evaluations and user feedback in post-deployment assessments to adjust system inputs based on analysis results.

6. Leverage Synthetic Data Responsibly

Use synthetic data cautiously to enhance training datasets, validating new data points against real-world observations before deployment.

Conclusion

Data quality is crucial for developing successful AI systems. Companies in sectors like healthcare and finance must prioritize data quality to ensure technical achievement, ethical behavior, and sustainable outcomes. High data quality is essential for organizations aiming to create dependable AI systems with responsible innovation.

zfn9

zfn9