Artificial intelligence (AI) has become an integral part of modern technology, powering a wide range of applications—from voice assistants and chatbots to fraud detection systems and self-driving cars. While these applications appear intelligent, their success hinges on effective development, testing, and maintenance. A crucial practice supporting this process is continuous testing.

Continuous testing ensures AI applications function reliably over time by executing automated tests at every development stage. This approach helps developers identify and resolve issues early, preventing unexpected failures and maintaining smooth operation of AI systems. For businesses utilizing AI in critical systems, continuous testing is indispensable.

Understanding Continuous Testing in AI Application Development

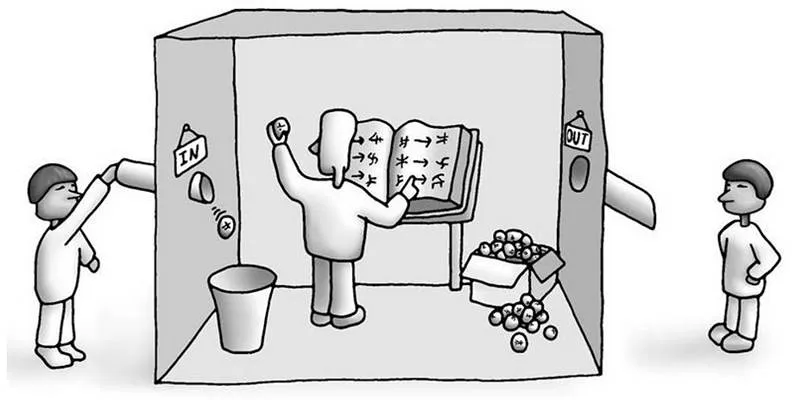

Continuous testing involves repeated and automated testing throughout an application’s development. Rather than waiting until the final stage to conduct tests, developers test the AI model, data pipeline, and code with every change.

This proactive method allows teams to uncover bugs, data issues, or performance drops much earlier. Given that AI applications rely on models trained on large datasets to make predictions, even minor data changes can significantly impact results. Hence, regular testing is crucial.

Key Characteristics of Continuous Testing

- Automatic test execution with each update

- Real-time result analysis

- Rapid feedback shared with the team

- Continuous monitoring post-deployment

Frequent, automated testing reduces risk and improves the reliability of AI-driven systems.

The Importance of Continuous Testing for AI Applications

AI applications differ from traditional software: they learn from data and make probabilistic decisions, which makes them flexible but harder to control and verify. Continuous testing is crucial for several reasons:

Detecting Data Drift

Data drift occurs when incoming data diverges from the training data, potentially leading to poor decisions by the model. Continuous testing identifies these changes and alerts developers to retrain the model as needed.
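As a rough illustration of how such a drift check might look, the sketch below computes the Population Stability Index (PSI) for one numeric feature. PSI is only one of several possible drift measures, and the function name, the bin count, and the 0.2 alert threshold (a common rule of thumb) are assumptions made for this example.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a training sample (expected) and a live sample (actual)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the training range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((b - a) * math.log(b / a) for a, b in zip(p, q))


# Illustrative data: the live feature is shifted upward relative to training.
training = [x / 10 for x in range(100)]
live = [x / 10 + 5 for x in range(100)]

psi = population_stability_index(training, live)
if psi > 0.2:  # assumed threshold, tune per feature
    print(f"Drift suspected (PSI={psi:.2f}); consider retraining")
```

In a pipeline, a check like this would typically run per feature on a schedule, with the threshold tuned to how sensitive the model is to that feature.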

Maintaining Model Performance

Regular checks are necessary to ensure AI models maintain their performance standards. Over time, metrics like accuracy, precision, or recall may degrade. Continuous testing helps verify that models continue making accurate predictions.
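A minimal sketch of such a check is shown below: it computes accuracy, precision, and recall for a binary classifier and reports any metric that falls below a required minimum. The function names and the minimum values are hypothetical, chosen only for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, and recall for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def failing_metrics(metrics, minimums):
    """Return the metrics that fall below their required minimum."""
    return {
        name: value
        for name, value in metrics.items()
        if value < minimums.get(name, 0.0)
    }


# Illustrative labels and predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = classification_metrics(y_true, y_pred)
regressions = failing_metrics(metrics, {"accuracy": 0.8, "recall": 0.7})
print(metrics, regressions)
```

Running the same check on a fixed evaluation set after every change makes a gradual decline visible before it reaches users.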

Ensuring Smooth Integration

AI systems are often integrated into larger applications. Continuous testing ensures the AI component seamlessly interacts with other elements, such as user interfaces, databases, and APIs.

Reducing Time to Market

Automated and ongoing testing accelerates development by identifying and addressing issues early, thus speeding up the release of new features or updates.

Implementing Continuous Testing in AI Projects

Integrating continuous testing into AI development requires strategic planning. A comprehensive strategy should encompass not only software code but also data and the model itself.

Step 1: Establish an Automated Testing Pipeline

An automated pipeline runs tests whenever the code, data, or model changes. It should cover:

- Code validation

- Model performance assessments

- Data quality checks

Tools like Jenkins, GitHub Actions, and GitLab CI/CD facilitate automation.
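Whichever CI tool is used, the pipeline usually ends up invoking a script that runs the checks and signals failure through its exit code. The sketch below shows one possible shape for such a script; the check names mirror the list above, and the lambda bodies are placeholders rather than real tests.

```python
def run_checks(checks):
    """Run every named check and return the names of those that failed."""
    failures = []
    for name, check in checks.items():
        try:
            passed = bool(check())
        except Exception as exc:  # a crashing check counts as a failure
            print(f"{name}: error ({exc})")
            passed = False
        else:
            print(f"{name}: {'ok' if passed else 'FAILED'}")
        if not passed:
            failures.append(name)
    return failures


def exit_code(failures):
    """Map failures to a process exit code; non-zero fails the CI job."""
    return 1 if failures else 0


# Placeholder checks. Real ones would call the unit test suite, the model
# evaluation, and the data validation for the project.
example_checks = {
    "code_validation": lambda: True,
    "model_performance": lambda: True,
    "data_quality": lambda: False,
}

failed = run_checks(example_checks)
print("failed checks:", failed, "exit code:", exit_code(failed))
```

A CI job would typically finish with `sys.exit(exit_code(failed))` so that a failed check blocks the merge or deployment.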

Step 2: Validate Input Data

AI relies on high-quality data. Poor data quality hampers model performance, making data validation a crucial testing component.

Validation should include checks for:

- Missing values

- Duplicate entries

- Inconsistent formats

- Outliers and unexpected patterns
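The four checks above can be sketched in plain Python as follows. This is an illustration under stated assumptions: the field names, the ISO date format, and the 1.5 × IQR outlier rule are choices made for the example, and a real project might use a dedicated validation library instead.

```python
import re
import statistics

ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")  # assumed expected date format


def validate_rows(rows, numeric_field, date_field):
    """Return the row indices that fail each data quality check."""
    report = {"missing": [], "duplicates": [], "bad_format": [], "outliers": []}
    seen = set()
    for i, row in enumerate(rows):
        # Missing values: None or empty string in any field.
        if any(value is None or value == "" for value in row.values()):
            report["missing"].append(i)
        # Duplicates: an identical row was already seen.
        key = tuple(sorted(row.items()))
        if key in seen:
            report["duplicates"].append(i)
        seen.add(key)
        # Inconsistent formats: the date does not match the expected pattern.
        date = row.get(date_field)
        if isinstance(date, str) and date and not ISO_DATE.fullmatch(date):
            report["bad_format"].append(i)

    # Outliers: values beyond 1.5 * IQR from the quartiles.
    numeric = [
        (i, row[numeric_field])
        for i, row in enumerate(rows)
        if isinstance(row.get(numeric_field), (int, float))
    ]
    if len(numeric) >= 4:
        q1, _, q3 = statistics.quantiles([v for _, v in numeric], n=4)
        spread = 1.5 * (q3 - q1)
        report["outliers"] = [
            i for i, v in numeric if v < q1 - spread or v > q3 + spread
        ]
    return report


# Illustrative records with one problem of each kind.
rows = [
    {"id": "a", "amount": 10.0, "date": "2024-01-01"},
    {"id": "b", "amount": 12.0, "date": "2024-01-02"},
    {"id": "b", "amount": 12.0, "date": "2024-01-02"},   # duplicate
    {"id": "c", "amount": None, "date": "2024-01-03"},   # missing value
    {"id": "d", "amount": 11.0, "date": "03/01/2024"},   # wrong date format
    {"id": "e", "amount": 500.0, "date": "2024-01-05"},  # outlier
    {"id": "f", "amount": 13.0, "date": "2024-01-06"},
]
report = validate_rows(rows, numeric_field="amount", date_field="date")
print(report)
```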

Step 3: Test the Model Itself

AI models should be tested with diverse inputs, including edge cases, to confirm they behave correctly.

Useful test cases may include:

- Testing with real-world scenarios

- Comparing predictions with ground truth

- Checking for bias in predictions
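One simple way to combine the last two test cases is to compare predictions with ground truth separately for each group and look at the gap. The sketch below does this with accuracy; the group labels and the choice of accuracy as the fairness signal are assumptions for illustration, and other metrics may suit a given application better.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each group label."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        hits[group] += int(truth == pred)
    return {group: hits[group] / totals[group] for group in totals}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    return max(per_group.values()) - min(per_group.values())


# Illustrative data: the model is noticeably worse on group "b".
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

per_group = accuracy_by_group(y_true, y_pred, groups)
gap = max_accuracy_gap(per_group)
print(per_group, gap)
```

A test could then fail the build when the gap exceeds a limit agreed on by the team.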

Step 4: Monitor Post-Deployment

Continuous testing doesn’t cease after AI model deployment. Real-time monitoring is essential for detecting quality drops promptly.

Monitoring can include:

- Alerts for low accuracy

- Logs for failed predictions

- User feedback analysis

This ongoing feedback loop helps enhance the system over time.
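A low-accuracy alert can be sketched as a rolling window over recent predictions whose true outcome is known. The class name, window size, and threshold below are hypothetical; in production the alert would go to a monitoring system rather than being returned as a boolean.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent predictions."""

    def __init__(self, window=100, threshold=0.9, min_samples=20):
        self.outcomes = deque(maxlen=window)  # oldest results drop off
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, prediction, actual):
        """Store one outcome and report whether an alert should fire."""
        self.outcomes.append(prediction == actual)
        return self.should_alert()

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self):
        # Wait for enough samples so a single early error does not alert.
        if len(self.outcomes) < self.min_samples:
            return False
        return self.accuracy() < self.threshold


monitor = AccuracyMonitor(window=5, threshold=0.8, min_samples=5)
for prediction, actual in [(1, 1), (0, 0), (1, 1), (1, 1), (0, 0)]:
    monitor.record(prediction, actual)
print(monitor.accuracy(), monitor.should_alert())
```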

Best Practices for Continuous Testing in AI

While continuous testing offers significant benefits, it must be executed correctly to be effective. Adhering to best practices aids in avoiding common pitfalls.

Utilize Version Control

Track different versions of code, data, and models. Version control enables quick rollbacks if new updates cause issues.
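Code is usually versioned with a tool such as Git, but data and model artifacts also need an identity that can be tied to a code version. One possible approach, sketched here as an assumption rather than a prescribed method, is to record a content hash for each artifact in a small manifest. The function names and manifest fields are hypothetical.

```python
import hashlib
import json


def fingerprint(payload: bytes) -> str:
    """SHA-256 hex digest identifying the exact contents of an artifact."""
    return hashlib.sha256(payload).hexdigest()


def build_manifest(code_version, data_bytes, model_bytes):
    """Tie one code version to the exact data and model it was tested with."""
    return {
        "code_version": code_version,
        "data_sha256": fingerprint(data_bytes),
        "model_sha256": fingerprint(model_bytes),
    }


# Illustrative byte strings standing in for a dataset file and a model file.
manifest = build_manifest("v1.4.0", b"id,amount\n1,10\n", b"model-weights")
print(json.dumps(manifest, indent=2))
```

If a new release misbehaves, comparing manifests shows whether the code, the data, or the model changed, which narrows down what to roll back.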

Create Reusable Test Cases

Design test cases that can be reused in future projects, saving time and ensuring consistency throughout the development process.

Promote Collaboration

Engage developers, testers, data scientists, and product managers in the testing process. A shared understanding leads to improved outcomes.

Explain Model Behavior

Implement explainable AI techniques to clarify model decisions. This transparency builds trust and aids in error identification.
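The article does not name a specific technique, so the sketch below uses permutation importance as one model-agnostic example: shuffle one feature at a time and measure how much accuracy drops. The function name, the toy model, and the data are assumptions for illustration only.

```python
import random


def permutation_importance(predict, rows, labels, n_features, seed=0):
    """Accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)

    def accuracy(data):
        hits = sum(predict(row) == label for row, label in zip(data, labels))
        return hits / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(n_features):
        column = [row[j] for row in rows]
        rng.shuffle(column)
        # Rebuild the rows with only feature j replaced by shuffled values.
        shuffled = [
            list(row[:j]) + [value] + list(row[j + 1:])
            for row, value in zip(rows, column)
        ]
        importances.append(baseline - accuracy(shuffled))
    return importances


# Toy model that only looks at feature 0; feature 1 is ignored.
def toy_predict(row):
    return row[0]


rows = [[i % 2, (i * 7) % 5] for i in range(20)]
labels = [row[0] for row in rows]

importances = permutation_importance(toy_predict, rows, labels, n_features=2)
print(importances)
```

A feature the model ignores scores zero, while a feature it depends on shows a clear drop, which helps spot models that rely on unexpected inputs.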

Tools Supporting Continuous Testing in AI

Numerous tools are available to streamline continuous testing for AI teams, each addressing different aspects of the testing process:

- MLflow – Tracks machine learning experiments, models, and metrics

- TensorFlow Extended (TFX) – Builds end-to-end ML pipelines, including data validation and model analysis

- Great Expectations – Validates and documents data quality

- Seldon Core – Deploys models on Kubernetes with support for canary rollouts, A/B tests, and monitoring

- Jenkins – Automates the testing and deployment process

Leveraging the right tools enhances efficiency and accuracy in AI projects.

Conclusion

As AI expands into critical sectors like healthcare, finance, and transportation, the reliability of these systems becomes even more vital. Continuous testing forms the foundation for developing trustworthy, high-quality AI applications. By testing data, models, and code at every stage, and continuing after deployment, development teams can create AI systems that are not only intelligent but also dependable. In an era where AI is ever-present, continuous testing is no longer optional; it is essential.
