The Perceptron might not sound exciting at first, but it is one of the earliest models in the history of artificial intelligence. Imagine a machine that learns from experience, much like we do. That was the radical idea behind the Perceptron, introduced by Frank Rosenblatt in the late 1950s. It was one of the first steps toward teaching computers to recognize patterns, make decisions, and even “think” in a primitive way.

Even though it’s a basic model, it set the stage for today’s neural networks, which drive everything from voice recognition to autonomous vehicles. Learning about the Perceptron is not only a history lesson but also a way to understand the foundations of modern AI.

How Does the Perceptron Work?

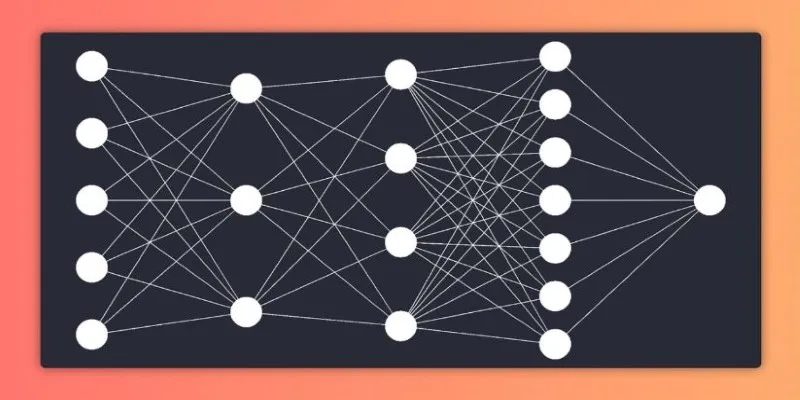

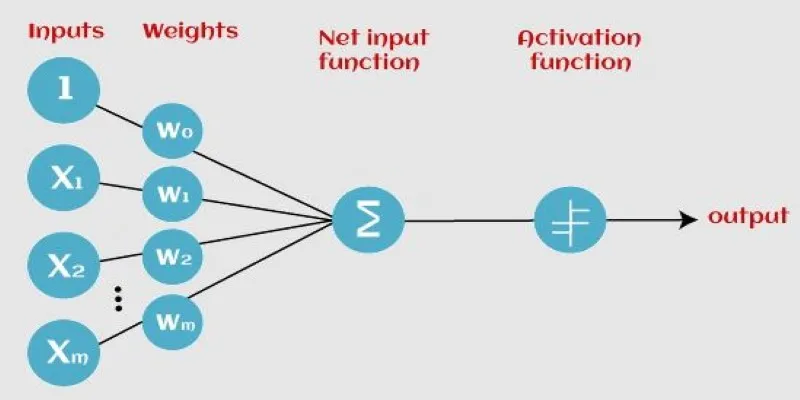

The Perceptron is a mathematical function that takes a number of inputs, combines them in a weighted calculation, and generates an output. The principle is simple: it receives numeric input values, multiplies each one by a weight (which defines how important that input is), and sums the results. If the sum exceeds a predetermined threshold, the Perceptron fires and outputs 1; otherwise it outputs 0.
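The weighted-sum-and-threshold step described above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `perceptron_output` and the example weights and threshold are assumptions chosen for the demonstration.

```python
def perceptron_output(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    # Multiply each input by its weight and add up the results.
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    # The Perceptron "fires" only when the sum is above the threshold.
    return 1 if weighted_sum > threshold else 0


# Illustrative values only: the first input is weighted more heavily.
example_weights = [0.6, 0.4]
example_threshold = 0.5

fires = perceptron_output([1.0, 0.0], example_weights, example_threshold)
stays_off = perceptron_output([0.0, 1.0], example_weights, example_threshold)
print(fires, stays_off)
```

With these made-up numbers, the first input alone is enough to push the sum past the threshold, while the second input alone is not.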

A standard Perceptron has three fundamental elements: inputs, weights, and an activation function. Inputs are the numerical values entering the system, each describing one feature of the problem. Every input has a weight, which is adjusted during training to improve accuracy. The activation function is a simple rule that decides whether the output is 0 or 1, depending on whether the weighted sum exceeds the threshold.

Perceptron training modifies the weights through a process called the Perceptron Learning Rule, which lets the model learn patterns from a labeled dataset. When the Perceptron predicts incorrectly, it compares its output with the correct label and shifts each weight in the direction that reduces the error. Over several passes through the data, these adjustments improve the model’s accuracy. However, the Perceptron can only learn linearly separable problems, meaning those where a straight line (or, with more inputs, a flat plane) divides the two classes. It struggles with more complex tasks that require deeper models.
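As a rough sketch of how such a learning rule can look in practice, the Python below trains a Perceptron on the logical AND function, which is linearly separable. Several details are assumptions made for illustration and do not come from the text above: the helper names `predict` and `train_perceptron`, the learning rate of 0.1, the 20 training passes, and the use of a bias term (a learned offset that plays the role of a negative threshold).

```python
def predict(weights, bias, inputs):
    """Output 1 when the bias plus the weighted sum is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train_perceptron(samples, learning_rate=0.1, epochs=20):
    """Apply the update w <- w + rate * (label - prediction) * x to each sample."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, label in samples:
            # error is 0 for a correct prediction, +1 or -1 for a wrong one.
            error = label - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Logical AND: the output is 1 only when both inputs are 1.
AND_DATA = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

and_weights, and_bias = train_perceptron(AND_DATA)
and_predictions = [predict(and_weights, and_bias, x) for x, _ in AND_DATA]
print(and_predictions)
```

Weights change only when a prediction is wrong, so once every sample is classified correctly the remaining passes leave the model untouched.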

Limitations and Evolution into Neural Networks

Despite its significance in machine learning history, the Perceptron has notable limitations. One of its biggest shortcomings is its inability to solve problems involving non-linearly separable data. The classic example is the XOR problem, in which the two classes cannot be separated by a single straight line, so a single Perceptron cannot classify all four cases correctly. This limitation, analyzed by Marvin Minsky and Seymour Papert in their 1969 book Perceptrons, contributed to a slowdown in neural network research that lasted until multi-layer networks became practical to train.
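The XOR limitation is easy to observe with a small experiment. The sketch below assumes a simple error-driven update of the kind used in Perceptron training, with hypothetical helper names (`predict`, `train`) and arbitrary settings (learning rate 0.1, 1000 passes). No matter how long it runs, at least one of the four XOR cases stays misclassified.

```python
def predict(weights, bias, inputs):
    """Output 1 when the bias plus the weighted sum is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(samples, learning_rate=0.1, epochs=1000):
    """Nudge weights toward the correct label after every wrong prediction."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, label in samples:
            error = label - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# XOR: the output is 1 when exactly one of the two inputs is 1.
XOR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

xor_weights, xor_bias = train(XOR_DATA)
xor_correct = sum(predict(xor_weights, xor_bias, x) == y for x, y in XOR_DATA)
print(f"{xor_correct} of 4 XOR cases classified correctly")
```

More training passes do not help here, because no single line through the input plane puts (0, 1) and (1, 0) on one side and (0, 0) and (1, 1) on the other.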

The key breakthrough came with the development of multi-layer Perceptrons (MLPs), which expanded the basic structure by adding hidden layers between inputs and outputs. These additional layers enabled models to capture more complex patterns, allowing them to process data in ways the original Perceptron could not. The introduction of backpropagation, a technique for adjusting weights across multiple layers, further enhanced learning capabilities.
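To see why a hidden layer helps, consider a tiny two-layer network that computes XOR. In the sketch below the weights are picked by hand for illustration and are not learned through backpropagation. The function names and the specific weight and bias values are assumptions. One hidden unit behaves like OR, the other like NAND, and the output unit fires only when both hidden units fire.

```python
def step(total):
    """Threshold activation: 1 for a positive total, else 0."""
    return 1 if total > 0 else 0


def neuron(inputs, weights, bias):
    """A single Perceptron-style unit: weighted sum plus bias, then step."""
    return step(bias + sum(w * x for w, x in zip(weights, inputs)))


def xor_network(x1, x2):
    """Two hidden units feed one output unit; all weights are hand-picked."""
    hidden_or = neuron([x1, x2], [1, 1], -0.5)     # fires if either input is 1
    hidden_nand = neuron([x1, x2], [-1, -1], 1.5)  # fires unless both are 1
    # The output unit acts as AND over the two hidden units.
    return neuron([hidden_or, hidden_nand], [1, 1], -1.5)


xor_outputs = [xor_network(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(xor_outputs)
```

Each unit on its own still draws a single straight line. It is the combination of two lines in the hidden layer that carves out the region a lone Perceptron cannot represent.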

Today, neural networks have evolved into deep learning models with dozens or even hundreds of layers, handling complex tasks such as natural language processing, autonomous driving, and medical diagnostics. While the original Perceptron was limited to simple binary classification, its fundamental principles remain embedded in today’s most sophisticated AI models. The transition from single-layer Perceptrons to deep networks shows how far machine learning has advanced, and how even the simplest ideas can evolve into groundbreaking innovations.

The Perceptron’s Role in Shaping Modern AI

The influence of the Perceptron extends far beyond its original design. It introduced key ideas that paved the way for artificial intelligence as we know it today. The ability to process input data, adjust weights, and make decisions based on learned patterns is at the core of all neural networks. Even though the Perceptron itself is limited to simple tasks, its concepts are embedded in modern AI systems that drive everything from voice assistants to autonomous vehicles.

Deep learning, which powers advanced AI models, relies on principles derived from the Perceptron. Techniques like convolutional neural networks (CNNs) and recurrent neural networks (RNNs) build upon the idea of weighted connections between neurons. These advancements enable AI to perform complex tasks such as image recognition, speech translation, and even creative endeavors like generating art. While the Perceptron might seem outdated, it remains a historical milestone that continues to shape how machines learn and evolve.

Applications of the Perceptron in Artificial Intelligence

Though the Perceptron is an early model, it still plays a role in understanding and developing AI solutions. In its basic form, it is useful for binary classification problems, such as distinguishing between spam and non-spam emails or identifying whether a patient has a medical condition based on test results. These applications are typical of supervised learning, where labeled data helps the model improve over time.

Beyond simple classification, the Perceptron’s principles serve as the foundation for more complex machine learning techniques. The concept of adjusting weights and learning from experience is at the heart of modern artificial intelligence. Neural networks, deep learning architectures, and other AI-driven solutions all trace their origins back to the Perceptron.

The Perceptron’s ideas have also found their way into robotics, automation, and predictive analytics. Early AI-based control systems used Perceptron-like structures to make decisions based on sensor data, and similar models have been applied to stock market analysis, where they were used to test algorithmic trading strategies by predicting market movements from historical data. While today’s systems are far more advanced, they still rely on the fundamental learning mechanisms introduced by the Perceptron.

Conclusion

The Perceptron is a foundational concept in machine learning, marking the early stages of artificial intelligence. While its simplicity limits its modern applications, its core principles (weight adjustments, learning from data, and binary classification) remain vital in advanced AI models. The shift from single-layer Perceptrons to deep learning networks illustrates how machine learning has evolved. Though today’s AI systems are far more complex, understanding the Perceptron provides valuable insight into how intelligent algorithms function. It remains a stepping stone for those exploring AI, bridging the gap between basic mathematical models and the sophisticated neural networks that power modern technology.
