When people hear the phrase “train a language model,” they often picture something overly complex, an academic rabbit hole lined with math equations and infinite compute. But let’s be honest: at its core, this process is about teaching a machine to predict missing text, usually the next token in a sequence (or, for BERT-style models, a masked one). Of course, there’s a bit more to it than that, but the bones are simple. You feed it text, break that text into smaller pieces called tokens, and let it learn the patterns between them. The real challenge lies in doing this efficiently and with purpose. So, let’s roll up our sleeves and walk through how to train a language model from scratch using Hugging Face’s Transformers and Tokenizers libraries.

How to Train a New Language Model from Scratch Using Transformers and Tokenizers

Step 1: Build a Clean, Meaningful Dataset

Training any model without solid data is like teaching someone to write poetry using broken fragments of a manual. The quality of the input determines what the model will learn, so this step matters more than most realize.

Start by gathering your corpus. This could be anything: open-source books, web text, news archives, or domain-specific documents. The more consistent your dataset is, the more cohesive the final model will feel. For general-purpose models, variety is key. But for niche use cases—like legal or medical fields—focus on tight relevance.

Once collected, scrub it clean. Strip out non-text elements, HTML tags, repeated headers, and anything that doesn’t help your model learn language patterns. Keep the formatting loose but coherent—punctuation, line breaks, and spacing give the tokenizer more to work with later.
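A minimal cleaning pass can be sketched with nothing but the Python standard library. The function names and the 50% threshold for treating a line as a repeated header are illustrative assumptions, not a standard recipe. Real corpora usually need format-specific extraction on top of this.

```python
import html
import re
from collections import Counter

TAG_RE = re.compile(r"<[^>]+>")  # anything shaped like an HTML/XML tag
SPACE_RE = re.compile(r"\s+")    # runs of whitespace within a single line


def clean_document(raw: str) -> str:
    """Strip tags and entities, collapse spacing, and drop blank lines."""
    # Replace tags with a space so words on either side of a tag don't fuse.
    text = html.unescape(TAG_RE.sub(" ", raw))  # e.g. "&amp;" -> "&"
    lines = (SPACE_RE.sub(" ", line).strip() for line in text.splitlines())
    return "\n".join(line for line in lines if line)  # keep line breaks, drop empty lines


def drop_repeated_lines(docs: list[str], max_share: float = 0.5) -> list[str]:
    """Remove lines (e.g. running headers) found in more than max_share of all documents."""
    counts = Counter()
    for doc in docs:
        counts.update(set(doc.splitlines()))  # count each distinct line once per document
    limit = max_share * len(docs)
    return [
        "\n".join(line for line in doc.splitlines() if counts[line] <= limit)
        for doc in docs
    ]


if __name__ == "__main__":
    pages = [
        "<h1>ACME Quarterly</h1>\n<p>Fish &amp; chips</p>",
        "<h1>ACME Quarterly</h1>\n<p>Sales   rose.</p>",
    ]
    cleaned = drop_repeated_lines([clean_document(p) for p in pages])
    print(cleaned)  # ['Fish & chips', 'Sales rose.']
```

Replacing tags with a space can occasionally split a word that was styled mid-word (for example `<b>H</b>ello`). That is a deliberate trade-off against gluing words together across block-level tags.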

Decisions about token-level quirks (like case sensitivity or digit normalization) need to be made at this stage. Once your model starts training, it’s hard to reverse those calls without starting over.
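One way to keep those calls explicit is to put them in a single normalization function. The choices are fixed as flags and applied identically to the tokenizer corpus, the training data, and inference input. This is a sketch. The NFKC step and the digit-to-zero mapping are illustrative conventions, not the only sensible ones.

```python
import re
import unicodedata

DIGIT_RE = re.compile(r"\d")


def normalize(text: str, lowercase: bool = False, mask_digits: bool = False) -> str:
    """Apply the corpus-wide normalization decisions in one place."""
    # NFKC folds compatibility characters: full-width letters, ligatures such as U+FB01, etc.
    text = unicodedata.normalize("NFKC", text)
    if lowercase:
        text = text.lower()
    if mask_digits:
        text = DIGIT_RE.sub("0", text)  # "2024" -> "0000": all numbers share one shape
    return text


# Decide once, record the choice, and never change it mid-project.
NORMALIZATION = {"lowercase": True, "mask_digits": False}

if __name__ == "__main__":
    sample = "\uff26ile \ufb01le 2024"  # full-width "F" and the "fi" ligature
    print(normalize(sample, **NORMALIZATION))  # file file 2024
```

If you would rather bake these choices into the tokenizer itself, the tokenizers library provides normalizers such as `NFKC` and `Lowercase`, which can be chained with `normalizers.Sequence`.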

Step 2: Train the Tokenizer

Now comes the part people tend to overlook—training your tokenizer. This isn’t optional. Transformers don’t read words or letters; they read tokens. And how those tokens are split can shift how well your model understands language.

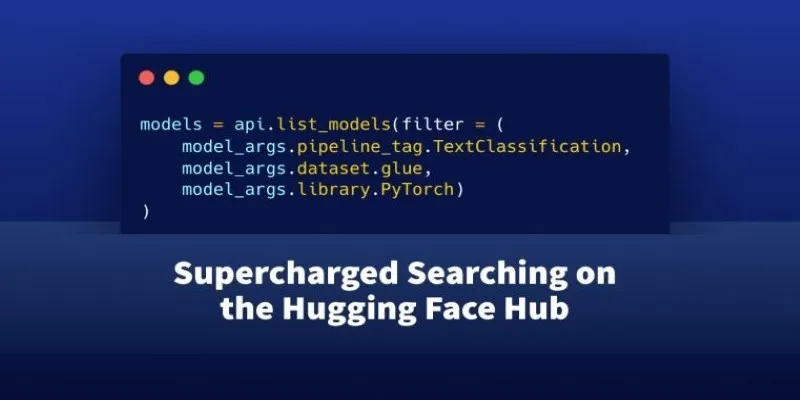

You can train one with Hugging Face’s tokenizers library. Byte-Pair Encoding (BPE) is a solid place to start, especially for models dealing with Western languages. Its byte-level variant, used by GPT-2 and RoBERTa, can encode any input text without falling back to an unknown token. WordPiece and Unigram are also valid choices, but let’s keep it focused.
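To make “learning token splits from frequency” concrete, here is a toy, character-level version of the BPE merge loop. It is a teaching sketch with hypothetical function names. It skips end-of-word markers, byte fallback, and the speed tricks that the real tokenizers library uses.

```python
from collections import Counter


def merge_pair(symbols: tuple, pair: tuple) -> tuple:
    """Fuse every non-overlapping, left-to-right occurrence of `pair` into one symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(words: list[str], num_merges: int) -> list[tuple[str, str]]:
    """Learn up to num_merges merge rules from raw words (toy, character-level)."""
    vocab = Counter(tuple(w) for w in words)  # each word as a tuple of symbols -> count
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # most frequent pair; ties go to the first seen
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges


def apply_bpe(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Split a word, even an unseen one, by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


if __name__ == "__main__":
    words = ["low"] * 5 + ["lower"] * 2 + ["newest"] * 6 + ["widest"] * 3
    merges = learn_bpe(words, num_merges=3)
    print(merges)                       # [('e', 's'), ('es', 't'), ('l', 'o')]
    print(apply_bpe("lowest", merges))  # ['lo', 'w', 'est']
```

“lowest” never appears in the toy corpus, yet it still splits into pieces the tokenizer has learned. This is why subword tokenizers rarely hit truly unknown words.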

Here’s what you do:

- Feed the dataset into the tokenizer trainer. Specify how many tokens you want in your vocabulary (32,000 to 50,000 is typical).

- Let it learn token splits based on frequency. BPE starts from individual characters (or bytes) and repeatedly merges the most frequent adjacent pair into a new token, so common words and word fragments end up as single tokens.

- Save the trained tokenizer. The saved files (a vocabulary plus merge rules, or a single tokenizer.json) will later guide how your model processes text during training and inference.
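A sketch of those three steps with the tokenizers library, training a byte-level BPE tokenizer (GPT-2 style) from an in-memory list of strings. `train_bpe_tokenizer` is a hypothetical helper name. The RoBERTa-like special-token list and `min_frequency=2` are assumptions to adapt to your project.

```python
from tokenizers import Tokenizer, decoders, models, pre_tokenizers, trainers


def train_bpe_tokenizer(texts, vocab_size=32_000, save_path="tokenizer.json"):
    """Train a byte-level BPE tokenizer on an iterable of strings and save it as one JSON file."""
    tokenizer = Tokenizer(models.BPE())
    # Byte-level pre-tokenization: every byte has a symbol, so no text is ever "unknown".
    tokenizer.pre_tokenizer = pre_tokenizers.ByteLevel(add_prefix_space=False)
    tokenizer.decoder = decoders.ByteLevel()  # maps byte symbols back to readable text
    trainer = trainers.BpeTrainer(
        vocab_size=vocab_size,  # target total vocabulary size
        min_frequency=2,        # only merge pairs seen at least twice
        special_tokens=["<s>", "<pad>", "</s>", "<unk>", "<mask>"],  # receive IDs 0-4
        initial_alphabet=pre_tokenizers.ByteLevel.alphabet(),  # all 256 byte symbols
        show_progress=False,
    )
    tokenizer.train_from_iterator(texts, trainer=trainer)
    tokenizer.save(save_path)  # vocabulary, merges, and pipeline settings in one file
    return tokenizer


if __name__ == "__main__":
    corpus = ["the lower the better", "the newest and the widest"] * 50
    tok = train_bpe_tokenizer(corpus, vocab_size=300, save_path="tokenizer.json")
    print(tok.encode("the widest").tokens)
```

To use the result with the transformers library, one option is to wrap the saved file with `PreTrainedTokenizerFast(tokenizer_file="tokenizer.json", pad_token="<pad>", ...)`, passing whichever special tokens you defined.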

What you’re building here is essentially a new alphabet. And like any alphabet, it shapes what can be written well and what can’t. If your tokenizer struggles with hyphenated words or uncommon compound nouns, your model will too.

Step 3: Define the Transformer Architecture

Once the tokenizer is ready, you’re set to build the skeleton of the model itself. Transformers follow a fairly standard recipe—think of them as customizable templates more than fixed structures. Still, decisions made here lock in the behavior of your model, so don’t rush.

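Use Hugging Face’s transformers library. Pick a configuration class depending on your goals: BertConfig for masked language modeling, or GPT2Config for next-token generation. Then build a freshly initialized model from it, either with the matching model class or with an Auto class’s from_config method. Here’s what you’ll need to choose: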

- Number of layers (depth): Start with 6–12 for small to medium models.

- Number of attention heads: The hidden size must be divisible by this number, because each head works on an equal slice of it (768 hidden units across 12 heads gives 64 dimensions per head).

- Hidden size: Common values range from 256 to 1024.

- Feed-forward size: Typically 4x the hidden size.

- Dropout rate: Useful during training to avoid overfitting. Keep it modest—around 0.1.
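Here is how those choices might map onto GPT2Config for a small causal model. The numbers are placeholders picked from the ranges above, not tuned values. `vocab_size` must equal your tokenizer’s vocabulary size. The special-token IDs 0, 1, 2 are hypothetical and assume a tokenizer whose first special tokens are `<s>`, `<pad>`, `</s>`, so substitute your own. For a BERT-style model, BertConfig exposes the same knobs as `num_hidden_layers`, `num_attention_heads`, `hidden_size`, `intermediate_size`, and `hidden_dropout_prob`.

```python
from transformers import GPT2Config, GPT2LMHeadModel

HIDDEN_SIZE = 512
NUM_HEADS = 8
# Each head gets HIDDEN_SIZE // NUM_HEADS = 64 dimensions, so this must divide evenly.
assert HIDDEN_SIZE % NUM_HEADS == 0

config = GPT2Config(
    vocab_size=32_000,        # must match the tokenizer's vocabulary size
    n_positions=512,          # maximum sequence length the model accepts
    n_embd=HIDDEN_SIZE,       # hidden size
    n_layer=6,                # depth
    n_head=NUM_HEADS,         # attention heads
    n_inner=4 * HIDDEN_SIZE,  # feed-forward size, 4x hidden
    resid_pdrop=0.1,          # dropout on residual connections
    embd_pdrop=0.1,           # dropout on embeddings
    attn_pdrop=0.1,           # dropout on attention weights
    bos_token_id=0,           # hypothetical IDs: take these from your tokenizer
    pad_token_id=1,
    eos_token_id=2,
)
model = GPT2LMHeadModel(config)  # random weights; nothing is pretrained
print(f"{model.num_parameters():,} parameters")
```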

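Also, make sure the model’s vocabulary size and special-token IDs (especially the padding token) match the tokenizer. These small misalignments cause bizarre training bugs. A token ID larger than the embedding table typically surfaces as an index error, or as an opaque device-side assertion on GPU, rather than as a clear message.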
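A cheap guard is to compare the two before training starts. `check_alignment` is a hypothetical helper, not part of transformers. With Hugging Face objects you might pass `len(tokenizer)`, `config.vocab_size`, a dict of IDs such as `tokenizer.pad_token_id`, and a dict of the matching IDs from the config.

```python
def check_alignment(
    tokenizer_vocab_size: int,
    model_vocab_size: int,
    tokenizer_ids: dict,
    config_ids: dict,
) -> list[str]:
    """Hypothetical pre-flight check: list every tokenizer/model mismatch (empty list = OK)."""
    problems = []
    if model_vocab_size < tokenizer_vocab_size:
        problems.append(
            f"model vocab ({model_vocab_size}) < tokenizer vocab ({tokenizer_vocab_size}): "
            "some token IDs have no embedding row"
        )
    for name, tok_id in tokenizer_ids.items():
        cfg_id = config_ids.get(name)  # None if the config never set this token
        if tok_id is None:
            problems.append(f"{name}: the tokenizer has no such token")
        elif cfg_id != tok_id:
            problems.append(f"{name}: config says {cfg_id}, tokenizer says {tok_id}")
    return problems


if __name__ == "__main__":
    # A config left at GPT-2's default EOS ID next to a custom 32k tokenizer.
    for issue in check_alignment(32_000, 32_000, {"pad": 1, "eos": 2}, {"eos": 50_256}):
        print(issue)
```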

At this point, your model is technically alive, but barely. It’s a stack of randomly initialized attention and feed-forward layers waiting for guidance. That guidance comes in the next step.

Step 4: Train the Model on Your Dataset

With everything else in place, you’re finally ready to train. This is the step where your Transformer stops being an empty container and starts becoming a model that understands language.

You’ll need a training loop. Hugging Face’s Trainer class is the go-to here: it wraps most of the boilerplate while still letting you inject control where needed.

Set up your training script like this:

- Load the dataset into a Dataset object. Hugging Face’s datasets library can read your text files and tokenize them in batches with Dataset.map.

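- Group the tokens. Concatenate the tokenized texts and cut them into uniform blocks, say 128 or 512 tokens each, no longer than the model’s maximum sequence length. Equal-length blocks keep batches full and avoid wasting compute on padding.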

- Define the training arguments. Choose your batch size, number of epochs (usually 3–10), evaluation steps, and whether you want to use mixed-precision (FP16).

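- Initialize the optimizer. AdamW is still the favorite for this kind of task, paired with a linear learning-rate schedule and warm-up steps. Trainer uses AdamW with a linear schedule by default, and the warm-up length is set with the warmup_steps training argument.

- Begin training. This part takes time. Even on good GPUs, don’t expect miracles in minutes.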

Monitor the loss, but don’t obsess over it every few steps. What you want is a steady decline in training loss, with evaluation loss falling alongside it. Sudden spikes are a red flag, usually pointing to faulty batching, poor learning rates, or bad tokenization.
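When learning rates are the suspect, it helps to know what the schedule actually does. The warm-up-then-linear-decay schedule is simple enough to write out. This sketch computes the same curve as transformers’ `get_linear_schedule_with_warmup`, which scales the peak rate by this factor. The function name and the example values for `peak_lr`, `warmup_steps`, and `total_steps` are illustrative.

```python
def linear_warmup_lr(step: int, peak_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Ramp linearly from 0 to peak_lr over warmup_steps, then decay linearly to 0 at total_steps."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)  # clamp so steps past the end stay at 0
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


if __name__ == "__main__":
    for step in (0, 50, 100, 550, 1000):
        print(step, linear_warmup_lr(step, peak_lr=5e-4, warmup_steps=100, total_steps=1000))
```

Warm-up keeps the first updates small while a randomly initialized model’s gradients are still erratic. Skipping it, or setting the peak rate too high, is a common suspect when loss spikes early in a run.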

Once training ends, save your model and tokenizer together. You now have a fully functional language model that can generate, classify, or analyze text depending on how you fine-tune it later.
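A minimal sketch of saving both into one directory and reloading them as a quick completeness check. It assumes a causal model; a BERT-style one would load with `AutoModelForMaskedLM` instead. `save_together` is a hypothetical helper name. If you trained with Trainer, `trainer.save_model(output_dir)` writes the model in the same way.

```python
from transformers import AutoModelForCausalLM, AutoTokenizer


def save_together(model, tokenizer, output_dir):
    """Write model and tokenizer files into one directory, then reload both from it."""
    model.save_pretrained(output_dir)      # config.json plus the weights
    tokenizer.save_pretrained(output_dir)  # tokenizer files such as tokenizer.json
    # If either half is missing or inconsistent, reloading is where it usually shows up.
    reloaded_model = AutoModelForCausalLM.from_pretrained(output_dir)
    reloaded_tokenizer = AutoTokenizer.from_pretrained(output_dir)
    return reloaded_model, reloaded_tokenizer
```

Keeping both in one directory means a single path is enough to restore the pair later for fine-tuning or inference.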

Wrapping Up

Training a new language model from scratch isn’t something you jump into casually, but it also isn’t wizardry. If you’ve got a clear dataset, a well-configured tokenizer, and a reasonable Transformer design, then what follows is mostly iteration. Each step builds directly on the last—miss one, and the whole thing wobbles.

More than anything, what defines a good model is the quiet, invisible work done before a single training epoch begins. Good token splits. Clean data. Sharp architectural choices. That’s where the real performance comes from. And the rest? That’s just letting it learn.

For further reading, explore the Hugging Face documentation to deepen your understanding of these tools.
