In recent years, BERT has emerged as a cornerstone model for natural language processing (NLP) tasks, including sentiment analysis and search optimization. Its capabilities are impressive, but deploying BERT at scale often brings challenges, particularly around latency and inference cost. In production environments where low response times and high throughput are crucial, performance bottlenecks can occur. The Hugging Face Transformers library makes model deployment more accessible, yet even with these tools, performance can hit a wall. This is where AWS Inferentia steps in: a specialized hardware accelerator designed to speed up inference at a lower cost.

Understanding the Need for Faster BERT Inference

BERT models are powerful but not exactly lightweight. Larger versions, such as BERT-large or RoBERTa, contain hundreds of millions of parameters and deep layers that deliver strong results—but at a cost. Even streamlined versions like DistilBERT can experience slow inference times, especially when run on standard CPUs or general-purpose GPUs. The challenge extends beyond a single prediction; it involves maintaining consistent performance when processing thousands of predictions per second without system lag.

This lag can become a real issue in live environments. Consider a support chatbot that must understand and respond instantly or a recommendation engine delivering results as someone types. Waiting a few extra milliseconds might not seem significant, but at scale, these delays accumulate quickly.
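
To make "a few extra milliseconds" concrete, it helps to measure latency percentiles rather than a single average. The following is a minimal sketch of such a measurement using only the Python standard library. The `benchmark` helper and the `fake_predict` stand-in are hypothetical names introduced for illustration; in practice you would pass in your own model call.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(predict: Callable[[str], object], text: str,
              warmup: int = 5, runs: int = 100) -> Dict[str, float]:
    """Time repeated calls to `predict` and summarize latency in milliseconds."""
    # Warm-up calls are excluded from timing so one-off setup cost is ignored.
    for _ in range(warmup):
        predict(text)

    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        predict(text)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)

    latencies_ms.sort()
    # Nearest-rank style index for the 99th percentile, clamped to the list.
    p99_index = min(len(latencies_ms) - 1, int(0.99 * len(latencies_ms)))
    mean_ms = statistics.fmean(latencies_ms)
    return {
        "p50_ms": statistics.median(latencies_ms),
        "p99_ms": latencies_ms[p99_index],
        "mean_ms": mean_ms,
        # Throughput of one sequential worker implied by the mean latency.
        "throughput_per_s": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
    }


def fake_predict(text: str) -> int:
    """Hypothetical stand-in for a model call; swap in a real pipeline."""
    return len(text.split())


if __name__ == "__main__":
    print(benchmark(fake_predict, "the delivery arrived two days late"))
```

Tail latency (the p99 value) is usually the number that users notice, so it is worth tracking alongside the median when comparing hardware.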

While GPUs offer a solution, they are not always ideal. They are expensive to run continuously, and in many workloads, they remain idle more often than not. CPUs, meanwhile, lack the necessary power for heavy real-time inference tasks. Enter AWS Inferentia, designed specifically for deep learning inference and seamlessly compatible with Hugging Face Transformers. It provides the performance needed without the typical overhead of high-powered hardware.

AWS Inferentia: Purpose-Built for AI Inference

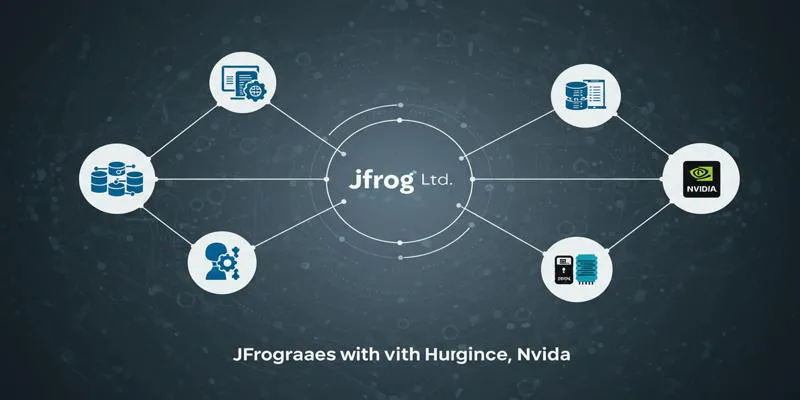

AWS Inferentia is a custom chip developed by AWS to lower costs and increase the speed of inference workloads. It supports popular frameworks, such as PyTorch and TensorFlow, through the AWS Neuron SDK. The SDK's compiler converts models into a form optimized for Inferentia’s architecture, and the Neuron runtime executes the compiled model on the chip, enabling more inferences per dollar with enhanced performance.

Unlike general-purpose CPUs or GPUs, Inferentia is tailored for deep learning tasks, providing high throughput and low latency, which suits BERT models well. This makes it a suitable option for businesses aiming to serve real-time language predictions without the overhead of running GPU clusters. One of its key strengths is scalability: Inferentia is available through Amazon EC2 Inf1 instances, which are priced lower than GPU-based alternatives while still offering excellent performance for inference.

Using Inferentia requires some initial setup, including converting your models to be compatible with the Neuron runtime. Fortunately, Hugging Face and AWS have collaborated to simplify this process through the Optimum library.

Hugging Face Transformers with Optimum and Neuron

The Hugging Face Optimum library bridges the gap between model training and hardware-optimized inference. It offers tools and APIs to convert standard Transformer models into formats supported by Neuron without needing deep expertise in hardware acceleration.

To start, you typically fine-tune a BERT model using standard Hugging Face pipelines. Once trained, Optimum allows you to export it into a Neuron-compatible format. This exported model can then be deployed on an EC2 Inf1 instance running the Neuron runtime. The process is streamlined, allowing developers to focus more on the model and less on the infrastructure.

Here’s a high-level view of the workflow:

- Load your BERT model using Hugging Face Transformers.

- Convert the model using Optimum’s Neuron export tools.

- Deploy it to an EC2 Inf1 instance configured with the Neuron runtime.

- Run inference with latency and throughput that outperform traditional hardware.
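
The steps above can be sketched in a few lines of Python. This is a rough outline rather than a definitive recipe: it assumes the `optimum[neuron]` package and the Neuron SDK are installed on an Inferentia instance, and it uses `NeuronModelForSequenceClassification` with `export=True` as described in the Optimum Neuron documentation, so check the version you have installed. The helper names `static_shapes` and `export_and_predict`, the default shapes, and the `bert_neuron` output directory are illustrative assumptions.

```python
from typing import Dict


def static_shapes(batch_size: int = 1, sequence_length: int = 128) -> Dict[str, int]:
    """Return the fixed input shapes to compile for (illustrative defaults).

    Neuron compiles a model for static shapes, so they are chosen up front.
    """
    if batch_size < 1 or sequence_length < 1:
        raise ValueError("batch_size and sequence_length must be positive")
    return {"batch_size": batch_size, "sequence_length": sequence_length}


def export_and_predict(model_id: str, text: str, save_dir: str = "bert_neuron") -> str:
    """Compile a sequence-classification checkpoint for Neuron and classify `text`.

    Imports are deferred because they only succeed where the Neuron SDK
    and optimum[neuron] are installed.
    """
    from optimum.neuron import NeuronModelForSequenceClassification
    from transformers import AutoTokenizer

    shapes = static_shapes()
    tokenizer = AutoTokenizer.from_pretrained(model_id)

    # export=True asks Optimum to compile the checkpoint for the given shapes.
    model = NeuronModelForSequenceClassification.from_pretrained(
        model_id, export=True, **shapes
    )
    # Save the compiled artifacts so later runs can skip compilation.
    model.save_pretrained(save_dir)
    tokenizer.save_pretrained(save_dir)

    # Pad to the compiled sequence length so the input matches the static shape.
    inputs = tokenizer(
        text,
        padding="max_length",
        truncation=True,
        max_length=shapes["sequence_length"],
        return_tensors="pt",
    )
    logits = model(**inputs).logits
    return model.config.id2label[logits.argmax(dim=-1).item()]
```

On a configured instance, a call such as `export_and_predict("distilbert-base-uncased-finetuned-sst-2-english", "Great service!")` would compile the model once and return a label. Because the shapes are fixed at compile time, choosing the batch size and sequence length is a design decision worth benchmarking for your own traffic.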

The performance improvements are measurable. Inferentia-powered inference can cut the cost per inference by up to 70% compared with GPU-based deployment while also increasing throughput, though the actual gain depends on the model, sequence length, and batch size.
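
Since savings depend on both price and throughput, it is worth running the arithmetic with your own numbers. The sketch below shows one way to compare two deployments by cost per million inferences. The function names are hypothetical, and the prices and throughput figures in the usage example are placeholders, not measured results or published AWS pricing.

```python
def cost_per_million(hourly_price_usd: float, throughput_per_s: float) -> float:
    """Cost in USD to serve one million inferences at a sustained throughput."""
    if hourly_price_usd < 0 or throughput_per_s <= 0:
        raise ValueError("price must be non-negative and throughput positive")
    inferences_per_hour = throughput_per_s * 3600
    return hourly_price_usd / inferences_per_hour * 1_000_000


def savings_percent(baseline_cost: float, candidate_cost: float) -> float:
    """Percentage saved by the candidate relative to the baseline."""
    if baseline_cost <= 0:
        raise ValueError("baseline_cost must be positive")
    return (1 - candidate_cost / baseline_cost) * 100


if __name__ == "__main__":
    # Placeholder inputs: substitute your instance prices and benchmarked throughput.
    gpu = cost_per_million(hourly_price_usd=1.00, throughput_per_s=500)
    inferentia = cost_per_million(hourly_price_usd=0.40, throughput_per_s=600)
    print(f"GPU: ${gpu:.3f}  Inferentia: ${inferentia:.3f} per million inferences")
    print(f"Savings: {savings_percent(gpu, inferentia):.1f}%")
```

Feeding in throughput measured on your own model keeps the comparison honest, because a cheaper instance only saves money if it sustains enough inferences per second.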

Real-World Impact and Use Cases

Deploying BERT with Inferentia has substantial impacts on real-world applications. Consider a customer support system that uses BERT for ticket classification and automated replies. With thousands of queries pouring in every hour, even a minor reduction in latency can lead to significant improvements in customer experience and operational efficiency.

Another scenario is search optimization on an e-commerce platform. BERT can re-rank search results based on intent understanding. Doing this in real-time means inference speed matters—a lot. Inferentia allows these platforms to scale horizontally at a fraction of the cost, making real-time BERT inference feasible in ways that weren’t practical before.

Even smaller startups can benefit. By using Hugging Face’s interface and the ready-to-go AWS hardware, teams without deep MLOps expertise can deploy optimized models. This democratizes access to AI, allowing companies to focus on solving business problems rather than managing infrastructure.

The ecosystem is mature, with documentation, tutorials, and pre-built environments readily available. What once required a team of engineers can now be accomplished with a few lines of code and some initial setup. And since everything runs in the cloud, there’s no upfront investment in specialized hardware.

Conclusion

BERT has transformed how we use language in software, but running it efficiently in production remains challenging. Hugging Face Transformers offer model flexibility, and AWS Inferentia provides the hardware support to scale those models. With the Optimum library connecting the two, teams can deploy advanced models without complex setups. This setup reduces costs, latency, and resource usage while maintaining accuracy, using tools familiar to many developers. It’s not just about performance gains; it’s about making smart applications more responsive. Whether you’re building a chatbot, search tool, or classifier, this approach makes accelerating BERT inference a real, usable option.
