When people hear “Hugging Face,” many instantly think of Transformers and PyTorch. That’s not surprising since most of their open-source models and documentation highlight PyTorch as the go-to framework. But this doesn’t mean TensorFlow is a footnote in their strategy. Hugging Face has taken a deliberate, careful approach when it comes to supporting TensorFlow.

They don’t just offer it as an afterthought—they see it as an important piece of the puzzle for a wide set of users, particularly in industry environments where TensorFlow is deeply embedded. This blend of user needs, interoperability, and practical constraints shapes Hugging Face’s TensorFlow philosophy.

Coexistence, Not Competition

Hugging Face doesn’t treat PyTorch and TensorFlow as rivals—it treats them as parallel tracks for reaching different groups. TensorFlow has long been favored in production settings, especially among companies that rely on the TensorFlow Extended (TFX) ecosystem or require seamless deployment with TensorFlow Serving and TensorFlow Lite. Hugging Face recognizes this and supports TensorFlow in a way that respects how developers work in these contexts.

Many model architectures in the Transformers library have TensorFlow implementations, and the team aims to keep these versions stable and usable out of the box. TensorFlow versions of models can be loaded using the same simple interfaces that PyTorch users enjoy. Hugging Face’s goal isn’t to convert TensorFlow users into PyTorch ones; it’s to allow them to stay in their preferred stack while still accessing state-of-the-art models.

Many companies are already locked into TensorFlow-based workflows. Telling them to switch ecosystems is neither helpful nor realistic. Hugging Face avoids that trap by focusing on coexistence, making both PyTorch and TensorFlow viable paths without treating either as the “lesser” option.

Bridging Through the Transformers Library

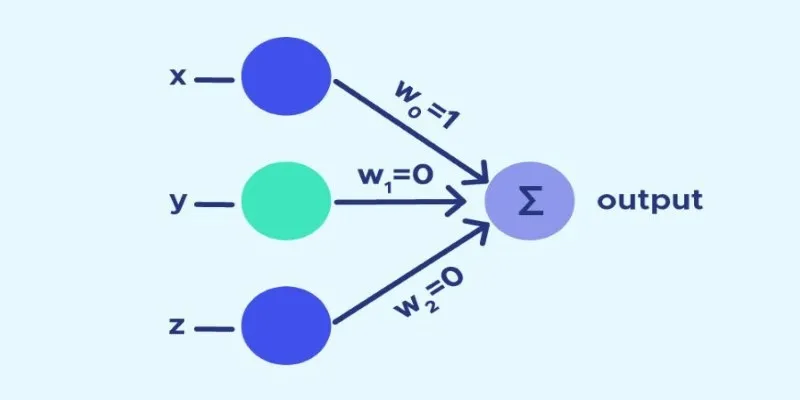

One of Hugging Face’s key decisions was building a unified Transformers library that works across both frameworks. This is not just about convenience; it’s about lowering the barrier for developers who might otherwise hesitate to adopt new models. Rather than splitting into separate libraries for TensorFlow and PyTorch, Transformers keeps one package in which tokenizers, model configurations, and the overall API are shared, while each framework gets its own model classes and example scripts that follow the same structure. This consistency allows developers to switch between frameworks when needed, and in many cases to load the same checkpoint in either one.

The Hugging Face Transformers library abstracts much of the backend complexity. For example, when you load a model like TFAutoModelForSequenceClassification, it uses TensorFlow under the hood but mirrors the structure and naming of its PyTorch counterpart. This dual support allows the documentation and tutorials to remain consistent across both ecosystems, minimizing confusion.
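A minimal sketch of that naming symmetry, assuming a Transformers release that still ships its TensorFlow classes and an installed TensorFlow. The helper names (`backend_class_name`, `load_sequence_classifier`, `classify`) are hypothetical and exist only for this illustration; `AutoTokenizer`, `from_pretrained`, and the `return_tensors` argument are part of the library.

```python
import importlib

# Class-name prefixes used by Transformers for each backend:
# PyTorch classes carry no prefix, TensorFlow classes start with "TF".
_PREFIXES = {"pt": "", "tf": "TF"}


def backend_class_name(base_name: str, framework: str) -> str:
    """Return the class name for `base_name` in the given framework."""
    if framework not in _PREFIXES:
        raise ValueError(f"unknown framework: {framework!r}")
    return _PREFIXES[framework] + base_name


def load_sequence_classifier(checkpoint: str, framework: str = "tf"):
    """Load a tokenizer and classifier for `checkpoint` (hypothetical helper)."""
    # Imported lazily so the naming helper above works without heavy dependencies.
    transformers = importlib.import_module("transformers")
    model_cls = getattr(
        transformers,
        backend_class_name("AutoModelForSequenceClassification", framework),
    )
    tokenizer = transformers.AutoTokenizer.from_pretrained(checkpoint)
    model = model_cls.from_pretrained(checkpoint)
    return tokenizer, model


def classify(text: str, checkpoint: str, framework: str = "tf"):
    """Return raw logits for `text` using the chosen backend."""
    tokenizer, model = load_sequence_classifier(checkpoint, framework)
    # The tokenizer is shared; only the tensor type it returns differs.
    inputs = tokenizer(text, return_tensors=framework)
    return model(**inputs).logits
```

Calling `classify("Great library", checkpoint)` with a sequence-classification checkpoint name from the Hub would run the TensorFlow path; passing `framework="pt"` would take the PyTorch path through the same tokenizer.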

It’s not always a one-to-one mapping, though. Some newer models or features debut in PyTorch first and are ported to TensorFlow later. This is often a result of how contributors interact with the codebase—many community contributions default to PyTorch. Even so, Hugging Face makes a point to review and add TensorFlow support for popular models, and they accept TensorFlow contributions actively. The aim is parity, even if it isn’t perfect.

Production Readiness and the TensorFlow Edge

While PyTorch has gained serious traction in research circles, TensorFlow still holds advantages in deployment-heavy scenarios. Hugging Face is fully aware of this and integrates with TensorFlow tools that are used in production pipelines. Because TensorFlow models can be exported for TensorFlow Serving, TensorFlow Lite, and TensorFlow.js, they can be deployed across cloud servers, mobile devices, and browsers. Hugging Face models can be converted to these formats, either directly or via conversion tools.

This is particularly helpful for companies working on AI products that run on edge devices. TensorFlow Lite remains one of the most widely used runtimes for mobile deployment, and Hugging Face’s support allows developers to fine-tune models and then optimize them for mobile use. There’s also interest in using Hugging Face models in real-time applications, where TensorFlow’s graph execution (for example through tf.function and XLA compilation) can sometimes provide performance advantages.
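One possible export path to TensorFlow Lite can be sketched as follows. This is an illustration under stated assumptions, not a recipe guaranteed for every architecture: it assumes a Transformers release with TensorFlow classes, and it assumes that `save_pretrained(..., saved_model=True)` writes the SavedModel under `saved_model/1` inside the export directory. The function names are hypothetical, and some models may need fixed input shapes or further converter settings.

```python
import os


def saved_model_path(export_dir: str, version: int = 1) -> str:
    """Directory where save_pretrained(..., saved_model=True) is assumed to write."""
    return os.path.join(export_dir, "saved_model", str(version))


def export_to_tflite(checkpoint: str, export_dir: str, tflite_file: str) -> int:
    """Export `checkpoint` to a .tflite file and return the bytes written."""
    # Heavy imports are kept inside the function so the path helper stays light.
    import tensorflow as tf
    from transformers import TFAutoModelForSequenceClassification

    model = TFAutoModelForSequenceClassification.from_pretrained(checkpoint)
    # Writes the usual weights plus a TensorFlow SavedModel.
    model.save_pretrained(export_dir, saved_model=True)

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_path(export_dir))
    # Default optimizations enable post-training quantization of the weights.
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    # Allow fallback to regular TensorFlow ops for anything the built-in
    # TFLite op set does not cover (an assumption; not every model needs it).
    converter.target_spec.supported_ops = [
        tf.lite.OpsSet.TFLITE_BUILTINS,
        tf.lite.OpsSet.SELECT_TF_OPS,
    ]
    tflite_bytes = converter.convert()
    with open(tflite_file, "wb") as fh:
        return fh.write(tflite_bytes)
```

The same SavedModel directory is also the format TensorFlow Serving loads, so the first half of the function covers the server-side case as well.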

Beyond deployment, TensorFlow’s ecosystem includes tools like TFRecord for data handling, Keras for building and training models, and TensorBoard for visualization. Hugging Face provides examples and guides on how to integrate their models with these tools, making it easier for teams to plug into their existing systems without having to tear everything down.

Another key point is training at scale. While PyTorch’s ecosystem has grown rapidly in this area, many enterprise workflows still depend on TensorFlow’s distribution strategies. Hugging Face supports training Transformers with TensorFlow using TPU accelerators on Google Cloud and integrates with tools like tf.distribute.MirroredStrategy to enable multi-GPU setups. This flexibility helps organizations keep their training pipelines intact while updating their model architectures.
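A rough sketch of the multi-GPU setup mentioned above, assuming TensorFlow and a Transformers release with TensorFlow classes are installed. The function names are hypothetical. Compiling without a `loss` argument relies on the model's built-in loss being used when labels are present in the batch, which is an assumption about the installed version.

```python
def global_batch_size(per_replica_batch: int, num_replicas: int) -> int:
    """Batch size the input dataset should use so each replica gets its share."""
    return per_replica_batch * num_replicas


def build_distributed_classifier(checkpoint: str, num_labels: int = 2):
    """Create and compile a classifier under MirroredStrategy (hypothetical helper)."""
    import tensorflow as tf
    from transformers import TFAutoModelForSequenceClassification

    # Mirrors variables across all GPUs visible on this machine.
    strategy = tf.distribute.MirroredStrategy()
    with strategy.scope():
        # Variables must be created inside the scope to be mirrored.
        model = TFAutoModelForSequenceClassification.from_pretrained(
            checkpoint, num_labels=num_labels
        )
        # "adam" uses Keras defaults; a real fine-tuning run would usually
        # pass an optimizer with a much smaller learning rate.
        model.compile(optimizer="adam")
    return strategy, model
```

With the strategy in hand, the dataset would be batched with `global_batch_size(per_replica_batch, strategy.num_replicas_in_sync)` before calling `model.fit`, so that every GPU processes `per_replica_batch` examples per step.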

Community, Maintenance, and the Long-Term View

Supporting two frameworks is a long-term commitment, and Hugging Face doesn’t treat TensorFlow support as a box to tick. Their approach is more about building a sustainable system that ensures TensorFlow users are not left behind. Documentation is gradually improving, and the examples provided in the Transformers repo increasingly include TensorFlow versions. Because the TensorFlow model classes are Keras models, integration is also easier: developers can build hybrid models or combine Hugging Face Transformers with their own layers and custom losses.

The community plays a large role in making this possible. Hugging Face encourages TensorFlow contributions, both in terms of code and educational content. There are open calls for TensorFlow tutorials, fine-tuning examples, and performance benchmarks. This openness helps balance out the PyTorch-leaning nature of much of the open-source AI space.

Looking ahead, Hugging Face’s strategy suggests they’re in it for the long haul when it comes to TensorFlow. They’re not chasing trends—they’re trying to give users tools that work where they are. The consistent effort to maintain TensorFlow support in parallel with PyTorch shows respect for developer preferences and real-world constraints. It also builds trust, especially among teams that have long relied on TensorFlow and need to be confident that the support won’t vanish six months down the line.

Conclusion

Hugging Face’s TensorFlow philosophy values practicality over popularity. Instead of steering users away from TensorFlow, it offers broad support through shared tools, consistent model access, and production-ready integrations. This lets developers stay within familiar environments while using advanced language models. It’s a grounded, developer-first mindset that focuses on reliability and long-term support, making Hugging Face a trusted option for real-world AI projects without forcing a shift in frameworks.
