Artificial Intelligence (AI) has become a transformative force across various industries and everyday life, but its success hinges on a fundamental principle: AI cannot learn without data. While technology is advancing at a rapid pace, access to high-quality data often lags behind. Many companies face challenges not because their ideas lack innovation, but because their AI models are deprived of the necessary data to learn effectively.

This issue, known as data scarcity, presents significant hurdles in training AI systems. Unless the gap is bridged, even the most sophisticated AI technology falls short of what it could do. Understanding data scarcity is therefore a first step toward smarter, more reliable AI solutions.

The Importance of Data in AI Training

Data is the cornerstone of artificial intelligence, shaping how it learns, evolves, and performs. Regardless of how advanced an AI model may appear, its foundation is built on data. Teaching an AI system is akin to educating a student. A student exposed to diverse books, situations, and experiences naturally becomes more knowledgeable and capable. Similarly, AI requires vast amounts of diverse, high-quality data to recognize patterns, make predictions, and respond accurately.

However, gathering this data is often a challenging task. Industries such as healthcare, finance, and security deal with sensitive information that cannot be freely shared due to privacy and legal concerns. In other instances, the data required simply does not exist yet. For example, training an autonomous vehicle demands millions of images depicting different traffic scenarios, weather conditions, and road types. Without such data, AI systems may underperform in unfamiliar situations.

Data scarcity not only constrains performance but can also lead to biased AI systems. If training data lacks diversity or contains biases, AI may produce results that favor certain groups over others and misrepresent the groups that are missing from the data. Addressing data scarcity is not merely a technical issue; it is vital for creating accurate, fair, and reliable AI.

Common AI Training Challenges Caused by Data Scarcity

Data scarcity introduces several challenges during AI model training. One major issue is poor generalization. When AI is trained on a limited dataset, it may perform well on that specific data but fail to deliver accurate results on new or real-world data. This phenomenon, known as overfitting, occurs because the AI system memorizes the quirks and noise of a limited sample instead of learning patterns that carry over to new scenarios.
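The gap between performance on training data and on new data can be illustrated with a small sketch. The example below is an illustration only: the data is randomly generated toy data, and the helper names (`make_noisy_data`, `predict_1nn`, `accuracy`) are hypothetical. It uses a 1-nearest-neighbor model, which memorizes its training set, on just 20 noisy samples.

```python
import random

def make_noisy_data(n, rng, noise=0.2):
    """Toy data: x in [0, 1); the true label is 1 when x > 0.5,
    but each label is flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = int(x > 0.5)
        if rng.random() < noise:
            label = 1 - label
        data.append((x, label))
    return data

def predict_1nn(train, x):
    """Return the label of the training point closest to x."""
    nearest = min(train, key=lambda pair: abs(pair[0] - x))
    return nearest[1]

def accuracy(train, data):
    """Fraction of points in `data` that the 1-NN model labels correctly."""
    correct = sum(predict_1nn(train, x) == y for x, y in data)
    return correct / len(data)

rng = random.Random(0)              # fixed seed so the run is repeatable
train = make_noisy_data(20, rng)    # deliberately tiny training set
test = make_noisy_data(1000, rng)   # stands in for "real-world" data

train_acc = accuracy(train, train)  # the model has memorized these points
test_acc = accuracy(train, test)
print(f"train accuracy: {train_acc:.2f}, held-out accuracy: {test_acc:.2f}")
```

The model scores perfectly on the samples it memorized, noise included, and noticeably worse on held-out data. Comparing the two numbers is a simple way to spot overfitting.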

Another challenge is detecting rare events. In fields like medical diagnosis or fraud detection, the occurrences that need identification are infrequent. Training data for these rare events is often scarce, making it difficult for AI models to learn effectively. This issue becomes even more critical when these rare events carry high risks or severe consequences.
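One common mitigation, not described above and shown here only as a hedged sketch, is to weight rare classes more heavily during training so that the few available examples are not drowned out. The function name `balanced_class_weights` and the 990-to-10 label split are hypothetical, and the formula is a widely used inverse-frequency heuristic rather than the only option.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Inverse-frequency weights: total / (n_classes * class_count).
    Rare classes receive proportionally larger weights."""
    counts = Counter(labels)
    total = len(labels)
    n_classes = len(counts)
    return {cls: total / (n_classes * count) for cls, count in counts.items()}

# Hypothetical fraud dataset: 10 fraudulent transactions out of 1,000.
labels = ["legit"] * 990 + ["fraud"] * 10
weights = balanced_class_weights(labels)
print(weights["fraud"])   # 50.0
print(weights["legit"])   # roughly 0.505
```

These weights would typically be passed to a training loss so that misclassifying a rare event costs more than misclassifying a common one. Reweighting does not create new information, so it complements collecting more rare-event data rather than replacing it.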

Data scarcity also hampers the development of specialized AI systems. While general-purpose AI models might manage common tasks, highly focused applications, such as identifying rare diseases or forecasting machine failures in industrial contexts, require substantial amounts of domain-specific data. Without such data, creating effective AI solutions becomes nearly impossible.

Moreover, limited data availability often results in higher costs. Companies may need to invest significantly in collecting or purchasing data and hiring experts to clean and label it properly, making AI development more expensive and time-consuming.

Strategies to Overcome Data Scarcity

Overcoming data scarcity requires innovative strategies. One popular solution is data augmentation. This technique involves generating new data samples from existing ones by making small modifications, such as rotating images, altering colors, or adding noise. Data augmentation increases dataset size and diversity, helping AI models generalize beyond the original samples.
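A minimal sketch of the idea follows, assuming a grayscale image stored as a list of rows of pixel values from 0 to 255. The function names and the tiny 2x3 image are illustrative only; real projects usually rely on an image library for these transformations.

```python
import random

def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def add_noise(image, rng, amount=10):
    """Shift each pixel by a random value in [-amount, amount], clamped to 0..255."""
    return [
        [min(255, max(0, px + rng.randint(-amount, amount))) for px in row]
        for row in image
    ]

def augment(image, rng):
    """Produce three modified copies of one original image."""
    return [flip_horizontal(image), rotate_90(image), add_noise(image, rng)]

image = [[0, 50, 100],
         [150, 200, 250]]
rng = random.Random(42)          # fixed seed for repeatable noise
variants = augment(image, rng)
print(len(variants))             # 3 new samples from 1 original
```

Each variant keeps the original label, so one labeled example yields several training samples. The transformations should be chosen with the task in mind: flipping is harmless for many object photos but changes the meaning of text or traffic signs.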

Another strategy is the use of synthetic data. In scenarios where collecting real data is challenging or expensive, synthetic data can be generated using computer simulations or other AI models. This approach is widely employed in industries like gaming, robotics, and autonomous driving, where simulated environments offer a safe and cost-effective way to create large datasets.

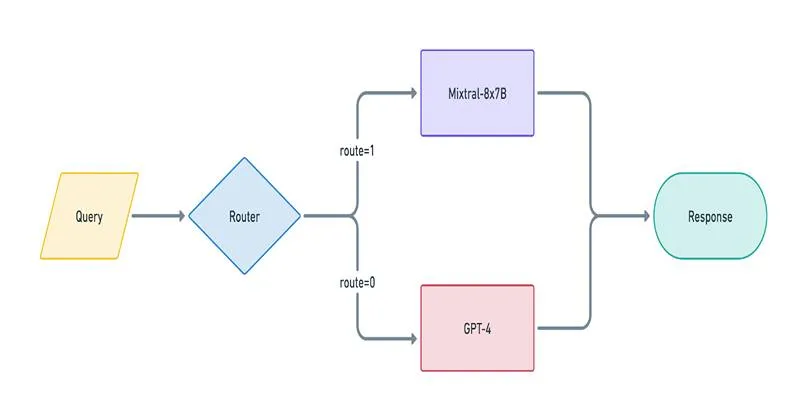

Transfer learning serves as another valuable method. It involves leveraging a pre-trained AI model, which has already learned from a large dataset, and adapting it to a new but related task. This method reduces the amount of data needed to train new models and accelerates the development process.

Federated learning is an emerging solution that enables AI models to learn from data stored in various locations without transferring the data to a central server. This approach is especially beneficial in healthcare and finance, where privacy is paramount. With federated learning, companies can collaborate and train AI models without exposing sensitive data.
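The central server's role can be sketched with federated averaging, one widely used aggregation scheme. This is an assumption on our part, since the text above does not name a specific algorithm. The sketch shows only the aggregation step: each client is assumed to have already trained locally and to send back its model weights plus its sample count. The function name `federated_average` and the numbers are hypothetical.

```python
def federated_average(client_updates):
    """Combine client model weights into one global model.

    client_updates: list of (weights, n_samples) pairs, where `weights`
    is a list of floats. Clients with more data get more influence.
    Only weights and counts are shared; raw data stays with the client.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged

# Two hypothetical hospitals with different amounts of local data.
updates = [
    ([1.0, 2.0], 100),   # hospital A: 100 local samples
    ([3.0, 4.0], 300),   # hospital B: 300 local samples
]
global_weights = federated_average(updates)
print(global_weights)    # [2.5, 3.5]
```

In a full system this step repeats over many rounds: the server sends the averaged model back, clients continue training on their own data, and the cycle continues. Production deployments often add protections such as secure aggregation, which this sketch leaves out.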

Collaboration between industries, organizations, and research institutions can also help address data scarcity. Sharing anonymized data or contributing to open-source datasets allows developers to access more information and build better models. However, such collaborations must adhere to strict privacy and security guidelines.

Finally, the development of improved algorithms is essential. AI researchers are continuously working on models that can learn effectively from small datasets. Techniques like few-shot learning and zero-shot learning aim to create AI systems that can perform a new task from only a handful of labeled examples, or from none at all.
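One simple way to picture few-shot classification is a nearest-centroid (prototype) classifier: average the few labeled examples of each class, then assign a new item to the closest average. The sketch below is one illustrative approach among many, not a definitive method. The two-dimensional feature vectors are made up; in practice they would typically come from a pre-trained model.

```python
import math

def centroid(points):
    """Element-wise mean of a list of equal-length vectors."""
    dim = len(points[0])
    return [sum(p[i] for p in points) / len(points) for i in range(dim)]

def build_prototypes(support_set):
    """Map each label to the centroid of its few labeled examples."""
    return {label: centroid(examples) for label, examples in support_set.items()}

def classify(prototypes, query):
    """Return the label whose prototype is closest to the query vector."""
    return min(prototypes, key=lambda label: math.dist(prototypes[label], query))

# Hypothetical "2-shot" support set: two labeled examples per class.
support_set = {
    "cat": [[1.0, 1.0], [1.2, 0.8]],
    "dog": [[4.0, 4.2], [3.8, 4.0]],
}
prototypes = build_prototypes(support_set)
print(classify(prototypes, [1.1, 1.0]))   # cat
```

Adding a new class requires only a few labeled examples and no retraining, which is why this style of method suits data-scarce settings. Its quality depends heavily on how well the feature vectors separate the classes.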

Conclusion

Data scarcity and the training challenges it causes are significant obstacles in the pursuit of smarter technology. Without sufficient high-quality data, AI systems struggle to perform well in real-world scenarios. However, the problem can be overcome. Through solutions like data augmentation, synthetic data, transfer learning, federated learning, and collaborative efforts, developers can improve their models and mitigate the impact of limited data. As industries increasingly rely on AI for critical tasks, addressing data scarcity remains essential. The future of AI depends on discovering smarter ways to train systems so that they are accurate, fair, and ready for practical use.
