Retrieval-augmented generation (RAG) has emerged as a powerful solution for building more intelligent, responsive, and accurate AI systems. However, its true potential is only realized when paired with effective document retrieval. That’s where ModernBERT makes a significant difference.

A modernized successor to the classic BERT encoder, ModernBERT brings optimized performance to retrieval tasks, helping RAG pipelines become faster, more relevant, and more scalable. This post explores how ModernBERT improves the effectiveness of RAG systems, walks through use cases, and offers practical guidance on integrating it into AI workflows.

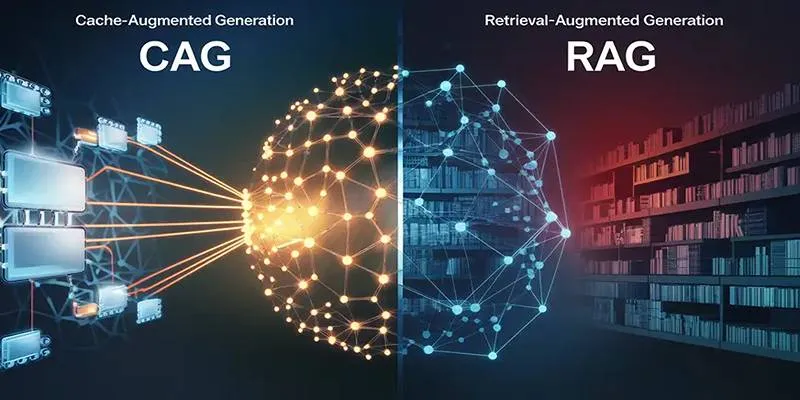

What Is Retrieval-Augmented Generation (RAG)?

Retrieval-augmented generation is an advanced approach in natural language processing that separates knowledge retrieval from language generation. Instead of relying solely on a language model’s internal parameters, RAG systems search an external knowledge base to fetch relevant information and then generate answers using both the question and the retrieved data.
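The retrieve-then-generate flow ends with a prompt that combines the question and the retrieved passages. Below is a minimal Python sketch of that assembly step. The function name `build_rag_prompt` and the template wording are illustrative assumptions, not a standard format; adapt them to your generation model.

```python
from typing import Sequence


def build_rag_prompt(question: str, passages: Sequence[str]) -> str:
    """Combine retrieved passages and the user question into one prompt.

    Hypothetical template: the instructions and layout are illustrative only.
    """
    # Number each passage so the generator can cite which source it used.
    context = "\n".join(f"[{i}] {p.strip()}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question.strip()}\nAnswer:"
    )


if __name__ == "__main__":
    print(build_rag_prompt(
        "When was the refund policy updated?",
        ["The refund policy was updated in March.", "Refunds take 5 business days."],
    ))
```

Grounding the answer in numbered context is what lets the generator rely on retrieved documents instead of only its internal parameters.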

RAG offers major benefits over traditional language models, including:

- Reduced hallucination: Answers are grounded in retrieved documents.

- Lower compute needs: Smaller generation models can perform well when retrieval is good.

- Up-to-date information: Retrieval gives access to new or changing data without retraining the model.

Still, the effectiveness of RAG heavily depends on how well the retrieval component performs. If irrelevant or low-quality documents are fetched, the final response may be inaccurate or misleading.

Why Traditional Retrieval Falls Short in RAG

Most RAG systems use either sparse or dense retrievers to fetch documents. Sparse retrieval methods like BM25 rely on keyword matching, which can be brittle when the query uses different words than the relevant documents (for example, "car" versus "automobile"). Dense retrievers, on the other hand, encode both the query and the documents as vectors and retrieve documents by vector similarity.
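To make the brittleness of keyword matching concrete, here is a small, self-contained Okapi BM25 scorer. It is a textbook version with the common defaults k1 = 1.5 and b = 0.75 and a non-negative IDF variant, not any particular library's implementation. A query about fixing a car scores zero against a passage that only says "automobile".

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Naive lowercase whitespace tokenizer; real systems also strip punctuation and stem.
    return text.lower().split()


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Okapi BM25 score of each document for the query (higher is more relevant)."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: number of documents containing each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue  # no exact keyword match, so no contribution at all
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            freq = tf[term]
            score += idf * freq * (k1 + 1) / (freq + k1 * (1 - b + b * len(toks) / avgdl))
        scores.append(score)
    return scores


if __name__ == "__main__":
    docs = ["how to repair an automobile engine", "best car wash near the station"]
    print(bm25_scores("fix my car", docs))
```

The repair guide gets a score of 0.0 while the car-wash passage scores higher, purely because it shares the word "car". This is the gap that dense, embedding-based retrievers aim to close.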

However, earlier dense retrievers, many built on the original BERT with its 512-token input limit, often failed to capture deeper semantic meaning or to run efficiently at scale. The resulting mismatch between user intent and retrieved content could lead to mediocre RAG performance. That’s where ModernBERT changes the game.

What Is ModernBERT?

ModernBERT is a transformer encoder in the BERT family, rebuilt with modern architectural choices and trained from scratch on a larger, more recent corpus that includes code. Like classic BERT, the base model is pretrained as a general-purpose language encoder; for retrieval, it is fine-tuned into embedding models that excel at tasks like semantic search, dense retrieval, and document ranking.

Its improvements include:

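- Faster inference, thanks to alternating local and global attention, unpadding, and Flash Attention support

- Longer inputs, with an 8,192-token context window compared with the original BERT's 512 tokens

- Better embeddings for understanding and matching intent, once fine-tuned for retrieval

- Strong results on retrieval benchmarks such as MS MARCO and BEIR when fine-tuned for retrieval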

In the context of Retrieval-Augmented Generation, a retrieval-tuned ModernBERT model serves as an upgraded retriever that can substantially improve the quality of the RAG pipeline.

How ModernBERT Unlocks RAG’s Full Potential

By incorporating ModernBERT into the retrieval phase of RAG, AI developers and researchers gain a much stronger foundation for generating accurate and grounded responses. Here are several ways ModernBERT enhances RAG systems:

Improved Semantic Matching

A ModernBERT model fine-tuned for retrieval generates embeddings that capture contextual meaning rather than just surface keywords. This helps match user queries with relevant documents even when the wording differs significantly.

- Users benefit from more relevant results.

- Responses align better with the user’s true intent.

- Less reliance on keyword overlap.
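Semantic matching comes down to comparing embedding vectors, usually with cosine similarity. The sketch below shows the comparison and ranking in plain Python. The helper `embed_with_modernbert` is a hypothetical wrapper that assumes the sentence-transformers library and the `nomic-ai/modernbert-embed-base` checkpoint, which expects `search_query: ` and `search_document: ` prefixes; check the model card of whichever ModernBERT-based model you actually use.

```python
import math
from typing import Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity: 1.0 for vectors pointing the same way, 0.0 for orthogonal ones."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_passages(query_vec: Sequence[float], passage_vecs: Sequence[Sequence[float]]) -> list[int]:
    """Return passage indices sorted from most to least similar to the query."""
    scores = [cosine(query_vec, p) for p in passage_vecs]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


def embed_with_modernbert(texts, is_query, model_name="nomic-ai/modernbert-embed-base"):
    """Hypothetical helper: embed texts with a ModernBERT-based model via sentence-transformers.

    Assumption: this checkpoint expects "search_query: " / "search_document: " prefixes.
    """
    from sentence_transformers import SentenceTransformer  # optional dependency, imported lazily

    model = SentenceTransformer(model_name)
    prefix = "search_query: " if is_query else "search_document: "
    return model.encode([prefix + t for t in texts]).tolist()
```

With a real model, you would embed the query with `is_query=True`, embed the passages with `is_query=False`, and pass both to `rank_passages`. Whether a paraphrased passage ranks first depends on the model, so no output is claimed here.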

Faster and Scalable Retrieval

ModernBERT’s optimized architecture enables fast embedding generation. Paired with a vector search library like FAISS or a vector database like Qdrant, which handle the similarity comparisons, it supports real-time search across millions of documents.

- Low latency for live applications.

- Scalable across large document stores.

- Supports high-throughput systems like enterprise bots or AI search engines.
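A minimal sketch of the vector-search side, assuming NumPy and, optionally, the `faiss` package (`pip install faiss-cpu`). Embeddings are L2-normalized so that inner product equals cosine similarity; FAISS's `IndexFlatIP` is used when installed, with a brute-force NumPy fallback otherwise. The class name `VectorIndex` is hypothetical.

```python
import numpy as np


def normalize(x) -> np.ndarray:
    """Return a float32 (n, d) array whose rows have unit L2 norm."""
    x = np.asarray(x, dtype="float32")
    if x.ndim == 1:
        x = x[None, :]
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.clip(norms, 1e-12, None)


class VectorIndex:
    """Exact top-k search by cosine similarity over document embeddings."""

    def __init__(self, doc_embeddings):
        self.vectors = normalize(doc_embeddings)
        try:
            import faiss  # optional: exact inner-product index

            self._faiss = faiss.IndexFlatIP(self.vectors.shape[1])
            self._faiss.add(self.vectors)
        except ImportError:
            self._faiss = None  # fall back to brute-force NumPy search

    def search(self, query_embeddings, k: int):
        """Return (scores, ids), each of shape (n_queries, k), best match first."""
        q = normalize(query_embeddings)
        k = min(k, len(self.vectors))
        if self._faiss is not None:
            return self._faiss.search(q, k)
        scores = q @ self.vectors.T  # (n_queries, n_docs) cosine similarities
        ids = np.argsort(-scores, axis=1)[:, :k]
        return np.take_along_axis(scores, ids, axis=1), ids
```

This is exact search. For millions of documents, FAISS and vector databases also offer approximate indexes (for example, HNSW or IVF), which trade a little recall for much lower latency.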

Higher Precision and Lower Noise

RAG pipelines often struggle with noisy results caused by irrelevant documents. ModernBERT’s more precise retrieval helps ensure that the passages passed to the generation model are the most contextually relevant ones.

- More accurate and grounded answers.

- Reduced post-processing or filtering.

- Better user trust in the output.
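One simple way to keep noise out of the generator's context is to pass along only the top-scoring, non-duplicate hits above a similarity threshold. The sketch below assumes hits arrive with a retriever score where higher is better; the `Hit` type, the default `k=3`, and the `min_score=0.5` threshold are hypothetical and would need tuning, since score ranges differ between embedding models.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    doc_id: str
    text: str
    score: float  # similarity from the retriever; higher is better


def select_context(hits, k=3, min_score=0.5):
    """Keep at most k hits whose score clears min_score, best first, skipping duplicate texts."""
    selected, seen = [], set()
    for hit in sorted(hits, key=lambda h: h.score, reverse=True):
        if hit.score < min_score or len(selected) == k:
            break  # remaining hits are below threshold, or the budget is full
        if hit.text in seen:
            continue  # identical passage already included
        seen.add(hit.text)
        selected.append(hit)
    return selected
```

Capping `k` also bounds prompt length, which matters for both cost and how well the generator attends to each passage.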

Practical Use Cases of ModernBERT + RAG

The combination of ModernBERT and Retrieval-Augmented Generation is already being explored in various industries. Below are a few examples where this pairing proves particularly effective:

Healthcare Knowledge Assistants

- Retrieves evidence-based content from medical literature.

- Assists healthcare professionals with accurate clinical suggestions.

Financial Research Tools

- Pulls real-time data from reports and filings.

- Helps analysts summarize insights using generation models.

Customer Support Chatbots

- Searches internal documentation or FAQs.

- Generates personalized, precise responses in seconds.

Legal Document Review

- Locates similar cases, clauses, or regulations.

- Summarizes findings in plain language for legal teams.

These use cases benefit from ModernBERT’s ability to retrieve data that aligns semantically with the query, leading to better generative responses.

Steps to Integrate ModernBERT into a RAG Pipeline

Building a ModernBERT-powered RAG system involves several components. Below is a simplified roadmap:

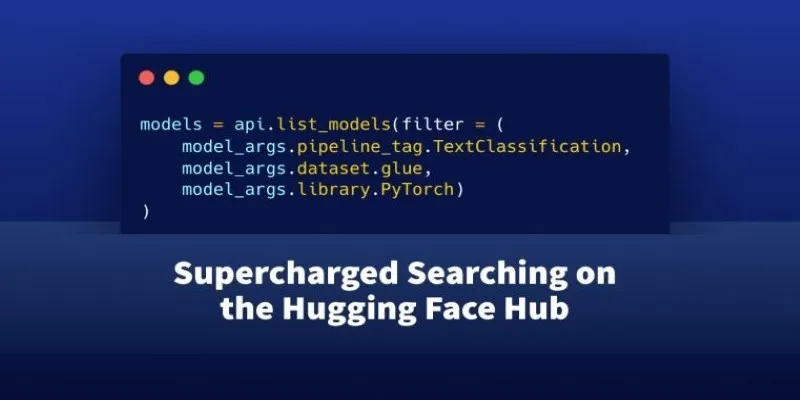

- Select a Pretrained ModernBERT Model: Choose a ModernBERT model fine-tuned for retrieval from Hugging Face or another ML platform.

- Generate Document Embeddings: Use the model to create vector embeddings of each document or passage.

- Store Embeddings in a Vector Database: Use tools like FAISS, Pinecone, or Weaviate for efficient storage and search.

- Embed Incoming Queries: Convert user questions into vectors using the same ModernBERT model.

- Retrieve Top-k Relevant Documents: Perform a similarity search in the vector database to find matching documents.

- Generate Final Output: Pass the retrieved documents and the query to a language model (e.g., LLaMA, GPT-4) for answer generation.

This setup yields an efficient system whose responses are grounded in current, retrieved context, which makes them more likely to be factually accurate.
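The six steps can be sketched end to end in a few dozen lines of Python. In this minimal, in-memory sketch, the embedding functions and the `generate` callable are injected, so a ModernBERT-based embedding model (for example, one loaded with the sentence-transformers library) and any LLM client can be plugged in. `RagPipeline` and its methods are hypothetical names, and a plain Python list stands in for the vector database.

```python
import math
from typing import Callable, Sequence

# An embedder maps a batch of texts to a batch of vectors.
Embedder = Callable[[Sequence[str]], list[list[float]]]


def _unit(v: Sequence[float]) -> list[float]:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    n = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / n for x in v]


class RagPipeline:
    def __init__(self, embed_docs: Embedder, embed_queries: Embedder, generate: Callable[[str], str]):
        self.embed_docs = embed_docs        # step 2: document embeddings (e.g., a ModernBERT model)
        self.embed_queries = embed_queries  # step 4: the same model, for queries
        self.generate = generate            # step 6: any LLM call that takes a prompt string
        self.passages: list[str] = []
        self.vectors: list[list[float]] = []

    def index(self, passages: Sequence[str]) -> None:
        # Steps 2-3: embed every passage and store the normalized vectors in memory.
        passages = list(passages)
        self.passages.extend(passages)
        self.vectors.extend(_unit(v) for v in self.embed_docs(passages))

    def retrieve(self, question: str, k: int = 3) -> list[str]:
        # Steps 4-5: embed the query and rank stored passages by cosine similarity.
        q = _unit(self.embed_queries([question])[0])
        scored = [(sum(a * b for a, b in zip(q, v)), p) for v, p in zip(self.vectors, self.passages)]
        scored.sort(key=lambda sp: sp[0], reverse=True)
        return [p for _, p in scored[:k]]

    def answer(self, question: str, k: int = 3) -> str:
        # Step 6: hand the retrieved passages plus the question to the generator.
        context = "\n".join(f"- {p}" for p in self.retrieve(question, k))
        return self.generate(f"Context:\n{context}\n\nQuestion: {question}\nAnswer:")
```

In production, the list-based store would be replaced by FAISS, Pinecone, or Weaviate, and `embed_docs` and `embed_queries` would call the same ModernBERT model, differing only in any input prefixes its model card requires.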

Best Practices for Maximizing Impact

When deploying ModernBERT with RAG, the following practices help optimize outcomes:

- Fine-tune ModernBERT on your domain-specific data for better alignment.

- Use document chunking to avoid missing details in long passages.

- Periodically update the vector store with fresh embeddings.

- Evaluate relevance metrics such as Recall@k or NDCG to measure performance.

- Implement feedback loops to improve retrieval quality over time.
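Two of these practices, chunking and evaluation, are easy to prototype. The sketch below splits text into overlapping word windows and computes Recall@k and nDCG@k for one query. The window sizes (200 words, 50 overlap) are hypothetical defaults, and nDCG here uses the linear-gain form with a log2 position discount; some tools use a gain of 2^rel - 1 instead.

```python
import math


def chunk_words(text: str, max_words: int = 200, overlap: int = 50) -> list[str]:
    """Split text into overlapping word windows so details near boundaries land in some chunk."""
    if not 0 <= overlap < max_words:
        raise ValueError("need 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reaches the end of the text
    return chunks


def recall_at_k(retrieved: list[str], relevant, k: int) -> float:
    """Fraction of the relevant document ids that appear in the top k results."""
    rel = set(relevant)
    if not rel:
        return 0.0
    return len(set(retrieved[:k]) & rel) / len(rel)


def ndcg_at_k(retrieved: list[str], relevance: dict[str, float], k: int) -> float:
    """nDCG@k with graded relevance (doc id -> gain) and a log2 position discount."""
    def dcg(gains):
        return sum(g / math.log2(i + 2) for i, g in enumerate(gains))

    actual = dcg([relevance.get(d, 0) for d in retrieved[:k]])
    ideal = dcg(sorted(relevance.values(), reverse=True)[:k])
    return actual / ideal if ideal > 0 else 0.0
```

Averaging these scores over a set of labeled queries gives a baseline to compare against after fine-tuning ModernBERT or changing the chunk size.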

Following these steps helps keep the system efficient, relevant, and reliable.

Conclusion

ModernBERT brings a critical upgrade to the retrieval layer of RAG systems. Its ability to understand queries deeply, retrieve semantically aligned content, and do so at scale makes it a valuable tool for any AI workflow that involves dynamic information retrieval. By integrating ModernBERT into their RAG pipelines, developers and organizations can reach a new level of accuracy in their language-based applications. From medical assistants to legal research, and from customer service to enterprise AI search, ModernBERT helps retrieval-augmented generation live up to its promise: generation grounded in knowledge.

zfn9
