NumPy is a leading Python library for numerical and scientific computing, renowned for its ability to handle matrix and array structures effectively. However, when working with large datasets in NumPy, excessive memory usage can slow down system performance and lead to errors. Therefore, optimizing memory usage in NumPy arrays is crucial for achieving better processing speeds and efficiency.

Why Memory Optimization is Crucial in NumPy

Every byte of memory is critical when managing numerical datasets. Without an optimization strategy, programs handling large arrays can exhaust available Random Access Memory (RAM), resulting in sluggish performance and potential system failures.

Memory optimization is vital in several key scenarios:

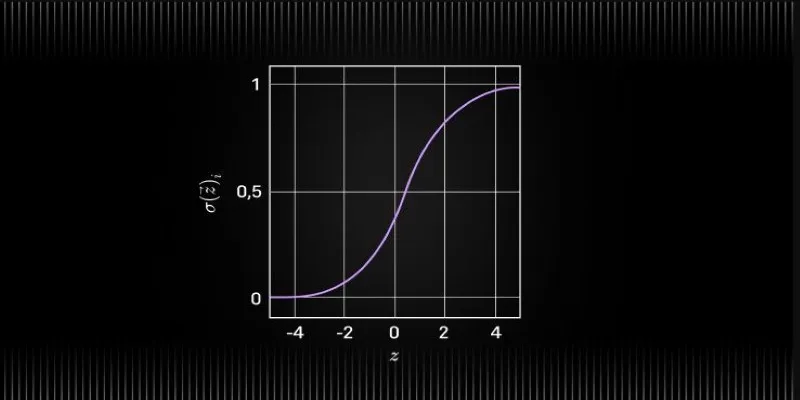

- Machine Learning and AI: Training deep learning models requires processing large datasets, making efficient memory management essential.

- Data Science and Analytics: Efficiently processing millions of data points can save computational time and resources.

- Scientific Computing: Numerical simulations and modeling often involve complex calculations with large matrices.

Optimizing memory usage helps avoid excessive RAM consumption, ensures computations run faster, and enables handling more extensive datasets without the need for hardware upgrades.

Using Efficient Data Types for NumPy Arrays

One of the most effective ways to reduce memory usage is to select appropriate data types for your data. NumPy supports a wide range of numeric types, including signed and unsigned integers and floating-point numbers of several widths.

Choosing smaller, more suitable data types (such as int8 instead of int64, or float32 instead of float64) can significantly lower memory consumption, especially with large datasets. This optimization not only improves memory efficiency but can also lead to faster computations by reducing the amount of data your system has to process.

Choosing the Right Numeric Data Type

NumPy defaults to 64-bit data types (float64, and int64 on most 64-bit platforms), which may be larger than many applications need. Using a smaller data type reduces memory usage, as long as your values fit within its range and the reduced precision is acceptable.

Switching from float64 to float32 halves memory usage. float32 keeps roughly seven significant decimal digits, which is sufficient for many tasks.
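The halving described above can be checked directly with the `nbytes` attribute. The following is a minimal sketch; the array size and variable names are chosen only for illustration.

```python
import numpy as np

# One million values in NumPy's default floating-point type (8 bytes each).
values64 = np.ones(1_000_000, dtype=np.float64)

# The same values converted to 32-bit floats (4 bytes each).
values32 = values64.astype(np.float32)

print(values64.nbytes)  # 8000000
print(values32.nbytes)  # 4000000

# finfo reports the number of decimal digits each type reliably preserves.
print(np.finfo(np.float32).precision)  # 6
print(np.finfo(np.float64).precision)  # 15
```

Whether six to seven digits is enough depends on the workload, so it is worth comparing results at both precisions before committing to float32.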

Using Integer Types Efficiently

If you are working with whole numbers, using smaller integer data types like int8, int16, or int32 instead of int64 can help save memory, provided every value fits in the smaller type's range (for example, -128 to 127 for int8).

Choosing the right data type can make a significant difference in memory consumption, particularly for large datasets.
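One way to downcast integers safely is to check the data against the target type's range first. The sketch below assumes a hypothetical dataset of ages between 0 and 119; `np.iinfo` supplies the limits of int8.

```python
import numpy as np

# Hypothetical data: one million ages, each between 0 and 119.
rng = np.random.default_rng(seed=0)
ages64 = rng.integers(0, 120, size=1_000_000, dtype=np.int64)

# Look up the range of the target type before downcasting.
info = np.iinfo(np.int8)
fits = ages64.min() >= info.min and ages64.max() <= info.max

# Only convert when every value fits; otherwise keep the original array.
ages8 = ages64.astype(np.int8) if fits else ages64

print(info.min, info.max)  # -128 127
print(ages64.nbytes)       # 8000000
print(ages8.nbytes)        # 1000000
```

Skipping the range check is risky: values outside the target range wrap around silently when converted with `astype`.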

Avoiding Unnecessary Copies of Arrays

NumPy can sometimes create extra copies of arrays, leading to higher memory use and slower performance, especially with large datasets. Basic slicing and most reshapes return views, but copies are made by operations such as integer-array (fancy) indexing, boolean masking, flatten(), astype() (by default), and ordinary arithmetic expressions, even when a copy isn't strictly needed.

Understanding when and why NumPy makes these copies and learning to use views or in-place operations instead can enhance code efficiency. By reducing unnecessary copies, your programs can run faster and manage larger datasets more effectively.

Using Views Instead of Copies

A view is a new array object that shares the original array's memory, while a copy allocates separate memory. Using views instead of copies can significantly reduce memory overhead, but remember that writing to a view also changes the original array.
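The difference between a view and a copy can be verified with `np.shares_memory`. This is a small sketch with illustrative variable names, not an exhaustive list of which operations copy.

```python
import numpy as np

data = np.arange(10)

# Basic slicing returns a view onto the same buffer.
view = data[2:6]

# Integer-array ("fancy") indexing returns a new, independent array.
copy = data[[2, 3, 4, 5]]

print(np.shares_memory(data, view))  # True
print(np.shares_memory(data, copy))  # False

# Writing through the view changes the original, but not the copy.
view[0] = 99
print(data[2])  # 99
print(copy[0])  # 2
```

When an independent array is really needed, calling `.copy()` on a slice makes that intent explicit.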

Using In-Place Operations

Performing operations in-place (for example, with augmented assignment such as += or a ufunc's out argument) writes results into an existing array instead of allocating a new one, reducing memory overhead.
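As a rough illustration, the sketch below updates an array with augmented assignment and with the `out` argument of `np.add`, then confirms that the underlying buffer address never changed. The array sizes and names are arbitrary.

```python
import numpy as np

a = np.ones(1_000_000, dtype=np.float32)
b = np.full(1_000_000, 2.0, dtype=np.float32)

# Remember where a's data lives so we can confirm no reallocation happened.
address_before = a.__array_interface__["data"][0]

# Augmented assignment modifies a in place; "a = a * 3" would allocate a new array.
a *= 3

# Ufuncs accept an out argument that writes the result into an existing array.
np.add(a, b, out=a)

address_after = a.__array_interface__["data"][0]
print(address_before == address_after)  # True
print(a[0])  # 5.0
```

In-place updates overwrite the original values, so they are only appropriate when the old contents are no longer needed.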

Using Memory Mapping for Large Files

When working with datasets too large to fit into RAM, memory mapping lets NumPy read only the required portions of a file from disk on demand, preventing excessive memory usage.

Memory mapping is particularly useful in machine learning and big data applications, where reading entire datasets into RAM is impractical.
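A minimal sketch of memory mapping, assuming the data is stored in a `.npy` file: passing `mmap_mode="r"` to `np.load` returns a memory-mapped array instead of reading the whole file. The file name and temporary directory here are hypothetical, and the file is kept small so the example runs quickly.

```python
import os
import shutil
import tempfile

import numpy as np

# Hypothetical file in a temporary directory, standing in for a large dataset.
tmp_dir = tempfile.mkdtemp()
path = os.path.join(tmp_dir, "big_array.npy")
np.save(path, np.arange(1_000_000, dtype=np.float32))

# Open the file as a read-only memory map; the data is not loaded up front.
mapped = np.load(path, mmap_mode="r")
mapped_type = type(mapped).__name__

# Only the slice that is actually accessed is read from disk.
chunk = mapped[1000:1010]
total = float(chunk.sum())

print(mapped_type)  # memmap
print(total)        # 10045.0

# Release the mapping before removing the temporary files.
del chunk, mapped
shutil.rmtree(tmp_dir, ignore_errors=True)
```

For raw binary files without the `.npy` header, `np.memmap` can be used instead, with the dtype and shape supplied explicitly.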

Optimizing Sparse Matrices for Memory Efficiency

When working with large matrices that mostly contain zeros, using sparse matrices (provided by SciPy's scipy.sparse module rather than by NumPy itself) instead of normal NumPy arrays can be very beneficial. Sparse matrices only store non-zero values and their locations, which saves a significant amount of memory compared to regular arrays that store every single value, including zeros. This makes sparse matrices ideal for machine learning, graph algorithms, or any task where conserving memory and handling large data efficiently is important.

Why Sparse Matrices are Useful

- Dense matrices consume more memory as they store every value, including zeros.

- Sparse matrices, on the other hand, optimize memory by storing only non-zero values.

- A sparse representation captures only the non-zero elements and their positions, which is far more efficient for handling large datasets with numerous zeros.
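The points above can be sketched with SciPy's compressed sparse row (CSR) format. This example assumes SciPy is installed; the matrix shape and the three non-zero entries are made up for illustration.

```python
import numpy as np
from scipy import sparse

# Hypothetical 1000 x 1000 matrix with only three non-zero entries.
rows = np.array([0, 10, 999])
cols = np.array([1, 20, 999])
vals = np.array([3.0, 4.5, 1.0])

# Build the matrix directly in sparse form, without allocating the dense array.
csr = sparse.csr_matrix((vals, (rows, cols)), shape=(1000, 1000))

# Memory a dense float64 array of the same shape would need.
dense_bytes = 1000 * 1000 * np.dtype(np.float64).itemsize

# Memory used by the three arrays that make up the CSR representation.
sparse_bytes = csr.data.nbytes + csr.indices.nbytes + csr.indptr.nbytes

print(csr.nnz)                     # 3
print(dense_bytes)                 # 8000000
print(sparse_bytes < dense_bytes)  # True
print(csr[10, 20])                 # 4.5
```

The savings shrink as the matrix fills up: once a large share of the entries are non-zero, the index arrays make the sparse form larger than the dense one.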

Using NumPy’s Structured Arrays for Efficient Storage

Structured arrays allow efficient storage of mixed data types, such as integers, floats, and strings, within a single array. They reduce memory overhead compared to using standard Python objects by storing data in a compact, low-level format. This makes them particularly useful for handling large datasets or performing computations on heterogeneous data efficiently.
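As a brief sketch, a structured dtype names each field and fixes its size, so every record occupies a known number of bytes. The field names and sample values below are hypothetical.

```python
import numpy as np

# Hypothetical record layout: 4-byte int, 4-byte float, 10-byte fixed-width string.
record_dtype = np.dtype([
    ("id", np.int32),
    ("score", np.float32),
    ("name", "S10"),
])

records = np.zeros(3, dtype=record_dtype)
records["id"] = [1, 2, 3]
records["score"] = [9.5, 7.25, 8.0]
records["name"] = [b"ada", b"grace", b"linus"]

# Each record is packed into 4 + 4 + 10 bytes.
print(record_dtype.itemsize)    # 18
print(records.nbytes)           # 54

# Fields can be accessed by name and used like ordinary arrays.
print(records["score"].mean())  # 8.25
```

Fixed-width string fields truncate longer values, so the field width should be chosen to cover the longest expected entry.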

Conclusion

Optimizing memory usage in NumPy arrays can significantly enhance performance, especially when dealing with large datasets. You can achieve this by selecting the right data types, using views and in-place operations instead of making copies, taking advantage of memory mapping, applying sparse matrices, and storing mixed data in structured arrays.
