In today’s era of big data and artificial intelligence (AI), enterprises are under pressure to extract meaningful business insights from their vast data collections. Traditional AI models often fall short in processing enterprise-specific information effectively. The Retrieval-Augmented Generation (RAG) system is transforming enterprise data management by delivering context-driven results tailored to business needs. This article delves into how RAG functions, its benefits, and its applications in maximizing enterprise data potential.

The Need for RAG in Enterprises

Daily, enterprises gather an enormous amount of data, including customer records, financial transactions, and market research reports. Effective data mining poses challenges due to:

- Information fragmentation across multiple systems and diverse file formats.

- Standard large language models (LLMs) rely on static training data that may not contain current or relevant business data.

- Generic AI models tend to hallucinate when a question requires domain-specific knowledge they lack.

RAG technology enables enterprise knowledge management by allowing LLMs to retrieve relevant information from external sources before generating output. This ensures accurate results with enterprise-specific context.

How RAG Works

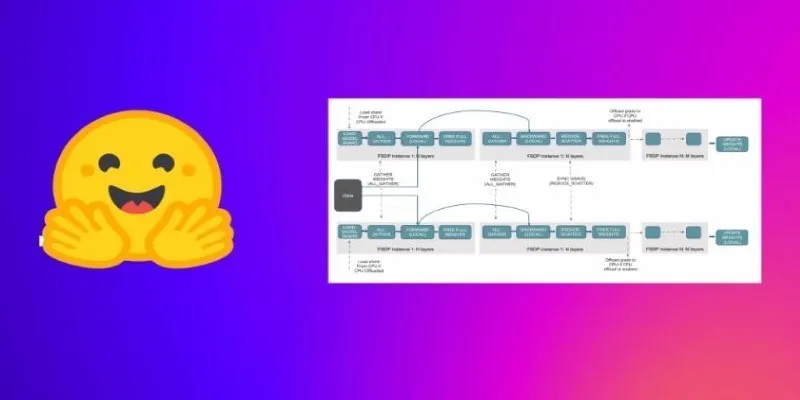

RAG uses a three-step process of retrieval, augmentation, and generation to deliver precise results:

1. Information Retrieval

When a user submits a query, the retrieval model searches databases or document repositories for matching content. Sources include:

- Proprietary enterprise databases

- Publicly available datasets

- APIs or real-time feeds

The system encodes the stored content and the incoming query as vectors in a shared embedding space, so that matching reduces to an efficient similarity search between vectors.
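The matching step can be illustrated with a minimal sketch. It assumes a toy bag-of-words "embedding" and cosine similarity as stand-ins for a learned embedding model and a vector database; the function names (`embed`, `cosine`, `retrieve`) and the sample documents are hypothetical and chosen only for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a sparse term-count vector (stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents ranked by similarity to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:top_k]


# Hypothetical sample corpus.
documents = [
    "Refund policy: customers may return devices within 30 days.",
    "Router troubleshooting: restart the router and check the cable.",
    "Quarterly earnings report for the finance team.",
]
top = retrieve("How do I restart my router?", documents, top_k=1)
```

In a production system the term counts would typically be replaced by dense vectors from an embedding model, and the linear scan by an approximate nearest-neighbor index.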

2. Augmentation of LLM Prompts

The relevant data is added to the user’s original query, providing contextual input for the LLM. This process ensures the generative model has up-to-date domain-related data.
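A minimal sketch of this augmentation step follows. The prompt wording and the helper name `build_augmented_prompt` are assumptions made for illustration; real deployments usually tune the template to the model in use.

```python
def build_augmented_prompt(query: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user's query into one prompt string."""
    # Number each passage so the model (and a reviewer) can refer back to it.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\n"
        "Answer:"
    )


# Hypothetical query and retrieved passage.
prompt = build_augmented_prompt(
    "What is the return window?",
    ["Refund policy: customers may return devices within 30 days."],
)
```

Numbering the passages is one possible design choice; it makes it easier to ask the model to cite which passage supports its answer.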

3. Response Generation

The LLM generates a response using both its pre-trained information and the contextual data retrieved. This approach yields more accurate and relevant outcomes compared to standalone LLMs.
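The three steps can be tied together in a short sketch. It assumes the retriever and the model are passed in as plain callables; `answer_with_rag`, `fake_retrieve`, and `fake_generate` are hypothetical names, and the stub generator only echoes context so that the example runs without any external service.

```python
from typing import Callable


def answer_with_rag(
    query: str,
    retrieve: Callable[[str], list[str]],
    generate: Callable[[str], str],
) -> str:
    """Retrieve context, build the augmented prompt, and delegate to the model."""
    passages = retrieve(query)
    context = "\n".join(passages)
    prompt = f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    return generate(prompt)


def fake_retrieve(query: str) -> list[str]:
    # Stand-in for a real retriever; always returns one fixed passage.
    return ["Support hours are 9:00 to 17:00 on weekdays."]


def fake_generate(prompt: str) -> str:
    # A real LLM call would go here; this stub returns the first context line.
    return prompt.splitlines()[1]


reply = answer_with_rag("When is support open?", fake_retrieve, fake_generate)
```

Keeping the retriever and generator behind simple function interfaces lets either one be swapped out without touching the rest of the pipeline.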

Benefits of RAG for Enterprises

RAG connects static AI systems with dynamic enterprise information sources, offering several advantages:

1. Improved Accuracy

RAG improves response accuracy by grounding outputs in retrieved source material, so answers can be checked against both factual and enterprise-specific references.

2. Real-Time Insights

RAG’s ability to access real-time data makes it ideal for applications needing up-to-date information, such as tracking market trends or regulatory changes.

3. Enhanced Personalization

RAG allows enterprises to provide personalized responses by using knowledge base information tailored to individual users, enhancing customer service and e-commerce experiences.

4. Cost Efficiency

By leveraging existing knowledge bases, RAG reduces the need to assemble expensive proprietary training datasets or retrain models whenever the underlying information changes.

5. Scalability Across Use Cases

RAG’s modular design facilitates application across various enterprise scenarios without significant infrastructure changes.

Applications of RAG in Enterprises

RAG is a versatile solution applicable across different sectors, including:

1. Customer Support Automation

Enterprises can deploy RAG-powered chatbots to answer complex customer inquiries using real-time retrieval from FAQs, product manuals, or ticket records. For instance:

- Telecom companies use RAG to offer instant device troubleshooting through user guide databases specific to each device.

2. Financial Analysis

Investment firms utilize RAG to process market reports, earnings calls, and historical data, streamlining portfolio management and supporting investment decisions.

3. Healthcare Applications

Hospitals leverage RAG to access patient information, clinical protocols, and pharmacological databases, aiding in medical recommendations and physician summary reports.

4. E-Commerce Personalization

Retail operations enhance customer satisfaction with RAG systems by suggesting products based on customer preferences and real-time stock levels.

5. Legal Document Review

RAG expedites the review of legal documents and case law, providing quick retrieval, automatic summaries, and clause identification for ongoing court cases.

Challenges in Implementing RAG

Implementing RAG presents several challenges:

- Real-time data integration requires robust infrastructure and governance to unify different data sources.

- Optimizing real-time retrieval speed is crucial to avoid performance delays.

- Organizations must ensure data security through encryption and access restrictions.

- Proper prompt engineering is essential to align the model’s generative capabilities with retrieved information.
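The access-restriction point can be sketched as a filter applied before retrieval, so that restricted documents never reach the prompt. This is a minimal illustration under assumed names: the `Document` class, its `allowed_roles` field, and the role labels are hypothetical, not part of any specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    text: str
    allowed_roles: frozenset[str]


def visible_documents(docs: list[Document], user_roles: set[str]) -> list[Document]:
    """Keep only documents that share at least one role with the user."""
    return [d for d in docs if d.allowed_roles & user_roles]


# Hypothetical corpus with per-document role restrictions.
docs = [
    Document("Public product FAQ", frozenset({"employee", "contractor"})),
    Document("Unreleased earnings draft", frozenset({"finance"})),
]
visible = visible_documents(docs, {"contractor"})
```

Filtering before retrieval, rather than after generation, means the model never sees content the user is not entitled to read.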

Future Trends in RAG Technology

Several trends are shaping the future of RAG technology as more enterprises adopt it:

- Hybrid models combining RAG with reinforcement learning will create context-sensitive platforms for various business tasks.

- Advancements in vector search algorithms will significantly reduce execution times.

- Enterprises will gain greater control over data prioritization and system behavior under specific operational conditions.

- Specialized RAG solutions will emerge for different industries as adoption expands.

Conclusion

Retrieval-Augmented Generation is a groundbreaking approach that empowers enterprises to extract valuable insights from extensive data collections, facilitating strategic decision-making. By merging retrieval methods with LLM capabilities, RAG systems enhance accuracy, personalization, and efficiency. In a competitive landscape, adopting RAG technology is crucial for driving innovation and maximizing enterprise data value. Early adopters will harness its transformative power across industries, from finance to healthcare.

For further reading on the impact of AI in enterprise solutions, consider checking authoritative sources like [Gartner’s insights on AI](https://www.gartner.com/en/information-technology/insights/artificial-intelligence).
