Static embedding models have been a mainstay for years, offering a dependable way to turn text into vectors. Speed, however, has always been an issue. When you build search tools, recommendation systems, or classification models, training embeddings can be slow, especially on datasets with millions of examples. Enter Sentence Transformers, which offer a remarkable boost in training speed.

With careful setup and adjustments, you can now train your static embedding models up to 400 times faster than traditional methods. This is not merely a matter of saving a few seconds; it’s about transforming days into mere hours, making these models far more practical for real-world applications.

The Importance of Static Embeddings

Despite the emergence of large language models that generate dynamic, context-dependent embeddings, static embedding models remain highly relevant. They are faster at inference, cheaper to run, and easier to scale. Once trained, they can be stored and reused without a heavyweight model running in the background. This makes them a strong fit for environments with limited resources or strict latency requirements, such as mobile apps, search indexing, or recommendation engines.
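To make "static" concrete, here is a minimal sketch of one common kind of static model, assuming a hypothetical lookup table of token vectors and simple whitespace tokenization (real models use learned subword vocabularies and hundreds of dimensions). Encoding a text is a dictionary lookup followed by an average; no neural network runs at this stage.

```python
# Hypothetical toy vocabulary: each token maps to a fixed 3-dimensional vector.
# Real static models learn these vectors and use many more dimensions.
STATIC_TABLE = {
    "fast": [1.0, 0.0, 0.0],
    "search": [0.0, 1.0, 0.0],
    "engine": [0.0, 0.0, 1.0],
}


def embed(text, table=STATIC_TABLE):
    """Embed text by averaging the stored vector of each known token.

    Encoding is only a lookup plus a mean. Unknown tokens are skipped,
    and a text with no known tokens gets an all-zero vector.
    """
    dim = len(next(iter(table.values())))
    vectors = [table[tok] for tok in text.lower().split() if tok in table]
    if not vectors:
        return [0.0] * dim
    # Average each dimension across the looked-up token vectors.
    return [sum(column) / len(vectors) for column in zip(*vectors)]


print(embed("fast search"))  # [0.5, 0.5, 0.0]
```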

Traditional methods for training these embeddings, such as Word2Vec or GloVe, rely on unsupervised learning from word co-occurrence statistics. While these approaches have their merits, they learn word-level vectors rather than optimizing for sentence-level similarity, so averaged word vectors often capture sentence meaning poorly, and training them on large corpora can take a long time. Sentence Transformers, originally developed to produce dynamic embeddings for tasks such as semantic search and similarity, now offer a faster route to training static embeddings.
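As a rough illustration of what "co-occurrence statistics" means, the sketch below counts how often word pairs appear within a fixed window of each other. It is a simplified, unweighted version of the statistic GloVe is fit to (GloVe also down-weights distant pairs); the window size and whitespace tokenization are assumptions.

```python
from collections import Counter


def cooccurrence_counts(sentences, window=2):
    """Count ordered (center, context) word pairs within `window` tokens.

    Simplified: every pair inside the window counts as 1, regardless of
    how far apart the two words are.
    """
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, center in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:  # a word does not co-occur with itself
                    counts[(center, tokens[j])] += 1
    return counts


corpus = ["the cat sat", "the cat ran"]
counts = cooccurrence_counts(corpus, window=1)
print(counts[("the", "cat")])  # 2
```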

How Sentence Transformers Speed Up the Process

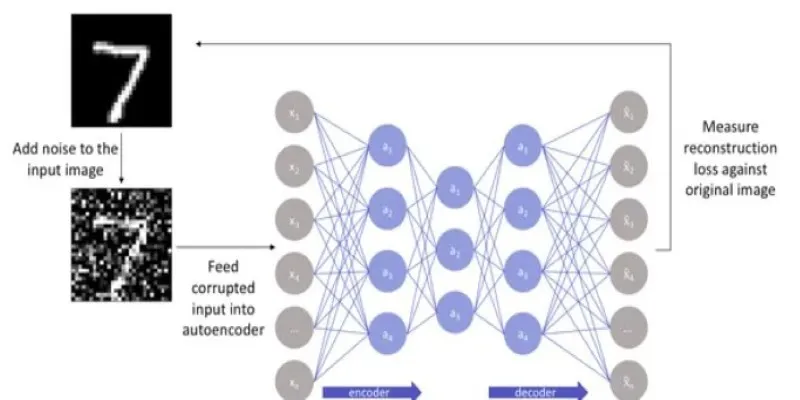

Sentence Transformers are built on pre-trained transformer models such as BERT and RoBERTa, fine-tuned for sentence-level understanding. The distinguishing factor is their training architecture: a Siamese or triplet network passes each sentence through the same encoder with shared weights, so pairs or triplets of sentences are embedded independently and then compared. The result is a model that maps any text to a fixed-length vector, and two texts can be compared with a simple measure such as cosine similarity. Those vectors can then be stored and reused as static embeddings.
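The key property of the Siamese setup is that both texts go through the same encoder and are compared only afterwards. The sketch below shows that shape; `encode` is a hypothetical toy stand-in (a bag-of-letters counter), not a real Sentence Transformer, which would use a fine-tuned transformer plus pooling.

```python
import math


def encode(text):
    """Hypothetical toy encoder: counts letters a-z into a 26-d vector."""
    vec = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            vec[ord(ch) - ord("a")] += 1.0
    return vec


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def siamese_similarity(text_a, text_b, encoder=encode):
    # Both inputs pass through the *same* encoder (shared weights),
    # so each text is embedded independently and compared afterwards.
    return cosine(encoder(text_a), encoder(text_b))


print(siamese_similarity("abc", "xyz"))  # 0.0
```

Because each text is embedded independently, a corpus can be encoded once and its vectors reused for every later comparison, which is what makes this architecture practical for search.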

The acceleration comes from several factors. First, Sentence Transformers support batched, GPU-accelerated encoding, so thousands of sentences can be processed in parallel, which drastically reduces time compared with older, CPU-bound pipelines. Second, a pooling step, such as mean pooling or CLS-token extraction, collapses the transformer's per-token outputs into a single fixed-length vector per text. Pooling does not skip the attention computation inside the transformer, but it means each text is stored as one compact vector that can be compared with a cheap dot product.
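Mean pooling and CLS pooling are simple operations applied after the transformer has already produced one vector per token. The sketch below assumes those per-token vectors are given (hypothetical numbers here) and shows both strategies, using the attention mask so that padding positions are ignored in the mean.

```python
def mean_pool(token_embeddings, attention_mask):
    """Average token vectors, ignoring padding positions (mask == 0).

    token_embeddings: per-token vectors for one text (seq_len x dim)
    attention_mask:   0/1 flags, 1 for real tokens, 0 for padding
    """
    dim = len(token_embeddings[0])
    total = [0.0] * dim
    count = 0
    for vec, keep in zip(token_embeddings, attention_mask):
        if keep:
            for k in range(dim):
                total[k] += vec[k]
            count += 1
    count = max(count, 1)  # avoid division by zero for all-padding input
    return [t / count for t in total]


def cls_pool(token_embeddings):
    """CLS pooling: take the vector at the first position."""
    return list(token_embeddings[0])


tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]  # last row is padding
print(mean_pool(tokens, [1, 1, 0]))  # [2.0, 3.0]
```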

However, the most significant advantage comes when you omit the fine-tuning process altogether. By using pre-trained Sentence Transformer models, you can generate embeddings on the fly without retraining anything. But if domain-specific static embeddings are required, the fine-tuning process can still be done much quicker, thanks to the use of contrastive learning with smart sampling techniques.

For instance, when training a model to distinguish between similar and dissimilar texts, instead of feeding in random sentence pairs, you can generate hard negatives using a semantic search over the dataset. This results in the model learning more useful distinctions per training step, reducing the time required to achieve a high level of performance.
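Hard-negative mining by semantic search can be sketched as follows, assuming the anchor and the corpus have already been embedded (the vectors here are hypothetical). For each anchor, the corpus item most similar to it, other than its labelled positive, becomes the hard negative.

```python
import math


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mine_hard_negative(anchor_vec, positive_idx, corpus_vecs):
    """Return the index of the corpus item most similar to the anchor,
    excluding the known positive at `positive_idx`."""
    best_idx, best_score = None, -math.inf
    for idx, vec in enumerate(corpus_vecs):
        if idx == positive_idx:
            continue
        score = cosine(anchor_vec, vec)
        if score > best_score:
            best_idx, best_score = idx, score
    return best_idx


corpus = [[1.0, 0.1], [0.9, 0.3], [0.0, 1.0]]  # index 0 is the positive
print(mine_hard_negative([1.0, 0.0], 0, corpus))  # 1
```

In practice it is common to also skip candidates that score almost as high as the positive, since they may be unlabelled true matches rather than genuine negatives.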

Creating Your Fast Static Embeddings

To get started, you’ll need a well-defined dataset that reflects the types of text you’ll be embedding. This could be product descriptions, FAQs, or legal documents, depending on your use case. From there, you can fine-tune a Sentence Transformer model using contrastive loss. This trains the model to draw similar sentences together in vector space and push dissimilar ones apart.
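One classic formulation of a contrastive loss penalizes similar pairs by their squared distance and dissimilar pairs only when they fall inside a margin. This is a generic sketch, not any library's exact implementation; the Euclidean distance and `margin=1.0` are assumptions, and library versions may use a different distance (such as cosine distance) or scaling.

```python
import math


def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def contrastive_loss(u, v, is_similar, margin=1.0):
    """Margin-based contrastive loss for one pair of embeddings.

    Similar pairs are penalized by their squared distance (pulled together).
    Dissimilar pairs are penalized only while closer than `margin`
    (pushed apart until they clear it).
    """
    d = euclidean(u, v)
    if is_similar:
        return d ** 2
    return max(0.0, margin - d) ** 2


print(contrastive_loss([0.0, 0.0], [3.0, 4.0], True))  # 25.0
```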

If speed is your primary objective, avoid training from scratch. Begin with a pre-trained Sentence Transformer, such as all-MiniLM-L6-v2 or paraphrase-MiniLM-L3-v2. These are compact, efficient models with solid general-purpose performance. Utilize a GPU to fully exploit parallelism. Set up your training with mixed precision to reduce memory usage and increase throughput.

During training, sample your positive and negative pairs carefully. Hard negatives, which are semantically close but contextually incorrect, teach the model faster than random negatives. The sentence-transformers library includes built-in tooling for mining hard negatives from your dataset (for example, `mine_hard_negatives` in `sentence_transformers.util` in recent versions). With a compact pre-trained model and a modest dataset, fine-tuning can often finish in minutes rather than hours or days.
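To see why hard negatives teach faster, consider an InfoNCE-style ranking loss. This is a swapped-in illustration, similar in spirit to sentence-transformers' `MultipleNegativesRankingLoss` but not a reproduction of it: the loss is the cross-entropy of picking the positive among the candidates. The vectors and the `scale` value are assumptions.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def ranking_loss(anchor, positive, negatives, scale=20.0):
    """Cross-entropy of selecting `positive` among [positive] + negatives.

    Scores are scaled cosine similarities; the loss equals
    logsumexp(scores) - score_of_positive, computed in a stable way.
    """
    scores = [scale * cosine(anchor, c) for c in [positive] + list(negatives)]
    m = max(scores)
    log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scores))
    return log_sum_exp - scores[0]


anchor = [1.0, 0.0]
positive = [1.0, 0.1]
hard_negative = [1.0, 0.2]  # close to the anchor, but wrong
easy_negative = [0.0, 1.0]  # clearly unrelated

print(ranking_loss(anchor, positive, [hard_negative]))
print(ranking_loss(anchor, positive, [easy_negative]))
```

The easy negative produces a loss near zero, and therefore almost no gradient to learn from, while the hard negative keeps the loss high, so each training step carries more signal.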

Once training is complete, freeze the model and use it to generate embeddings for your corpus. These vectors can be stored in a database or indexed with approximate nearest neighbor libraries such as FAISS or ScaNN for efficient retrieval. Because the document embeddings are precomputed, you don't need to re-run the model over your corpus at query time; you only need it to encode incoming queries or newly added documents.
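FAISS and ScaNN speed up search with approximate index structures. The sketch below is an exact, brute-force stand-in that shows the same contract: store normalized vectors once, then rank them by cosine similarity against a query vector. The `VectorIndex` class is hypothetical, and in a real system the query vector would still be produced by the trained model.

```python
import heapq
import math


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else list(v)


class VectorIndex:
    """Hypothetical exact (brute-force) cosine-similarity index."""

    def __init__(self):
        self.ids = []
        self.vectors = []

    def add(self, doc_id, vector):
        # Normalize once at insert time so search is a plain dot product.
        self.ids.append(doc_id)
        self.vectors.append(normalize(vector))

    def search(self, query, k=3):
        q = normalize(query)
        scored = (
            (sum(a * b for a, b in zip(q, v)), doc_id)
            for doc_id, v in zip(self.ids, self.vectors)
        )
        return [doc_id for _, doc_id in heapq.nlargest(k, scored)]


index = VectorIndex()
index.add("a", [1.0, 0.0])
index.add("b", [0.0, 1.0])
index.add("c", [0.7, 0.7])
print(index.search([1.0, 0.1], k=2))  # ['a', 'c']
```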

Applications and Trade-offs

Training static embeddings this way paves the way for faster, cheaper, and more scalable NLP systems. You can use these embeddings for semantic search, duplicate detection, clustering, or as features in downstream machine learning models. And since they’re generated by a fine-tuned Sentence Transformer, they’re rich in contextual understanding.

However, there are trade-offs. Static embeddings are fixed at the time of training. If new data comes in, you either need to retrain or accept some quality degradation. They’re also not as adept at capturing nuances from complex contexts as dynamic embeddings are. But for many applications—especially those needing fast, repeatable, and scalable text processing—these limitations are worth the compromise.
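For a static model that averages token vectors (an assumption about the model type; the vectors below are hypothetical), the loss of nuance is easy to demonstrate: word order disappears entirely, so two sentences built from the same words receive identical embeddings. A contextual transformer model would generally tell them apart.

```python
# Hypothetical 2-d vectors for a three-word vocabulary.
TABLE = {"dog": [1.0, 0.0], "bites": [0.0, 1.0], "man": [0.5, 0.5]}


def static_embed(text):
    """Average the fixed vector of each word; order plays no role."""
    vectors = [TABLE[t] for t in text.split()]
    return [sum(c) / len(vectors) for c in zip(*vectors)]


a = static_embed("dog bites man")
b = static_embed("man bites dog")
print(a == b)  # True: averaging discards word order
```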

Another factor to consider is interpretability. Because these embeddings come from deep neural networks, they’re not as transparent as traditional count-based vectors. However, this is a common issue across modern NLP, and tools are emerging to help understand and visualize embedding spaces.

Fast sentence embeddings are part of a broader shift toward embedding efficiency. As demand grows for quick, reliable text understanding, they are becoming a popular solution. Whether you're dealing with millions of support tickets or organizing internal documentation, this approach offers a practical way to scale.

Conclusion

Using Sentence Transformers to train static embedding models 400 times faster isn't just a technical curiosity; it changes what is practical for anyone working with text at scale. It lets teams build smarter systems without sinking days into training or running heavyweight models at inference. With the right tools and setup, you can create fast sentence embeddings that are accurate, reusable, and lightweight enough for large-scale applications. This shift brings us closer to real-time NLP with only a modest loss in quality, making it easier for developers and researchers to get more out of the text data they already have.
