The arena of vision-language models has experienced rapid expansion in recent years, with larger architectures leading the way. However, a unique trend is now taking shape. Instead of focusing on size, researchers are concentrating on the efficiency and performance of smaller models. SmolVLM, a forerunner in developing efficient open-source vision-language models, has pushed this concept a step further with the introduction of its 250M and 500M models.

Why Smaller Vision-Language Models are Gaining Traction

Often, the assumption is that larger AI models offer superior performance. Giants in the field, such as Flamingo and GPT-4V, boast billions of parameters, necessitating substantial computational resources and energy consumption. While these models deliver remarkable results, they are often inaccessible to smaller labs, independent researchers, and practical applications not requiring such extensive power.

This is where SmolVLM’s 250M and 500M vision-language models come in. The primary goal of SmolVLM is to develop efficient models capable of competitive multimodal reasoning, without the need for extensive infrastructure.

Spotlight on the 250M and 500M Models

The new SmolVLM models, available in 250 million and 500 million parameters, offer a significant reduction from the conventional billion-plus parameter range. This is not merely about reducing the size; the design focuses on performance and usability.

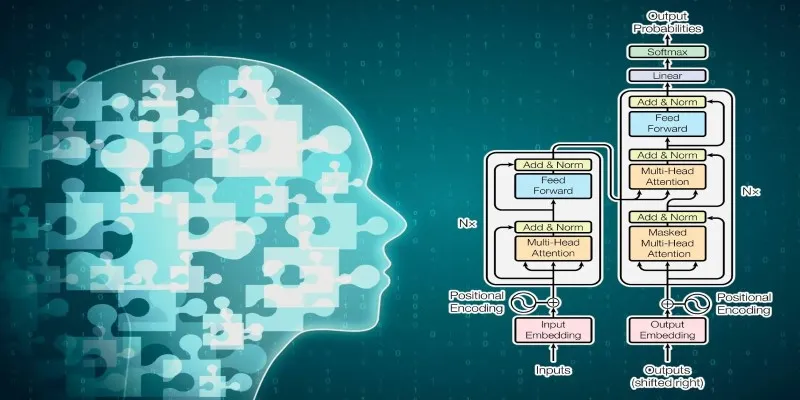

The models are built on well-known architectures like SigLIP for vision and Mistral for text. They efficiently process visual input and translate it into text, enabling tasks like image description and question answering.

Training Efficiency and Accessibility

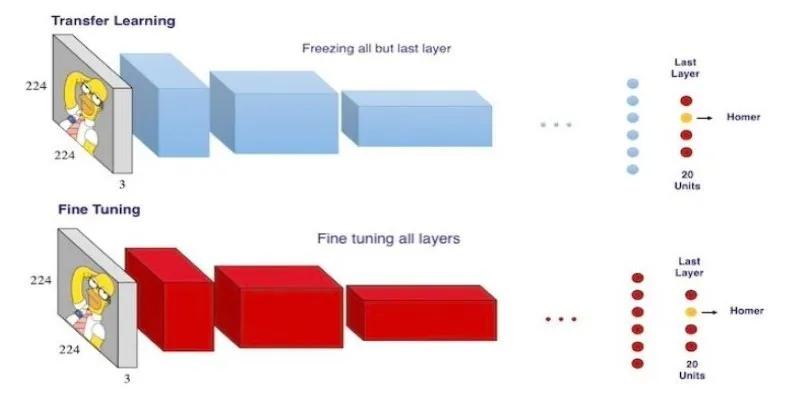

Smaller models come with their set of challenges. With fewer parameters, capturing and retaining nuanced patterns in data becomes more difficult. However, SmolVLM addressed this with a strategic setup using pre-trained encoders, a clean instruction-tuned dataset, and a balanced mix of vision-language benchmarks.

Both the 250M and 500M models are fully open-source, providing researchers, developers, and hobbyists the ability to inspect, modify, and deploy the models without reliance on closed APIs. This transparency allows for greater innovation and builds trust.

Future Direction

SmolVLM’s smaller models are not just a technical novelty; they signify a potential shift in the AI field. As models that can run outside large data centers become more appealing, the 250M and 500M versions represent a step towards a future where powerful, practical tools are light enough for everyday use.

The open-source nature of these models encourages experimentation. Developers can fine-tune the models for specific tasks or environments. There’s also potential for further size reduction through methods like quantization or pruning, further reducing memory requirements and inference time.

Conclusion

SmolVLM’s 250M and 500M models prove that vision-language AI does not have to be massive to be effective. These compact models deliver solid performance and faster responses, while requiring less hardware. Their open-source nature offers a practical solution for developers, researchers, and small teams working with limited resources. By shifting focus from scale to efficiency, SmolVLM is reshaping how we view AI development, highlighting a future where smarter, smaller models can do more with less.

zfn9

zfn9