Many envision AI training as a process of feeding vast datasets into models from scratch. In practice, much of today’s work takes a more efficient route: instead of starting from the ground up, developers reuse what a model has already learned, which makes training faster and more cost-effective. Two closely related techniques make this possible: transfer learning and fine-tuning, which let developers adapt pre-trained models to specific tasks without starting over.

Imagine a pianist learning the violin; foundational skills transfer smoothly despite the differences. Leveraging existing AI knowledge in this manner significantly streamlines development, enabling faster, practical, real-world applications. Ultimately, transfer learning makes AI more accessible and efficient by intelligently building on prior learning.

Understanding the Basics of Transfer Learning

Transfer learning is based on a simple idea: knowledge gained from solving one problem can be applied to another related problem. In AI, it involves taking an existing pre-trained model—a model that has already learned from a large dataset—and applying it to a new problem. Instead of starting from scratch, you use a model that already recognizes some patterns, such as shapes, colors, or grammar.

For instance, a model like BERT, trained on large volumes of English text, has already learned a great deal about how language is structured. Rather than retraining it from scratch for a specific task, such as answering customer questions in a help desk application, you begin with BERT and fine-tune it to fit the nuances of your domain. This saves time and resources, and it usually gives better results than training on a small domain dataset alone.

Think of it like hiring an experienced employee. While they may not know your company’s processes in detail, they already have relevant skills and knowledge. Pre-trained models offer this head start.

Choosing the right base model is crucial. If you’re working with text, models like GPT or RoBERTa are common starting points, while for image recognition, a model trained on a dataset like ImageNet is a better fit. The key is to match the model’s strengths with your task’s needs.

What Fine-Tuning Actually Involves

Fine-tuning involves taking a pre-trained model and further training it on your specific dataset. This process adjusts the model’s internal weights slightly, so it better suits your task. It’s like tailoring a suit—while the base model is already well-built, fine-tuning ensures it fits your needs perfectly.

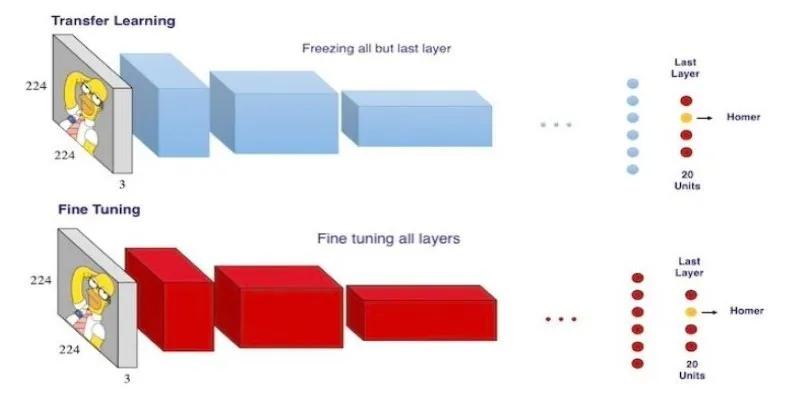

There are several methods for fine-tuning. In some cases, you freeze the early layers of the model (which capture general features) and train only the later layers on your dataset. This maintains the model’s foundational knowledge while allowing it to specialize in your problem. In other instances, you might choose to fine-tune the entire model, especially if your task significantly differs from the original.
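To make the freezing idea concrete, here is a minimal, framework-free sketch. It is a toy under stated assumptions, not how any particular library does it: `PRETRAINED_W`, `extract_features`, and `fine_tune_head` are hypothetical names, the "pre-trained" weights are made up for illustration, and a real project would use a deep learning framework instead of plain Python. The point is only that the early layer is never updated while the later layer (the "head") is trained on the new data.

```python
import math
import random

# Hypothetical "pre-trained" early layer: fixed weights mapping a 2-D input
# to two features. In a real model these would come from pre-training.
PRETRAINED_W = [[1.0, 1.0], [1.0, -1.0]]


def extract_features(x):
    """Frozen early layer: computed from PRETRAINED_W, never updated."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def predict(head, x):
    """Trainable later layer (the head): logistic regression on the features."""
    feats = extract_features(x)
    z = sum(w * f for w, f in zip(head["w"], feats)) + head["b"]
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(data, epochs=200, lr=0.5):
    """Train only the head with gradient descent; PRETRAINED_W stays untouched."""
    head = {"w": [0.0, 0.0], "b": 0.0}
    for _ in range(epochs):
        for x, y in data:
            feats = extract_features(x)
            error = predict(head, x) - y  # gradient of the log loss w.r.t. z
            head["w"] = [w - lr * error * f for w, f in zip(head["w"], feats)]
            head["b"] -= lr * error
    return head


# Toy task: the label is 1 when x0 + x1 > 0, which the first frozen feature
# already captures, so only the head needs to adapt.
random.seed(0)
points = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(40)]
data = [(p, 1 if p[0] + p[1] > 0 else 0) for p in points]

frozen_before = [row[:] for row in PRETRAINED_W]  # copy to compare afterwards
head = fine_tune_head(data)
accuracy = sum((predict(head, x) > 0.5) == bool(y) for x, y in data) / len(data)
```

Fine-tuning the entire model would correspond to also updating `PRETRAINED_W` in the training loop, usually with a smaller learning rate so the pre-trained knowledge is not overwritten too quickly.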

Fine-tuning is less resource-intensive than training a model from scratch. It reduces training time, cuts computational costs, and makes the process more accessible for smaller teams. Tools like Hugging Face Transformers, TensorFlow Hub, and PyTorch Lightning have made fine-tuning easier than ever.

The benefits go beyond convenience. On domain-specific tasks, fine-tuned models generally outperform models trained from scratch on the same limited data, because they already capture the fundamental patterns and need less effort to adapt to your unique needs.

Real-World Applications and Benefits

Transfer learning and fine-tuning are integral to many everyday tools. Voice assistants like Siri and Alexa use fine-tuned speech models to understand commands, while image recognition apps apply pre-trained vision models for tasks like document scanning and plant identification. AI writing assistants also rely on fine-tuned language models for tasks like grammar correction, translation, and customer communication.

This approach supports rapid prototyping and faster deployment cycles. For startups and researchers, the ability to reuse powerful models reduces both cost and risk. They can build custom solutions with limited data and test them in real-world settings without massive infrastructure.

In healthcare, a vision model trained on general images can be fine-tuned to detect early signs of disease in X-rays or CT scans. The model already knows how to identify shapes and contrasts; it just needs a push to focus on medically relevant features. This kind of adaptation can save lives and resources.

Education also benefits. Language models fine-tuned for grading essays or providing writing feedback can personalize learning experiences at scale. They take the heavy lifting off teachers while offering tailored support to students. Again, the base model provides the structure, and fine-tuning shapes it for a specific purpose.

The beauty of this process is that it democratizes AI development. You don’t need massive data centers or armies of researchers to build something smart. With a good base model and thoughtful fine-tuning, even a small team can produce highly competitive results.

Challenges and Best Practices to Keep in Mind

While transfer learning and fine-tuning offer clear advantages, several challenges need attention. First, data quality plays a crucial role. A pre-trained model is only as good as the data it’s exposed to during fine-tuning. If the dataset is biased, noisy, or insufficient, the model may misinterpret essential patterns or reinforce harmful biases, leading to poor generalization.
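A quick audit before fine-tuning can surface some of these problems early. The sketch below is only illustrative: `audit_dataset`, its `min_per_class` threshold, and the sample help desk examples are all hypothetical, and the checks (duplicates, conflicting labels, rare classes, majority share) are a small starting set, not a complete data-quality process.

```python
from collections import Counter


def audit_dataset(examples, min_per_class=2):
    """Report simple warning signs in a list of (text, label) pairs."""
    labels = Counter(label for _, label in examples)

    # Group labels by normalized text to find duplicates and conflicts.
    labels_by_text = {}
    counts_by_text = Counter()
    for text, label in examples:
        key = text.strip().lower()
        counts_by_text[key] += 1
        labels_by_text.setdefault(key, set()).add(label)

    return {
        # Same text appearing more than once (possible noise or leakage).
        "duplicates": sorted(t for t, n in counts_by_text.items() if n > 1),
        # Same text with different labels (noisy annotation).
        "conflicts": sorted(t for t, ls in labels_by_text.items() if len(ls) > 1),
        # Classes with too few examples to learn from (insufficient data).
        "rare_classes": sorted(l for l, n in labels.items() if n < min_per_class),
        # Share of the most common label (a rough imbalance signal).
        "majority_share": max(labels.values()) / len(examples) if examples else 0.0,
    }


# Made-up examples for illustration only.
examples = [
    ("Reset my password", "account"),
    ("reset my password ", "account"),
    ("Where is my refund?", "billing"),
    ("Where is my refund?", "account"),
    ("App crashes on launch", "bug"),
]
report = audit_dataset(examples)
```

Checks like these do not detect social or demographic bias in the data; that still calls for human review of what the examples contain and whom they represent.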

Overfitting is a second risk. It occurs when a model becomes too closely tailored to a small, specific dataset and loses the general understanding it gained in pre-training. Such a model may perform well on training data yet fail in real-world situations, so balancing general knowledge against task-specific tuning is essential.
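One common guard against overfitting is early stopping: track the loss on a held-out validation set and stop once it has not improved for a few epochs. The helper below is a minimal sketch of that rule; the function name, the `patience` and `min_delta` parameters, and the loss values are assumptions chosen for illustration, not taken from any specific library.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops at the first epoch where the loss has failed to improve
    by more than min_delta for `patience` consecutive epochs. If that never
    happens, stop_epoch is the last epoch.
    """
    best_loss = float("inf")
    best_epoch = -1
    stop_epoch = len(val_losses) - 1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch  # new best checkpoint
        elif epoch - best_epoch >= patience:
            stop_epoch = epoch  # no improvement for `patience` epochs
            break
    return best_epoch, stop_epoch


# Made-up validation losses: improvement until epoch 2, then degradation.
val_losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.71]
best_epoch, stop_epoch = early_stopping_epoch(val_losses, patience=2)
```

In practice you would keep the model weights saved at `best_epoch` and discard the later, overfitted ones.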

Understanding which parts of the model to fine-tune is also key. In deep neural networks, early layers capture general features, while later layers focus on task-specific ones. Knowing when to freeze certain layers can significantly affect performance.
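The choice of where to draw the line between frozen and trainable layers can be written down as a simple plan before any training starts. This is a hypothetical helper, and the layer names are invented for illustration; real frameworks expose their own mechanisms for marking parameters as trainable or frozen.

```python
def freeze_plan(layer_names, trainable_last_n):
    """Map each layer name to True (trainable) or False (frozen).

    Layers are assumed to be listed from earliest to latest; only the
    last `trainable_last_n` layers are left trainable.
    """
    if not 0 <= trainable_last_n <= len(layer_names):
        raise ValueError("trainable_last_n must be between 0 and the layer count")
    cutoff = len(layer_names) - trainable_last_n
    return {name: index >= cutoff for index, name in enumerate(layer_names)}


# Hypothetical layer names, ordered from general (early) to task-specific (late).
layers = ["embeddings", "block_1", "block_2", "block_3", "classifier"]
plan = freeze_plan(layers, trainable_last_n=2)
```

A reasonable way to use this is to start with a small `trainable_last_n`, check validation performance, and unfreeze more layers only if the model is not adapting well enough.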

Ethics are also important. Pre-trained models may have learned from biased or unfiltered data, which doesn’t automatically improve with fine-tuning. Developers must apply safeguards to ensure ethical output.

Emerging best practices, such as few-shot learning, regular validation, and contributions from open-source communities, are addressing these challenges and making transfer learning a more reliable approach for AI development.

Conclusion

Transfer learning and fine-tuning are powerful techniques that make AI development faster and more efficient by building on pre-trained models. However, challenges such as data quality, overfitting, and ethical concerns need careful attention. By understanding how to balance generalization with task-specific training, developers can unlock the full potential of these methods. As best practices continue to evolve, transfer learning will remain a crucial tool in creating smarter, more adaptable AI systems.
