As artificial intelligence becomes increasingly integrated into our personal and professional lives, the demand for accessible, private, and efficient tools has grown significantly. While cloud-based AI platforms like OpenAI’s ChatGPT are widely used for content generation, coding, and research assistance, they come with certain limitations, particularly regarding privacy, cost, and dependency on the internet.

This is where GPT4All steps in. GPT4All offers a local, open-source alternative to commercial AI tools, enabling users to run large language models (LLMs) directly on personal computers. Designed for accessibility, offline functionality, and transparency, GPT4All is emerging as a preferred solution for developers, researchers, and privacy-conscious users.

This post explores the key features of GPT4All and how its offline system works to provide a secure, efficient AI experience.

Popular Models Available in GPT4All

GPT4All supports a variety of models tailored to different needs. Some models are optimized for creative output, while others focus on concise answers or performance efficiency. Common choices include:

- GPT4All Falcon – Lightweight and commercially licensed.

- Groovy – Balanced performance with support for creative tasks.

- Orca – Trained on instruction data and licensed for commercial use.

- Snoozy – Offers deeper, context-rich responses but at slower speeds.

- Hermes and Vicuna – Instruction-tuned but with restrictions on commercial use.

Each model is labeled with its architecture type (e.g., LLaMA, Falcon), quantization level, and licensing limitations to help users make informed choices.

Key Features

Several distinct features set GPT4All apart from cloud-based solutions. These include:

1. Local Execution and Offline Access

One of GPT4All’s biggest strengths is its ability to run without an internet connection. Once installed, users can operate models entirely offline, allowing for AI integration in remote environments, secure facilities, or situations where internet access is unreliable or restricted.

2. Strong Data Privacy

Unlike cloud-based tools that process and store user input externally, GPT4All ensures that all data remains on the user’s machine. For businesses and researchers working with proprietary, legal, or personal information, this privacy-first design minimizes the risk of data exposure.

3. Cost Efficiency and Licensing Freedom

GPT4All is free to use and supports various models released under open-source licenses such as GPL-2, many of which allow commercial use and further development. There are no subscription fees or API limits, making it a budget-friendly option for independent developers and smaller organizations.

4. Portability Across Devices

Because its models are compact (generally 3 GB to 8 GB), GPT4All can be installed on a wide range of hardware, including mid-range laptops and desktops. It can even run from an external drive, extending its usability across different environments.

How GPT4All Works

GPT4All is designed to function as an on-device AI system, enabling users to download, store, and run large language models (LLMs) directly on their personal computers. Unlike cloud-based platforms that require constant internet access, GPT4All performs offline inference, meaning all processing happens locally without data being sent to external servers.

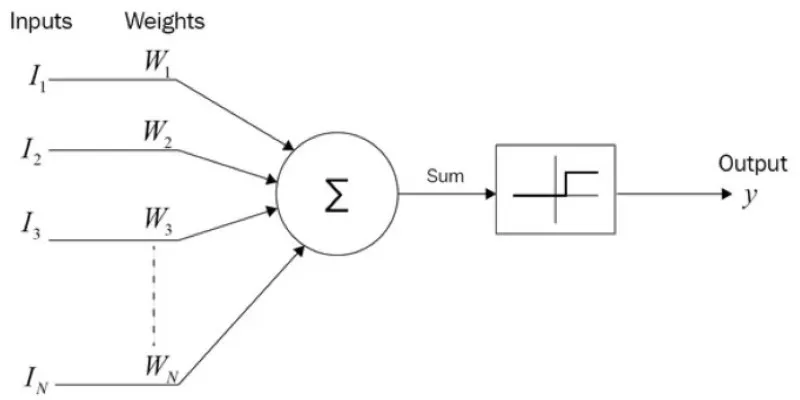

Quantization for Lightweight Performance

At the heart of GPT4All’s local functionality is model quantization. This process reduces the size and computational demands of an LLM by storing its weights at lower numerical precision, for example as 4-bit integers rather than 16- or 32-bit floating-point numbers. Instead of requiring high-end GPUs or 32 GB or more of RAM, GPT4All’s models can run on systems with 4 to 16 GB of RAM and a standard CPU.
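
Quantization itself can be sketched in a few lines. The example below assumes a simple symmetric scheme in which each block of weights is stored as small signed integers plus one shared floating-point scale. The function names are hypothetical, and production quantization formats differ in block size and memory layout, so treat this as an illustration of the idea rather than GPT4All's implementation.

```python
# Minimal sketch of symmetric block quantization (illustrative only; not
# GPT4All's actual storage format). Each block of float weights becomes a
# list of small signed integers plus one float scale factor.

def quantize_block(weights, bits=4):
    """Map floats to integers in [-qmax, qmax], where qmax = 2**(bits-1) - 1."""
    qmax = 2 ** (bits - 1) - 1  # 7 when bits == 4
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize_block(q, scale):
    """Recover approximate float weights from the integers and the block's scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.12, -0.53, 0.91, -0.07, 0.33, -0.88, 0.05, 0.64]
    q, scale = quantize_block(weights, bits=4)
    restored = dequantize_block(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("4-bit codes:", q)
    print("scale:", scale)
    print("max reconstruction error:", max_err)  # at most about scale / 2
```

Each weight now occupies 4 bits plus a small share of its block's scale, and rounding keeps the per-weight error within half the scale, which helps explain how quantized models can retain much of their quality.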

Quantized versions of model families such as LLaMA, GPT-J, Falcon, and MPT are commonly used within GPT4All. Scaled down to a few gigabytes, these models retain solid performance on general tasks while consuming far fewer system resources.
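
The reduction to a few gigabytes follows from simple arithmetic: weight storage is roughly the parameter count times the bits per weight, divided by 8. The sketch below assumes a hypothetical 7-billion-parameter model and ignores file metadata and any tensors kept at higher precision, so real files come out somewhat larger.

```python
# Rough estimate of weight storage for a model at different precisions.
# Assumption: size ~= parameters * bits per weight / 8. Real model files also
# contain metadata and may keep some tensors at higher precision.

def approx_size_gb(num_params, bits_per_weight):
    """Approximate weight storage in decimal gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    params = 7e9  # hypothetical 7-billion-parameter model
    for bits in (32, 16, 8, 4):
        print(f"{bits:>2}-bit weights: ~{approx_size_gb(params, bits):.1f} GB")
```

At 32 bits such a model needs about 28 GB for its weights alone; at 4 bits it needs about 3.5 GB, small enough for the mid-range hardware described above.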

CPU-Based Execution

Unlike many modern AI tools, GPT4All is optimized to run on CPUs alone. Users do not need specialized hardware such as GPUs to interact with a model, which makes GPT4All far more accessible to everyday users, small teams, and developers working on budget-friendly or legacy hardware.
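
One reason quantized models suit ordinary CPUs is that most of the arithmetic can stay in small integers. The sketch below is an illustration under that assumption, not GPT4All's actual kernels: it computes a dot product by multiplying integer codes and applying the two scale factors once at the end. The helper names are hypothetical, and real CPU kernels use SIMD instructions and per-block scales.

```python
# Sketch: dot product of two quantized vectors. The multiply-accumulate loop
# runs entirely on integers; floating point is used only for the final rescale.

def quantize(values, bits=8):
    """Symmetric quantization of a whole vector with a single scale factor."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [round(v / scale) for v in values], scale


def quantized_dot(qa, sa, qb, sb):
    """Integer multiply-accumulate, then one rescale by both scale factors."""
    acc = 0
    for x, y in zip(qa, qb):
        acc += x * y  # integer-only arithmetic
    return acc * sa * sb


if __name__ == "__main__":
    a = [0.5, -1.2, 0.8, 0.3]
    b = [1.0, -0.4, 0.6, 2.0]
    qa, sa = quantize(a)
    qb, sb = quantize(b)
    exact = sum(x * y for x, y in zip(a, b))
    approx = quantized_dot(qa, sa, qb, sb)
    print(f"exact={exact:.4f} approx={approx:.4f}")
```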

Performance depends on model size and system specifications: larger models and slower machines generate text more slowly. Still, for many tasks, such as generating text, summarizing content, or answering queries, the speed and accuracy remain practical for everyday use.
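
A widely used rule of thumb, which is an approximation and not a GPT4All benchmark, helps explain how model size affects speed: when generating text, each new token requires reading roughly all of the model's weights from RAM, so memory bandwidth divided by model size gives a rough ceiling on tokens per second. The bandwidth value below is a hypothetical example, and the function name is illustrative.

```python
# Rule-of-thumb upper bound for CPU token generation speed.
# Assumption: decoding is memory-bound and every generated token streams the
# full set of weights from RAM once. Real throughput is lower (compute limits,
# cache effects, prompt processing), so treat the result as a ceiling.

def max_tokens_per_second(model_size_gb, bandwidth_gb_per_s):
    """Upper bound: memory bandwidth / bytes of weights read per token."""
    if model_size_gb <= 0:
        raise ValueError("model size must be positive")
    return bandwidth_gb_per_s / model_size_gb


if __name__ == "__main__":
    bandwidth = 40.0  # hypothetical memory bandwidth in GB/s
    for size in (3.5, 7.0, 14.0):  # weight sizes in GB
        ceiling = max_tokens_per_second(size, bandwidth)
        print(f"{size:>5.1f} GB model: <= {ceiling:.1f} tokens/s")
```

Halving the model's size roughly doubles this ceiling, which is one more reason quantization matters for CPU-only use.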

Offline Inference and Data Privacy

GPT4All’s offline-first design means that, once installed, the tool operates independently of the internet. User data, queries, and model outputs never leave the device. This eliminates common privacy concerns associated with cloud-based AI services, where user data may be logged, stored, or analyzed remotely.

By ensuring that everything stays on local hardware, GPT4All appeals to professionals handling confidential data and to users who want to maintain control over their interactions with AI tools.

Atlas: Transparent Data Management

GPT4All is supported by Atlas, a data infrastructure developed by Nomic AI. Atlas serves as a vector database and visualization tool that maps the datasets used to train available models. It allows greater transparency into what data the models were trained on and how it was organized.
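
At its core, a vector database stores each item (for example, a training document) as an embedding vector and retrieves items by similarity. The sketch below shows brute-force cosine-similarity search over a few made-up vectors. It illustrates the general idea only: it does not use Atlas's API, the document names and vectors are hypothetical, and real systems use learned embeddings with hundreds of dimensions and approximate indexes.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def nearest(query, index, k=2):
    """Return the k (name, score) pairs most similar to the query, best first."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Hypothetical 3-dimensional embeddings for three documents.
    index = {
        "doc_code": [0.9, 0.1, 0.0],
        "doc_recipes": [0.0, 0.2, 0.9],
        "doc_python": [0.8, 0.3, 0.1],
    }
    print(nearest([1.0, 0.2, 0.0], index))
```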

In a field where many models rely on opaque datasets, this degree of openness helps users better understand model behavior and data quality—particularly useful for those interested in ethics, bias reduction, or academic research.

Simple Setup and Model Flexibility

Installing GPT4All is straightforward. A one-click installer is available across major operating systems, and users can choose from a variety of models based on size, performance, or licensing needs. Once set up, GPT4All functions independently, requiring no API keys, subscriptions, or online accounts.

Users can switch models at any time or store multiple models locally for different purposes. This flexibility contributes to GPT4All’s growing appeal as a lightweight yet capable AI platform for local use.
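
Keeping several models on disk makes it easy to pick one per task or per machine. As a hypothetical illustration (the file names, sizes, and 1.5x memory headroom factor are assumptions, not GPT4All defaults or real catalog entries), a script could choose the largest local model whose file comfortably fits in available RAM.

```python
# Hypothetical local catalog: file names and sizes (GB) are illustrative only.
LOCAL_MODELS = {
    "small-3b-q4.gguf": 1.9,
    "medium-7b-q4.gguf": 3.9,
    "large-13b-q4.gguf": 7.4,
}


def pick_model(available_ram_gb, catalog, headroom=1.5):
    """Return the largest model whose size * headroom fits in RAM, or None."""
    fitting = [
        (size, name)
        for name, size in catalog.items()
        if size * headroom <= available_ram_gb
    ]
    if not fitting:
        return None
    return max(fitting)[1]  # largest size wins


if __name__ == "__main__":
    for ram in (2, 8, 16):
        print(f"{ram} GB RAM -> {pick_model(ram, LOCAL_MODELS)}")
```

The headroom factor leaves memory for the operating system and the model's working state; a more careful version would measure free memory at runtime instead of taking it as a parameter.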

Conclusion

GPT4All is more than a way to run language models; it represents a shift toward accessible, transparent, and secure AI usage. In a landscape where data privacy and digital independence are becoming essential, GPT4All gives users control without giving up core functionality.

It may not yet rival the full capabilities of cloud-based platforms, but it serves as a powerful foundation for local AI applications. For developers, educators, small businesses, and privacy-minded users, GPT4All represents a practical, ethical, and cost-effective way to explore and benefit from language models.
