Artificial intelligence is rapidly evolving, with one significant shift being the ability to run large language models on your own machine. Concerns about privacy, control, and performance are driving more people towards local solutions. Enter LM Studio: a tool that simplifies setting up local LLMs with its clean, user-friendly interface.

No servers. No data sharing. Just powerful AI running directly on your device. This guide will show you how to run a large language model (LLM) locally using LM Studio, making local AI more accessible than ever before — even if you’re just starting out.

What Is LM Studio and Why Does It Matter for Local LLMs?

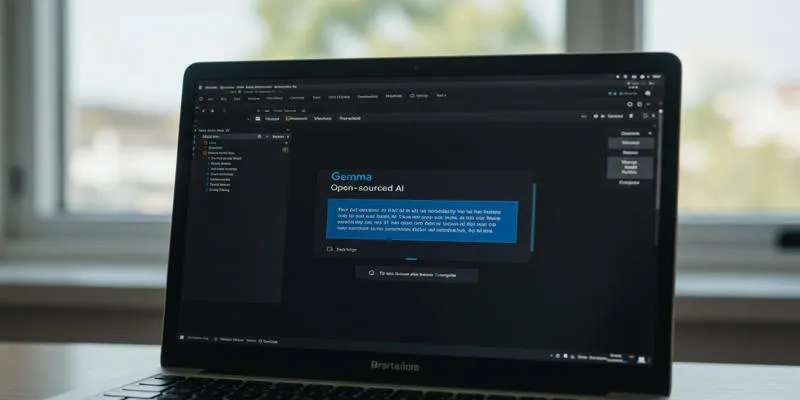

LM Studio is a desktop application designed to simplify the use of large language models (LLMs) for developers, researchers, and casual users. Its user-friendly interface allows you to download, run, and manage AI models directly on your local machine—no cloud access required. LM Studio’s accessibility makes it appealing; you don’t need to be an AI expert to start experimenting with powerful models.

Most people are familiar with LLMs through cloud platforms like ChatGPT or Claude. While convenient, these services raise concerns about privacy, cost, and dependency on internet access. Running an LLM locally addresses these issues by keeping everything on your device. Your data stays private, there’s no server lag, and you’re free from usage limits or monthly fees.

LM Studio supports a wide range of LLM architectures and can integrate into your workflows or apps. If you’re curious about AI or want more control over how you use it, LM Studio gives you the tools to build and experiment freely—without the need for a server farm.

System Requirements and Model Selection

Before diving into the technical steps, it’s important to ensure your system is ready to run an LLM. Running large language models locally requires decent hardware resources. The more powerful your hardware, the faster and smoother the experience.

Typically, LM Studio runs well on modern desktops or laptops with at least:

- A multi-core CPU (Intel i5/i7, AMD Ryzen)

- 16GB or more RAM

- A dedicated GPU for accelerated performance (optional but recommended)

- Adequate storage (models can range from a few GBs to over 30GB)
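
As a rough rule of thumb (an assumption for illustration, not a formula LM Studio publishes), the size of a model's weights is about its parameter count times the bits stored per weight, divided by eight. The sketch below applies that arithmetic. The function name and the example sizes are hypothetical, and real files run somewhat larger because of metadata, while the context cache needs extra memory at run time.

```python
def estimate_weight_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    # Illustrative model sizes and precisions, not a list of LM Studio offerings.
    for params in (7e9, 13e9, 70e9):
        for bits in (16, 8, 4):
            size = estimate_weight_size_gb(params, bits)
            print(f"{params / 1e9:.0f}B parameters at {bits}-bit: ~{size:.1f} GB")
```

By this estimate, a 7-billion-parameter model stored at 4 bits per weight needs about 3.5 GB, while a 70-billion-parameter model at 16 bits needs about 140 GB. This is why parameter count and precision matter more than the model's name when you check it against your RAM and storage.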

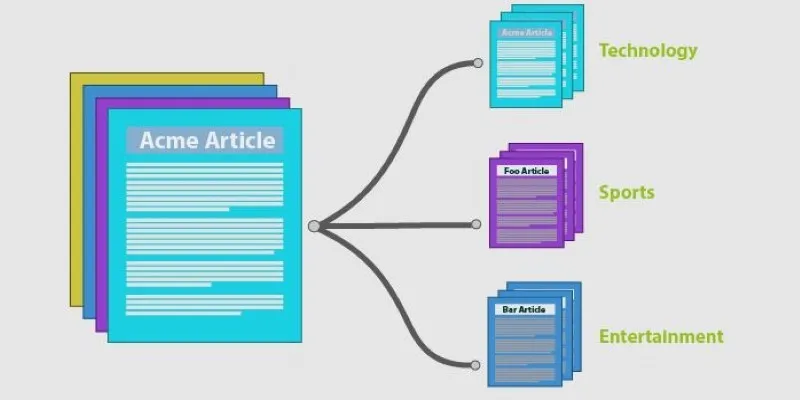

LM Studio allows users to choose from different LLM models based on their needs. Some models focus on general language generation, while others are fine-tuned for coding, summarization, or data analysis.

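For beginners, start with a small model, one with a few billion parameters, for basic tasks. Judge size by parameter count rather than by family name: GPT-J has about 6 billion parameters and GPT-NeoX about 20 billion, while families like Llama, Falcon, and MPT are each released in several sizes. If your hardware allows, moving up to a larger variant generally provides better output quality and deeper capabilities, at the cost of more memory and slower generation.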

Downloading models directly from LM Studio’s model hub is easy. The application provides descriptions, size estimates, and usage details for each model, so you can judge whether a model fits your machine before you download it.

Step-by-Step Guide to Running an LLM Locally Using LM Studio

Setting up LM Studio is designed to be simple, even for those with minimal technical experience. Once you’ve installed the application from its official website, the journey begins.

First, launch LM Studio and explore its clean and intuitive interface. You’ll see an option to browse available language models. Pick a model suitable for your machine’s resources. Once selected, LM Studio will automatically handle the downloading and installation process.

After the model is installed, you can interact with it using the built-in chat interface. Simply type a prompt, and the local LLM responds without sending data to the cloud. Because everything happens locally, there is no network round trip, and responses can be fast on machines with good hardware. On weaker hardware or with larger models, expect slower generation.

Advanced users may want to configure LM Studio’s settings for better control. Options include adjusting context size, controlling temperature (which affects creativity), and managing memory usage.
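
To make the temperature setting concrete, here is a minimal sketch of how temperature is commonly applied during sampling: the model's raw scores (logits) are divided by the temperature before being turned into probabilities. The logits and the function name are made up for illustration, and LM Studio's internal sampler may differ in detail.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
    for t in (0.2, 1.0, 2.0):
        probs = softmax_with_temperature(logits, t)
        print(t, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the top-scoring token, which gives more predictable output. Higher temperatures flatten the distribution, which gives more varied output.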

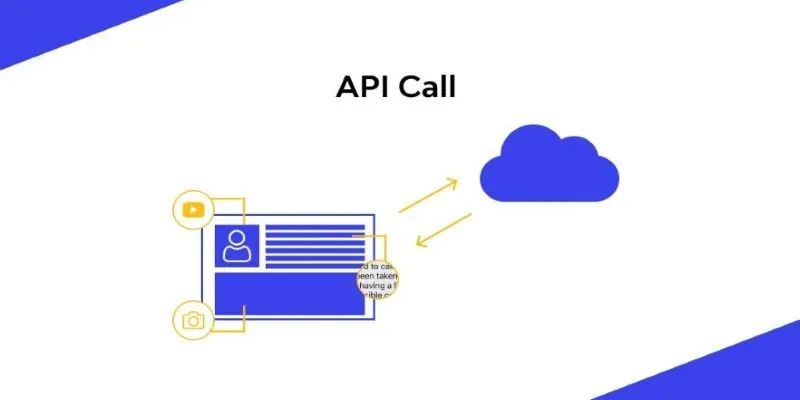

LM Studio’s plugin system sets it apart by allowing users to extend its capabilities. You can connect external APIs, automate repetitive tasks, and build tailored workflows. This transforms LM Studio from a simple interface into a flexible, local development hub for advanced AI applications.

For developers, LM Studio offers API endpoints for local applications to interact with the LLM. This means you can build chatbots, writing assistants, or data analysis tools that work entirely offline.
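
As a sketch of what such an integration might look like, the example below sends a chat request to LM Studio's local server using only the Python standard library. It assumes the server has been started in LM Studio, that it exposes an OpenAI-compatible endpoint at `http://localhost:1234/v1/chat/completions` (check the address shown in your server settings), and that a model is loaded. The model name `local-model` and the helper function names are placeholders, not part of LM Studio.

```python
import json
import urllib.request

# Assumed address of LM Studio's local server; adjust to match your settings.
BASE_URL = "http://localhost:1234/v1"


def build_chat_payload(prompt: str, model: str = "local-model",
                       temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat completion request body."""
    return {
        "model": model,  # placeholder; use the identifier shown in LM Studio
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def ask_local_llm(prompt: str, base_url: str = BASE_URL,
                  timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the reply text."""
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(build_chat_payload(prompt)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Requires the local server to be running with a model loaded.
    print(ask_local_llm("Explain what a context window is in one sentence."))
```

Because the request goes to `localhost`, the prompt and the reply never leave your machine. Keeping the payload builder separate from the network call also makes it easy to inspect or test the request without a running server.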

Benefits and Future of Running LLMs Locally Using LM Studio

As data privacy and offline functionality become increasingly important, more users are turning to local deployment of language models. Running an LLM locally using LM Studio offers a simple yet powerful solution that’s gaining traction across industries. It allows individuals and organizations to harness the capabilities of large language models without relying on cloud-based services.

One of the standout benefits is cost efficiency. Unlike hosted LLMs that charge based on API calls or monthly quotas, LM Studio enables unlimited usage after the initial download, with no metered access or per-request fees. Your ongoing costs are the hardware and electricity needed to run the model. Over time, this can translate to significant savings, especially for developers or businesses that rely on frequent interactions with language models.

Security is another major advantage. Because the model operates entirely on your device, prompts and responses are not sent to external servers, which removes that avenue for data leakage. Sensitive or proprietary information remains in your hands, making local models a good fit for sectors like healthcare, law, and finance.

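LM Studio also supports customization. You can set system prompts, adjust sampling and context settings, and tune how the model uses your hardware. LM Studio itself is an inference tool, so fine-tuning or training on your own data is typically done with separate tooling, after which the resulting model can be loaded and run locally. With constant improvements and new model support, the tool is only getting better.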

What used to require massive infrastructure can now run on a desktop. That’s not just a technical shift — it’s an empowering one.

Conclusion

Running an LLM locally using LM Studio shifts control back to the user. It’s not just about avoiding cloud costs; it’s about privacy, speed, and full ownership of your AI tools. LM Studio simplifies what used to be a complex process, making it easy to install and use powerful models right on your device. Whether you’re building apps or just exploring AI, this setup offers flexibility and peace of mind. Local AI is no longer a future trend. It’s here now.
