For years, the conversation around artificial intelligence has been dominated by large language models (LLMs) like GPT-3, GPT-4, and Google’s Gemini. These powerful systems, with billions or even trillions of parameters, have captured the imagination of the public and driven countless innovations. But a quiet shift is underway. A new class of models, known as Small Language Models (SLMs), is rapidly gaining ground, and they may just be the future of AI.

SLMs are leaner, faster, and more efficient, and they’re designed for the kind of real-world applications most people and businesses actually need. Let’s explore why SLMs are positioned to become the foundation of future AI solutions.

What Are Small Language Models?

Small Language Models are scaled-down AI models designed to perform many of the same tasks as LLMs—like generating text, answering questions, or summarizing content—but using a fraction of the computational power.

While there’s no universal standard for what parameter count defines an SLM, they typically have tens of millions to a few billion parameters, compared to hundreds of billions or more in LLMs. Models like Microsoft’s Phi-3 Mini, OpenAI’s GPT-4o Mini, the 8-billion-parameter version of Meta’s Llama 3, and Google’s Gemini Nano are recent examples of powerful SLMs. Despite their size, these models often achieve surprisingly strong results, especially when tailored to specific use cases.
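To make the size gap concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need at different numeric precisions. The function name `weight_memory_gb` is hypothetical, and the two parameter counts are simply the published sizes of Phi-3 Mini (3.8 billion) and GPT-3 (175 billion). The estimate ignores activations, caches, and runtime overhead, so real usage will be higher.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Assumption: every parameter is stored at the same precision and
    nothing else (activations, caches, runtime overhead) is counted.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    models = {
        "3.8B-parameter model": 3.8e9,
        "175B-parameter model": 175e9,
    }
    for name, num_params in models.items():
        for precision in ("fp16", "int4"):
            size = weight_memory_gb(num_params, precision)
            print(f"{name} at {precision}: ~{size:.1f} GB")
```

Under these assumptions, the 3.8B model needs about 7.6 GB at 16-bit precision and about 1.9 GB at 4-bit, while the 175B model needs about 350 GB at 16-bit. That difference is a large part of why small models can fit on a phone or laptop and large ones generally cannot.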

SLMs vs. LLMs: What’s the Difference?

The core distinction between small and large language models goes beyond just parameter count. It encompasses their design philosophy, energy efficiency, responsiveness, and real-world applicability.

- Model Size: LLMs typically feature 100 billion or more parameters, allowing them to generalize across many domains. SLMs, by contrast, usually range from tens of millions to a few billion parameters, making them leaner and faster.

- Training Data: LLMs are trained on vast, diverse datasets scraped from the internet. SLMs, however, often rely on cleaner, high-quality, and domain-specific datasets, enabling better performance in targeted scenarios.

- Speed: Due to their reduced size, SLMs deliver faster response times and lower latency, especially when deployed on lightweight or embedded devices.

- Power Consumption: LLMs demand extensive hardware and energy to train and run. SLMs consume significantly less power, making them more sustainable and affordable.

- Accuracy: While LLMs are strong generalists, SLMs can outperform them in niche use cases thanks to their capacity for fine-tuning on specific tasks.

Examples of popular SLMs include Google’s Gemini Nano, Microsoft’s Phi-3, OpenAI’s GPT-4o Mini, and Anthropic’s Claude 3 Haiku—each representing the growing shift toward efficient, purpose-driven AI.

Why Small Language Models Are Taking Over

Small language models are no longer just “lite” versions of their larger counterparts—they’re becoming the go-to choice for many developers, businesses, and users. Their flexibility, efficiency, and ease of deployment are driving a major shift in how we think about AI implementation.

Here’s why SLMs are rapidly becoming the smarter option:

1. Lower Training and Operational Costs

Training a large model like GPT-4 can cost upwards of $100 million, not including the infrastructure and energy costs needed to keep it running. These models rely on thousands of high-end GPUs, enormous server farms, and vast amounts of internet data.

In contrast, SLMs can be trained with modest hardware setups, often using fewer GPUs, and can even be run entirely on CPUs or mobile devices. This drastically lowers the barrier to entry for smaller AI companies, research labs, and enterprise teams building their own custom AI tools.

2. On-Device Deployment and Offline Use

One of the most revolutionary features of SLMs is their ability to run directly on devices, without relying on cloud services. Google’s Gemini Nano, for example, runs natively on Pixel smartphones.

This offers several game-changing benefits:

- Privacy: Data stays on the user’s device.

- Speed: No need for network round-trips.

- Availability: Works offline, even with poor or no internet connectivity.

From smartphones to edge devices in factories or hospitals, SLMs open the door to AI that’s available anytime, anywhere, without depending on network bandwidth.

3. Improved Responsiveness

Because of their smaller size, SLMs offer faster response times, a critical factor for real-time applications like:

- Voice assistants

- Customer service chatbots

- Automotive infotainment systems

- AR/VR interfaces

SLMs have lower latency, meaning they can generate results quicker than their larger, cloud-based counterparts. When timing is critical, smaller is often better.

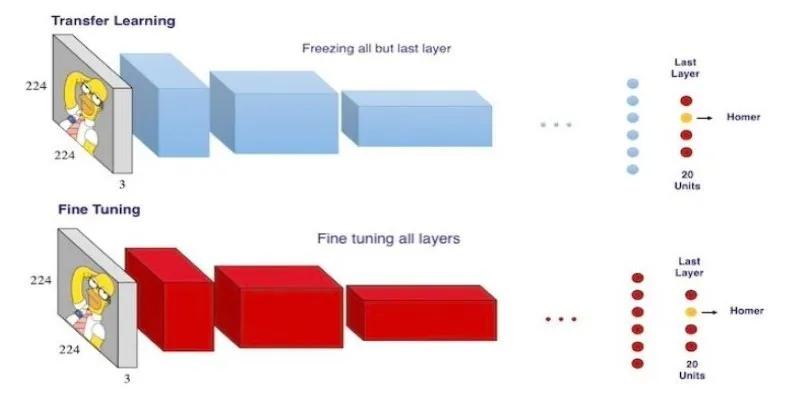

4. Better Data Efficiency and Fine-Tuning

SLMs are not just cheaper to train; they’re also easier to fine-tune. Their smaller scale allows developers to customize them with high-quality, domain-specific data for use cases like:

- Healthcare diagnostics

- Legal document analysis

- Financial modeling

- HR automation

This level of personalization is harder and more costly with LLMs, which often require enormous datasets and computing power to adjust even slightly.

5. Enhanced Accuracy in Niche Domains

You might assume bigger models are always more accurate. But when it comes to specialized tasks, SLMs can actually outperform LLMs. Why?

Because SLMs are trained on targeted, high-quality datasets, rather than the vast, noisy data oceans that feed LLMs. This makes them ideal for:

- Internal business tools

- Technical support assistants

- Industry-specific research

A focused, compact model trained on clean, relevant data can often provide more accurate results in that domain than a larger general-purpose model.

The Rise of Hybrid AI Systems

Interestingly, the future may not be all about small models, but rather about smart combinations of small and large. Hybrid systems can use:

- LLMs for powerful, complex generation tasks (like summarizing scientific papers)

- SLMs for real-time interaction and on-device personalization (like voice responses or local file summarization)

This architecture offers the best of both worlds: performance when you need it, efficiency when you don’t.
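One way to picture such a hybrid setup is a small routing function that decides, per request, whether to answer on the device or hand off to a cloud model. The sketch below is only illustrative: the `Request` fields, the `choose_model` function, and the `LONG_PROMPT_WORDS` threshold are all hypothetical, and a real system would likely use richer signals than prompt length.

```python
from dataclasses import dataclass

# Hypothetical threshold: prompts longer than this count as "complex".
LONG_PROMPT_WORDS = 2000


@dataclass
class Request:
    prompt: str
    needs_realtime: bool = False         # e.g. a spoken voice response
    contains_private_data: bool = False  # e.g. a local file
    online: bool = True                  # is a network connection available?


def choose_model(req: Request) -> str:
    """Return "slm" (on-device) or "llm" (cloud) for a request."""
    # Offline or privacy-sensitive requests must stay on the device.
    if not req.online or req.contains_private_data:
        return "slm"
    # Latency-sensitive requests avoid the network round-trip.
    if req.needs_realtime:
        return "slm"
    # Long, complex inputs (such as a full scientific paper) go to the LLM.
    if len(req.prompt.split()) > LONG_PROMPT_WORDS:
        return "llm"
    # Everything else defaults to the cheaper on-device model.
    return "slm"


if __name__ == "__main__":
    paper = "word " * 5000  # stand-in for a long scientific paper
    print(choose_model(Request("What's the weather?", needs_realtime=True)))
    print(choose_model(Request(paper)))
    print(choose_model(Request(paper, online=False)))
```

The ordering of the checks reflects a design choice: hard constraints (no connectivity, private data) come first, then latency, and only then task complexity, so the large model is used only when nothing rules it out.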

Conclusion

SLMs are no longer just scaled-down versions of LLMs—they’re purpose-built tools reshaping how we use AI. They offer speed, security, affordability, and high-performance customization that suits the way people use technology today.

As edge computing becomes the norm and privacy regulations grow stricter, SLMs will continue to expand their reach. While LLMs will always have a place in research and enterprise, the future of AI for everyday use may very well be small.
