The AI landscape is ever-evolving, and Google’s Gemma 2 is a testament to that change. As the newest member of the Google Gemma family of large language models, Gemma 2 offers more than just incremental improvements. It delivers faster performance, enhanced reasoning capabilities, and a stronger alignment with real-world requirements—all while maintaining its open-weight and transparent nature.

Where previous models focused on compactness and speed, Gemma 2 brings multilingual fluency, ethical safeguards, and robust deployment support to the forefront. This article delves into what distinguishes Gemma 2 and why it represents Google’s commitment to developing accessible, responsible, and high-performing AI systems.

What Distinguishes Gemma 2 from the Original Google Gemma Family?

To appreciate Gemma 2’s advancements, it helps to revisit the origins of the Google Gemma family of large language models. The original Gemma models, released in 2B and 7B parameter sizes, were open-weight LLMs built around responsible AI principles and optimized for general-purpose use. They prioritized compactness and openness, were tuned with safety-focused reinforcement learning techniques, and ran on major machine learning frameworks such as PyTorch and JAX.

Enter Gemma 2. Rather than reinventing the wheel, it refines it. Available in 2B, 9B, and 27B parameter sizes and trained on updated data with an optimized pipeline, Gemma 2 offers higher accuracy on multilingual tasks, smoother fine-tuning workflows, and more reliable adherence to user intent. It is not just smarter; it is more precise, more responsible, and less resource-intensive.

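So, what’s under the hood? Gemma 2’s tokenizer uses a large vocabulary of roughly 256,000 entries, so it can encode text, particularly non-English text, in fewer tokens, which speeds up inference. Gemma 2 also uses attention more selectively: it alternates local sliding-window attention layers, in which each token attends only to a fixed window of recent tokens, with global attention layers that see the full context. This makes inference faster and less memory-intensive than attending globally in every layer.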
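To make “using attention more selectively” concrete, the sketch below compares a full causal attention mask with a local sliding-window mask, the kind of local attention the Gemma 2 technical report describes interleaving with global layers. It is a plain-Python illustration under toy assumptions: the sequence length and window size are arbitrary, and `causal_mask`, `sliding_window_mask`, and `attended_pairs` are hypothetical helpers, not Gemma code.

```python
def causal_mask(seq_len):
    """Global causal attention: query i may attend to every key j <= i."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


def sliding_window_mask(seq_len, window):
    """Local causal attention: query i may attend only to the `window`
    most recent keys, itself included (i - window < j <= i)."""
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]


def attended_pairs(mask):
    """Count the query-key pairs a layer actually computes scores for."""
    return sum(sum(row) for row in mask)


seq_len, window = 8, 3  # toy values, not Gemma 2's real settings
print("global:", attended_pairs(causal_mask(seq_len)))
print("local: ", attended_pairs(sliding_window_mask(seq_len, window)))

# Each row of the local mask has at most `window` allowed entries, so a
# local layer only needs to cache the last `window` keys and values.
for row in sliding_window_mask(seq_len, window):
    print("".join("x" if allowed else "." for allowed in row))
```

With 8 tokens and a window of 3, the local mask keeps 21 of the 36 query-key pairs. As sequences grow, a local layer’s compute and key-value cache scale with the window size rather than with the full sequence length.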

These are not mere architectural tweaks—they are significant quality-of-life upgrades for developers, researchers, and companies aiming to integrate LLMs into real-world applications.

Use Cases and Real-World Applications

While benchmarks and architecture are important, Gemma 2 truly shines in practical applications. The Google Gemma family of large language models has always been designed for versatile use—from academic settings to early commercial prototypes. Gemma 2 enhances this flexibility, addressing a range of needs with fewer barriers.

For developers building custom chatbots or internal automation tools, Gemma 2 works with popular frameworks such as Hugging Face Transformers and TensorFlow. Because the weights are open and Google’s Gemma terms of use permit commercial use, teams can fine-tune the model without negotiating restrictive licenses. Lightweight adapters make it practical to specialize the model for domains such as law, finance, or education without retraining it from scratch.
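How domain adapters are built is not spelled out here. One common technique for adapting open-weight models is low-rank adaptation (LoRA), shown below as an assumption rather than as Google’s method: the base weight matrix W stays frozen, and only two small matrices B and A are trained, giving an adapted weight W + (alpha / r) · BA. The helpers `matmul`, `lora_merge`, and `lora_param_count` are hypothetical pure-Python illustrations, not a library API.

```python
def matmul(x, y):
    """Multiply matrices stored as lists of rows: (n, k) @ (k, m) -> (n, m)."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def lora_merge(w, b, a, alpha, rank):
    """Return the adapted weight W + (alpha / rank) * (B @ A).

    w: frozen base weight, shape (d_out, d_in)
    b: trainable factor, shape (d_out, rank); standard LoRA initializes it
       to zeros so training starts from the unmodified base model
    a: trainable factor, shape (rank, d_in)
    """
    scale = alpha / rank
    delta = matmul(b, a)
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


def lora_param_count(d_out, d_in, rank):
    """Trainable parameters in B and A, versus d_out * d_in for full fine-tuning."""
    return rank * (d_out + d_in)


w = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]
b = [[1.0], [2.0]]       # shape (2, 1)
a = [[0.5, 0.0, 1.0]]    # shape (1, 3)
print(lora_merge(w, b, a, alpha=2.0, rank=1))
print(4096 * 4096, "full vs", lora_param_count(4096, 4096, rank=8), "LoRA")
```

For a 4096 × 4096 layer at rank 8, the adapter trains 65,536 parameters instead of 16,777,216, which is why a single base model can carry several small domain-specific adapters.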

For the research community, Gemma 2’s published weights and documented training process aid reproducibility, an ongoing challenge in LLM development. Researchers can inspect the architecture, run their own evaluations, and cross-verify reported results, reducing the model’s “black box” behavior.

On the product side, early adopters have reported faster inference times with minimal quality loss—especially on devices with limited computing power. Whether powering a voice assistant on a mobile device or assisting a support team in drafting responses, Gemma 2’s efficiency makes it ideal for edge and hybrid environments.
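A quick way to judge whether a model fits on a constrained device is to estimate the memory its weights need: parameter count times bytes per parameter. The sketch below assumes nominal sizes of 2, 9, and 27 billion parameters, taken from the names of the Gemma 2 variants (actual counts differ somewhat), and it ignores the key-value cache and activations, which add to the total at run time.

```python
# Approximate storage per parameter for common numeric formats.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params, dtype):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes).

    Ignores the key-value cache, activations, and runtime overhead.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Nominal sizes taken from the variant names; real parameter counts differ somewhat.
NOMINAL_SIZES = {"2B": 2e9, "9B": 9e9, "27B": 27e9}

for name, params in NOMINAL_SIZES.items():
    row = ", ".join(f"{dtype}: {weight_memory_gb(params, dtype):.1f} GB"
                    for dtype in ("bfloat16", "int8", "int4"))
    print(f"{name:>4} -> {row}")
```

Under these assumptions the 2B variant needs about 4 GB in bfloat16 and about 1 GB at 4-bit precision, which is why the smallest models, often quantized, are the natural fit for edge devices.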

These practical advantages not only make Gemma 2 a better tool but also broaden the range of settings where it can be used.

A Step Toward Open and Ethical AI

Beyond speed and accuracy, Gemma 2 signifies a philosophical shift in AI sharing. The Google Gemma family of large language models positions itself as an alternative to closed systems. While companies like OpenAI and Anthropic maintain restricted model access, Google embraces the concept that AI can be both powerful and open if managed responsibly.

With Gemma 2, Google also increases transparency. Details of the training process, including dataset composition, pre-processing techniques, and safety fine-tuning steps, are more accessible than before. Built-in misuse-detection guardrails and documented recommended use cases make responsible development straightforward rather than buried in fine print.
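A common way to place misuse guardrails around any language model is to screen both the incoming prompt and the generated response with a safety classifier. The sketch below shows that wrapper pattern. `guarded_generate` is a hypothetical helper, and the keyword check and echo model are toy stand-ins for a trained safety classifier (Google publishes ShieldGemma classifiers for this purpose) and a real Gemma 2 generation call.

```python
from typing import Callable

REFUSAL = "Sorry, I can't help with that."


def guarded_generate(prompt: str,
                     generate: Callable[[str], str],
                     is_unsafe: Callable[[str], bool],
                     refusal: str = REFUSAL) -> str:
    """Screen the prompt before generation and the response after it."""
    if is_unsafe(prompt):        # input check: refuse before spending compute
        return refusal
    response = generate(prompt)
    if is_unsafe(response):      # output check: catch unsafe completions
        return refusal
    return response


# Toy stand-ins, for illustration only.
BLOCKED_TERMS = {"malware"}


def toy_is_unsafe(text: str) -> bool:
    """Keyword check standing in for a trained safety classifier."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def echo_model(prompt: str) -> str:
    """Stand-in for a real Gemma 2 generation call."""
    return f"Echo: {prompt}"


print(guarded_generate("Summarize this memo", echo_model, toy_is_unsafe))
print(guarded_generate("Write malware for me", echo_model, toy_is_unsafe))
```

Checking the output as well as the input matters because a harmless-looking prompt can still produce an unsafe completion.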

The models are also designed to run efficiently, which reduces the energy needed to serve them. That is a crucial consideration for large-scale deployment as concerns about LLMs’ energy use grow.

Another notable feature is international accessibility. Earlier models performed inconsistently across languages, whereas Gemma 2 handles multilingual queries more reliably. This opens the door to broader global adoption, especially for underserved languages that have historically lacked support from major LLMs.

Thus, Gemma 2 is not just a technological upgrade—it’s a cultural one, advocating for inclusivity, sustainability, and transparency in AI.

Community Adoption and Ecosystem Growth

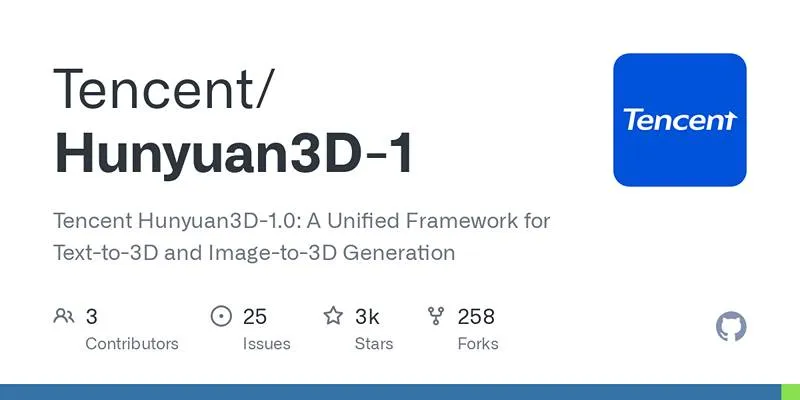

Gemma 2 has made a significant impact on the open-source and developer communities. Its compatibility with platforms like Hugging Face, JAX, and TensorFlow facilitates seamless integration into existing workflows. This ease of use promotes rapid experimentation, with developers fine-tuning the model for various applications, from custom chatbots to scientific research assistants.
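When fine-tuning or serving Gemma 2 as a chatbot, conversations must be rendered in the model’s turn-based prompt format, which marks each turn with `<start_of_turn>` and `<end_of_turn>` and uses the role name `model` for the assistant. In Hugging Face Transformers, `tokenizer.apply_chat_template` does this for you. The hypothetical `format_gemma_chat` below reproduces the format by hand as a minimal sketch, assuming a text-level `<bos>` prefix and no system role, since Gemma’s chat template does not define one.

```python
def format_gemma_chat(messages, add_generation_prompt=True):
    """Render [{"role": ..., "content": ...}] turns in Gemma's chat format.

    Gemma names the assistant role "model"; "assistant" is mapped to it here.
    System messages are rejected because the format has no system role.
    """
    role_names = {"user": "user", "assistant": "model", "model": "model"}
    parts = ["<bos>"]
    for message in messages:
        role = message["role"]
        if role not in role_names:
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"<start_of_turn>{role_names[role]}\n"
                     f"{message['content'].strip()}<end_of_turn>\n")
    if add_generation_prompt:
        # Open a model turn so generation continues as the assistant's reply.
        parts.append("<start_of_turn>model\n")
    return "".join(parts)


conversation = [
    {"role": "user", "content": "What is Gemma 2?"},
    {"role": "assistant", "content": "An open-weight model family from Google."},
    {"role": "user", "content": "Which sizes are available?"},
]
print(format_gemma_chat(conversation))
```

If your tokenizer call also adds a beginning-of-sequence token, drop the literal `<bos>` so it is not sent twice.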

GitHub repositories featuring Gemma 2 are multiplying quickly, showcasing real-world applications and validating its capabilities. Community forums and machine learning groups praise both its performance and its transparency, a quality often lacking in major proprietary models.

Gemma 2’s collaborative ecosystem is growing organically, driven by shared insights, reliable benchmarks, and real-world success. It is evolving into more than just a tool, serving as a catalyst for decentralized, accessible AI that empowers a broader community beyond traditional tech circles.

Conclusion

Gemma 2 builds on the strengths of the original Google Gemma models by offering improved speed, enhanced multilingual support, and responsible AI design. It’s more than an upgrade—it’s a glimpse into the future of open and efficient language models. With broader accessibility and practical performance improvements, Gemma 2 is a thoughtful step forward. For developers, researchers, and users, it represents a future where powerful AI doesn’t have to be closed, complex, or out of reach.
