Language models have become surprisingly adept at listening—not just to English but to dozens, sometimes hundreds, of languages. Yet, even with all that progress, the leap from “pretty good” to “actually usable” in real-world systems often comes down to the art of fine-tuning. When adapting Meta’s MMS (Massively Multilingual Speech) for Automatic Speech Recognition (ASR), it’s all about smart adjustments, minimal overhauls, and knowing exactly what to tweak without starting from scratch.

In this guide, we’ll explore how to fine-tune MMS using adapter modules—small, trainable layers that enable you to specialize the model for different languages without retraining everything. It’s a practical and efficient approach to achieving robust multilingual ASR results with less computing power and more control.

Why Use Adapter Models?

Adapters are like cheat codes for model fine-tuning. Instead of retraining the entire MMS model, you freeze most of it and tweak the added layers—typically a few bottleneck-style modules slotted between the main layers. This approach offers two major benefits: it reduces training time and makes deployment lighter. In multilingual ASR, those gains are significant.

Let’s say you’re working on five languages. Without adapters, you’d end up fine-tuning and storing five separate models. With adapters, you keep one shared base model and five lightweight adapter sets. You save on computing resources, storage, and energy, while keeping performance close to that of full fine-tuning.

This method also helps avoid catastrophic forgetting. Because the base weights stay frozen, the model retains its broader multilingual understanding while each adapter learns language-specific traits. It’s like switching the accent, not the brain.
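To put rough numbers on the five-language example, here is a back-of-the-envelope sketch. The base size, hidden size, layer count, and bottleneck width are illustrative assumptions, not measured MMS figures, and the helper adapter_param_count is a hypothetical name introduced here.

```python
def adapter_param_count(hidden_size: int, bottleneck: int, num_layers: int) -> int:
    """Parameters for one bottleneck adapter per layer (weights plus biases)."""
    down = hidden_size * bottleneck + bottleneck   # down-projection
    up = bottleneck * hidden_size + hidden_size    # up-projection
    return num_layers * (down + up)


# Illustrative assumptions only, not official MMS numbers.
BASE_PARAMS = 1_000_000_000
LANGUAGES = 5

per_language = adapter_param_count(hidden_size=1280, bottleneck=64, num_layers=48)
full_copies = LANGUAGES * BASE_PARAMS
shared_base = BASE_PARAMS + LANGUAGES * per_language

print(f"adapter parameters per language: {per_language:,}")
print(f"five fully fine-tuned models:    {full_copies:,}")
print(f"one base plus five adapters:     {shared_base:,}")
```

Under these assumptions each extra language adds well under one percent of the base model's parameters, which is where the storage and deployment savings come from.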

Step-by-Step: Fine-Tuning MMS with Adapters

Fine-tuning MMS with adapters isn’t overly complicated, but it requires discipline. Missing a key detail, like choosing the wrong freezing strategy, can quickly derail your efforts. Here’s a focused step-by-step flow to keep things smooth and reproducible.

Step 1: Choose Your Base MMS Model

Start with the right version of the MMS model. Meta offers variants of different sizes, but for most adapter-based tasks, you want one of the larger pre-trained ASR models. These have enough depth for adapters to latch onto without losing essential features.

Ensure the model has adapter hooks (some forks of MMS include them pre-baked). If not, you’ll need to patch the architecture to include them.

Step 2: Freeze Core Parameters

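Freeze everything except the adapter modules. This includes convolutional layers, transformer stacks, and any pre-final classification blocks. Your training script should explicitly set requires_grad=False on these parameters to prevent unintentional updates. One caveat: if the target language needs a different output vocabulary, the final projection layer usually has to be resized and trained alongside the adapters.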

Why freeze? It stabilizes training and reduces compute usage. You’re refining how the model responds, not relearning how to listen.
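A minimal PyTorch sketch of that freezing step might look like the following. It assumes adapter parameters can be recognized by the substring "adapter" in their names, which is a naming convention chosen for this example and may not match your checkpoint. ToyBlock is a hypothetical stand-in for a real MMS layer.

```python
from torch import nn


def freeze_all_but_adapters(model: nn.Module, keyword: str = "adapter") -> int:
    """Freeze every parameter whose name lacks `keyword`; return the trainable count."""
    trainable = 0
    for name, param in model.named_parameters():
        param.requires_grad = keyword in name
        if param.requires_grad:
            trainable += param.numel()
    return trainable


class ToyBlock(nn.Module):
    """Hypothetical stand-in for one transformer layer with an adapter attached."""

    def __init__(self, dim: int = 16, bottleneck: int = 4):
        super().__init__()
        self.ffn = nn.Linear(dim, dim)                  # plays the role of the frozen core
        self.adapter_down = nn.Linear(dim, bottleneck)  # trainable
        self.adapter_up = nn.Linear(bottleneck, dim)    # trainable


toy = nn.Sequential(ToyBlock(), ToyBlock())
trainable = freeze_all_but_adapters(toy)
total = sum(p.numel() for p in toy.parameters())
print(f"trainable parameters: {trainable} of {total}")
```

Printing the trainable count before training starts is a cheap sanity check: if it equals the total, nothing was frozen, and if it is zero, the keyword did not match anything.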

Step 3: Insert and Configure Adapters

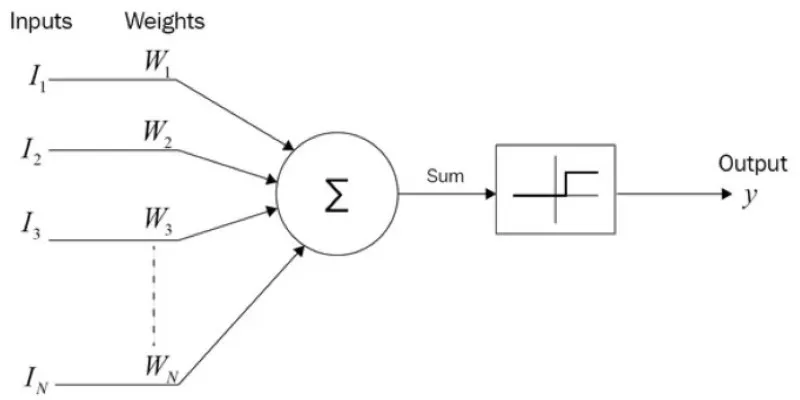

Adapters usually sit between the transformer layers. Use lightweight modules—typically two fully connected layers with a non-linearity in between. You can experiment with the bottleneck size, often between 64 and 256 units, depending on language complexity and data volume.

Keep initialization in check. Too large, and you risk destabilizing the model; too small, and training stagnates. Opt for Xavier or Kaiming initialization to strike the right balance.
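The adapter described above can be sketched in PyTorch as follows. This is one possible layout, not the definitive MMS implementation: the residual connection, the ReLU non-linearity, and the class name BottleneckAdapter are assumptions made for illustration.

```python
import torch
from torch import nn


class BottleneckAdapter(nn.Module):
    """Two fully connected layers with a non-linearity in between, plus a residual path."""

    def __init__(self, hidden_size: int, bottleneck: int = 64):
        super().__init__()
        self.down = nn.Linear(hidden_size, bottleneck)
        self.act = nn.ReLU()
        self.up = nn.Linear(bottleneck, hidden_size)
        # Xavier initialization for the weights, zeros for the biases.
        for layer in (self.down, self.up):
            nn.init.xavier_uniform_(layer.weight)
            nn.init.zeros_(layer.bias)

    def forward(self, hidden_states: torch.Tensor) -> torch.Tensor:
        # The residual connection (an assumption here) keeps the frozen
        # layer's output intact and lets the adapter learn a correction.
        return hidden_states + self.up(self.act(self.down(hidden_states)))


adapter = BottleneckAdapter(hidden_size=32, bottleneck=8)
features = torch.randn(2, 10, 32)  # (batch, frames, hidden)
print(adapter(features).shape)
```

Swapping nn.init.xavier_uniform_ for nn.init.kaiming_uniform_ is a one-line change if you want to compare the two schemes on your data.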

Step 4: Prepare Your Language-Specific Dataset

Your dataset should be clean, segmented by utterances, and well-aligned with transcripts. While MMS is resilient to noisy inputs, your fine-tuning won’t be. A poor dataset leads to language drift and poor accuracy, especially in tonal or morphologically rich languages.

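Use forced aligners to validate timestamps. Normalize transcripts (e.g., unify punctuation and casing, and strip diacritics only where they carry no meaning in the target language) while maintaining phonetic integrity. In tonal languages, diacritics often encode the tone itself, so removing them discards information the model needs. ASR models prioritize content over format.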
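As a rough illustration of transcript normalization, here is a small sketch using only the standard library. The exact rules (lowercasing, keeping apostrophes, making diacritic stripping opt-in) are assumptions for this example and should be adapted to the target language.

```python
import re
import unicodedata


def normalize_transcript(text: str, strip_diacritics: bool = False) -> str:
    """Lowercase, unify punctuation to spaces, and optionally drop combining marks."""
    text = unicodedata.normalize("NFC", text).lower()
    text = text.replace("\u2019", "'")  # treat curly and straight apostrophes alike
    if strip_diacritics:
        # Decompose, drop combining marks (category Mn), then recompose.
        decomposed = unicodedata.normalize("NFD", text)
        text = "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")
        text = unicodedata.normalize("NFC", text)
    # Replace punctuation with spaces, but keep apostrophes inside words.
    text = "".join(
        " " if unicodedata.category(ch).startswith("P") and ch != "'" else ch
        for ch in text
    )
    return re.sub(r"\s+", " ", text).strip()


print(normalize_transcript("H\u00e9llo,  World!"))
print(normalize_transcript("H\u00e9llo,  World!", strip_diacritics=True))
```

Keeping strip_diacritics off by default is deliberate: it is safer to lose nothing and opt in per language than to silently erase distinctions that matter.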

Step 5: Train with Careful Scheduling

Use an optimizer like AdamW with a low learning rate, typically between 5e-5 and 1e-4. Employ a learning rate scheduler that warms up slowly and then decays linearly. Adapter training benefits from consistency over aggression.
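The warmup-then-linear-decay shape is easy to express as a plain function. The sketch below is framework-agnostic; the step counts and peak rate in the usage lines are placeholder values, and linear_warmup_decay is a hypothetical helper name.

```python
def linear_warmup_decay(step: int, total_steps: int, warmup_steps: int, peak_lr: float) -> float:
    """Learning rate that rises linearly to peak_lr, then falls linearly to zero."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


# Placeholder schedule: 1,000 steps, 100 of them warmup, peak of 1e-4.
for step in (0, 50, 100, 550, 1000):
    print(step, linear_warmup_decay(step, total_steps=1000, warmup_steps=100, peak_lr=1e-4))
```

In PyTorch, the same shape can be plugged into torch.optim.lr_scheduler.LambdaLR by returning the multiplier (the value divided by the peak rate) instead of the absolute rate.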

Keep batch sizes reasonable and use gradient accumulation if memory is a concern. Save checkpoints every few epochs with validation against a held-out set. Always track CER/WER (character or word error rate), not just loss.
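For reference, both metrics reduce to an edit distance divided by the reference length. The following is a minimal standard-library sketch; production setups often rely on a dedicated evaluation package instead, and the function names here are chosen for this example.

```python
def edit_distance(ref, hyp) -> int:
    """Levenshtein distance between two sequences (substitution, insertion, deletion)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edit distance over reference word count."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate: character-level edit distance over reference length."""
    if not reference:
        raise ValueError("reference must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


print(wer("the cat sat", "the bat sat"))
print(cer("the cat sat", "the bat sat"))
```

CER tends to be the more informative of the two for languages without clear word boundaries or with very long, morphologically complex words.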

Step 6: Evaluate on Diverse Speech Patterns

Once training is complete, test the model beyond your test split. Try speech with varying accents, speaking speeds, and environmental backgrounds for a true robustness check.

Also, test on edge cases like overlapping speech, soft voices, numbers, and code-switching. If performance holds up, you’ve likely achieved a stable model.

Key Challenges That Don’t Sound Like Challenges at First

Some of the trickiest parts of fine-tuning aren’t evident in logs. Here are a few common pitfalls:

Dataset Imbalance

If your training data is biased towards one speaker or dialect, your adapter might end up biased. Subsampling or weighted loss functions can help balance things out.
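One simple way to rebalance is to sample utterances with probability inversely proportional to how common their speaker or dialect is. The sketch below assumes each utterance carries a group label; inverse_frequency_weights is a hypothetical helper and the labels are made up.

```python
import random
from collections import Counter


def inverse_frequency_weights(labels):
    """Per-sample weights so that every group receives equal total sampling mass."""
    counts = Counter(labels)
    raw = [1.0 / counts[label] for label in labels]
    total = sum(raw)
    return [w / total for w in raw]


# Made-up example: three utterances from dialect "a", one from dialect "b".
dialects = ["a", "a", "a", "b"]
weights = inverse_frequency_weights(dialects)
print(weights)

# Draw a rebalanced batch of indices with replacement.
random.seed(0)
batch = random.choices(range(len(dialects)), weights=weights, k=8)
print(batch)
```

The same weights can be handed to a framework sampler, for example torch.utils.data.WeightedRandomSampler, if you train with a PyTorch DataLoader.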

Overfitting on Small Languages

For low-resource languages, adapter tuning may lead to memorization rather than generalization. Early stopping and dropout regularization are essential here.
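Early stopping itself takes only a few lines. This sketch watches a validation metric where lower is better (such as WER); the patience value and the metric history are placeholders, and the class is written for this example.

```python
class EarlyStopping:
    """Signal a stop once the metric has failed to improve for `patience` checks."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_checks = 0

    def step(self, metric: float) -> bool:
        """Record a new validation metric; return True when training should stop."""
        if metric < self.best - self.min_delta:
            self.best = metric
            self.bad_checks = 0
        else:
            self.bad_checks += 1
        return self.bad_checks >= self.patience


# Placeholder validation WER history.
stopper = EarlyStopping(patience=2)
decisions = [stopper.step(value) for value in (0.50, 0.40, 0.41, 0.42)]
print(decisions, stopper.best)
```

Pair this with checkpointing on improvement so the weights you keep are the ones from the best validation check, not the last one.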

Tokenization Mismatch

If your tokenizer wasn’t designed with the target language in mind, you’ll hit a ceiling fast. In some cases, it’s worth retraining a tokenizer or using a language-specific one and mapping back.
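A quick way to spot a mismatch before training is to count the characters in your transcripts that the tokenizer vocabulary cannot represent. The sketch below assumes a character-level vocabulary, which is an assumption about your setup; uncovered_characters is a hypothetical helper.

```python
from collections import Counter


def uncovered_characters(transcripts, vocab):
    """Map each non-space character missing from `vocab` to its frequency, most common first."""
    counts = Counter(ch for line in transcripts for ch in line if not ch.isspace())
    missing = {ch: n for ch, n in counts.items() if ch not in vocab}
    return dict(sorted(missing.items(), key=lambda item: -item[1]))


# Made-up data: the vocabulary lacks the character "d".
report = uncovered_characters(["abc", "add"], vocab=set("abc"))
print(report)
```

If frequent characters show up in the report, that is a strong hint the vocabulary needs extending or replacing before any adapter training is worthwhile.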

Decoding Strategy Traps

Greedy decoding might yield decent results, but beam search combined with a language model often improves ASR accuracy noticeably. The adapter can’t fix poor decoding; it only feeds into it.
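To make the baseline concrete, here is a sketch of greedy decoding for a CTC-style output, assuming the model emits one token id per frame with a dedicated blank id. The tiny vocabulary and frame sequence are made up for illustration.

```python
def ctc_greedy_decode(frame_ids, id_to_char, blank_id=0):
    """Collapse repeated ids, then drop blanks, following the standard CTC rule."""
    chars = []
    previous = None
    for idx in frame_ids:
        if idx != previous and idx != blank_id:
            chars.append(id_to_char[idx])
        previous = idx
    return "".join(chars)


# Made-up vocabulary and per-frame argmax ids.
vocab = {1: "h", 2: "e", 3: "l", 4: "o"}
frames = [1, 1, 0, 2, 3, 3, 0, 3, 4, 4]
decoded = ctc_greedy_decode(frames, vocab)
print(decoded)
```

Greedy decoding only ever looks at the single best id per frame. Beam search keeps several candidate transcripts alive and can rescore them with a language model, which is why it tends to recover from locally ambiguous frames that greedy decoding gets wrong.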

Final Thoughts

Fine-tuning MMS using adapters might seem like a small detour in the grand scheme of model training. However, it’s a strategic route that enables you to work smarter, not harder. Instead of brute-forcing every language into a new model, you’re allowing adapters to do the lifting—quietly, efficiently, and with just enough customization to make a difference where it counts: the accuracy of what your system hears and transcribes.
