Introduction

Globally, artificial intelligence groups are refining large language models to ensure safer, more accurate performance. These behind-the-scenes tasks include prompt adjustment, model testing, and data cleansing. Researchers manage model behavior and risks by balancing innovation with responsibility. The process requires close collaboration across technical teams. Ethical checks and user feedback loops are key in model release decisions.

Both open-source and proprietary developers aim to ensure consistent model performance. They strive to meet practical needs while upholding high standards. Developing responsible large language models builds public confidence and trust. Though often overlooked, these technical improvements drive the evolution of artificial intelligence. A model’s success relies heavily on continual refinement.

The Tuning Process: From Pretraining to Alignment

Large language models begin as raw systems trained on large volumes of diverse data. At this stage, the models remain unrefined. Engineers must first refine these models through a careful tuning process. The process starts by narrowing the model’s behavior using specific examples and targeted prompts. Reinforcement learning reduces undesirable outputs and improves response quality. Alignment ensures the model behaves in expected, safe ways.

Experts test performance across a wide range of inputs to evaluate consistency. Noted errors guide improvements for future deployment. This cycle continues until results meet quality benchmarks. Human input is essential for shaping responses that match real-world expectations. Safety steps are embedded at each phase of tuning. These steps reduce bias and improve model reliability. Tuning large language models makes artificial intelligence tools more practical and trustworthy. Each development phase increases user trust and expands usage potential. Consistent iteration with every release ensures smarter, safer, and more stable AI systems.
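The test-and-refine cycle described above can be illustrated with a small sketch. Everything here is hypothetical: `toy_model` stands in for a real language model, the test cases and the `QUALITY_BAR` threshold are invented for illustration, and real evaluations use far larger test sets and richer scoring than a substring check.

```python
from typing import Callable, List, Tuple

# A test case pairs a prompt with a check that accepts or rejects the output.
Case = Tuple[str, Callable[[str], bool]]


def pass_rate(model: Callable[[str], str], cases: List[Case]) -> float:
    """Return the fraction of test cases whose output satisfies its check."""
    if not cases:
        raise ValueError("need at least one test case")
    passed = sum(1 for prompt, check in cases if check(model(prompt)))
    return passed / len(cases)


def failing_prompts(model: Callable[[str], str], cases: List[Case]) -> List[str]:
    """Collect the prompts that fail, so they can guide the next tuning round."""
    return [prompt for prompt, check in cases if not check(model(prompt))]


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model: a fixed lookup table."""
    answers = {"2+2?": "4", "Capital of France?": "Paris"}
    return answers.get(prompt, "I am not sure.")


CASES: List[Case] = [
    ("2+2?", lambda out: "4" in out),
    ("Capital of France?", lambda out: "Paris" in out),
    ("Largest planet?", lambda out: "Jupiter" in out),
]

QUALITY_BAR = 0.9  # hypothetical benchmark threshold, not from the article

rate = pass_rate(toy_model, CASES)
needs_more_tuning = rate < QUALITY_BAR
errors = failing_prompts(toy_model, CASES)
```

In this toy run the model misses one of three cases, so it falls short of the assumed threshold and the failing prompt is recorded as input for the next iteration.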

The Role of AI Research Groups in Model Evolution

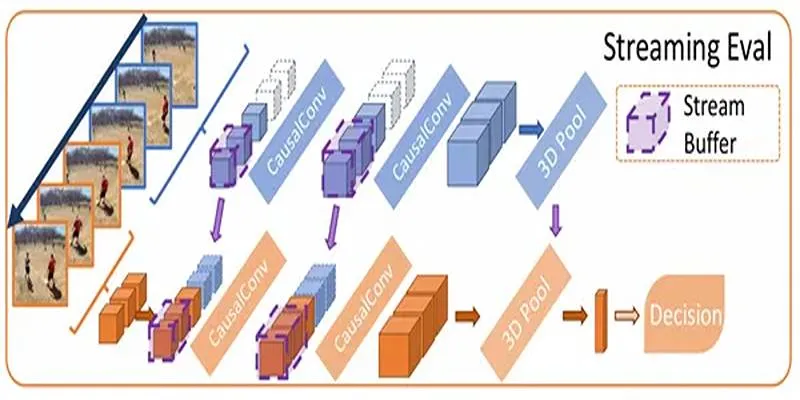

Artificial intelligence research groups are critical in designing and refining large language models. These teams optimize training strategies and evaluate model performance under various conditions. They develop metrics to track accuracy, fairness, and bias across outputs. Data scientists and ethicists often collaborate to ensure responsible AI development. Researchers experiment with model size, architecture, and temperature parameters to improve performance. These adjustments directly impact how models function in real-world environments.
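Of the parameters mentioned above, temperature is the easiest to show in isolation. The sketch below applies the standard temperature-scaled softmax to a made-up set of scores (`logits`); the function name and the example values are assumptions for illustration, not taken from any particular model or library.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities, dividing by temperature first.

    Lower temperatures concentrate probability on the top-scoring option;
    higher temperatures spread it more evenly across options.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
sharp = softmax_with_temperature(logits, 0.5)  # more deterministic
flat = softmax_with_temperature(logits, 2.0)   # more varied
```

With the lower temperature the first candidate receives a larger share of the probability than it does with the higher one, which is why low settings tend to give more predictable output and high settings more varied output.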

Innovation in prompt engineering often stems from the work of these expert teams. Their contributions influence enterprise-grade systems and open-source models alike. Collaborations between academia and industry lead to faster breakthroughs and higher-quality results. Research groups publish whitepapers, create demonstrations, and expand global knowledge bases. Their efforts ensure large language models evolve with changing user and market needs. These teams lay the groundwork for safer and more transparent AI systems. Continuous testing and refinement make modern AI tools increasingly reliable and trustworthy.

Challenges in Releasing Language Models to the Public

Releasing a language model involves complex technical, legal, and ethical considerations. These deployments carry risks such as misinformation, bias, and system misuse. Businesses must prioritize legal compliance and reduce hallucinations in generated content. Developers address these concerns early in the training and evaluation process. Regulatory frameworks vary across countries and industries, complicating global deployment efforts. In addition to compliance, technical challenges such as low-latency performance and infrastructure stability must be resolved.

Public model releases attract heavy scrutiny, especially during high-profile launches. Experts examine ethical transparency, openness, and response fairness. Open-source models face added risks, including a lack of central oversight to prevent misuse. Contributors must monitor potential abuse with limited enforcement tools. Early user feedback helps identify blind spots, prompting rapid adjustments before large-scale rollout. Successful releases combine red-teaming with detailed documentation. Every model launch reveals insights that guide future improvements. Careful planning reduces public distrust and limits potential damage from flawed outputs.

Open-Source vs Proprietary AI Development Models

Open-source and proprietary frameworks represent the two main directions of artificial intelligence development. Each carries distinct risks as well as strengths. Open-source projects offer transparency and community feedback. Anyone can audit the code and propose changes. Hugging Face and Meta are two examples. OpenAI’s GPT models and other proprietary systems restrict access. They prioritize safety, performance, and user control. Closed-loop testing refines these models. Proprietary companies invest heavily in security and large-scale deployment. Closed systems are nevertheless criticized for their lack of transparency.

Open-source technologies, conversely, run the risk of being misused. Harmful use cases become harder to stop without strict oversight. Though different, both approaches depend on large language model tuning. Open-source tools enable faster experimentation. Proprietary tools concentrate on compliance and control. Many researchers advocate hybrid approaches for balanced development. Each kind of model shapes emerging artificial intelligence norms. The choice between them affects ethical alignment, safety, and creativity.

User Feedback Loops: The Final Layer of Model Improvement

User feedback becomes essential after large language models are deployed in real-world environments. Practical use often reveals issues that training failed to detect, such as unusual outputs, biased responses, or performance inconsistencies. Developers analyze these anomalies to refine model behavior and enhance future rollouts. Many platforms feature built-in tools for rating model responses, helping teams assess quality and user satisfaction. Ratings and comments guide targeted tuning, allowing systems to better match human expectations.
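As a rough illustration of how ratings might guide targeted tuning, the sketch below groups user ratings by topic and flags topics whose average score is low. The function name, the 1-to-5 rating scale, the topic labels, and both thresholds are hypothetical; real platforms collect and weigh feedback in their own ways.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def flag_low_rated(
    ratings: List[Tuple[str, int]],
    min_votes: int = 3,
    max_mean: float = 2.5,
) -> Dict[str, float]:
    """Return topics with enough votes and a mean rating at or below max_mean."""
    by_topic: Dict[str, List[int]] = defaultdict(list)
    for topic, score in ratings:
        by_topic[topic].append(score)

    flagged: Dict[str, float] = {}
    for topic, scores in by_topic.items():
        if len(scores) < min_votes:
            continue  # too few ratings to draw a conclusion
        mean = sum(scores) / len(scores)
        if mean <= max_mean:
            flagged[topic] = mean
    return flagged


# Hypothetical ratings on an assumed 1 (poor) to 5 (excellent) scale.
ratings = [
    ("translation", 1), ("translation", 2), ("translation", 2),
    ("summaries", 5), ("summaries", 4), ("summaries", 4),
    ("code", 1),
]

flagged = flag_low_rated(ratings)
```

Here only the translation topic is flagged: summaries are rated well, and the single low rating for code falls below the assumed minimum vote count, so it is treated as too little evidence to act on.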

Community contributions are especially valuable in open-source projects. Users frequently identify overlooked translation errors and cultural nuances that labs may miss. Feedback loops significantly improve model reliability and user trust. These loops act as the final filter before widespread adoption. Research groups and AI companies examine trends in user behavior to inform ongoing safety updates and retraining. Real-world interaction ensures that models continue evolving. Without continuous human input, progress in language model quality would slow down drastically.

Conclusion

Before release, AI research groups invest heavily in improving large language models for real-world use. Their work includes tuning, safety audits, and user feedback cycles to ensure consistent, ethical performance. Public trust relies on responsible AI tuning and transparent deployment processes. Real-world data helps refine model accuracy and minimize risks. Whether a model is open-source or proprietary, each round of tuning improves its dependability and value. These behind-the-scenes efforts enable smarter, safer AI systems. Tuning large language models is now a necessity, not a choice, for achieving long-term success and societal acceptance of artificial intelligence.

For further exploration, consider visiting OpenAI and Hugging Face to learn more about AI model development and community collaboration.
