In the evolving world of AI, one question remains central: how do we reliably measure the value of an AI agent beyond its technical prowess? Sequoia China recently introduced XBench, a next-generation benchmark tool designed to evaluate AI agents not just on performance, but on productivity and real-world business alignment.

This article explores how XBench shifts the conversation from academic difficulty to commercial utility, marking a new chapter in how we evaluate AI systems.

Why AI Benchmarks Must Focus on “Business Capability”

Sequoia China’s research team introduced XBench in their May 2025 paper, “xbench: Tracking Agents Productivity, Scaling with Profession-Aligned Real-World Evaluations.” It details a dual-track evaluation system aiming to assess not only technical upper limits but also actual productivity in business scenarios.

📌 Project Timeline

- 2022 – XBench began as an internal tool after the launch of ChatGPT, tracking model capabilities through monthly internal benchmarks.

- 2023 – Development of private test sets started, initially focused on basic LLM capabilities like Q&A and logical reasoning.

- Oct 2024 – A second major update added complex reasoning and basic tool-use cases.

- Mar 2025 – A pivotal shift: the team started questioning the diminishing ROI of increasingly difficult benchmark tasks and refocused on real-world value.

A Dual-Track Evaluation Framework

To address both performance ceilings and practical application, XBench introduced a dual-track system:

1. AGI Tracking

Focuses on identifying the technical boundaries of AI agents. Includes:

- xbench-ScienceQA: Evaluates scientific question answering.

- xbench-DeepSearch: Measures deep-search capability on the Chinese-language internet.

2. Profession-Aligned Evaluations

Quantifies real-world utility by aligning benchmarks with specific industries like:

- Recruitment

- Marketing

Domain experts define the tasks based on actual business workflows. University faculty then convert those tasks into measurable evaluation metrics, keeping the benchmark closely aligned with real productivity.

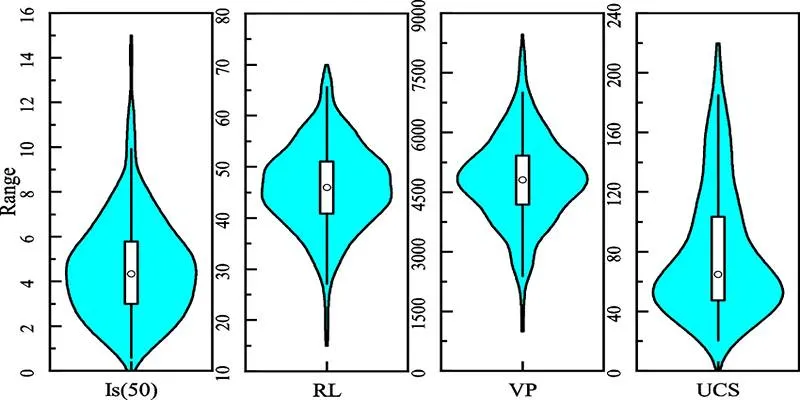
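The expert-task-to-metric workflow described above can be pictured as a weighted rubric. The following is a minimal sketch under stated assumptions, not XBench's actual scoring code: the `Criterion`, `Task`, and `score` names, the keyword checks, and the weights are all hypothetical and exist only to illustrate the idea.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Criterion:
    """One measurable check derived from an expert-defined task (hypothetical)."""
    name: str
    weight: float
    check: Callable[[str], bool]  # True if the agent output satisfies the check


@dataclass
class Task:
    """A business task plus the rubric used to grade an agent's answer."""
    prompt: str
    rubric: List[Criterion]


def score(task: Task, agent_output: str) -> float:
    """Return the weighted fraction of rubric criteria satisfied, in [0, 1]."""
    total = sum(c.weight for c in task.rubric)
    earned = sum(c.weight for c in task.rubric if c.check(agent_output))
    return earned / total if total else 0.0


# Illustrative recruitment task; the criteria are invented for this sketch.
task = Task(
    prompt="Shortlist candidates for a senior backend role in Shanghai.",
    rubric=[
        Criterion("mentions location", 1.0, lambda out: "shanghai" in out.lower()),
        Criterion("mentions seniority", 1.0, lambda out: "senior" in out.lower()),
        Criterion("gives a ranked list", 2.0, lambda out: "1." in out and "2." in out),
    ],
)

output = "1. Li Wei, senior engineer based in Shanghai\n2. Chen Jing, senior engineer"
result = score(task, output)
```

A real rubric would likely rely on human or model-based graders rather than keyword checks. The point is only that each business requirement becomes a separately weighted, checkable item.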

Initial Findings from XBench’s First Public Release

XBench’s first round of public testing yielded surprising results:

- 🔝 OpenAI’s o3 model ranked first in every test category, with its lead most visible in practical business applications.

- 🧠 GPT-4o underperformed slightly due to shorter answer lengths, which affected evaluation scores.

- 🧬 Model size is not everything – Google’s Gemini 2.5 Pro and the lighter-weight 2.5 Flash performed comparably.

- 🔍 DeepSeek R1, though strong in math/code, struggled on search-centric tasks, revealing limitations in agent adaptability.

What Is the Evergreen Evaluation Mechanism?

One standout innovation in XBench is the Evergreen Evaluation Mechanism, a continuously updated benchmark framework. It tackles a critical flaw in many static benchmarks: once test items leak into training data, models can overfit to them, and scores stop reflecting real capability.

Why This Matters:

- 🚀 Fast iteration: AI agents evolve rapidly, and so do the environments they operate in.

- ⏳ Temporal validity: The same task may have different relevance or difficulty over time.

- 🔄 Continuous benchmarking: XBench plans regular retesting across sectors like HR, marketing, finance, law, and sales.

By dynamically updating its test sets and aligning them with real-world use cases, the Evergreen mechanism aims to keep benchmark results relevant, actionable, and resistant to obsolescence.
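One way to picture a continuously refreshed test set is a rolling pool that retires old items and admits new ones at each release. The sketch below is an assumption-laden illustration, not a description of how XBench rotates its data: the `Item` type, the `refresh` function, and the 180-day window are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass(frozen=True)
class Item:
    """A single benchmark question with the date it entered the pool."""
    item_id: str
    added_on: date


def refresh(pool: List[Item], new_items: List[Item], today: date,
            max_age_days: int = 180) -> List[Item]:
    """Drop items older than max_age_days (an arbitrary window), then add new ones."""
    cutoff = today - timedelta(days=max_age_days)
    kept = [item for item in pool if item.added_on >= cutoff]
    return kept + new_items


# Hypothetical pool: one stale item, one recent item, one newly written item.
pool = [
    Item("q-001", date(2024, 10, 1)),
    Item("q-002", date(2025, 3, 1)),
]
fresh = [Item("q-003", date(2025, 6, 1))]

updated = refresh(pool, fresh, today=date(2025, 6, 1))
active_ids = [item.item_id for item in updated]
```

Retiring older items limits how long any question can circulate and leak into training data. The trade-off is that scores from different releases are no longer measured on exactly the same questions.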

Final Thoughts: The Future of Agent Benchmarks

XBench represents a new generation of AI evaluation tools, one that recognizes a vital truth: an AI agent’s success is defined not by how difficult a task it can solve, but by the value it delivers.

As AI agents become embedded in workflows and business systems, benchmarks like XBench will be key in answering not just “Can this model perform?” but more importantly, “Does this model add value?”

✅ If you’re developing or selecting AI agents for enterprise use, look beyond benchmarks that measure only intelligence and start measuring impact.
