Looking through large image datasets can often feel slow and inefficient, especially when you’re searching for specific types of images. While Hugging Face’s datasets library is typically associated with text, it also supports image datasets in a remarkably practical and flexible way. Instead of manually downloading image collections or writing long preprocessing scripts, you can load, filter, and search for specific images using just a few lines of Python.

Whether you’re training a model, testing outputs, or reviewing a dataset before labeling, having an easy way to search through image data makes a big difference. Here’s a clear, step-by-step guide to performing image search with Hugging Face datasets.

Loading Image Datasets Using the Hugging Face Library

To begin working with images, the load_dataset function from the Hugging Face datasets library is your starting point. This function works with many dataset formats, including image-based ones hosted online or stored locally.

Take the “beans” dataset as an example. It’s a collection of bean leaf images categorized by plant diseases. Here’s how you load it:

from datasets import load_dataset

dataset = load_dataset("beans")

This will give you a DatasetDict, a dictionary-like object with separate splits: “train”, “validation”, and “test”. Each image sample is represented as a dictionary, typically containing an ‘image’ key (a PIL image object) and a ‘labels’ key holding the integer index of the category.

To preview the first image:

dataset["train"][0]["image"].show()

This lets you immediately confirm what the dataset looks like, which is especially helpful if you’re unsure about its structure or quality.

The beauty of this approach is that it abstracts away the file paths and formats. You don’t need to manually handle image directories—everything is structured and ready to use.

Filtering Images by Label and Image Attributes

Now that the dataset is loaded, you may want to narrow it down. Maybe you’re only interested in images labeled as “healthy” or those meeting a certain size requirement.

First, check the available labels:

labels = dataset["train"].features["labels"].names

print(labels)

You might see something like: ["angular_leaf_spot", "bean_rust", "healthy"].

To get only the images labeled as “healthy,” use the filter method:

healthy_images = dataset["train"].filter(lambda x: x["labels"] == 2)

Now, healthy_images contains just those samples. The index 2 comes from the label list printed earlier.
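Rather than hard-coding the index, you can look it up from the label names. The following is a minimal sketch: the list is copied from the output shown above so the snippet runs on its own, and `name_to_id` and `id_to_name` are illustrative names, not part of the datasets library.

```python
# Label names in the order printed above (copied here so the snippet is self-contained).
label_names = ["angular_leaf_spot", "bean_rust", "healthy"]

# Build both directions of the mapping once.
name_to_id = {name: idx for idx, name in enumerate(label_names)}
id_to_name = dict(enumerate(label_names))

healthy_id = name_to_id["healthy"]
print(healthy_id)  # 2

# With the real dataset, the filter would then read:
# healthy_images = dataset["train"].filter(lambda x: x["labels"] == healthy_id)
```

Looking the index up by name keeps the filter correct even if another dataset orders its labels differently.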

You can also filter by image size. Suppose you want all images with a width of at least 300 pixels:

large_images = dataset["train"].filter(lambda x: x["image"].width >= 300)

This makes it easy to slice through large datasets based on any attribute that PIL exposes—width, height, mode, or anything you can write a condition for.

These filters can be chained or combined, letting you perform targeted image searches without needing external tools or manual sorting.
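One way to combine conditions is to fold them into a single predicate, so the dataset is scanned once instead of once per filter call. The sketch below is illustrative only: `all_of`, `is_healthy`, and `is_wide` are hypothetical helper names, and the stand-in rows use `SimpleNamespace` in place of real PIL images so the snippet runs without downloading anything.

```python
from types import SimpleNamespace
from typing import Any, Callable, Dict

Example = Dict[str, Any]
Predicate = Callable[[Example], bool]


def all_of(*predicates: Predicate) -> Predicate:
    """Return a predicate that is true only when every given predicate is true."""
    def combined(example: Example) -> bool:
        return all(p(example) for p in predicates)
    return combined


def is_healthy(example: Example) -> bool:
    return example["labels"] == 2  # index of "healthy" in the label list


def is_wide(example: Example) -> bool:
    return example["image"].width >= 300


healthy_and_wide = all_of(is_healthy, is_wide)

# Stand-in rows; SimpleNamespace mimics the .width attribute of a PIL image.
rows = [
    {"labels": 2, "image": SimpleNamespace(width=500)},
    {"labels": 2, "image": SimpleNamespace(width=200)},
    {"labels": 0, "image": SimpleNamespace(width=500)},
]
matches = [row for row in rows if healthy_and_wide(row)]
print(len(matches))  # 1

# With the real dataset: dataset["train"].filter(healthy_and_wide)
```

Chaining two `.filter(...)` calls gives the same rows. The combined predicate simply avoids a second pass over the data.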

Writing a Custom Image Search Function

To make your workflow cleaner and reusable, it’s a good idea to wrap your filtering logic into a function. That way, you can perform different searches without repeating code.

Here’s a basic image search function:

def search_images(dataset, label=None, min_width=None, min_height=None):
    # Label names in index order, e.g. ["angular_leaf_spot", "bean_rust", "healthy"]
    labels = dataset.features["labels"].names

    def match(example):
        # Start from True and AND in each condition the caller asked for
        conditions = True
        if label is not None:
            conditions &= (example["labels"] == labels.index(label))
        if min_width is not None:
            conditions &= (example["image"].width >= min_width)
        if min_height is not None:
            conditions &= (example["image"].height >= min_height)
        return conditions

    return dataset.filter(match)

You can use it like this:

results = search_images(dataset["train"], label="bean_rust", min_width=250)

This returns a filtered dataset of images labeled as “bean_rust” and at least 250 pixels wide. The results are just like the original dataset—only smaller and more relevant to your needs.

You can easily extend this function to handle more complex filters, such as specific image formats or pixel intensity averages. The Hugging Face library stores datasets as memory-mapped Arrow files and caches the results of filter, so even large image sets stay manageable in a notebook or script. Keep in mind that any filter inspecting the image itself still has to decode every image once.

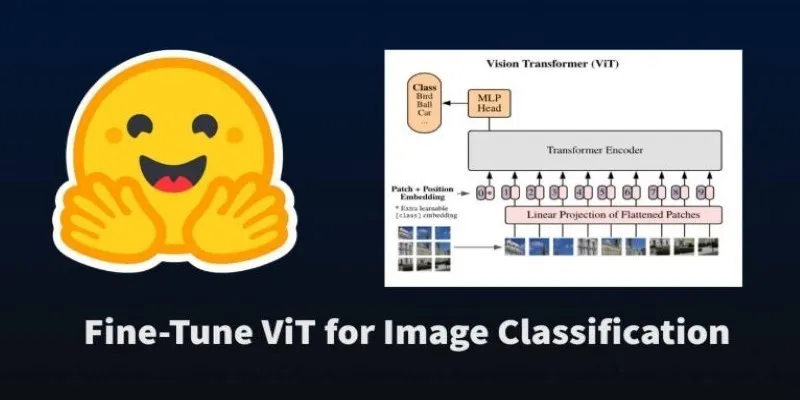

Using Image Embeddings for Visual Similarity Search

Basic filters work well for straightforward use cases, but sometimes, you want to search for images that look similar, even if they aren’t labeled the same. For that, you’ll need image embeddings—numerical representations of images that capture their visual content.

You can generate embeddings using a pre-trained vision model, such as CLIP or Vision Transformer (ViT). Hugging Face Transformers makes this accessible:

from transformers import AutoProcessor, AutoModel

import torch

processor = AutoProcessor.from_pretrained("openai/clip-vit-base-patch32")

model = AutoModel.from_pretrained("openai/clip-vit-base-patch32")

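def get_embedding(image):
    # Preprocess the PIL image into model-ready tensors
    inputs = processor(images=image, return_tensors="pt")
    # Inference only, so skip gradient tracking
    with torch.no_grad():
        features = model.get_image_features(**inputs)
    # Drop the batch dimension and convert to a NumPy array
    return features.squeeze().numpy()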

Now, generate an embedding for each image in your dataset:

dataset = dataset.map(lambda x: {"embedding": get_embedding(x["image"])})

Once embeddings are added, you can perform a similarity search. For example, to find images that are visually close to a query image, calculate its embedding and compare it to others using cosine similarity:

from sklearn.metrics.pairwise import cosine_similarity

import numpy as np

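# query_image can be any PIL image; as an example, use the first test image
query_image = dataset["test"][0]["image"]

query_embedding = get_embedding(query_image).reshape(1, -1)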

all_embeddings = np.stack(dataset["train"]["embedding"])

scores = cosine_similarity(query_embedding, all_embeddings)[0]

top_indices = np.argsort(scores)[-5:][::-1]

similar_images = [dataset["train"][i]["image"] for i in top_indices]

Now, you’ve found the five most visually similar images to your query. This method is useful when labels are inconsistent, missing, or too broad.
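To make the scoring step concrete, here is a small sketch of what the cosine similarity ranking computes, written in plain Python with toy 3-dimensional vectors so it runs without any dependencies. The names `cosine` and `top_k` are illustrative, and the sketch assumes no embedding is the zero vector (which would cause a division by zero). For real embeddings, the NumPy and scikit-learn version above is the better choice.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], embeddings: List[Sequence[float]], k: int) -> List[int]:
    """Indices of the k embeddings most similar to the query, best first."""
    scores = [cosine(query, e) for e in embeddings]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


# Toy "embeddings"; real image embeddings have hundreds of dimensions.
toy_embeddings = [
    [1.0, 0.0, 0.0],   # index 0: same direction as the query
    [0.0, 1.0, 0.0],   # index 1: orthogonal to the query
    [0.9, 0.1, 0.0],   # index 2: close to the query
    [-1.0, 0.0, 0.0],  # index 3: opposite direction
]
query = [2.0, 0.0, 0.0]

print(top_k(query, toy_embeddings, k=2))  # [0, 2]
```

Note that the query's length (2.0 here) does not affect the ranking: cosine similarity compares direction only, which is why it suits embeddings whose magnitudes vary.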

For large-scale datasets, you can use more efficient tools like FAISS or Annoy for faster approximate search, but the concept remains the same—compare embeddings instead of labels or file names.

Conclusion

Searching images within Hugging Face datasets is practical and efficient. With a few lines of code, you can load image datasets, filter them by labels or properties, and run similarity searches using embeddings, without dealing with folder structures, filenames, or manual parsing. Whether you’re preparing data for training or just trying to understand what’s inside a dataset, this approach keeps code, images, and logic in one place, so you can focus on the images themselves and the patterns in them.

For further reading, see Hugging Face’s official documentation.
