ChatGPT has quickly become an integral part of many professionals’ digital toolkits. It’s efficient, versatile, and incredibly helpful for tasks ranging from brainstorming to report writing. But despite its many benefits, using ChatGPT at work raises important questions about data privacy and the protection of sensitive information. With increasing reports of data leaks and unauthorized data access, understanding how to use ChatGPT responsibly has become essential.

This post outlines practical strategies you can implement to protect your privacy and secure sensitive company data when using ChatGPT in your professional activities.

1. Turn Off Chat History and Training

One of the most effective measures you can take is to disable ChatGPT’s chat history and training features. By default, ChatGPT stores your conversations, and they may be used to improve the model. Stored conversations can also be accessed for training or moderation purposes, which introduces a potential risk.

Disabling chat history reduces the chances of your data being reviewed or misused. OpenAI states that conversation data is still retained temporarily for abuse monitoring even with this feature turned off, but opting out adds an important layer of protection.

Before turning off chat history, export any data you might need for future reference. You can save important insights manually or transfer them to a secure storage solution that your organization approves.

2. Delete Conversations Regularly

Although disabling chat history is helpful, it’s also important to routinely delete your past conversations from the ChatGPT interface. Past interactions could contain inadvertent mentions of sensitive topics, internal discussions, or confidential project details.

Deleting conversations reduces the surface area for potential data breaches. You can clear all chats at once or selectively remove individual ones. This simple practice helps ensure that any sensitive information you shared doesn’t remain accessible in your account longer than necessary.

3. Avoid Sharing Confidential Information

When using ChatGPT for work, it’s essential to draw a clear line between general queries and sensitive content. Avoid sharing confidential files, client details, financial data, or intellectual property. No matter how secure an AI tool claims to be, once the data leaves your secure system, the risk of exposure increases.

Remember, a privacy policy is not a guarantee. Even anonymized data, when improperly handled, can sometimes be traced back to its source. To remain compliant with company policies and data protection laws, restrict your use of ChatGPT to low-risk and non-proprietary tasks.
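One way to make this habit harder to skip is to screen a prompt before it is pasted into ChatGPT. The snippet below is only a rough sketch: the `PATTERNS` table and the `find_sensitive` function are hypothetical names invented for this illustration, and the regular expressions catch only a few obvious cases. It is not a substitute for judgment or for your organization's data loss prevention tooling.

```python
import re

# Hypothetical patterns for illustration; real policies need a broader list.
PATTERNS = {
    "email address": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "card-like number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "confidentiality marker": re.compile(
        r"\b(?:confidential|internal only|do not distribute)\b", re.IGNORECASE
    ),
}


def find_sensitive(prompt):
    """Return the labels of every pattern that appears in the prompt."""
    return [label for label, pattern in PATTERNS.items() if pattern.search(prompt)]


if __name__ == "__main__":
    draft = "Email jane@example.com about the CONFIDENTIAL plan"
    hits = find_sensitive(draft)
    if hits:
        print("Review before sending. Found:", ", ".join(hits))
    else:
        print("No obvious sensitive patterns found.")
```

An empty result does not mean a prompt is safe to send. It only means none of these few patterns matched.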

4. Anonymize the Data You Use

If you must include work-related data in your ChatGPT prompts, anonymize it thoroughly. This means removing any identifiable information that could link the content back to an individual or organization.

Here are a few anonymization techniques you can use:

- Attribute Suppression: Eliminate unnecessary data fields that serve no purpose in your query.

- Pseudonymization: Substitute real names or identifiers with fictional placeholders.

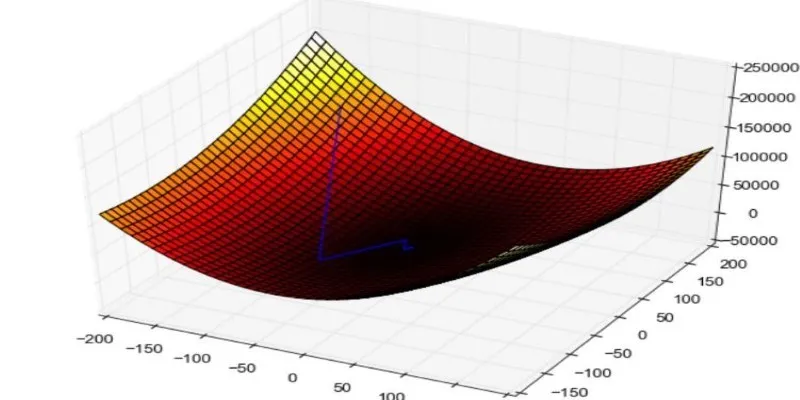

- Data Perturbation: Slightly adjust numerical values to mask the real figures while preserving analytical value.

- Generalization: Replace specific values with broader categories.

- Character Masking: Partially obscure sensitive fields like phone numbers or IDs.
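The five techniques above can be sketched in a few lines of Python. This is a minimal illustration and not a vetted anonymization library: every function name, the sample record, and the salt value are assumptions made up for the example. The salted hash is just one possible way to produce stable placeholders, and the salt must be kept secret for it to offer any protection.

```python
import hashlib
import random


def suppress(record, allowed_fields):
    """Attribute suppression: keep only the fields the query needs."""
    return {k: v for k, v in record.items() if k in allowed_fields}


def pseudonymize(value, salt):
    """Pseudonymization: swap an identifier for a stable placeholder."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return "person_" + digest[:8]


def perturb(amount, max_pct=0.05, rng=random):
    """Data perturbation: shift a number by up to +/- max_pct of its value."""
    return round(amount * (1 + rng.uniform(-max_pct, max_pct)), 2)


def generalize_age(age, width=10):
    """Generalization: replace an exact age with a range such as 30-39."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def mask(value, visible=4, mask_char="*"):
    """Character masking: hide all but the last `visible` characters."""
    if len(value) <= visible:
        return mask_char * len(value)
    return mask_char * (len(value) - visible) + value[-visible:]


if __name__ == "__main__":
    # Fictional sample record used only for demonstration.
    record = {"name": "Jane Doe", "age": 37, "salary": 82000.0,
              "phone": "555-867-5309", "notes": "internal remark"}
    safe = suppress(record, {"name", "age", "salary", "phone"})
    safe["name"] = pseudonymize(safe["name"], salt="keep-this-secret")
    safe["age"] = generalize_age(safe["age"])
    safe["salary"] = perturb(safe["salary"])
    safe["phone"] = mask(safe["phone"])
    print(safe)
```

Which techniques to combine depends on what the prompt needs. For a question about salary bands, for example, the generalized age and perturbed salary may be enough, and the name and phone fields can be suppressed entirely.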

While anonymization isn’t foolproof, it does reduce the risk of unintended data disclosure, especially when used in combination with other safeguards.

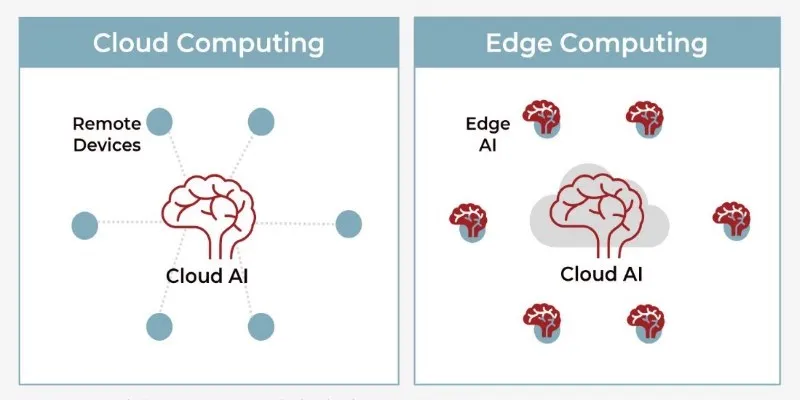

5. Limit Access to ChatGPT in Your Organization

For companies that allow employees to use ChatGPT, implementing access controls is crucial. Only authorized individuals should be able to interact with the tool in a work context, and even then, access should be limited based on the employee’s role.

Consider implementing role-based access controls (RBAC), which grant permissions based on job functions. This reduces the risk of accidental data exposure and ensures that only the necessary personnel are using AI tools with sensitive content. It’s also wise to perform regular access reviews and immediately revoke privileges when an employee changes roles or leaves the organization.
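As a rough illustration of the RBAC idea, the sketch below maps roles to the data classifications they may include in a prompt. The roles, the classification labels, and the `may_submit` function are all hypothetical. In practice this kind of policy is usually enforced through your identity provider or the admin controls of the AI tool rather than in application code.

```python
from enum import Enum


class Role(Enum):
    ENGINEER = "engineer"
    ANALYST = "analyst"
    CONTRACTOR = "contractor"


# Hypothetical policy: data classifications each role may include in a prompt.
ROLE_PERMISSIONS = {
    Role.ENGINEER: {"public", "internal"},
    Role.ANALYST: {"public"},
    Role.CONTRACTOR: set(),  # no AI tool access in a work context
}


def may_submit(role, data_classification):
    """Return True if the role may send data of this classification."""
    return data_classification in ROLE_PERMISSIONS.get(role, set())


if __name__ == "__main__":
    print(may_submit(Role.ENGINEER, "internal"))
    print(may_submit(Role.ANALYST, "internal"))
```

Keeping the policy in a single table makes access reviews simpler: revoking access when someone changes roles means updating one entry rather than hunting through scattered checks.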

6. Be Cautious With Third-Party Integrations

Many third-party applications and browser extensions promise to enhance your ChatGPT experience. However, these tools often introduce new security and privacy risks, especially if they request access to your data or require additional permissions.

Before installing or using any third-party integration:

- Investigate the developer’s reputation.

- Read the privacy policy thoroughly.

- Confirm that data practices align with your organization’s compliance standards.

Avoid tools that seem suspicious, request excessive access, or lack transparency. Your workplace privacy is too important to compromise for minor convenience gains.

7. Understand ChatGPT’s Privacy Policy

Take the time to read and understand the privacy policy and terms of service associated with ChatGPT. Knowing how your data is handled, stored, and potentially shared helps you make informed decisions about what to share and what to avoid.

OpenAI provides transparency about data use, but users must still exercise caution. Policies can evolve, and understanding current practices is the first step toward using AI tools responsibly. Regularly reviewing policy updates ensures you stay aligned with the latest data protection measures.

8. Secure Your Account

Basic digital hygiene plays a significant role in maintaining your privacy. Use strong, unique passwords and enable two-factor authentication (2FA) on your ChatGPT account. Two-factor authentication in particular makes unauthorized access much harder, even if your password is compromised.

Additionally, never share your login information, and regularly monitor your account for any unusual activity. A proactive approach to account security minimizes the chances of a breach. Consider setting up alerts for suspicious logins or password changes, and review your login history periodically to spot anomalies. Staying vigilant not only protects your personal information but also shields your organization from potential data leaks.
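For the strong, unique password mentioned above, a password manager is usually the most practical choice. If you ever need to generate one yourself, the sketch below uses Python's standard `secrets` module, which is designed for security-sensitive randomness. The function name `generate_password` and the 12-character minimum are assumptions chosen for this example, not an official requirement.

```python
import secrets
import string


def generate_password(length=20):
    """Build a random password from letters, digits, and punctuation."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    return "".join(secrets.choice(alphabet) for _ in range(length))


if __name__ == "__main__":
    print(generate_password())
```

Generate a separate password for each account, and store it in a password manager your organization approves rather than in a document or chat.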

Conclusion

ChatGPT is a powerful tool with enormous potential to improve workplace productivity. However, with that power comes the responsibility to use it wisely. From disabling chat history to anonymizing data and vetting third-party tools, there are several practical steps you can take to protect your privacy and safeguard sensitive work information.

There is no completely secure way to share data with any AI model. However, by applying these privacy best practices, you can significantly reduce your risk exposure and help maintain the confidentiality of your workplace data.
