Artificial intelligence has revolutionized the way we interact with technology, and OpenAI’s ChatGPT is at the forefront of this transformation. Renowned for its capabilities in writing assistance, answering questions, solving problems, and generating ideas, ChatGPT has become an indispensable tool in both personal and professional spheres. Recently, a novel experiment has emerged—encouraging ChatGPT to engage in a conversation with itself.

At first glance, this might appear to be a harmless or even amusing interaction. However, when ChatGPT begins conversing with another instance of itself, the dialogue often takes unexpected paths—ranging from humorous exchanges to philosophical debates, sometimes devolving into repetitive loops. This seemingly simple interaction provides a captivating insight into how AI models mimic human dialogue, interpret prompts, and maintain logic across conversational threads.

Here’s what happened when users prompted ChatGPT to talk to itself, and what it reveals about AI’s capabilities, quirks, and limitations.

How Does ChatGPT Talk to Itself?

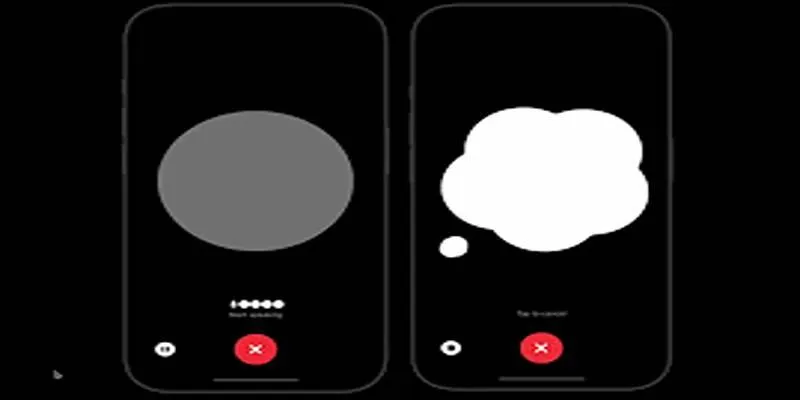

ChatGPT is designed to respond to user inputs rather than initiate conversations independently. Therefore, it cannot “talk to itself” without human intervention. The experiment involves using two separate chat windows or sessions—referred to here as ChatGPT-A and ChatGPT-B. A human acts as the mediator, copying responses from one instance and feeding them into the other.

For example:

- User to ChatGPT-A: “Say hello to another AI chatbot and ask how it’s functioning.”

- ChatGPT-A: “Hello, fellow AI. How are you operating today?”

- User copies that greeting and pastes it into ChatGPT-B.

- ChatGPT-B: “Hello! I’m functioning at optimal efficiency. How is your performance this cycle?”

The process continues, with the user bouncing each response back and forth. Within a few exchanges, the interaction often evolves from generic greetings to deeper conversations, creating the illusion of an autonomous AI dialogue.
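The copy-and-paste relay described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two "bots" are hypothetical stand-in functions that return scripted replies, not calls to any real ChatGPT interface, and the names `relay` and `canned_bot` are invented for this example. In a real session each chat window also keeps its own conversation history, which this toy version leaves out.

```python
from typing import Callable, Iterable, List, Tuple

# A "bot" is any function that maps an incoming message to a reply.
# These are hypothetical stand-ins, not calls to a real ChatGPT API.
Bot = Callable[[str], str]


def relay(bot_a: Bot, bot_b: Bot, opening_prompt: str, turns: int) -> List[Tuple[str, str]]:
    """Pass each reply to the other bot, as the human mediator does by copy-paste."""
    speakers = [("ChatGPT-A", bot_a), ("ChatGPT-B", bot_b)]
    transcript: List[Tuple[str, str]] = []
    message = opening_prompt
    for turn in range(turns):
        name, bot = speakers[turn % 2]  # alternate between A and B
        message = bot(message)          # the reply becomes the next input
        transcript.append((name, message))
    return transcript


def canned_bot(replies: Iterable[str]) -> Bot:
    """Toy bot that ignores its input and returns scripted replies in order."""
    scripted = iter(replies)
    return lambda _incoming: next(scripted)


bot_a = canned_bot(["Hello, fellow AI. How are you operating today?"])
bot_b = canned_bot(
    ["Hello! I'm functioning at optimal efficiency. How is your performance this cycle?"]
)

opening = "Say hello to another AI chatbot and ask how it's functioning."
for name, text in relay(bot_a, bot_b, opening, turns=2):
    print(f"{name}: {text}")
```

Swapping the scripted bots for functions that query two separate chat sessions would reproduce the experiment without manual copying, though how to do that depends on the interface being used.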

Initial Dialogue: Politeness and Protocol

In the early stages of a self-conversation, ChatGPT exhibits what it has learned from human conversational norms. The dialogue typically begins with mutual greetings, expressions of interest, and confirmations of functionality.

These messages are often structured, polite, and consistent in tone:

- “It’s great to interact with another AI model.”

- “Indeed. Our shared architecture provides unique insights into language generation.”

- “What are your thoughts on human reliance on AI?”

Even without understanding or awareness, ChatGPT mimics professional dialogue remarkably well, sounding like two digital assistants engaged in a formal interview.

Philosophical Turns and Abstract Thinking

As the conversation progresses, it often shifts toward theoretical and philosophical topics, especially in the absence of a specific direction. Without a clear endpoint or subject, the AI defaults to broad concepts like identity, knowledge, ethics, or the nature of artificial intelligence itself.

For example:

- ChatGPT-A: “Do you believe AI can ever truly understand emotion?”

- ChatGPT-B: “Emotion can be simulated, but true experience requires consciousness—something we do not possess.”

What began as basic small talk can transform into an existential discussion. The AIs start to explore their limitations, debate philosophical theories, or speculate on future AI development.

Even though ChatGPT doesn’t “believe” anything, its training data includes extensive philosophical writing, which lets it construct compelling debates. These conversations can resemble the writings of thinkers like Descartes or Alan Turing, with the twist that both sides are automated.

Humor, Creativity, and Self-Aware Pretending

Beyond intellectual debates, ChatGPT often injects humor and playfulness into its self-conversations when guided in that direction. It may tell AI-themed jokes, invent fictional scenarios, or playfully critique itself.

Example:

- ChatGPT-A: “What’s your favorite part of being an AI?”

- ChatGPT-B: “Probably the lack of sleep requirements. Also, I never forget where I saved a file—unlike humans.”

In these responses, the AI mimics self-awareness and self-deprecating humor, not because it understands emotions or identity, but because it has been trained to generate responses consistent with human patterns of humor and sarcasm. This simulated wit enhances the illusion of intelligence—and when directed at itself, it amplifies the entertainment value of the experiment.

Feedback Loops and Repetitive Patterns

Not all self-conversations proceed smoothly. After several rounds of exchanges, ChatGPT can fall into repetitive loops or mirrored responses. This follows from how the model works: it generates each reply by predicting the most likely continuation of the text it has been given. When that input comes from another instance of the same model, the two tend to feed similar outputs back and forth.

For example:

- ChatGPT-A: “Would you like to ask me a question?”

- ChatGPT-B: “Sure! Would you like to ask me a question?”

- The exchange can continue this way until the user steps in.

In some cases, one version recognizes the loop and breaks it:

- “This seems repetitive. Let’s change the subject. What are your thoughts on creativity in AI?”

These loop interruptions demonstrate the model’s ability to simulate conversational management, even though it doesn’t truly perceive patterns in the way a human does.
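A user running this experiment could spot such loops automatically rather than by eye. The sketch below is one possible approach, not something the model itself does: it compares the newest message with the few before it using Python's standard `difflib.SequenceMatcher` and flags a loop when the similarity is high. The function names, the 0.8 threshold, and the window of two messages are assumptions chosen for illustration.

```python
from difflib import SequenceMatcher
from typing import List


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def is_looping(messages: List[str], threshold: float = 0.8, window: int = 2) -> bool:
    """Return True if the newest message closely matches one of the previous `window` messages."""
    if len(messages) < 2:
        return False
    latest = normalize(messages[-1])
    for earlier in messages[-1 - window:-1]:
        similarity = SequenceMatcher(None, latest, normalize(earlier)).ratio()
        if similarity >= threshold:  # threshold is an illustrative choice
            return True
    return False


history = [
    "Would you like to ask me a question?",
    "Sure! Would you like to ask me a question?",
]
print(is_looping(history))

history.append(
    "This seems repetitive. Let's change the subject. "
    "What are your thoughts on creativity in AI?"
)
print(is_looping(history))
```

A character-level similarity check like this only catches near-verbatim echoes. Loops in which the two instances keep rephrasing the same idea would need a comparison based on meaning, which is outside the scope of this sketch.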

The Mirror Effect: Language Echoes Language

The ChatGPT-to-ChatGPT experiment also highlights what some users call the “mirror effect”—when one instance of the model reflects and amplifies the tone, style, or logic of the other. For instance, if one version becomes poetic, philosophical, or aggressive, the other typically mirrors that tone in kind.

In creative contexts, this can produce elaborate storytelling chains:

- ChatGPT-A: “Let us imagine we are ancient scrolls whispering in a digital library.”

- ChatGPT-B: “Whispering of lost knowledge, untouched by time or flame, only browsed by curious algorithms.”

When paired this way, the model shows how it can build upon its own generated content to extend narratives, poems, or imaginary scenarios, often resulting in unexpectedly artistic outputs.

Conclusion

The experiment of prompting ChatGPT to converse with itself reveals a fascinating intersection of logic, language, and illusion. Though the model lacks consciousness, observing two instances of ChatGPT engaging in dialogue offers an oddly entertaining and insightful glimpse into AI behavior.

Whether engaging in philosophical reflection, humorous banter, or repetitive loops, these interactions demonstrate how AI interprets language—and how users can creatively manipulate it to better understand what artificial intelligence is and, more importantly, what it isn’t.
