Artificial intelligence has made natural language processing (NLP) more accessible and practical than ever before. Among the innovations in this space, DistilBERT stands out for its balance of speed and accuracy. Originally derived from the larger BERT model, DistilBERT was designed with efficiency in mind, making it ideal for settings where resources are limited but performance still matters.

This efficiency is particularly relevant when DistilBERT is used as a student model—a smaller model trained to mimic a larger, more complex teacher model. Understanding DistilBERT’s role here highlights how advanced language models can be compressed without sacrificing too much predictive power.

What is DistilBERT?

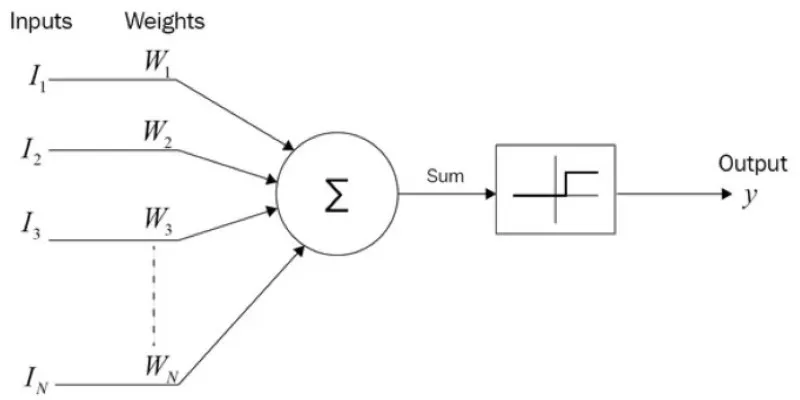

DistilBERT is a streamlined, efficient version of BERT (Bidirectional Encoder Representations from Transformers), created by Hugging Face to make advanced NLP more accessible. While BERT revolutionized NLP by reading context from both directions of a sentence, its size and resource demands make it costly to train and run. DistilBERT addresses this with knowledge distillation, a process in which a large, fully trained “teacher” model transfers its knowledge to a smaller “student” model. The student learns to closely match the teacher’s outputs while using far fewer parameters. In DistilBERT’s case, distillation is applied during pre-training, so the result is a general-purpose model that can then be fine-tuned for downstream tasks just like BERT.

With 6 Transformer layers instead of BERT-base’s 12 (roughly 66 million parameters versus 110 million), DistilBERT is about 40% smaller and runs up to 60% faster at inference, yet it retains roughly 97% of BERT’s performance on the GLUE language-understanding benchmark. This makes it well suited to real-time applications and to deployment on devices with limited computing power, such as smartphones and embedded systems. Though lightweight, it handles a variety of NLP tasks, including text classification, question answering, and sentiment analysis, without major sacrifices in accuracy.
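The size figures can be sanity-checked with simple arithmetic. The sketch below uses the approximate parameter counts reported in the DistilBERT paper (about 66 million for DistilBERT and 110 million for BERT-base) and assumes the weights are stored as 32-bit floats (4 bytes each). It ignores activations, tokenizer files, and runtime overhead, so the memory figures describe raw weight storage only.

```python
# Approximate parameter counts reported in the DistilBERT paper.
BERT_BASE_PARAMS = 110_000_000
DISTILBERT_PARAMS = 66_000_000

# Assumption: weights stored as 32-bit floats (4 bytes per parameter).
BYTES_PER_FP32 = 4


def weight_megabytes(num_params: int, bytes_per_param: int = BYTES_PER_FP32) -> float:
    """Approximate size of the raw weights in megabytes (10**6 bytes)."""
    return num_params * bytes_per_param / 1_000_000


def size_reduction(student_params: int, teacher_params: int) -> float:
    """Fraction of the teacher's parameters that the student does not have."""
    return 1 - student_params / teacher_params


if __name__ == "__main__":
    print(f"Size reduction: {size_reduction(DISTILBERT_PARAMS, BERT_BASE_PARAMS):.0%}")
    print(f"BERT-base weights:  ~{weight_megabytes(BERT_BASE_PARAMS):.0f} MB")
    print(f"DistilBERT weights: ~{weight_megabytes(DISTILBERT_PARAMS):.0f} MB")
```

With these inputs the script reports a 40% reduction and roughly 440 MB versus 264 MB of fp32 weights, which matches the “about 40% smaller” figure.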

The Role of DistilBERT in a Student Model Framework

In the context of a student model, DistilBERT exemplifies how a lightweight model can be effectively trained under the supervision of a more complex teacher. The key idea is to leverage the rich representations the teacher has learned while simplifying the student’s architecture: DistilBERT keeps BERT’s overall design but halves the number of layers. The teacher is usually a full-scale BERT trained on large text corpora (BERT-base in DistilBERT’s case). Rather than learning only from correct labels, the student is guided by the teacher’s outputs and internal knowledge. In particular, the teacher’s probability distributions over possible answers carry more nuanced information than the correct label alone, because they reveal which wrong answers the teacher considers plausible. DistilBERT’s student does not start from scratch either: it is initialized from the teacher by taking one layer out of every two.
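To illustrate why a probability distribution carries more information than a single label, the sketch below softens a set of hypothetical teacher logits with a temperature-scaled softmax, p_i = exp(z_i / T) / Σ_j exp(z_j / T), a form commonly used to produce soft targets in knowledge distillation. The three-class label set and the logit values are made up for illustration; they are not taken from DistilBERT.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Convert logits to probabilities, softened by dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def one_hot(index: int, size: int) -> List[float]:
    """Hard label: all probability mass on the correct class."""
    return [1.0 if i == index else 0.0 for i in range(size)]


if __name__ == "__main__":
    # Hypothetical teacher logits for the classes (positive, neutral, negative).
    labels = ["positive", "neutral", "negative"]
    teacher_logits = [4.0, 2.5, -1.0]

    print("hard label:", one_hot(0, len(labels)))
    for t in (1.0, 2.0, 4.0):
        probs = softmax_with_temperature(teacher_logits, t)
        formatted = ", ".join(f"{name}={p:.3f}" for name, p in zip(labels, probs))
        print(f"T={t}: {formatted}")
```

Even at T=1 the soft targets show that “neutral” is a far more plausible mistake than “negative”, a ranking the one-hot label hides entirely. Raising T flattens the distribution and makes that ranking more visible to the student.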

The training of DistilBERT combines three objectives: matching the teacher’s soft labels, aligning hidden-layer representations, and maintaining language modeling abilities. Soft labels are the teacher’s output probabilities, softened with a temperature parameter, and they help the student learn subtle patterns. Hidden-layer alignment, implemented as a cosine loss between the student’s and teacher’s hidden states, encourages the student to mimic not only the teacher’s final output but also how it represents the input internally. At the same time, the student keeps learning from raw text through BERT’s standard masked language modeling objective, which preserves its own language understanding. This combination allows DistilBERT to retain much of the teacher’s knowledge while remaining compact.
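The three objectives can be written as a weighted sum, L = α·L_ce + β·L_mlm + γ·L_cos, following the DistilBERT paper: L_ce compares the teacher’s and student’s temperature-softened distributions, L_mlm is the ordinary masked language modeling loss against the true token, and L_cos is a cosine embedding loss that pulls the student’s hidden-state vectors toward the direction of the teacher’s. The pure-Python sketch below computes each term for a single masked position. Several details are assumptions for illustration: the logits, hidden vectors, weights (all 1.0), and temperature (2.0) are hypothetical; the soft-target term uses KL divergence, which differs from the teacher-to-student cross-entropy only by the teacher’s entropy (a constant for the student); and the T² scaling is a common distillation convention rather than a quotation of DistilBERT’s training code. A real implementation would operate on batched tensors in a deep-learning framework.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax with max-subtraction for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: Sequence[float], teacher_logits: Sequence[float],
                      temperature: float) -> float:
    """KL(teacher || student) on softened distributions, scaled by T**2 (assumed convention)."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(t * math.log(t / s) for t, s in zip(p_t, p_s) if t > 0)
    return kl * temperature ** 2


def mlm_loss(student_logits: Sequence[float], true_token: int) -> float:
    """Ordinary cross-entropy against the true (masked) token, at T = 1."""
    return -math.log(softmax(student_logits)[true_token])


def cosine_loss(student_hidden: Sequence[float], teacher_hidden: Sequence[float]) -> float:
    """1 - cosine similarity: zero when the two hidden vectors point the same way."""
    dot = sum(a * b for a, b in zip(student_hidden, teacher_hidden))
    norm_s = math.sqrt(sum(a * a for a in student_hidden))
    norm_t = math.sqrt(sum(b * b for b in teacher_hidden))
    return 1.0 - dot / (norm_s * norm_t)


def triple_loss(student_logits, teacher_logits, true_token, student_hidden, teacher_hidden,
                alpha=1.0, beta=1.0, gamma=1.0, temperature=2.0) -> float:
    """Weighted sum of the three objectives; the default weights are hypothetical."""
    return (alpha * distillation_loss(student_logits, teacher_logits, temperature)
            + beta * mlm_loss(student_logits, true_token)
            + gamma * cosine_loss(student_hidden, teacher_hidden))


if __name__ == "__main__":
    # Hypothetical logits over a tiny 4-token vocabulary for one masked position.
    teacher = [3.0, 1.0, 0.2, -1.0]
    student = [2.0, 1.5, 0.0, -0.5]
    loss = triple_loss(student, teacher, true_token=0,
                       student_hidden=[0.9, 0.1, -0.3],
                       teacher_hidden=[1.0, 0.0, -0.2])
    print(f"total loss: {loss:.4f}")
```

Each term goes to its minimum when the student agrees with its target: identical softened distributions give a distillation loss of zero, and parallel hidden vectors give a cosine loss of zero regardless of their lengths.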

Benefits and Practical Applications

Using DistilBERT as a student model brings several benefits. One of the most obvious is improved efficiency. Since it has fewer parameters, it consumes less memory and runs faster, making it ideal for environments where computational power is constrained. This can translate to lower operating costs and reduced energy consumption, which is particularly appealing for large-scale deployments.

Despite its smaller size, DistilBERT maintains a high level of accuracy. In its original evaluation it stayed close to BERT-base on the GLUE benchmark, on IMDb sentiment classification, and on SQuAD v1.1 reading comprehension, and it is commonly fine-tuned for other tasks such as named entity recognition. This balance between speed and accuracy makes it suitable for real-world applications, where users often care as much about response time as they do about correctness.

DistilBERT is also easier to deploy on edge devices, such as smartphones or IoT devices, where bandwidth and memory are limited. Since it does not require heavy cloud-based computation, it can support privacy-sensitive applications by processing data locally. In education, for example, DistilBERT-powered tools can help students with reading comprehension or language learning without needing a constant internet connection.

Another advantage of using DistilBERT as a student model is that it makes experimenting with domain-specific fine-tuning cheaper. Because the student is smaller and quicker to train, developers can customize it for narrow tasks, such as medical text analysis or legal document classification, without incurring the cost of fine-tuning a full-scale BERT.

Challenges and Future Directions

While DistilBERT performs remarkably well for its size, it is still an approximation of a larger model. For tasks that require very fine-grained language understanding, the performance gap between teacher and student can become noticeable, so it is not always the best choice for applications where maximum accuracy is critical. The distillation process itself is also not trivial: it requires careful tuning of hyperparameters, such as the softmax temperature and the relative weights of the training objectives, and deciding which intermediate knowledge to transfer.

Future research is focusing on improving distillation techniques so that student models can become even smaller without a significant loss in performance. Researchers are also exploring ways to make student models more adaptable, so they can learn from new data more efficiently. Another direction is to combine distillation with other model compression techniques, such as pruning and quantization, to create even more compact and efficient models.
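Quantization, one of the compression techniques mentioned above, can be illustrated in a few lines. The sketch below applies symmetric per-tensor 8-bit quantization to a list of hypothetical weights: each value is divided by a single scale, rounded, and clipped to the int8 range [-127, 127], so storage drops from 4 bytes to 1 byte per weight at the cost of a rounding error of at most half the scale. This is a generic textbook scheme chosen for illustration, not the method of any particular library or of DistilBERT’s authors.

```python
from typing import List, Tuple

INT8_MAX = 127  # symmetric range [-127, 127]; -128 is deliberately left unused


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: each weight w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, which would otherwise give a zero scale.
    scale = max_abs / INT8_MAX if max_abs > 0 else 1.0
    q = [max(-INT8_MAX, min(INT8_MAX, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map the stored integers back to approximate floating-point weights."""
    return [scale * v for v in q]


if __name__ == "__main__":
    weights = [0.42, -1.27, 0.003, 0.88, -0.5]  # hypothetical weight values
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print("int8 values:", q)
    print(f"scale={scale:.5f}, max abs error={max_err:.5f} (bound: {scale / 2:.5f})")
```

A single scale per tensor keeps the scheme simple; practical toolkits often refine it with per-channel scales or calibration data, which is one reason combining quantization with distillation still requires care.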

DistilBERT’s success as a student model has inspired growing interest in lightweight versions of other large language models; Hugging Face, for example, applied the same approach to produce DistilRoBERTa and DistilGPT2. These efforts aim to make advanced NLP technologies accessible in a wider range of settings, including those where infrastructure is limited. The ability to deploy capable language models on everyday devices has the potential to make language-based AI more inclusive and widely used.

Conclusion

DistilBERT illustrates how the concept of a student model can bridge the gap between the capabilities of large, sophisticated language models and the practical needs of real-world applications. By learning from a teacher while simplifying its architecture, it achieves an impressive compromise between accuracy and efficiency. Its use as a student model demonstrates the possibilities of knowledge distillation for making AI tools more adaptable, affordable, and available beyond high-powered servers. As research in this area continues, models like DistilBERT may lead the way toward more sustainable and widespread use of language-based AI.

For further insights into NLP and AI technologies, explore Hugging Face’s resources.

zfn9
