Training a large language model might sound daunting, but with a framework like Megatron-LM it’s manageable, even outside big tech labs, as long as you have access to a multi-GPU cluster. Developed by NVIDIA, Megatron-LM is designed for training massive transformer models across many GPUs. It’s built on PyTorch and supports model parallelism, splitting a single model across several GPUs, which makes it efficient for large-scale training.

Whether you’re starting from scratch or fine-tuning an existing model, understanding how to use Megatron-LM properly helps you train models capable of handling complex language tasks. This guide offers a clear and direct walkthrough of the setup, data preparation, configuration, and execution process.

Setting Up the Environment and Requirements

To begin, you’ll need to prepare a compatible system. Megatron-LM is built on PyTorch and runs on NVIDIA GPUs, so install a supported PyTorch version with CUDA support, along with NVIDIA’s Apex, which provides fused kernels used in mixed-precision training. These are crucial for speed and memory efficiency. NVIDIA recommends running Megatron-LM inside an NGC PyTorch container, which ships with most of these dependencies. Otherwise, clone the Megatron-LM repository from GitHub, create a virtual environment, and install dependencies such as Ninja, mpi4py, and SentencePiece. If you build Apex from source, enable its --cpp_ext and --cuda_ext build options so its C++ and CUDA extensions are compiled.
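A quick pre-flight check can confirm that the packages above are importable from the active environment before you launch a multi-GPU job. The sketch below uses only the standard library; the module names are the usual import names for those packages and are assumptions about your setup. It checks presence only: it cannot tell whether Apex’s CUDA extensions were actually compiled.

```python
import importlib.util

# Usual import names for the dependencies discussed above (assumed; adjust to your setup).
REQUIRED_MODULES = ["torch", "apex", "ninja", "mpi4py", "sentencepiece"]


def check_modules(names):
    """Return a dict mapping each top-level module name to True if it is importable."""
    status = {}
    for name in names:
        # find_spec returns None when the module cannot be found on sys.path.
        status[name] = importlib.util.find_spec(name) is not None
    return status


if __name__ == "__main__":
    for name, ok in check_modules(REQUIRED_MODULES).items():
        print(f"{name:15s} {'found' if ok else 'MISSING'}")
```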

Megatron-LM is designed for multi-GPU training. Small experiments can run on a single GPU, but testing with at least four GPUs lets you exercise its parallelism features. For full-scale training, especially models with over a billion parameters, you’ll need dozens of GPUs, ideally connected with high-bandwidth links such as NVLink within a node and InfiniBand between nodes. High VRAM (16 GB or more per GPU) and efficient data pipelines are also necessary.
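The memory requirement follows from simple arithmetic. A widely used rule of thumb is that mixed-precision training with Adam stores about 16 bytes per parameter (half-precision weights and gradients, FP32 master weights, and two FP32 Adam moments) before counting activations. The sketch below applies that rule; the byte counts are an approximation rather than Megatron-LM’s exact accounting, and options that shard optimizer state across GPUs reduce the total further.

```python
# Approximate per-parameter memory for mixed-precision training with Adam.
# A common rule of thumb, not Megatron-LM internals; excludes activations,
# temporary buffers, and allocator fragmentation.
BYTES_PER_PARAM = {
    "half_precision_weights": 2,  # FP16/BF16 copy used in forward/backward
    "half_precision_grads": 2,    # gradients in FP16/BF16
    "fp32_master_weights": 4,     # FP32 copy updated by the optimizer
    "adam_first_moment": 4,       # FP32 running mean of gradients
    "adam_second_moment": 4,      # FP32 running mean of squared gradients
}


def model_state_gib(num_params, model_parallel_size=1):
    """Estimate weight + gradient + optimizer memory per GPU in GiB.

    Assumes model state is split evenly across `model_parallel_size` GPUs
    (tensor x pipeline) and fully replicated across data-parallel ranks.
    """
    total_bytes = num_params * sum(BYTES_PER_PARAM.values())
    return total_bytes / model_parallel_size / 2**30


if __name__ == "__main__":
    for params in (350e6, 1.3e9, 8.3e9):
        print(f"{params / 1e9:.2f}B params: "
              f"{model_state_gib(params):.1f} GiB on one GPU, "
              f"{model_state_gib(params, 8):.1f} GiB per GPU with 8-way model parallelism")
```

By this estimate a 1.3B-parameter model needs about 19 GiB for model state alone, which already exceeds a single 16 GB GPU; that is where model parallelism comes in.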

Basic familiarity with distributed training concepts is highly beneficial. Running jobs involves writing and adjusting shell scripts, passing arguments on the command line, and sometimes modifying configuration files. The environment must be stable, since large-model training often runs for days or weeks.
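Much of that distributed-training background comes down to how each process learns its place in the job. Megatron-LM is typically launched with a PyTorch launcher such as torchrun, which starts one process per GPU and passes each one its identity through environment variables (RANK, WORLD_SIZE, LOCAL_RANK, MASTER_ADDR, MASTER_PORT). The helper below, whose names are hypothetical, simply reads those variables with single-process defaults.

```python
import os
from dataclasses import dataclass


@dataclass
class DistInfo:
    rank: int         # global rank of this process across all nodes
    world_size: int   # total number of processes (usually one per GPU)
    local_rank: int   # index of this process's GPU on its own node
    master_addr: str  # host of rank 0, used for rendezvous
    master_port: int


def read_dist_env(env=None):
    """Read the variables torchrun sets for each worker process.

    Falls back to single-process defaults so the same code also works
    when a script is started with plain `python`.
    """
    env = os.environ if env is None else env
    return DistInfo(
        rank=int(env.get("RANK", 0)),
        world_size=int(env.get("WORLD_SIZE", 1)),
        local_rank=int(env.get("LOCAL_RANK", 0)),
        master_addr=env.get("MASTER_ADDR", "localhost"),
        master_port=int(env.get("MASTER_PORT", 29500)),
    )


if __name__ == "__main__":
    print(read_dist_env())
```

For example, torchrun --nnodes=2 --nproc_per_node=4 starts eight processes in total, so each one sees WORLD_SIZE=8 and a LOCAL_RANK between 0 and 3.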

Preparing the Training Data

The model’s performance depends heavily on the training data. Megatron-LM requires tokenized input in a binary format, so begin by collecting clean text data from sources such as public datasets, curated web content, or proprietary corpora. The data should be diverse yet relevant to the tasks the model will perform.
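Megatron-LM’s tools/preprocess_data.py script expects loose JSON input: one JSON object per line, with the document text under the "text" key by default. The sketch below writes that format from a list of raw documents and, as one simple cleaning step, drops empty documents and exact duplicates (compared after collapsing whitespace). The function names are illustrative; production pipelines usually add near-duplicate detection and quality filtering.

```python
import hashlib
import json


def dedup_key(text):
    """Hash of the text with whitespace collapsed, so formatting-only differences match."""
    normalized = " ".join(text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def write_loose_json(documents, path):
    """Write one {"text": ...} object per line, skipping empty and exact-duplicate docs.

    The stored text keeps its original internal formatting (only stripped);
    normalization is used for the duplicate check only. Returns the count written.
    """
    seen = set()
    written = 0
    with open(path, "w", encoding="utf-8") as f:
        for doc in documents:
            text = doc.strip()
            if not text:
                continue  # skip empty or whitespace-only documents
            key = dedup_key(text)
            if key in seen:
                continue  # exact duplicate after whitespace normalization
            seen.add(key)
            f.write(json.dumps({"text": text}, ensure_ascii=False) + "\n")
            written += 1
    return written
```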

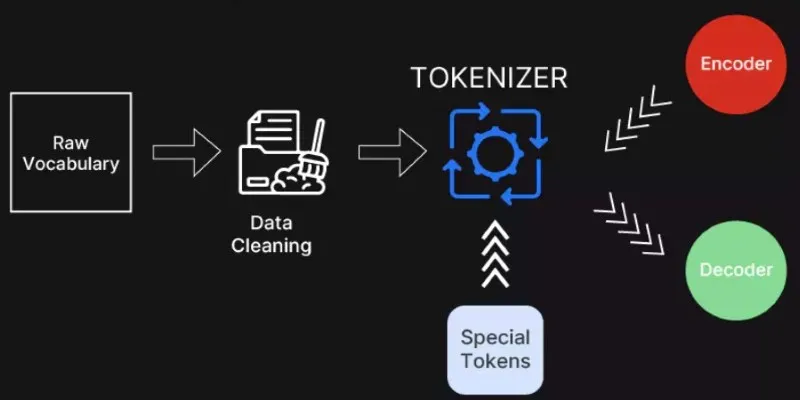

Use a tokenizer such as SentencePiece or GPT-2’s byte-level BPE tokenizer to convert the text into tokens. Megatron-LM’s tools/preprocess_data.py script handles both tokenization and formatting: it reads raw text stored as loose JSON (one JSON object per line), tokenizes it, and writes the result as a .bin file of token IDs plus an .idx index file. The data loader memory-maps these files, which gives fast access to any document during training.
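A typical call to tools/preprocess_data.py for the GPT-2 BPE tokenizer can be assembled as below. The flags mirror the example in the Megatron-LM README, but flag names change between releases, so confirm them with --help for your checkout; the file names are placeholders. Building the command as a list avoids shell-quoting mistakes if you launch it with subprocess.

```python
import shlex
import sys


def preprocess_command(input_path, output_prefix, vocab_file, merge_file, workers=8):
    """Assemble a tools/preprocess_data.py call for GPT-2 BPE tokenization.

    Paths are placeholders; verify the flags against your Megatron-LM version.
    """
    return [
        sys.executable, "tools/preprocess_data.py",
        "--input", input_path,             # loose JSON, one document per line
        "--output-prefix", output_prefix,  # prefix for the .bin/.idx outputs
        "--tokenizer-type", "GPT2BPETokenizer",
        "--vocab-file", vocab_file,
        "--merge-file", merge_file,
        "--append-eod",                    # append an end-of-document token to each doc
        "--workers", str(workers),
    ]


def expected_dataset_prefix(output_prefix, json_key="text"):
    """Prefix of the files written with default settings: <prefix>_<key>_document."""
    return f"{output_prefix}_{json_key}_document"


cmd = preprocess_command("corpus.jsonl", "my-gpt2", "gpt2-vocab.json", "gpt2-merges.txt")
print(shlex.join(cmd))
# From the Megatron-LM checkout you could run it with: subprocess.run(cmd, check=True)
```

With default settings the output files combine the prefix, the JSON key, and the document level (for example my-gpt2_text_document.bin and .idx), and that combined prefix, without the extension, is what you later pass to --data-path.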

If you’re using several datasets, you can balance them with sampling weights. This is useful when one dataset is much larger than the others and you don’t want it to dominate training. Clean, high-quality input helps the model learn structure, grammar, and semantics more effectively. Avoid excessive duplication or noise, which can degrade output quality.
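Megatron-LM’s --data-path argument accepts alternating weight and dataset-prefix pairs and samples from each dataset in proportion to its normalized weight. One common heuristic for choosing those weights, not something Megatron-LM computes for you, is to raise each dataset’s size to a power alpha below 1, which flattens the mix so a large dataset dominates less. The dataset prefixes and token counts below are hypothetical.

```python
def mixture_weights(token_counts, alpha=1.0):
    """Normalized sampling weights proportional to size**alpha.

    alpha=1.0 samples in proportion to dataset size; alpha < 1 flattens the
    distribution toward uniform (a heuristic, not Megatron-LM behavior).
    """
    scaled = {name: count ** alpha for name, count in token_counts.items()}
    total = sum(scaled.values())
    return {name: value / total for name, value in scaled.items()}


def data_path_args(weights):
    """Format weights as the alternating 'weight prefix ...' list used with --data-path."""
    args = ["--data-path"]
    for prefix, weight in weights.items():
        args += [f"{weight:.4f}", prefix]
    return args


# Hypothetical token counts for two preprocessed dataset prefixes.
counts = {"web_text_document": 90e9, "code_text_document": 10e9}
print(data_path_args(mixture_weights(counts)))             # 0.9 / 0.1 split
print(data_path_args(mixture_weights(counts, alpha=0.5)))  # 0.75 / 0.25 split
```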

The tokenizer must match the model’s vocabulary: the same tokenizer type and the same vocabulary (and, for BPE, merge) files the model was built with. You can reuse an existing vocabulary or train a new one, depending on your goals. For domain-specific tasks, a specialized tokenizer may perform better than a general-purpose one.
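Vocabulary size also interacts with tensor parallelism. Megatron-LM pads the vocabulary so the embedding matrix splits evenly across tensor-parallel ranks, rounding up to a multiple of --make-vocab-size-divisible-by (128 by default) times the tensor-parallel size. The function below re-implements that rounding as an illustration; it is not Megatron-LM’s own code.

```python
import math


def padded_vocab_size(vocab_size, divisible_by=128, tensor_parallel_size=1):
    """Round a tokenizer's vocabulary size up to an evenly divisible embedding size.

    The embedding table gets a multiple of divisible_by * tensor_parallel_size
    rows so each tensor-parallel rank holds the same number; extra rows are unused.
    """
    multiple = divisible_by * tensor_parallel_size
    return math.ceil(vocab_size / multiple) * multiple


GPT2_VOCAB = 50257  # size of the GPT-2 BPE vocabulary
print(padded_vocab_size(GPT2_VOCAB))                          # 50304
print(padded_vocab_size(GPT2_VOCAB, tensor_parallel_size=2))  # 50432
```

This is one reason checkpoints are tied to their tensor-parallel layout: changing the tensor-parallel size can change the padded embedding size.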

Model Configuration and Training

With the data ready and the environment working, it’s time to define the model architecture. Megatron-LM uses command-line arguments to set the number of layers, hidden size, number of attention heads, and sequence length; the vocabulary size is normally derived from the tokenizer files you supply. For example, a GPT-style model with 24 layers, a hidden size of 1024, and 16 attention heads is specified by passing the corresponding flags at runtime.
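The sketch below collects that example architecture in one place, emits the corresponding Megatron-LM flags (--num-layers, --hidden-size, --num-attention-heads, --seq-length, --max-position-embeddings), and estimates the parameter count with the standard approximation of about 12 * L * h^2 parameters for L transformer layers of width h, plus the token and position embedding tables. The dataclass and its method names are hypothetical, and the estimate ignores biases and layer norms.

```python
from dataclasses import dataclass


@dataclass
class GPTConfig:
    num_layers: int = 24
    hidden_size: int = 1024
    num_attention_heads: int = 16
    seq_length: int = 1024
    padded_vocab_size: int = 50304  # GPT-2 BPE vocabulary padded to a multiple of 128

    def validate(self):
        # Each attention head gets hidden_size / num_attention_heads dimensions.
        if self.hidden_size % self.num_attention_heads != 0:
            raise ValueError("hidden_size must be divisible by num_attention_heads")

    def to_args(self):
        """Megatron-LM architecture flags for this configuration."""
        self.validate()
        return [
            "--num-layers", str(self.num_layers),
            "--hidden-size", str(self.hidden_size),
            "--num-attention-heads", str(self.num_attention_heads),
            "--seq-length", str(self.seq_length),
            "--max-position-embeddings", str(self.seq_length),
        ]

    def approx_params(self):
        """~12*L*h^2 for attention (4h^2) + MLP with 4h inner size (8h^2) per layer,
        plus token and learned position embeddings. Biases/layer norms ignored."""
        h = self.hidden_size
        blocks = 12 * self.num_layers * h * h
        embeddings = (self.padded_vocab_size + self.seq_length) * h
        return blocks + embeddings


cfg = GPTConfig()
print(" ".join(cfg.to_args()))
print(f"~{cfg.approx_params() / 1e6:.0f}M parameters")
```

For the 24-layer, 1024-wide configuration this comes to about 355M parameters: roughly 302M in the transformer layers and 53M in the embeddings.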

Megatron-LM supports three main types of parallelism: data, tensor, and pipeline. Tensor parallelism splits the matrix operations within each layer across GPUs, pipeline parallelism assigns different layers to different GPUs, and data parallelism replicates the model across groups of GPUs, each processing a different slice of every batch. These methods can be combined, allowing you to scale training across many GPUs efficiently.
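The three forms of parallelism multiply: the GPUs form a grid of tensor-parallel size × pipeline-parallel size × data-parallel size. You set the first two with --tensor-model-parallel-size and --pipeline-model-parallel-size, and the data-parallel size is whatever remains. The sketch below does that arithmetic and checks a few divisibility constraints assumed here for an even split; the names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ParallelLayout:
    tensor: int
    pipeline: int
    data: int


def plan_parallelism(world_size, tensor_parallel, pipeline_parallel,
                     num_layers, num_attention_heads):
    """Derive the data-parallel size from the GPU count and check even splits."""
    model_parallel = tensor_parallel * pipeline_parallel
    if world_size % model_parallel != 0:
        raise ValueError("world_size must be divisible by tensor x pipeline size")
    # Assumed constraints for an even split of heads and layers:
    if num_attention_heads % tensor_parallel != 0:
        raise ValueError("attention heads must split evenly across tensor-parallel ranks")
    if num_layers % pipeline_parallel != 0:
        raise ValueError("layers must split evenly across pipeline stages")
    return ParallelLayout(tensor_parallel, pipeline_parallel, world_size // model_parallel)


# 32 GPUs (e.g. 4 nodes x 8): 8-way tensor parallelism, 2 pipeline stages,
# leaving 2 data-parallel replicas.
layout = plan_parallelism(32, tensor_parallel=8, pipeline_parallel=2,
                          num_layers=24, num_attention_heads=16)
print(layout)  # ParallelLayout(tensor=8, pipeline=2, data=2)
```

A common practice is to keep tensor parallelism within a node, since it communicates inside every layer and benefits most from NVLink, and to use pipeline and data parallelism across nodes.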

Training is usually launched via example shell scripts, such as pretrain_gpt.sh, which wrap the pretrain_gpt.py entry point. These scripts set key parameters like learning rate, optimizer (Adam by default, with SGD as an alternative), weight decay, gradient clipping, batch size, and parallelism strategy. Megatron-LM also supports gradient accumulation and activation checkpointing (recomputation) to conserve memory.
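Megatron-LM expresses batch size with --micro-batch-size (sequences per GPU per forward pass) and --global-batch-size (sequences per optimizer step). The number of gradient-accumulation steps follows from these two and the data-parallel size. The sketch below shows that arithmetic; the function names and example values are illustrative.

```python
def num_micro_batches(global_batch_size, micro_batch_size, data_parallel_size):
    """Gradient-accumulation steps per optimizer step.

    Each data-parallel replica runs micro-batches of micro_batch_size sequences
    and accumulates gradients until the global batch has been processed.
    """
    per_round = micro_batch_size * data_parallel_size
    if global_batch_size % per_round != 0:
        raise ValueError("global batch must be a multiple of micro_batch x data_parallel")
    return global_batch_size // per_round


def tokens_per_step(global_batch_size, seq_length):
    """Tokens consumed by one optimizer step."""
    return global_batch_size * seq_length


# e.g. micro-batch 4, global batch 512, 2 data-parallel replicas, 1024-token sequences
print(num_micro_batches(512, 4, 2))  # 64 accumulation steps per update
print(tokens_per_step(512, 1024))    # 524288 tokens per update
```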

Mixed-precision training (FP16 or BF16) is enabled with the --fp16 or --bf16 flags rather than being on by default; it improves speed and memory efficiency, usually with little or no loss in model accuracy. Loss values, learning rate, iteration times, and throughput are logged during training. Set a checkpoint interval (--save-interval) so you can resume training if it halts due to hardware or network issues.
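FP16 training usually relies on dynamic loss scaling so that small gradients don’t underflow to zero. The loss is multiplied by a scale factor before the backward pass; if any gradient overflows, the step is skipped and the scale is reduced, and after a run of clean steps it grows again. The class below is a generic sketch of that idea operating on plain floats, not Megatron-LM’s scaler, which adds details such as hysteresis. BF16 has a wider exponent range and typically doesn’t need it.

```python
import math


class DynamicLossScaler:
    """Minimal dynamic loss scaler for FP16-style training (generic sketch).

    In real training the scaled gradients are divided by `scale` before the
    optimizer step; here we only decide whether to step and adjust the scale.
    """

    def __init__(self, init_scale=2.0**16, factor=2.0, growth_interval=1000, min_scale=1.0):
        self.scale = init_scale
        self.factor = factor
        self.growth_interval = growth_interval
        self.min_scale = min_scale
        self._clean_steps = 0

    def update(self, grads):
        """Inspect scaled gradients; return True if the optimizer step should run."""
        overflow = any(math.isinf(g) or math.isnan(g) for g in grads)
        if overflow:
            # Shrink the scale and skip this step entirely.
            self.scale = max(self.scale / self.factor, self.min_scale)
            self._clean_steps = 0
            return False
        self._clean_steps += 1
        if self._clean_steps % self.growth_interval == 0:
            self.scale *= self.factor  # grow after a run of overflow-free steps
        return True


scaler = DynamicLossScaler(init_scale=1024.0, growth_interval=2)
print(scaler.update([0.5, float("inf")]), scaler.scale)  # False 512.0
print(scaler.update([0.5, 0.25]), scaler.scale)          # True 512.0
print(scaler.update([0.5, 0.25]), scaler.scale)          # True 1024.0
```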

Fine-tuning is handled similarly. You load a pre-trained checkpoint (for example with --load together with --finetune, which loads the weights but starts the iteration count and optimizer state fresh) and train it further on a smaller, task-specific dataset. This is useful for adapting a general model to medical, legal, or technical writing, as well as for building conversational agents. Fine-tuning typically uses lower learning rates and fewer steps than pretraining.
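Lower learning rates for fine-tuning usually go hand in hand with a shorter schedule. Megatron-LM supports warmup followed by decay (for example --lr-warmup-iters with --lr-decay-style cosine); the function below is a generic sketch of linear warmup plus cosine decay to a minimum rate, not Megatron-LM’s scheduler code, and the example values are illustrative.

```python
import math


def lr_at(step, max_lr, min_lr, warmup_steps, decay_steps):
    """Linear warmup from 0 to max_lr, then cosine decay to min_lr at decay_steps."""
    if warmup_steps > 0 and step < warmup_steps:
        return max_lr * step / warmup_steps
    if step >= decay_steps:
        return min_lr
    progress = (step - warmup_steps) / max(1, decay_steps - warmup_steps)
    return min_lr + 0.5 * (max_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


# Hypothetical settings: fine-tuning uses a far smaller peak rate and fewer steps.
pretrain = dict(max_lr=3e-4, min_lr=3e-5, warmup_steps=2000, decay_steps=300000)
finetune = dict(max_lr=1e-5, min_lr=1e-6, warmup_steps=100, decay_steps=3000)
for step in (0, 100, 1500, 3000):
    print(step, f"pretrain {lr_at(step, **pretrain):.2e}", f"finetune {lr_at(step, **finetune):.2e}")
```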

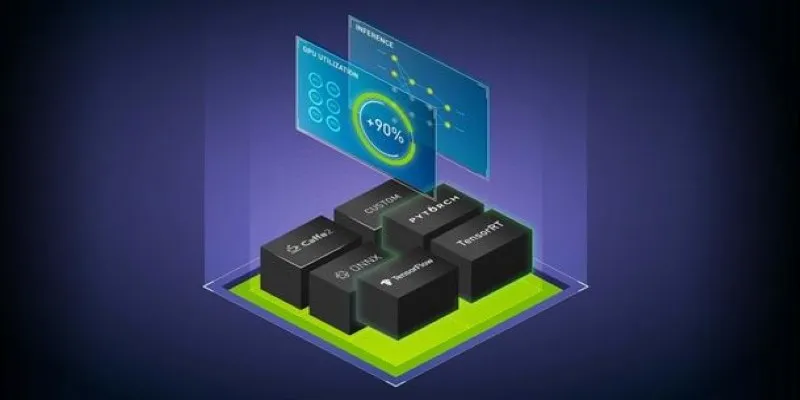

Training can take anywhere from hours to weeks, depending on model size, hardware, and data volume. Keeping GPU utilization high and choosing the right parallelism strategy improves efficiency and shortens training time. With proper configuration, Megatron-LM scales well from a few GPUs to hundreds.
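A back-of-the-envelope estimate helps turn these ranges into numbers. A common approximation puts training compute at about 6 × N × D floating-point operations for N parameters and D training tokens; dividing by the cluster’s achieved throughput gives wall-clock time. The hardware figures and 40% utilization below are illustrative assumptions, not measurements.

```python
def training_hours(num_params, num_tokens, num_gpus, peak_flops_per_gpu, utilization):
    """Estimate wall-clock training time with the 6*N*D FLOPs approximation.

    6*N*D approximates forward + backward matmul FLOPs; it ignores attention-score
    FLOPs and extra work from activation recomputation. `utilization` is the
    fraction of peak throughput actually achieved.
    """
    total_flops = 6 * num_params * num_tokens
    achieved_flops_per_second = num_gpus * peak_flops_per_gpu * utilization
    return total_flops / achieved_flops_per_second / 3600


# Illustrative: ~350M parameters, 10B tokens, 8 GPUs with a nominal 312 TFLOP/s
# half-precision peak (A100-class), at an assumed 40% utilization.
hours = training_hours(350e6, 10e9, num_gpus=8, peak_flops_per_gpu=312e12, utilization=0.40)
print(f"{hours:.1f} hours")
```

With these inputs the estimate is a little under six hours; ten times as many tokens, or a model ten times larger, pushes it to about two and a half days.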

Monitoring, Evaluation, and Scaling Up

Monitoring training involves more than watching the loss. Megatron-LM can write TensorBoard logs (via --tensorboard-dir) so you can plot training loss, validation loss, and learning rate over time. These plots help identify problems such as loss spikes, divergence, overfitting, or a poorly tuned learning-rate schedule.
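Once loss values are exported from your logs or TensorBoard, simple checks can flag some of these issues automatically. The sketch below, with hypothetical names and arbitrary thresholds, flags loss spikes relative to a trailing mean and a possible overfitting pattern in which validation loss keeps rising while training loss falls. It assumes the training-loss list is sampled at the same points as the validation evaluations.

```python
def find_loss_spikes(losses, window=50, threshold=1.5):
    """Indices where the loss exceeds `threshold` x the mean of the previous `window` values."""
    spikes = []
    for i in range(window, len(losses)):
        recent_mean = sum(losses[i - window:i]) / window
        if losses[i] > threshold * recent_mean:
            spikes.append(i)
    return spikes


def looks_overfit(train_losses, val_losses, patience=3):
    """True if validation loss rose for `patience` consecutive evaluations
    while training loss decreased over the same span."""
    if len(val_losses) <= patience or len(train_losses) <= patience:
        return False
    val_rising = all(val_losses[-k] > val_losses[-k - 1] for k in range(1, patience + 1))
    train_falling = train_losses[-1] < train_losses[-patience - 1]
    return val_rising and train_falling
```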

Validation uses held-out data or task-specific benchmarks. You can also load intermediate checkpoints into Megatron-LM’s text generation tools to sample outputs, which gives a quick look at the model’s fluency and coherence. Perplexity on clean held-out data provides a numerical measure of language model quality (lower is better).
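Perplexity is the exponential of the average per-token cross-entropy (negative log-likelihood, in nats), so it follows directly from the validation loss. The sketch below shows the per-token form and how to combine per-batch average losses correctly, weighting by token count before exponentiating. The function names are hypothetical.

```python
import math


def perplexity_from_token_nll(token_nlls):
    """Perplexity = exp(mean negative log-likelihood per token), natural logs."""
    if not token_nlls:
        raise ValueError("need at least one token")
    return math.exp(sum(token_nlls) / len(token_nlls))


def perplexity_from_batches(batch_losses, batch_token_counts):
    """Combine per-batch mean losses weighted by token count, then exponentiate.

    Averaging losses (not per-batch perplexities) keeps the result equal to
    exp of the true per-token mean over the whole evaluation set.
    """
    total_nll = sum(loss * n for loss, n in zip(batch_losses, batch_token_counts))
    return math.exp(total_nll / sum(batch_token_counts))


print(round(perplexity_from_token_nll([2.0, 2.0, 2.0]), 3))  # 7.389 (e**2)
```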

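Scaling up training introduces more complexity. For very large models (billions of parameters), balancing memory against compute becomes critical. Activation checkpointing saves memory by discarding intermediate activations during the forward pass and recomputing them during the backward pass, at the cost of extra computation. Gradient accumulation simulates large batch sizes by summing gradients over several smaller micro-batches before each optimizer step, so activation memory depends on the micro-batch size rather than the full batch. Both features are built into Megatron-LM and configurable via flags.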
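Why gradient accumulation matches a larger batch can be checked with a toy example. For a loss that averages over samples, averaging the gradients of equal-size micro-batches gives exactly the full-batch gradient, while only one micro-batch has to be processed at a time. The one-parameter model below is a plain-Python illustration of that identity, not Megatron-LM code.

```python
def mse_grad(w, batch):
    """Gradient of mean squared error for the 1-D model y_hat = w * x over a batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w, batch, micro_batch_size):
    """Average per-micro-batch gradients, as gradient accumulation does."""
    if len(batch) % micro_batch_size != 0:
        raise ValueError("batch must split into equal-size micro-batches")
    micro_batches = [batch[i:i + micro_batch_size]
                     for i in range(0, len(batch), micro_batch_size)]
    # Each micro-batch is handled independently (only its activations would be
    # alive at once); gradients are combined before the single optimizer update.
    return sum(mse_grad(w, mb) for mb in micro_batches) / len(micro_batches)


data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]
full = mse_grad(0.5, data)
accum = accumulated_grad(0.5, data, micro_batch_size=2)
print(full, accum)  # equal up to floating-point rounding
```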

After training, save model checkpoints for later use. You can load them to continue training, fine-tune them on a different dataset, or export the model for deployment. Megatron-LM checkpoints include model state, optimizer state, and learning rate scheduler progress.
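Resuming normally needs no scripting: pointing --load at the directory used for --save makes Megatron-LM pick up the latest checkpoint itself. For your own tooling, such as copying the newest checkpoint for evaluation, the helper below locates it. It assumes the layout used by Megatron-LM’s checkpointing code, a tracker file named latest_checkpointed_iteration.txt plus directories named iter_ followed by a 7-digit iteration number (or release); verify this against your version, since checkpoint formats have evolved.

```python
import os


def latest_checkpoint_dir(save_dir):
    """Return the path of the newest checkpoint directory, or None if none exists.

    Assumed layout: save_dir/latest_checkpointed_iteration.txt holds the last
    saved iteration (or "release"), and checkpoints live in iter_XXXXXXX dirs.
    """
    tracker = os.path.join(save_dir, "latest_checkpointed_iteration.txt")
    if not os.path.isfile(tracker):
        return None  # nothing has been saved yet
    with open(tracker, encoding="utf-8") as f:
        content = f.read().strip()
    if content == "release":
        return os.path.join(save_dir, "release")
    return os.path.join(save_dir, f"iter_{int(content):07d}")
```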

Deployment is outside Megatron-LM’s core scope, but trained models can be used with other inference stacks, for example by exporting them to the ONNX format or serving them through NVIDIA Triton Inference Server, usually after converting the checkpoint. The quality of a trained model’s output depends on both the data and the training configuration. Testing it across a variety of prompts helps reveal weaknesses that further fine-tuning can address.

Conclusion

Training a language model with Megatron-LM involves setting up the environment, preparing data, configuring the model, and using efficient parallelism. It supports large-scale training with mixed precision and distributed computing, making it suitable for building high-performing transformer models. While it’s built for heavy-duty tasks, it’s flexible enough for various use cases. For those looking to train models that produce strong language output, Megatron-LM offers a dependable starting point.

zfn9
