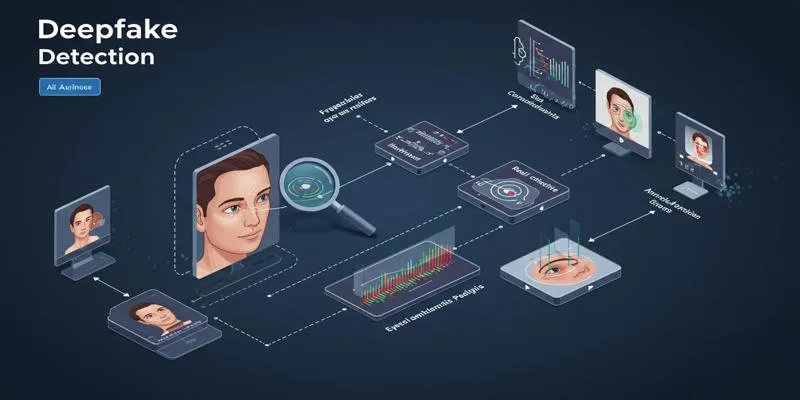

Rapid advances in deepfake technology have produced highly deceptive synthetic media that can fool people and circumvent conventional security systems. Because deepfakes yield convincing fake video and audio, they threaten digital security and undermine trust. Three main approaches help detect deepfakes and guard against their misuse: spectral artifact analysis, liveness detection, and behavioral analysis.

Introduction

Deepfakes are synthetic media created with advanced generative models such as Generative Adversarial Networks (GANs) and diffusion models. The technology has legitimate uses in entertainment and education, but its misuse can have serious consequences. For example:

- Criminals use deepfake technology to fake individuals' voices and videos for financial fraud.

- Fake political speeches and news reports are distributed online to spread disinformation.

- Personal images or videos are misused without consent for harmful purposes, invading people's privacy.

Because deepfakes are so sophisticated, basic detection methods struggle to identify them. Researchers have therefore developed detection systems that look for generation artifacts, verify that a live person is present, and assess individual behavior patterns.

1. Spectral Artifact Analysis: Detecting Invisible Patterns

Spectral artifact analysis aims to detect the characteristic defects that generative models introduce when producing synthetic media. It uses frequency-domain analysis and machine learning techniques to find these artifacts, which are invisible to the human eye.

How It Works

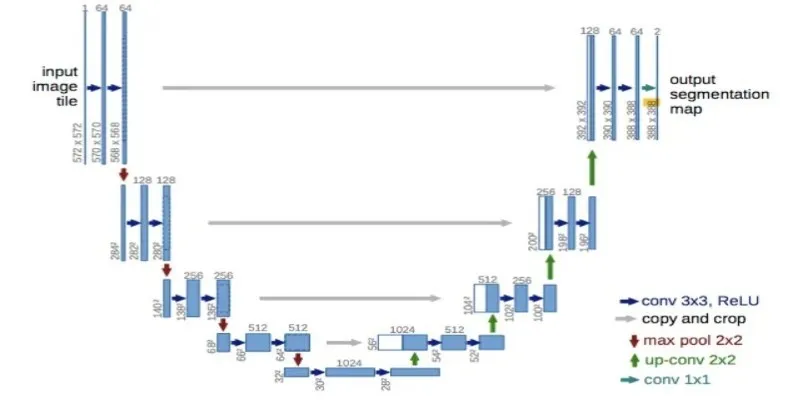

Spectral artifact analysis uses the Discrete Cosine Transform (DCT) or the Fourier transform to examine the frequency spectrum of images or video frames. Content synthesized by GANs often shows detectable grid structures and repeating patterns in the frequency domain, a side effect of the upsampling operations these models use during synthesis.
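As a rough illustration, the sketch below computes a 2D FFT with NumPy and measures how far the strongest high-frequency component rises above the typical high-frequency level. The function name `periodic_peak_score`, the `low_freq_radius` cutoff, and the synthetic test image are illustrative assumptions; practical detectors usually feed FFT or DCT spectra into a trained classifier rather than relying on a single hand-made score.

```python
import numpy as np


def periodic_peak_score(gray: np.ndarray, low_freq_radius: float = 8.0) -> float:
    """Height of the strongest high-frequency spike above the median high-frequency level.

    gray: 2D grayscale image. Larger scores suggest periodic (grid-like) structure,
    such as traces left by upsampling layers. Hypothetical helper, not a calibrated detector.
    """
    # Remove the mean so the DC term does not dominate, then centre the spectrum.
    spectrum = np.fft.fftshift(np.fft.fft2(gray - gray.mean()))
    log_power = np.log1p(np.abs(spectrum) ** 2)

    # Distance of every frequency bin from the centre (zero frequency).
    h, w = log_power.shape
    yy, xx = np.indices(log_power.shape)
    radius = np.hypot(yy - h // 2, xx - w // 2)

    # Ignore low frequencies, where ordinary image content carries most energy.
    high = log_power[radius > low_freq_radius]
    return float(high.max() - np.median(high))


rng = np.random.default_rng(0)
clean = rng.normal(size=(64, 64))
# Simulate a 2-pixel checkerboard pattern, a classic trace of naive upsampling.
yy, xx = np.indices(clean.shape)
gridded = clean + 0.5 * (-1.0) ** (yy + xx)
print(f"clean: {periodic_peak_score(clean):.2f}  gridded: {periodic_peak_score(gridded):.2f}")
```

The checkerboard concentrates its energy in a single Nyquist-frequency bin, so the gridded image scores several units higher than the clean noise image, even though the added pattern is faint in the pixel domain.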

Key Capabilities

- Detects pixel-level inconsistencies introduced by GAN-based models.

- Detects audio-visual desynchronization, where facial movements in a deepfake video do not match the recorded audio.

- Monitors for semantic attacks, in which attackers alter expressions or object textures using foundation models such as CLIP or CLIP-guided editing tools such as StyleCLIP.
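To make the audio-visual desynchronization check concrete, here is a minimal sketch that cross-correlates two per-frame signals: a mouth-opening measurement (assumed to come from a hypothetical facial-landmark tracker) and audio energy resampled to the video frame rate. It returns the frame offset with the highest correlation; a large offset or a weak peak would be a reason for closer inspection. The function name and the `max_lag` default are assumptions, and production systems typically rely on learned audio-visual embeddings rather than raw cross-correlation.

```python
import numpy as np


def estimate_av_lag(mouth_opening: np.ndarray, audio_energy: np.ndarray,
                    max_lag: int = 10) -> tuple[int, float]:
    """Find the frame offset that best aligns lip motion with audio energy.

    Both inputs are equal-length, per-video-frame signals. A positive lag means
    the audio trails the video by that many frames.
    """
    # Standardize so the score behaves like a correlation coefficient.
    a = (mouth_opening - mouth_opening.mean()) / (mouth_opening.std() + 1e-9)
    b = (audio_energy - audio_energy.mean()) / (audio_energy.std() + 1e-9)
    best_lag, best_corr = 0, -np.inf
    for lag in range(-max_lag, max_lag + 1):
        # Pair a[t] with b[t + lag] over the overlapping frames only.
        if lag >= 0:
            x, y = a[: len(a) - lag], b[lag:]
        else:
            x, y = a[-lag:], b[: len(b) + lag]
        corr = float(np.mean(x * y))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return best_lag, best_corr
```

For example, if `audio_energy` is `np.roll(mouth_opening, 4)`, the function reports a lag of 4 frames with a correlation close to 1; genuine footage would be expected to peak near a lag of 0.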

Example Application

Spectral analysis identified a deepfake video in which an impersonated CEO approved fraudulent transactions. The analysis detected grid-pattern distortions in the lighting, a typical artifact of GAN-generated imagery.

Strengths

- Detects evasive, high-quality deepfakes that people cannot identify by eye.

- Works across multiple modalities (e.g., images, audio, video).

Limitations

- Computationally intensive for high-resolution content.

- Vulnerable to adversarial training methods that produce frequency distributions resembling those of natural data.

2. Liveness Detection: Verifying Real-Time Presence

In authentication, liveness detection distinguishes real people from synthetic impersonations such as deepfake videos or 3D masks. It focuses on physiological and behavioral indicators that generative models find difficult to reproduce.

How It Works

Liveness detection employs active and passive verification methods:

- Active Liveness Detection: The system asks users to perform specific actions, such as blinking, turning their heads, or making other subtle movements that demonstrate their presence.

- Passive Liveness Detection: This technique analyzes static features like skin texture, depth perception, or blood flow patterns without requiring user interaction.
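The active check can be sketched with the eye aspect ratio (EAR), a common landmark-based blink measure: EAR = (‖p2 − p6‖ + ‖p3 − p5‖) / (2‖p1 − p4‖), which drops sharply when the eye closes. The sketch assumes six (x, y) eye landmarks per frame from a hypothetical face-landmark detector; the function names, `threshold`, and `min_frames` values are illustrative, not tuned.

```python
import numpy as np


def eye_aspect_ratio(eye: np.ndarray) -> float:
    """EAR for six (x, y) eye landmarks ordered p1..p6 around the eye."""
    vertical = np.linalg.norm(eye[1] - eye[5]) + np.linalg.norm(eye[2] - eye[4])
    horizontal = np.linalg.norm(eye[0] - eye[3])
    return float(vertical / (2.0 * horizontal))


def count_blinks(ear_series, threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress when the clip ends
        blinks += 1
    return blinks


def passes_blink_challenge(ear_series, requested_blinks: int) -> bool:
    # The requested count should be drawn at random per session, so that
    # pre-recorded footage cannot anticipate it.
    return count_blinks(ear_series) == requested_blinks
```

Randomizing the challenge is the key design choice: a fixed instruction ("blink once") could be satisfied by a replayed clip, while a random count or sequence of actions is harder to match without responding in real time.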

Key Capabilities

- Uses remote photoplethysmography (rPPG) to detect the subtle, heartbeat-induced changes in skin color.

- Checks for anomalies in depth maps captured by 3D cameras.
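The rPPG capability can be illustrated with a basic frequency-domain estimate: the average green-channel value of a skin region is tracked over time, and the strongest periodic component in a plausible heart-rate band is taken as the pulse. This is a simplified sketch under stated assumptions: skin-region extraction happens elsewhere, the 0.7 to 4.0 Hz band and the `peak_ratio` score are illustrative choices, and more robust rPPG methods combine several color channels to suppress motion and lighting changes.

```python
import numpy as np


def estimate_pulse(green_means: np.ndarray, fps: float,
                   low_hz: float = 0.7, high_hz: float = 4.0) -> tuple[float, float]:
    """Estimate heart rate from per-frame mean green values of a skin region.

    Returns (bpm, peak_ratio): the dominant in-band frequency in beats per minute,
    and its power relative to the average in-band power (a crude pulse-strength score).
    """
    signal = green_means - green_means.mean()
    signal = signal * np.hanning(len(signal))  # taper the ends to reduce spectral leakage
    power = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fps)
    band = (freqs >= low_hz) & (freqs <= high_hz)  # about 42 to 240 beats per minute
    band_power, band_freqs = power[band], freqs[band]
    peak = int(np.argmax(band_power))
    peak_ratio = float(band_power[peak] / (band_power.mean() + 1e-12))
    return float(band_freqs[peak] * 60.0), peak_ratio


fps = 30.0
t = np.arange(300) / fps  # 10 seconds of video
rng = np.random.default_rng(3)
# Synthetic trace: a 1.2 Hz (72 bpm) pulse plus sensor noise.
green = 120.0 + 0.5 * np.sin(2 * np.pi * 1.2 * t) + rng.normal(scale=0.05, size=t.size)
bpm, strength = estimate_pulse(green, fps)
print(f"estimated pulse: {bpm:.1f} bpm, peak ratio {strength:.1f}")
```

A face without a coherent pulse signal, such as a static mask, would tend to produce a low `peak_ratio`, which is the property a liveness check would threshold on.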

Example Application

A banking app requires users to follow on-screen instructions during facial recognition login. Deepfake videos struggle to reproduce genuine micro-expressions, such as natural pupil dilation and the slight skin color variations caused by blood flow.

Strengths

- Integrates seamlessly with existing biometric systems.

- Counters spoofing attacks that use pre-recorded videos or 3D masks.

Limitations

- Remains vulnerable to sophisticated spoofing techniques, such as hardware with heating elements or dynamic surface textures.

- Works best with specialized hardware, such as infrared cameras.

3. Behavioral Analysis: Profiling Interaction Patterns

Behavioral analysis examines how users interact with a system to detect synthetic behavior that deviates from natural human patterns. The method can distinguish AI-generated bots or avatars from genuine human users.

How It Works

Behavioral systems study variations in typing speed, mouse movement patterns, touchscreen gestures, and navigation paths, using Long Short-Term Memory (LSTM) networks for analysis.
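The sketch below shows how an LSTM consumes such a sequence: each interaction event becomes a small feature vector (for example, inter-key interval, key hold time, and pointer speed, all hypothetical choices here), the recurrent state carries information across events, and a final logistic layer turns the last hidden state into a bot-likelihood score. The weights are random and untrained, so the output is meaningless until the model is fitted to labeled human and automated sessions; the class name `TinyLSTMScorer` is illustrative.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class TinyLSTMScorer:
    """Untrained single-layer LSTM mapping an event sequence to a score in (0, 1)."""

    def __init__(self, n_features: int, n_hidden: int = 16, seed: int = 0):
        rng = np.random.default_rng(seed)
        # One stacked weight matrix for the input, forget, candidate, and output gates.
        self.W = rng.normal(0.0, 0.1, size=(4 * n_hidden, n_features + n_hidden))
        self.b = np.zeros(4 * n_hidden)
        self.w_out = rng.normal(0.0, 0.1, size=n_hidden)
        self.n_hidden = n_hidden

    def score(self, events: np.ndarray) -> float:
        """events: array of shape (time_steps, n_features)."""
        h = np.zeros(self.n_hidden)
        c = np.zeros(self.n_hidden)
        for x in events:
            z = self.W @ np.concatenate([x, h]) + self.b
            i, f, g, o = np.split(z, 4)
            i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
            g = np.tanh(g)
            c = f * c + i * g   # update the long-term cell state
            h = o * np.tanh(c)  # expose part of it as the hidden state
        return float(sigmoid(self.w_out @ h))
```

In practice a framework such as PyTorch or TensorFlow would supply the LSTM layer and the training loop; this NumPy version only makes the gate arithmetic visible.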

Key Capabilities

- Monitors typing speed and rhythm for irregularities indicative of AI automation.

- Tracks mouse acceleration curves and click force consistency.

- Evaluates session irregularities, such as logins at unexpected times or tasks completed unusually quickly.
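The typing-rhythm check can be sketched with two standard keystroke-dynamics measurements: dwell time (how long a key is held) and flight time (the gap between releasing one key and pressing the next). Scripted input often has unnaturally regular press-to-press intervals, which shows up as a low coefficient of variation. The event format, function names, and thresholds below are hypothetical; real deployments would compare against each user's own baseline.

```python
import numpy as np


def keystroke_features(events):
    """events: list of (key, press_time_s, release_time_s) tuples in typing order."""
    press = np.array([e[1] for e in events], dtype=float)
    release = np.array([e[2] for e in events], dtype=float)
    dwell = release - press            # how long each key is held down
    flight = press[1:] - release[:-1]  # release of one key to press of the next
    intervals = np.diff(press)         # press-to-press rhythm
    cv = intervals.std() / intervals.mean() if intervals.size and intervals.mean() > 0 else 0.0
    return {
        "mean_dwell": float(dwell.mean()),
        "mean_flight": float(flight.mean()) if flight.size else 0.0,
        "interval_cv": float(cv),
        "keys_per_second": float(len(events) / (release[-1] - press[0])),
    }


def looks_scripted(features, min_cv=0.1, max_keys_per_second=15.0):
    # Illustrative thresholds only: very regular or very fast typing is flagged.
    return features["interval_cv"] < min_cv or features["keys_per_second"] > max_keys_per_second
```

A bot that presses a key exactly every 100 ms yields a coefficient of variation near zero and is flagged, while human typing with irregular gaps is not.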

Example Application

A Fortune 500 company detected account takeovers during remote workforce logins using behavioral biometrics, which flagged robotic mouse movements that lacked normal hand tremor patterns.
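One way to quantify the missing tremor in that example is to compare a sampled cursor path with a smoothed copy of itself: human movement leaves small, fast deviations, while an idealized scripted path (for example, a perfectly straight constant-speed line) leaves almost none. The sketch assumes cursor positions sampled at a fixed rate, and the `tremor_energy` name and moving-average `window` are illustrative choices; because bots can add synthetic jitter, this would be one signal among many.

```python
import numpy as np


def tremor_energy(xs, ys, window: int = 9) -> float:
    """RMS deviation of a cursor path from its moving-average-smoothed version.

    Assumes positions sampled at a fixed rate; `window` should be odd.
    """
    path = np.column_stack([xs, ys]).astype(float)
    kernel = np.ones(window) / window
    smooth = np.column_stack(
        [np.convolve(path[:, k], kernel, mode="valid") for k in range(2)]
    )
    # 'valid' output is shorter; align each smoothed point with its window centre.
    half = window // 2
    residual = path[half: half + len(smooth)] - smooth
    return float(np.sqrt(np.mean(np.sum(residual ** 2, axis=1))))
```

A straight constant-speed path produces an energy of essentially zero, because a centred moving average reproduces a linear sequence exactly; adding half a pixel of random jitter per sample raises it well above that.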

Strengths

- Adaptable across diverse workflows (e.g., e-commerce platforms, financial transactions).

- Effective against real-time bot-driven attacks on digital systems.

Limitations

- Continuous monitoring of individuals raises privacy concerns and complicates GDPR compliance.

- Bots trained on human behavioral data can replicate natural patterns effectively.

Challenges in Deepfake Detection Technologies

These detection techniques face significant challenges:

- Generative pipelines that combine diffusion models with adversarial training produce continually evolving deepfakes that bypass standard verification systems.

- Deploying next-generation detection tools on existing systems requires organizations to revise their API infrastructure and upgrade their hardware.

- Deepfake-enabled social engineering exploits weaknesses in human psychology rather than weaknesses in technical systems.

Future Directions in Deepfake Detection

Researchers are exploring several detection approaches to counter emerging threats:

- Multimodal Fusion: Combining spectral artifact analysis with liveness verification and behavioral profiling for comprehensive detection systems.

- Foundation Model Integration: Leveraging pre-trained vision models like CLIP for semantic-level forgery identification across diverse datasets.
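Multimodal fusion can be as simple as late fusion: each detector reports its own probability that the input is fake, and the probabilities are combined in log-odds space. The detector names and weights below are hypothetical placeholders; in practice the weights and bias would be learned, for example by fitting a logistic regression on held-out detector outputs.

```python
import math


def fuse_scores(scores: dict[str, float], weights: dict[str, float], bias: float = 0.0) -> float:
    """Late fusion: weighted sum of per-detector log-odds, mapped back to a probability.

    scores:  each detector's probability that the input is fake, in (0, 1).
    weights: per-detector trust; detectors without a weight are ignored.
    """
    eps = 1e-6
    logit = bias
    for name, p in scores.items():
        p = min(max(p, eps), 1 - eps)  # clip to avoid infinite log-odds
        logit += weights.get(name, 0.0) * math.log(p / (1 - p))
    return 1.0 / (1.0 + math.exp(-logit))


# Hypothetical weights, for illustration only.
weights = {"spectral": 1.0, "liveness": 1.5, "behavioral": 0.8}
print(fuse_scores({"spectral": 0.9, "liveness": 0.8, "behavioral": 0.6}, weights))
```

Summing log-odds treats the detectors as roughly independent evidence, so several moderately suspicious signals can add up to a confident verdict, and a neutral score of 0.5 contributes nothing.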

Technology developers should also consider blockchain timestamping, which creates unalterable records on distributed ledger platforms to verify media origins.
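The tamper-evidence idea behind blockchain timestamping can be sketched as a hash chain: each record stores a SHA-256 fingerprint of the media plus the hash of the previous record, so altering any earlier entry breaks every later link. This is a single-process illustration, not a distributed ledger (there is no consensus or replication), and the class name, record fields, and source labels are hypothetical.

```python
import hashlib
import json
import time
from typing import Optional


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


class ProvenanceLedger:
    """Append-only hash chain of media fingerprints (a local stand-in for a ledger)."""

    GENESIS = "0" * 64

    def __init__(self):
        self.blocks = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON so the same record always produces the same hash.
        return sha256_hex(json.dumps(body, sort_keys=True).encode())

    def append(self, media_bytes: bytes, source: str, timestamp: Optional[float] = None) -> dict:
        body = {
            "media_sha256": sha256_hex(media_bytes),
            "source": source,
            "timestamp": time.time() if timestamp is None else timestamp,
            "prev_hash": self.blocks[-1]["hash"] if self.blocks else self.GENESIS,
        }
        block = dict(body, hash=self._digest(body))
        self.blocks.append(block)
        return block

    def verify_chain(self) -> bool:
        prev = self.GENESIS
        for block in self.blocks:
            body = {k: v for k, v in block.items() if k != "hash"}
            if block["prev_hash"] != prev or self._digest(body) != block["hash"]:
                return False
            prev = block["hash"]
        return True

    def is_registered(self, media_bytes: bytes) -> bool:
        digest = sha256_hex(media_bytes)
        return any(b["media_sha256"] == digest for b in self.blocks)
```

An exact cryptographic hash changes with any re-encoding or edit, which makes it good at proving that a file is the registered original but unable to recognize a legitimately recompressed copy; provenance systems therefore usually pair it with signed metadata.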

Conclusion

Countering the misuse of synthetic media requires three critical detection tools: spectral artifact analysis, liveness verification, and behavioral profiling. Applying these techniques together will help reduce financial, healthcare, and public safety risks, although no combination makes systems immune to every deception method. As generative AI advances, organizations need to invest in adaptable detection methods, implemented under strict regulatory rules, to protect digital trust.
