In Retrieval-Augmented Generation (RAG) systems, the quality of the final answer depends heavily on how information is retrieved. A critical part of this process is chunking—the way documents are broken down into smaller, searchable pieces. Choosing the right chunking strategy can significantly enhance the system’s ability to retrieve relevant data and deliver more accurate answers.

This post explores 8 distinct chunking strategies used in RAG systems. Each method serves a different purpose depending on the data structure, the nature of the content, and the specific use case. For developers and researchers working on knowledge retrieval or generative AI applications, understanding these methods is key to building smarter solutions.

Why Chunking is a Crucial Step in RAG Pipelines

Chunking is the bridge between large knowledge bases and language models. Since most RAG systems don’t process entire documents at once, they rely on retrieving the right “chunk” that contains the answer. A poorly chunked document might result in the model missing important context or failing to deliver helpful responses.

Key reasons chunking matters:

- It determines how well relevant data is retrieved.

- It affects the semantic clarity and completeness of responses.

- It helps manage token limits by controlling input size.

By chunking intelligently, teams can improve retrieval efficiency, reduce hallucinations, and boost the overall performance of their AI applications.

1. Fixed-Length Chunking

Fixed-length chunking is the simplest approach. It divides a document into equal-sized blocks based on word count, character length, or token limits.

How it works:

- The system splits the text into fixed parts, such as every 300 words.

- No attention is given to the meaning or natural structure of the text.

This method is often used for early-stage testing or uniform datasets.

Benefits:

- Easy to implement across any text dataset.

- Ensures uniformity in input size.

Limitations:

- May cut off sentences or ideas mid-way.

- Reduces coherence and context in some cases.

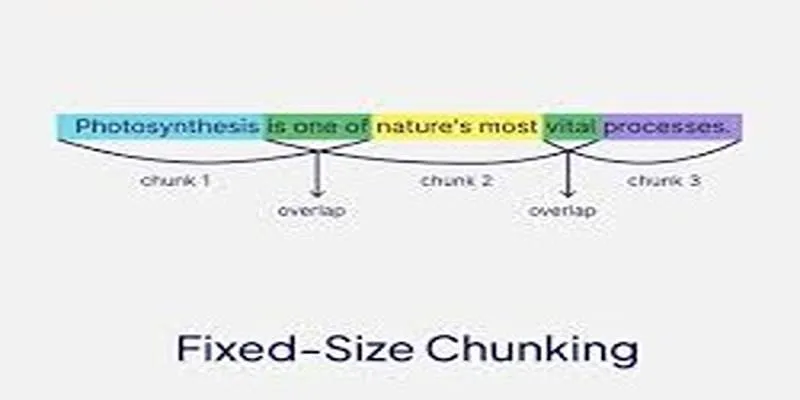

2. Overlapping Chunking

Overlapping chunking adds context retention to fixed-length approaches by allowing parts of adjacent chunks to overlap.

How it works:

- A windowing technique slides through the document.

- Each chunk starts slightly before the previous one ends.

For example:

- Chunk 1: Words 1–300

- Chunk 2: Words 250–550

It ensures that important transitional sentences aren’t lost at the boundaries.

Benefits:

- Maintains more contextual information between chunks.

- Reduces the risk of cutting off key ideas.

3. Sentence-Based Chunking

Sentence-based chunking respects sentence boundaries to ensure each chunk remains readable and semantically complete. One major advantage is that it keeps meaningful ideas intact, making it easier for RAG models to extract the correct information.

How it works:

- Sentences are grouped until a certain length is reached, e.g., 250–300 tokens.

- Chunks end only at sentence breaks.

Benefits:

- Preserves grammar and flow of information.

- Works well with documents containing narrative or conversational data.

Limitations:

- It may result in uneven chunk sizes.

- Requires sentence parsing tools like spaCy or NLTK.

4. Semantic Chunking

Semantic chunking uses the meaning of the content to form chunks, grouping related ideas or topics. It is especially helpful for dense or academic documents. A semantic approach relies on Natural Language Processing (NLP) tools like text embeddings, similarity models, or topic segmentation.

How it works:

- The system detects topic shifts.

- Each chunk contains text with a unified meaning or subject.

Benefits:

- High relevance during retrieval.

- Ideal for documents with rich or layered information.

Drawbacks:

- More complex to implement.

- Requires additional compute resources.

5. Paragraph-Based Chunking

Many documents are naturally structured into paragraphs. This method keeps those boundaries intact, treating each paragraph or a group of paragraphs as a chunk. It is most useful when working with documents like blogs, manuals, or reports that already have logical breaks.

How it works:

- The system uses newline characters or tags to detect paragraphs.

- Each paragraph becomes a chunk or is merged with adjacent ones to meet length requirements.

Benefits:

- Matches human writing styles.

- Simplifies retrieval and post-processing.

Limitations:

- Paragraph length can vary significantly.

- It is not always suitable for token-limited applications.

6. Title-Based Chunking

Title-based chunking uses document structure such as headings and subheadings (e.g., H1, H2, H3) to guide the chunking process. This method is especially effective for long-form content and technical manuals. This technique ensures that each chunk is focused on a single topic or subtopic.

How it works:

- The system scans for headers and groups the following text as a chunk.

- Subsections can be further broken down as needed.

Benefits:

- Easy to understand and navigate.

- Highly relevant for documents with nested topics.

7. Recursive Chunking

Recursive chunking is a flexible method that attempts higher-level chunking first and drills down only if the chunk exceeds the size limit. This layered approach mimics human reading behavior and keeps a clean hierarchy.

How it works:

- Starts with the largest logical chunks (titles, sections).

- If they are too large, break them into smaller ones (paragraphs or sentences).

Benefits:

- Adapts chunk size based on structure.

- Helps preserve context while respecting limits.

Ideal use cases:

- Knowledge bases

- Academic papers

- Research reports

8. Rule-based or Custom Chunking

When documents have unique patterns, rule-based chunking becomes useful. Developers define custom rules for chunking based on file types or domain- specific content.

Examples:

- Split transcripts by timestamps.

- Break code documentation by function or class.

- Use HTML tags in web pages for chunking sections.

Benefits:

- Offers high precision.

- Works well for domain-specific systems like legal, medical, or programming data.

Conclusion

Chunking isn’t just a technical detail—it’s a key ingredient that defines the success of any RAG system. Each chunking strategy brings its strengths, and the choice depends largely on the type of data being handled. From fixed- length basics to semantic or rule-based precision, teams can choose or combine methods to fit their specific project goals. Developers should always evaluate the document type, expected query types, and performance requirements before deciding on a chunking method. By understanding and applying the right chunking technique, organizations can significantly improve retrieval performance, reduce response errors, and deliver more accurate, human-like results from their AI systems.

zfn9

zfn9