Data protection is a significant concern as artificial intelligence (AI) technology rapidly evolves. Training AI models has traditionally required collecting vast amounts of data in one place, often including private or confidential information such as personal details or business data. This dependency on data poses substantial privacy risks, especially when information is stored centrally or transmitted between devices and servers.

Federated learning offers a revolutionary solution to these challenges by enabling AI models to be trained without sharing raw data, thereby safeguarding privacy and security. This article explores how federated learning works, its benefits, and its transformative impact on AI development in an increasingly privacy-conscious world.

What is Federated Learning?

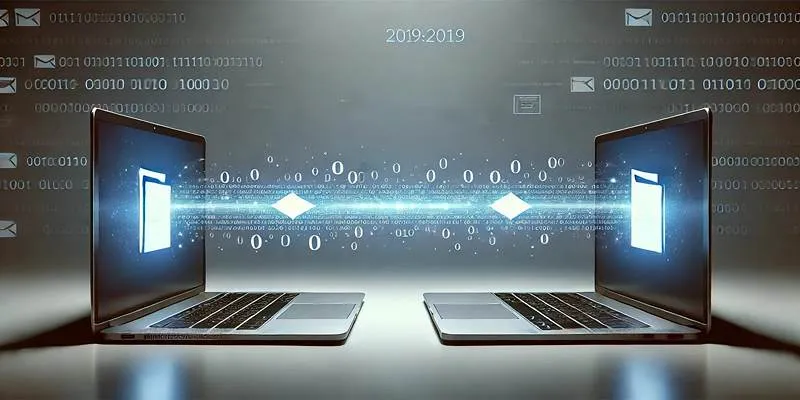

Federated learning allows AI models to be trained directly on decentralized devices, such as smartphones, computers, or Internet of Things (IoT) devices, rather than on a central server. Private information stays on the device where it was generated, and only model updates are sent to a central server for aggregation.

In simple terms, federated learning keeps data private and shares only what the model has learned from it. For example, when an AI model is trained on smartphones, user-generated data (such as app usage patterns or location data) stays on each phone. Each device updates its copy of the model locally, and only those updates are sent to a central server, so personal data is never uploaded.

How Does Federated Learning Work?

In traditional machine learning, raw data from many users or devices is sent to a central server for processing and model training. Federated learning reverses this arrangement: the training goes to the data instead of the data going to the training. Here is how it works, step by step:

- Local Model Training: The AI model is first initialized and distributed to a network of decentralized devices. Each device (smartphone, computer, or IoT device) uses its local data to train the model.

- Model Updates, Not Data: Instead of sending raw data back to the central server, each device sends updates (such as model parameters or weights) that reflect what it has learned from its local data.

- Aggregation on the Server: The central server aggregates updates from all devices, combining them into one global model. This aggregated model is then shared back to the devices for further training, continuing the cycle.

- No Data Movement: The key innovation is that raw data never leaves its original location. Only model updates are shared, so sensitive data stays on the device.
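
One common way to implement this cycle is federated averaging (FedAvg), in which the server takes a weighted average of the clients' model weights. The Python sketch below is a toy illustration under stated assumptions, not a production implementation: the one-variable linear model, the learning rate, the made-up client datasets, and the helper names `local_train`, `federated_averaging`, and `run_rounds` are all hypothetical.

```python
# A toy FedAvg-style loop. The model is a 1-D linear regression
# y ≈ w*x + b, stored as [w, b]; all data below is made up.

def local_train(weights, data, lr=0.05, epochs=5):
    """Device side: gradient descent on this device's private data only."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_averaging(client_results):
    """Server side: average client weights, weighted by each client's sample count."""
    total = sum(n for _, n in client_results)
    dim = len(client_results[0][0])
    return [sum(weights[i] * n for weights, n in client_results) / total
            for i in range(dim)]


def run_rounds(clients, rounds=300):
    global_weights = [0.0, 0.0]  # Step 1: initialize the global model
    for _ in range(rounds):
        results = []
        for data in clients:
            # Step 2: each device trains locally; only its weights and
            # sample count are returned, never the (x, y) pairs themselves
            results.append((local_train(global_weights, data), len(data)))
        # Step 3: aggregate, then send the new global model out next round
        global_weights = federated_averaging(results)
    return global_weights


# Three hypothetical devices whose data cover different x ranges,
# all generated from y = 3x + 1
clients = [[(x, 3 * x + 1) for x in xs]
           for xs in ([0.0, 0.5, 1.0], [1.0, 1.5, 2.0], [2.0, 2.5, 3.0, 3.5])]
w, b = run_rounds(clients)
print(f"w = {w:.3f}, b = {b:.3f}")  # should approach w = 3, b = 1
```

Weighting each client by its sample count keeps a device with a handful of examples from counting as much as one with thousands; a plain unweighted mean is a simpler alternative.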

Advantages of Federated Learning

- Privacy Preservation: Federated learning strengthens privacy because raw data is never shared with or uploaded to central servers. This is crucial in sectors like healthcare, finance, and social media, where the data involved can be highly sensitive.

- Compliance with Regulations: Federated learning helps organizations comply with global data protection regulations like GDPR and HIPAA, reducing the risk of privacy law violations by keeping data local.

- Reduced Bandwidth and Storage Costs: Because large datasets are never transferred, federated learning saves bandwidth and central storage. Only model updates, rather than entire datasets, are sent to the server, which can make training more cost-effective and efficient.

- Personalization Without Data Sharing: Federated learning allows AI models to be personalized for users without requiring data sharing. For example, a smartphone app could learn to predict user preferences without sending data to the cloud, offering a personalized experience without compromising privacy.
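
One common way to realize this kind of personalization is to fine-tune the shared global model on each user's own data and keep the result on the device. The sketch below assumes a toy linear model whose global weights have already been learned through federated training; the weights, the user data, and the function names `fine_tune_locally` and `mse` are hypothetical.

```python
# Hypothetical on-device personalization: start from the shared global
# model and fine-tune it on one user's private data. The model is a toy
# linear regression y ≈ w*x + b; all numbers are made up.

def fine_tune_locally(global_weights, private_data, lr=0.05, steps=200):
    """Run gradient descent on local data only; the result stays on the device."""
    w, b = global_weights
    n = len(private_data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in private_data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in private_data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def mse(weights, data):
    """Mean squared error of a (w, b) model on a list of (x, y) pairs."""
    w, b = weights
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


global_model = (3.0, 1.0)  # assumed result of federated training: y ≈ 3x + 1
user_data = [(x, 2 * x + 4) for x in (0.0, 1.0, 2.0, 3.0)]  # this user differs

personal_model = fine_tune_locally(global_model, user_data)
print(mse(global_model, user_data))    # 3.5
print(mse(personal_model, user_data))  # much smaller
```

Only the shared global model ever travels over the network here; the personalized weights are used on the device and never leave it.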

Use Cases of Federated Learning

- Healthcare: Federated learning is highly beneficial in healthcare, where strict privacy laws protect patient data. Hospitals and research institutions can collaboratively train AI models to predict diseases, analyze medical images, or personalize treatment plans while keeping patient data private and local.

For instance, several hospitals could use federated learning to train an AI model for cancer detection in medical imaging. Instead of sharing patient images, each hospital trains the shared model on its own images and sends only the resulting updates, so the combined model learns from every site's data without compromising patient confidentiality.

- Smartphones and Personal Devices: Federated learning is widely used in smartphones. For example, Google’s Gboard uses federated learning for text prediction. The keyboard learns from typing patterns to predict the next word, but the actual typing data is never shared. Only model updates are exchanged, allowing the system to improve while respecting user privacy.

Challenges of Federated Learning

- Device Diversity: Devices in a federated network vary widely in computational power, memory, and connectivity, which affects training efficiency. Powerful machines such as servers can handle large-scale training, while mobile devices may be slower or have limited resources, so a training round can be held up by its slowest participants.

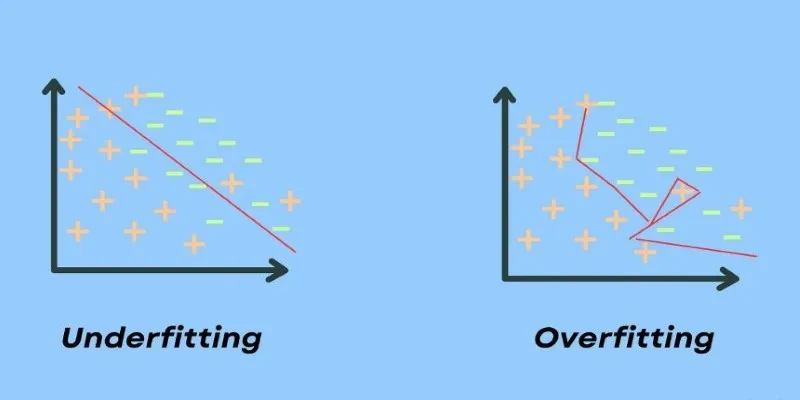

- Model Accuracy and Convergence: Federated learning can converge more slowly, or reach lower accuracy, than training on centralized data. Because the data on each device is not identically distributed, local models may drift apart during training, causing inconsistencies. Careful strategies are needed to keep the global model effective and accurate.
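
One published strategy for limiting this drift is FedProx, which adds a proximal term (mu / 2) * ||w - w_global||^2 to each device's local objective so that local training is pulled back toward the current global model. The sketch below shows only that local update on a toy linear model; the two devices' data, the value of `mu`, and the helper names `local_train_prox` and `drift` are hypothetical, and a full FedProx run would still aggregate the results on the server.

```python
# A FedProx-style local update on a toy linear model y ≈ w*x + b.
# mu controls how strongly local training is pulled back toward the
# global model; mu = 0 reduces to plain local gradient descent.

def local_train_prox(global_weights, data, mu, lr=0.05, epochs=20):
    """Minimize local MSE + (mu / 2) * ||weights - global_weights||^2 by gradient descent."""
    w_g, b_g = global_weights
    w, b = global_weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Gradient of the proximal term pulls weights toward the global model
        grad_w += mu * (w - w_g)
        grad_b += mu * (b - b_g)
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def drift(local_weights, global_weights):
    """Euclidean distance between a local model and the global model."""
    return sum((lw - gw) ** 2 for lw, gw in zip(local_weights, global_weights)) ** 0.5


global_model = (0.0, 0.0)
# Two hypothetical devices whose data follow opposite trends (non-identical data)
device_a = [(x, 3 * x + 1) for x in (0.0, 1.0, 2.0)]
device_b = [(x, -2 * x + 5) for x in (0.0, 1.0, 2.0)]

results = {}
for mu in (0.0, 1.0):
    results[mu] = (drift(local_train_prox(global_model, device_a, mu), global_model),
                   drift(local_train_prox(global_model, device_b, mu), global_model))
    print(f"mu={mu}: drift A = {results[mu][0]:.3f}, drift B = {results[mu][1]:.3f}")
```

With `mu = 1.0`, both devices end up closer to the global model than with `mu = 0.0`, at the cost of fitting their own data less tightly; in practice `mu` is typically tuned per task.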

Conclusion

Federated learning is a groundbreaking approach that enables AI model training without data leaving its local environment. By keeping raw data private and sharing only model updates, it provides a secure, privacy-preserving solution applicable across various industries, from healthcare to finance. Although challenges like device heterogeneity and communication overhead exist, federated learning holds significant promise for the future of AI.
