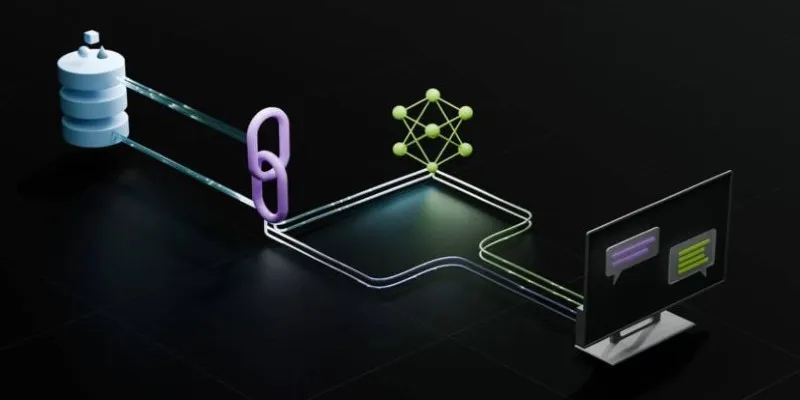

Imagine asking your computer a question and having it read documents, understand them, and give you an answer. That is the essence of Retrieval-Augmented Generation (RAG). Sometimes, however, RAG retrieves irrelevant information. Enter CRAG, a technique that improves RAG by selecting more relevant facts before the AI writes its response. Let’s explore how it works.

The Problem with Regular RAG

To appreciate CRAG’s benefits, we first need to understand RAG’s limitations. RAG systems work in two main steps: finding related documents and using them to generate a response. The accuracy of the final answer therefore depends heavily on the quality of the retrieved documents. If they’re too generic, outdated, or irrelevant, the response suffers, and the AI may “hallucinate,” fabricating details to fill the gaps.

Most RAG systems rely on keyword matching or vector similarity, which often retrieves text that looks similar but doesn’t actually address the question. For example, a search for “Why does the sky look blue?” might return articles about skydiving tips: they match only because both mention “sky,” yet they say nothing about why the sky is blue.
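To make that failure concrete, here is a minimal sketch of a keyword-matching retriever. It assumes a simple word-overlap (Jaccard) score, which is much cruder than the BM25 or embedding similarity real systems use; the function names and example documents are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def overlap_score(query: str, doc: str) -> float:
    """Jaccard overlap: shared words divided by all distinct words."""
    q, d = tokenize(query), tokenize(doc)
    union = q | d
    return len(q & d) / len(union) if union else 0.0


query = "Why does the sky look blue?"
docs = [
    "Skydiving tips: check the sky and wind before you jump.",
    "Rayleigh scattering makes short blue wavelengths dominate daylight.",
]

# Rank documents by overlap, highest first.
for doc in sorted(docs, key=lambda d: overlap_score(query, d), reverse=True):
    print(f"{overlap_score(query, doc):.2f}  {doc}")
```

The skydiving snippet scores 0.14 because it shares “the” and “sky” with the query, so it outranks the physics explanation (0.08), which shares only “blue.” This surface-level match is exactly what a confidence-scoring step is meant to catch.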

So how do we solve this? This is where CRAG steps in, offering a more intelligent way to separate useful documents from the rest.

What CRAG Does Differently

CRAG stands for Confidence-Ranked Answer Generation. It acts as a filter, scoring each document based on its potential to contribute to a valuable answer before the AI begins writing.

Here’s a simplified breakdown of CRAG:

Document Retrieval (Same as RAG)

First, as in regular RAG, a retriever model collects documents that match the query.

Confidence Scoring (CRAG’s Superpower)

CRAG then evaluates each document, assigning it a confidence score that reflects how likely it is to improve the answer. Only the top-ranked documents move on to the next step.

Selective Answer Generation

The system generates several draft answers, each from a different subset of the top-ranked documents, much like writing several drafts of an essay.

Best Answer Selection

Each draft is scored for relevance, clarity, and accuracy, and the highest-scoring draft becomes the final answer.
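The four steps above can be sketched as a single function. This is a minimal illustration under stated assumptions, not a reference implementation: `crag_answer` and its parameters are hypothetical names, the retriever is assumed to have already returned `docs`, and the toy functions stand in for a real reranker, LLM, and answer judge.

```python
import re
from itertools import combinations
from typing import Callable, Sequence


def crag_answer(
    query: str,
    docs: Sequence[str],
    score_doc: Callable[[str, str], float],
    generate: Callable[[str, Sequence[str]], str],
    score_answer: Callable[[str, str, Sequence[str]], float],
    top_n: int = 3,
    subset_size: int = 2,
) -> str:
    """Hypothetical CRAG loop: rank docs, draft from subsets, keep the best draft."""
    # Step 2: confidence scoring; keep only the top_n documents.
    ranked = sorted(docs, key=lambda d: score_doc(query, d), reverse=True)[:top_n]
    # Step 3: write one draft per subset of the high-confidence documents.
    k = min(subset_size, len(ranked))
    drafts = [(generate(query, subset), subset) for subset in combinations(ranked, k)]
    # Step 4: return the draft with the highest answer score.
    best_draft, _ = max(drafts, key=lambda pair: score_answer(query, pair[0], pair[1]))
    return best_draft


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def toy_score_doc(query: str, doc: str) -> float:
    # Stand-in for a reranker: share of query words found in the document.
    q = words(query)
    return len(q & words(doc)) / len(q)


def toy_generate(query: str, subset: Sequence[str]) -> str:
    # Stand-in for an LLM call: simply joins the chosen documents.
    return " ".join(subset)


def toy_score_answer(query: str, draft: str, subset: Sequence[str]) -> float:
    # Stand-in for an answer judge: query coverage only. A real judge would
    # also rate clarity and check the draft against its source `subset`.
    return toy_score_doc(query, draft)


docs = [
    "sunlight scatters off air molecules",
    "blue light scatters more than red light",
    "skydiving requires a parachute",
    "the sky looks blue because of scattering",
]
answer = crag_answer("Why does the sky look blue?", docs,
                     toy_score_doc, toy_generate, toy_score_answer)
print(answer)
```

With these toy components the skydiving document is dropped at the confidence step, and the winning draft combines the two documents that mention blue light and scattering. Swapping in a real reranker, LLM, and judge only changes the three functions passed in.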

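This process takes more time and compute than traditional RAG, since every extra draft and every scoring pass is another model call, but it can substantially improve answer accuracy and reliability. Because the confidence scorer is trained on real-world examples of helpful and unhelpful documents, it learns to keep misleading sources out of the drafts, which reduces hallucinations.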
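A quick count shows where the extra time goes. This sketch assumes one draft per subset of `subset_size` documents drawn from the top `top_n`, one generator call per draft, and optionally one LLM-judge call per draft; reranker calls are left out of the count, and `crag_model_calls` is a hypothetical helper.

```python
from math import comb


def crag_model_calls(top_n: int, subset_size: int, llm_judge: bool = True) -> int:
    """Generator calls (one per draft) plus optional judge calls (one per draft)."""
    drafts = comb(top_n, subset_size)  # number of distinct document subsets
    return drafts * 2 if llm_judge else drafts


# Plain RAG makes a single generator call.
for n, k in [(3, 2), (4, 2), (5, 3)]:
    print(f"top_n={n}, subset_size={k}: {crag_model_calls(n, k)} calls vs 1 for plain RAG")
```

Even the top 4 documents taken in pairs means 6 drafts and 12 model calls, so in practice it makes sense to keep `top_n` and `subset_size` small, or to sample only a few subsets.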

Why CRAG Makes RAG Smarter

Confidence-based ranking keeps weak or unrelated sources out of the answer, which reduces hallucinations. The draft-based approach also lets the system try out different phrasings and explanation styles and keep whichever works best.

CRAG also handles complex, multi-part questions well. Asked “How does climate change affect agriculture, and how can AI help?”, CRAG is more likely to address both parts, because a draft built from documents on each topic can outscore drafts that cover only one of them.
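One way a scoring step can favor the draft that answers every part of the question is to measure aspect coverage. This sketch assumes each part of the question has already been mapped by hand to a few key terms; the term sets, drafts, and the `coverage` function are hypothetical, and a production system would more likely ask an LLM judge whether each part is answered.

```python
import re


def coverage(draft: str, aspects: list[set[str]]) -> float:
    """Fraction of question aspects whose key terms appear in the draft."""
    words = set(re.findall(r"[a-z]+", draft.lower()))
    return sum(1 for terms in aspects if terms & words) / len(aspects)


# "How does climate change affect agriculture, and how can AI help?"
aspects = [
    {"crop", "crops", "yields", "drought", "farming"},  # effects on agriculture
    {"ai", "model", "models", "forecasting"},           # how AI can help
]
drafts = [
    "Drought and heat reduce crop yields in many regions.",
    "Drought lowers yields, and AI forecasting helps farmers plan planting.",
]
best = max(drafts, key=lambda d: coverage(d, aspects))
print(best)
```

The first draft covers only the agriculture aspect (score 0.5), while the second covers both (score 1.0) and is selected.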

CRAG also makes it easier to evaluate and improve the system over time. Because every answer attempt is scored, developers can see which sources and drafts score well and which fall short, and use that feedback to refine the retriever, reranker, or generator.
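As a sketch of that feedback loop, suppose each draft’s score is logged together with the IDs of the documents it was built from (the log format and document IDs are hypothetical). Averaging scores per document highlights sources that tend to drag answers down; these could be removed from the index or used as negative examples when retraining the reranker.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical log: one record per scored draft.
log = [
    {"draft_score": 0.9, "doc_ids": ["doc_a", "doc_b"]},
    {"draft_score": 0.4, "doc_ids": ["doc_a", "doc_c"]},
    {"draft_score": 0.3, "doc_ids": ["doc_b", "doc_c"]},
]


def mean_score_per_doc(records: list[dict]) -> dict[str, float]:
    """Average score of the drafts each document contributed to."""
    scores: dict[str, list[float]] = defaultdict(list)
    for record in records:
        for doc_id in record["doc_ids"]:
            scores[doc_id].append(record["draft_score"])
    return {doc_id: mean(values) for doc_id, values in scores.items()}


per_doc = mean_score_per_doc(log)
# Print documents from weakest to strongest average.
for doc_id, score in sorted(per_doc.items(), key=lambda item: item[1]):
    print(f"{doc_id}: {score:.2f}")
```

Here `doc_c` has the lowest average because it appears only in the two weaker drafts.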

How You Can Use CRAG in a RAG Pipeline

Wondering how to implement CRAG? It can be integrated into most RAG pipelines with some modifications. Here’s a basic overview:

Set Up the Retriever

Use a retriever such as FAISS or Elasticsearch to fetch the top documents for each user query. These form the pool of potential sources.

Add the Confidence Reranker

Add a reranking model, often a small language model or a fine-tuned transformer such as a cross-encoder, to score how useful each document is for answering the query.

Create Multiple Drafts

Feed different combinations of high-confidence documents into a generator model (e.g., GPT or another LLM) to produce one draft answer per combination.

Score and Pick the Best

Finally, use a scoring function that considers clarity, truthfulness, and relevance to select the best draft as the final answer.
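The final scoring function can be as simple as a weighted sum of per-criterion scores. In this sketch the weights are hypothetical (truthfulness is weighted most heavily as an illustrative choice), the per-criterion scores are assumed to come from elsewhere, such as an LLM judge or a check against the source documents, and `DraftScores` and `combined_score` are illustrative names rather than a library API.

```python
from dataclasses import dataclass


@dataclass
class DraftScores:
    clarity: float       # 0..1, how readable the draft is
    truthfulness: float  # 0..1, how well its claims are supported by the sources
    relevance: float     # 0..1, how directly it answers the query


# Hypothetical weights; they sum to 1 so the combined score stays in 0..1.
WEIGHTS = {"clarity": 0.2, "truthfulness": 0.5, "relevance": 0.3}


def combined_score(s: DraftScores) -> float:
    """Weighted sum of the three per-criterion scores."""
    return (
        WEIGHTS["clarity"] * s.clarity
        + WEIGHTS["truthfulness"] * s.truthfulness
        + WEIGHTS["relevance"] * s.relevance
    )


drafts = {
    "draft_1": DraftScores(clarity=0.9, truthfulness=0.6, relevance=0.8),
    "draft_2": DraftScores(clarity=0.7, truthfulness=0.9, relevance=0.8),
}
best = max(drafts, key=lambda name: combined_score(drafts[name]))
print(best, round(combined_score(drafts[best]), 2))
```

The clearer `draft_1` (0.72) loses to `draft_2` (0.83) because its lower truthfulness score costs more than its extra clarity gains. Adjusting `WEIGHTS` is how you would encode a different trade-off.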

Several open-source tools and libraries, such as LangChain, Haystack, and LlamaIndex, support custom reranking and multi-passage generation, simplifying CRAG’s integration into chatbots or search engines.

Conclusion

While RAG models are useful, their effectiveness hinges on the quality of the information they retrieve. CRAG adds a layer of discernment: it selects the most valuable documents and tests multiple drafts before settling on an answer. It’s like giving your AI a second (or third) opinion before it responds, and it can produce clearer, more accurate answers as a result. Whether you’re developing a chatbot or a student project, understanding how CRAG improves on RAG helps you build better systems. In AI, even minor adjustments can yield significant improvements.
