Apache Kafka is a distributed event streaming platform built to handle large volumes of real-time data. Originally developed at LinkedIn, Kafka is now widely used by organizations that need to process continuous streams of records reliably. Unlike traditional message queues, which typically discard a message once it has been consumed, Kafka stores records in a durable, append-only log for a configurable retention period, so consumers can replay a stream from any retained position. This makes it a strong fit for applications that need a consistent, replayable history of events.

Many businesses leverage Kafka as the backbone for applications dependent on real-time data and fault-tolerant communication. This article explores common Apache Kafka use cases and provides a clear, step-by-step guide to installing it correctly.

Common Use Cases of Apache Kafka

Apache Kafka excels in environments where data flows continuously and decisions must be made quickly. One notable application is powering real-time analytics pipelines. For instance, retailers and online platforms stream live transaction and clickstream data through Kafka into analytics dashboards. Businesses can then monitor sales trends, stock levels, and customer behavior as they happen and react immediately instead of waiting for overnight reports.

In the financial sector, Kafka plays a crucial role in fraud detection and trade monitoring. Banks and payment networks utilize Kafka to stream transaction data into processing systems, quickly identifying unusual patterns and triggering immediate alerts, which is vital for security.

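Kafka is also popular in microservices architectures, where independent services need to share information without tight coupling. Each service publishes events to Kafka topics and subscribes to the topics it cares about, so services stay autonomous while remaining informed about the system's state. Because producers and consumers never call each other directly, a slow or failed consumer does not block the services that produce events; the events wait in the topic, within the retention period, until the consumer catches up. This decoupling improves flexibility and reduces the risk of cascading failures.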

For operations teams, Kafka simplifies log collection by aggregating logs from multiple servers into a central stream. Tools like Elasticsearch or Splunk can then analyze these logs to derive insights, helping identify issues before they escalate.

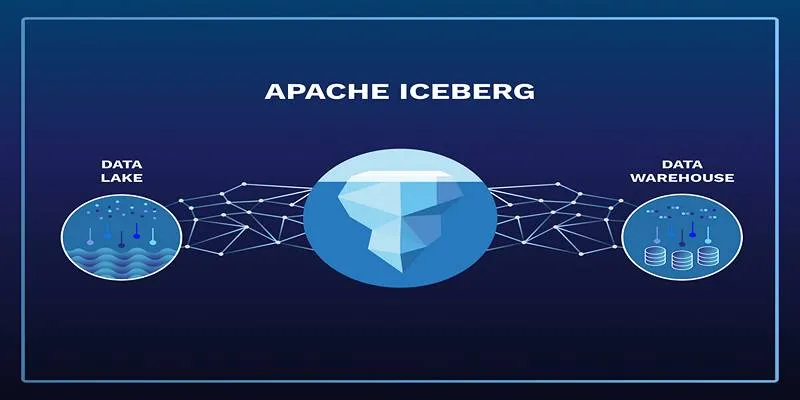

Lastly, Kafka is widely used for feeding data lakes. Organizations stream live operational data into large storage systems like Hadoop or cloud-based warehouses, avoiding delays and system strain associated with traditional batch uploads while keeping source systems responsive and fast.

Preparing for Kafka Installation

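Before installing Kafka, a bit of preparation is necessary. Kafka runs on the Java Virtual Machine, so install a supported Java version first; Java 8 still works with Kafka 3.x but has been deprecated since Kafka 3.0, so Java 11 or 17 is the safer choice. This guide uses ZooKeeper, which Kafka releases before 4.0 rely on for broker metadata coordination and controller election. Kafka 4.0 and later run only in KRaft mode, without ZooKeeper, so the ZooKeeper-based steps below apply to 3.x releases. While Kafka and ZooKeeper can share one machine for testing, production setups should run a ZooKeeper ensemble of at least three separate nodes for reliability.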
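A ZooKeeper ensemble stays available only while a majority of its nodes is up, which is why three nodes is the practical minimum: three nodes tolerate one failure, five tolerate two. The bash sketch below writes a zookeeper.properties file and the myid file for one member of a three-node ensemble. The host names (zk1.example.internal and so on), the write_zk_config helper, and the timing values are illustrative assumptions rather than values prescribed by this guide.

```shell
#!/usr/bin/env bash
# Sketch: generate the ZooKeeper config for one member of a three-node ensemble.
# Host names are hypothetical placeholders; replace them with your own.
ZK_HOSTS=(zk1.example.internal zk2.example.internal zk3.example.internal)

# write_zk_config DATA_DIR MY_ID OUT_FILE  (hypothetical helper)
write_zk_config() {
  local data_dir=$1 my_id=$2 out=$3 i
  mkdir -p "$data_dir"
  # Each node learns its own server number from the myid file in dataDir.
  echo "$my_id" > "$data_dir/myid"
  {
    echo "dataDir=$data_dir"
    echo "clientPort=2181"
    # Illustrative timing: tickTime is in milliseconds,
    # initLimit and syncLimit are counted in ticks.
    echo "tickTime=2000"
    echo "initLimit=10"
    echo "syncLimit=5"
    # One line per member: server.N=host:peerPort:leaderElectionPort
    for i in "${!ZK_HOSTS[@]}"; do
      echo "server.$((i + 1))=${ZK_HOSTS[$i]}:2888:3888"
    done
  } > "$out"
}

# A majority must stay up, so n nodes tolerate (n - 1) / 2 failures.
echo "Ensemble of ${#ZK_HOSTS[@]} nodes tolerates $(( (${#ZK_HOSTS[@]} - 1) / 2 )) failure(s)"
```

On the second host you might run write_zk_config /var/lib/zookeeper 2 config/zookeeper.properties. With the same dataDir on every node, the properties file is identical everywhere and only the myid value differs.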

Make sure your server has sufficient memory, CPU, and fast disks, because Kafka's performance depends heavily on disk speed and network bandwidth. SSDs are recommended for better throughput. Monitor disk space closely, since Kafka keeps every message on disk until its retention period expires.
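To see why disk space deserves close attention, a rough capacity estimate multiplies the incoming write rate by the retention period and the replication factor, then divides by the number of brokers. The bash function below is a back-of-the-envelope sketch; the estimate_disk_gib name and the example figures (5 MiB/s, seven days, replication factor 3, three brokers) are hypothetical, and it ignores compression and index overhead.

```shell
#!/usr/bin/env bash
# estimate_disk_gib WRITE_MIB_PER_S RETENTION_DAYS REPLICATION_FACTOR BROKERS
# Hypothetical helper: prints the approximate GiB of log data each broker holds
# once retention is full, assuming partitions are spread evenly across brokers.
estimate_disk_gib() {
  local mib_per_s=$1 days=$2 rf=$3 brokers=$4
  # Every replica stores its own copy, so the total scales with the replication factor.
  local total_mib=$(( mib_per_s * days * 86400 * rf ))
  # Divide across brokers, rounding up.
  local per_broker_mib=$(( (total_mib + brokers - 1) / brokers ))
  # Convert MiB to GiB, rounding up.
  echo $(( (per_broker_mib + 1023) / 1024 ))
}

estimate_disk_gib 5 7 3 3
```

With these figures each broker needs roughly 2,954 GiB (about 2.9 TiB) for retained data alone. Kafka deletes whole log segments rather than individual messages, so real usage runs somewhat above the estimate; plan extra headroom on top.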

Plan a clear directory structure before starting. Kafka stores its log data (the partition files that hold messages) in the directories listed in the log.dirs setting of config/server.properties. The sample configuration points this at /tmp/kafka-logs, which many systems clear on reboot, so choose a reliable, fast storage path instead. Also decide how many brokers you need and how to distribute them across your infrastructure. Even in development, it helps to start with a structure that mirrors your intended production layout.
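log.dirs accepts a comma-separated list of directories, and Kafka places new partitions across them, which is one way to spread data over several disks. The bash sketch below creates one data directory per disk under a root path and prints the matching log.dirs line to paste into config/server.properties. The /var/lib/kafka root, the data-N naming, and the plan_log_dirs helper are hypothetical choices.

```shell
#!/usr/bin/env bash
# Hypothetical root for Kafka data; override with KAFKA_DATA_ROOT.
KAFKA_DATA_ROOT="${KAFKA_DATA_ROOT:-/var/lib/kafka}"

# plan_log_dirs ROOT COUNT  (hypothetical helper)
# Creates ROOT/data-1 .. ROOT/data-COUNT (for example one per mounted disk)
# and prints a log.dirs line listing them, comma-separated.
plan_log_dirs() {
  local root=$1 count=$2 i
  local -a dirs=()
  for (( i = 1; i <= count; i++ )); do
    mkdir -p "$root/data-$i"
    dirs+=("$root/data-$i")
  done
  # With IFS set to a comma, "${dirs[*]}" joins the array elements with commas.
  local IFS=,
  echo "log.dirs=${dirs[*]}"
}

# Example: two data directories under the root path.
# plan_log_dirs "$KAFKA_DATA_ROOT" 2
```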

Installing Apache Kafka Step by Step

Installing Apache Kafka is straightforward. Download a binary release from the official Apache Kafka site, choosing a 3.x release because the ZooKeeper-based steps below do not work with Kafka 4.0 and later. Extract the archive to your chosen directory.

- Start ZooKeeper: Kafka ships with a basic ZooKeeper configuration in config/zookeeper.properties. Start ZooKeeper with:

  bin/zookeeper-server-start.sh config/zookeeper.properties

  This starts ZooKeeper on its default port, 2181. The command runs in the foreground, so leave it running and open a second terminal to start a Kafka broker.

- Configure and start a Kafka broker: Edit config/server.properties to change defaults such as broker.id, log.dirs, or the listener address. Then launch the broker:

  bin/kafka-server-start.sh config/server.properties

  Kafka is now running and ready to handle data. Create a topic with the built-in script:

  bin/kafka-topics.sh --create --topic test-topic --bootstrap-server localhost:9092 --partitions 1 --replication-factor 1

- Verify the setup: Produce and consume a few messages. Start the console producer and type a few lines to send them:

  bin/kafka-console-producer.sh --topic test-topic --bootstrap-server localhost:9092

  Open a separate terminal to consume those messages:

  bin/kafka-console-consumer.sh --topic test-topic --from-beginning --bootstrap-server localhost:9092
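The manual steps above can also be scripted. The bash sketch below starts ZooKeeper and the broker in the background with the start scripts' -daemon option, waits until each port accepts TCP connections, and then sends and reads a message without interactive terminals. It assumes the Kafka 3.x archive was extracted to $HOME/kafka (override with KAFKA_HOME), the default ports 2181 and 9092, and that test-topic already exists; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical install location; point KAFKA_HOME at your extracted Kafka 3.x release.
KAFKA_HOME="${KAFKA_HOME:-$HOME/kafka}"
BOOTSTRAP="localhost:9092"

# wait_for_port HOST PORT TIMEOUT_SECONDS
# Returns 0 once HOST:PORT accepts a TCP connection, 1 if TIMEOUT_SECONDS pass first.
# Uses bash's built-in /dev/tcp redirection, so no extra tools are required.
wait_for_port() {
  local host=$1 port=$2 timeout=$3 waited=0
  until (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; do
    if (( waited >= timeout )); then
      return 1
    fi
    sleep 1
    waited=$(( waited + 1 ))
  done
  return 0
}

# start_local_kafka: start ZooKeeper, wait for it, then start one broker.
start_local_kafka() {
  "$KAFKA_HOME/bin/zookeeper-server-start.sh" -daemon "$KAFKA_HOME/config/zookeeper.properties"
  wait_for_port localhost 2181 30 || { echo "ZooKeeper did not come up" >&2; return 1; }

  "$KAFKA_HOME/bin/kafka-server-start.sh" -daemon "$KAFKA_HOME/config/server.properties"
  wait_for_port localhost 9092 60 || { echo "Broker did not come up" >&2; return 1; }
}

# smoke_test: write one message to test-topic, then print the first record in the
# topic (which may be an earlier test message if the topic is not empty).
smoke_test() {
  echo "hello from smoke_test" |
    "$KAFKA_HOME/bin/kafka-console-producer.sh" --topic test-topic --bootstrap-server "$BOOTSTRAP"
  # --max-messages makes the consumer exit after one record;
  # --timeout-ms stops it if nothing arrives within 10 seconds.
  "$KAFKA_HOME/bin/kafka-console-consumer.sh" --topic test-topic --from-beginning \
    --bootstrap-server "$BOOTSTRAP" --max-messages 1 --timeout-ms 10000
}
```

After sourcing the file, run start_local_kafka && smoke_test. To shut down, run bin/kafka-server-stop.sh first and bin/zookeeper-server-stop.sh second.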

In a production environment, configure multiple brokers, each with its own broker.id, and point them to the same ZooKeeper ensemble. Adjust replication and partitioning to suit your fault tolerance and throughput needs.
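As an illustration, the bash sketch below generates one server.properties file per broker for a hypothetical three-broker cluster. Each file gets a unique broker.id and advertised host name, and all of them share the same zookeeper.connect string. The host names, paths, and write_broker_configs helper are assumptions; default.replication.factor=3 combined with min.insync.replicas=2 is one common fault-tolerance choice, not a requirement.

```shell
#!/usr/bin/env bash
# Hypothetical ZooKeeper ensemble shared by every broker.
ZK_CONNECT="zk1.example.internal:2181,zk2.example.internal:2181,zk3.example.internal:2181"

# write_broker_configs OUT_DIR BROKER_COUNT  (hypothetical helper)
# Writes OUT_DIR/server-N.properties for N = 1..BROKER_COUNT, one per broker host.
write_broker_configs() {
  local out_dir=$1 count=$2 id
  mkdir -p "$out_dir"
  for (( id = 1; id <= count; id++ )); do
    cat > "$out_dir/server-$id.properties" <<EOF
# Must be unique for every broker in the cluster.
broker.id=$id
listeners=PLAINTEXT://0.0.0.0:9092
advertised.listeners=PLAINTEXT://kafka$id.example.internal:9092
log.dirs=/var/lib/kafka/data
# Every broker points at the same ZooKeeper ensemble.
zookeeper.connect=$ZK_CONNECT
# Defaults for new topics: three replicas; with acks=all,
# a write needs at least two in-sync replicas to succeed.
default.replication.factor=3
min.insync.replicas=2
EOF
  done
}
```

Copy server-N.properties to the matching host as its config/server.properties; everything except broker.id and advertised.listeners stays identical across brokers.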

Kafka supports encrypted connections and authentication, but both are disabled by default. Once the basic installation works, secure the cluster by enabling SSL (TLS) encryption and SASL authentication. Both changes require updating the broker configuration as well as the configuration of every client.
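As a rough illustration, the bash sketch below writes broker and client settings for a SASL_SSL listener using the PLAIN mechanism. Every path, host name, user name, and password is a hypothetical placeholder, and it assumes the keystore and truststore files already exist (for example, created with keytool). Kafka also supports other SASL mechanisms, such as SCRAM, GSSAPI (Kerberos), and OAUTHBEARER.

```shell
#!/usr/bin/env bash
# write_sasl_ssl_configs OUT_DIR  (hypothetical helper)
# Writes two property files: additions for the broker's server.properties and a
# client config for producers and consumers. Paths, host name, user names and
# passwords are placeholders; store passwords can come from the environment.
write_sasl_ssl_configs() {
  local out_dir=$1
  local ks_pass=${KEYSTORE_PASSWORD:-changeit}
  local ts_pass=${TRUSTSTORE_PASSWORD:-changeit}
  mkdir -p "$out_dir"

  # Broker side: one SASL_SSL listener, also used for broker-to-broker traffic.
  # The JAAS line sets the broker's own login (admin) and the users it accepts.
  # Assumes the private key and the keystore share one password.
  cat > "$out_dir/server-sasl-ssl.properties" <<EOF
listeners=SASL_SSL://0.0.0.0:9093
advertised.listeners=SASL_SSL://kafka1.example.internal:9093
security.inter.broker.protocol=SASL_SSL
sasl.enabled.mechanisms=PLAIN
sasl.mechanism.inter.broker.protocol=PLAIN
listener.name.sasl_ssl.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="admin" password="admin-secret" user_admin="admin-secret" user_alice="alice-secret";
ssl.keystore.location=/etc/kafka/ssl/kafka.keystore.jks
ssl.keystore.password=$ks_pass
ssl.key.password=$ks_pass
ssl.truststore.location=/etc/kafka/ssl/kafka.truststore.jks
ssl.truststore.password=$ts_pass
EOF

  # Client side: authenticate as alice and trust the broker's certificate.
  cat > "$out_dir/client-sasl-ssl.properties" <<EOF
security.protocol=SASL_SSL
sasl.mechanism=PLAIN
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="alice" password="alice-secret";
ssl.truststore.location=/etc/kafka/ssl/client.truststore.jks
ssl.truststore.password=$ts_pass
EOF

  # Both files contain secrets, so restrict them to the owner.
  chmod 600 "$out_dir"/*.properties
}
```

Merge the broker lines into config/server.properties, replacing any existing listeners entries, and restart the broker. Pass the client file to the console tools with --producer.config or --consumer.config. PLAIN transmits passwords without its own encryption, so use it only on an SSL-encrypted listener like this one.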

Maintaining and Monitoring Kafka

Once installed, Kafka requires ongoing monitoring and maintenance to ensure smooth operation. Monitor disk usage, broker uptime, and ZooKeeper health, as running out of space or losing quorum can lead to data loss or outages. Tools like Prometheus and Grafana are commonly used to visualize Kafka metrics.
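Dashboards are the better long-term tool, but a simple check run from cron can catch a filling disk early. The bash sketch below reads the usage percentage of the filesystem holding a Kafka log directory via POSIX df -P and returns a non-zero status above a threshold. The check_disk_usage name, the example path, and the 80% threshold are hypothetical.

```shell
#!/usr/bin/env bash
# check_disk_usage DIR MAX_PERCENT  (hypothetical helper)
# Prints how full the filesystem containing DIR is and returns 1 if usage
# exceeds MAX_PERCENT, so a cron job or monitoring agent can raise an alert.
check_disk_usage() {
  local dir=$1 max=$2 used
  # df -P prints a header plus one line per filesystem; field 5 is "Use%".
  used=$(df -P "$dir" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }')
  echo "$dir: ${used}% used"
  if (( used > max )); then
    echo "WARNING: $dir is above the ${max}% threshold" >&2
    return 1
  fi
  return 0
}

# Example: warn when the disk holding the Kafka log directory passes 80%.
# check_disk_usage /var/lib/kafka/data 80
```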

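Configure retention policies carefully. Kafka lets you limit how long messages are kept or how much disk each partition may use, through broker-wide defaults (log.retention.hours, log.retention.bytes) or per-topic overrides (retention.ms, retention.bytes). Regularly clean up unused topics and monitor under-replicated partitions to keep the cluster stable.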
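A sketch of both tasks with the Kafka CLI tools follows: setting per-topic retention through kafka-configs.sh and listing under-replicated partitions with kafka-topics.sh. The helper names, the KAFKA_HOME location, and the example values are hypothetical. Note that retention.bytes applies to each partition, not to the topic as a whole, and -1 (the default) means no size limit.

```shell
#!/usr/bin/env bash
# Hypothetical install location and broker address.
KAFKA_HOME="${KAFKA_HOME:-$HOME/kafka}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

# days_to_ms DAYS: retention.ms is expressed in milliseconds.
days_to_ms() {
  echo $(( $1 * 24 * 60 * 60 * 1000 ))
}

# set_topic_retention TOPIC DAYS [MAX_BYTES_PER_PARTITION]  (hypothetical helper)
# Overrides retention for one topic; MAX_BYTES defaults to -1 (no size limit).
set_topic_retention() {
  local topic=$1 days=$2 max_bytes=${3:--1}
  "$KAFKA_HOME/bin/kafka-configs.sh" --bootstrap-server "$BOOTSTRAP" \
    --alter --entity-type topics --entity-name "$topic" \
    --add-config "retention.ms=$(days_to_ms "$days"),retention.bytes=$max_bytes"
}

# list_under_replicated: prints partitions with fewer in-sync replicas than
# assigned replicas; empty output means every partition is fully replicated.
list_under_replicated() {
  "$KAFKA_HOME/bin/kafka-topics.sh" --bootstrap-server "$BOOTSTRAP" \
    --describe --under-replicated-partitions
}
```

For example, set_topic_retention test-topic 7 keeps test-topic's data for seven days (604800000 ms) with no size cap.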

Regular backups of configuration files and careful version upgrades are essential for a healthy Kafka deployment. Kafka supports rolling upgrades, one broker at a time, but test each upgrade in a staging environment before applying it to production.
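Backing up configuration can be as simple as archiving the config directory with a timestamp before each change or upgrade. The bash sketch below does this with tar; the backup_kafka_config name and the paths are hypothetical, and it covers configuration files only, not the message data stored in log.dirs.

```shell
#!/usr/bin/env bash
# backup_kafka_config CONFIG_DIR DEST_DIR  (hypothetical helper)
# Writes DEST_DIR/kafka-config-YYYYmmdd-HHMMSS.tar.gz and prints its path.
backup_kafka_config() {
  local src=$1 dest_dir=$2 archive
  archive="$dest_dir/kafka-config-$(date +%Y%m%d-%H%M%S).tar.gz"
  mkdir -p "$dest_dir"
  # -C makes paths in the archive relative to the config directory's parent.
  tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")" || return 1
  echo "$archive"
}

# Example (hypothetical paths):
# backup_kafka_config "$HOME/kafka/config" /var/backups/kafka
```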

Conclusion

Apache Kafka has become a preferred choice for organizations needing reliable, high-throughput event streaming. Its capabilities in real-time analytics, microservices enablement, log aggregation, and data lake ingestion make it a versatile and dependable platform. Setting up Kafka involves installing Java, configuring ZooKeeper, starting brokers, and creating topics, all achievable with a few well-defined commands. Once installed, regular monitoring and proper configuration ensure Kafka delivers reliable performance over time. With thoughtful planning and maintenance, Kafka can efficiently meet the demands of modern data-driven applications.

zfn9
