If you’ve ever sat through a painfully slow training run, you’re not alone. Waiting hours—or even days—for a Hugging Face model to train can feel like watching paint dry. You tweak your code, throw in more GPU power, cross your fingers… and still, it drags. That’s where Optimum and ONNX Runtime step in. Together, they trim down that wait time, reduce the mental gymnastics involved in optimization, and make model training on Hugging Face feel way more manageable.

Let’s break it down without the fluff and walk you through how this combo works, why it’s effective, and how you can get started with minimal fuss.

What’s Happening Under the Hood?

Training transformer models is heavy work. They’re powerful, but they’re also hungry for memory and compute. Optimum, a toolkit from Hugging Face, helps bridge the gap between research-grade models and real-world deployment. Pair it with ONNX Runtime, and you can get faster throughput and smoother runs without flipping your whole codebase on its head.

So, what exactly is ONNX Runtime doing? It optimizes your model at the graph level. It folds constants, fuses chains of small operations (attention or LayerNorm subgraphs, for example) into single optimized kernels, removes redundant nodes, plans memory reuse, and dispatches the work to hardware-specific execution providers for CPU or GPU. Meanwhile, Optimum handles the messy parts, such as exporting the model, aligning the configuration, and wiring ONNX Runtime into the training loop, so there are fewer surprises. You don’t need to reinvent anything; you plug them in and let them do the legwork.
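To make “graph-level optimization” concrete, here is a toy sketch (not ONNX Runtime’s actual code) of one fusion pass. The graph is a hypothetical list of Node records, and the pass merges a MatMul that feeds an Add into a single Gemm-style node. Fewer nodes means fewer kernel launches and fewer intermediate tensors, which is the same flavor of rewrite ONNX Runtime applies, at much larger scale, before execution. Node and fuse_matmul_add are illustrative names, not part of any library.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    op: str            # operator type, e.g. "MatMul", "Add", "Relu"
    inputs: list[str]  # names of tensors this node reads
    output: str        # name of the tensor this node writes


def fuse_matmul_add(nodes: list[Node]) -> list[Node]:
    """Toy pass: rewrite MatMul(a, b) -> Add(_, c) into one Gemm(a, b, c).

    Assumes nodes are in topological order. A MatMul is only fused when its
    output has exactly one consumer, so no other node loses its input.
    """
    # Count how many nodes read each tensor.
    uses: dict[str, int] = {}
    for node in nodes:
        for name in node.inputs:
            uses[name] = uses.get(name, 0) + 1
    producer = {node.output: node for node in nodes}

    result: list[Node] = []
    absorbed: set[str] = set()  # outputs of MatMuls folded into a Gemm
    for node in nodes:
        if node.op == "Add":
            src = producer.get(node.inputs[0])
            if src is not None and src.op == "MatMul" and uses[src.output] == 1:
                absorbed.add(src.output)
                result.append(Node("Gemm", src.inputs + node.inputs[1:], node.output))
                continue
        result.append(node)
    # Drop the MatMul nodes whose work now happens inside a Gemm.
    return [node for node in result if node.output not in absorbed]


graph = [
    Node("MatMul", ["x", "W"], "h"),
    Node("Add", ["h", "b"], "y"),
    Node("Relu", ["y"], "out"),
]
print([node.op for node in fuse_matmul_add(graph)])  # ['Gemm', 'Relu']
```

The single-consumer check matters: if another node also read the MatMul’s output, deleting the MatMul would break that node, so the pass leaves such graphs alone.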

This isn’t just about speed, either. Lower latency, reduced costs, and more stable training sessions are part of the package, too. And for the model architectures Optimum supports, it works with Hugging Face Transformers out of the box.

How to Set It All Up (Without Losing Your Mind)

No need to crawl through forum threads or dig through GitHub issues. Here’s a clean setup you can follow—just five steps to get your Hugging Face model running with Optimum + ONNX Runtime.

Step 1: Install the Essentials

Start with the libraries. If you haven’t already, install Hugging Face Transformers, Optimum, and ONNX Runtime. It’s one command away:

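```shell
pip install transformers "optimum[onnxruntime]"
```

That bracketed extra installs ONNX Runtime together with the ONNX export dependencies Optimum needs, so there’s no need to list onnxruntime separately. The quotes stop shells like zsh from treating the brackets as a glob pattern. For GPU inference, use the optimum[onnxruntime-gpu] extra instead. For accelerated training, use optimum[onnxruntime-training], which depends on the separate onnxruntime-training package.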

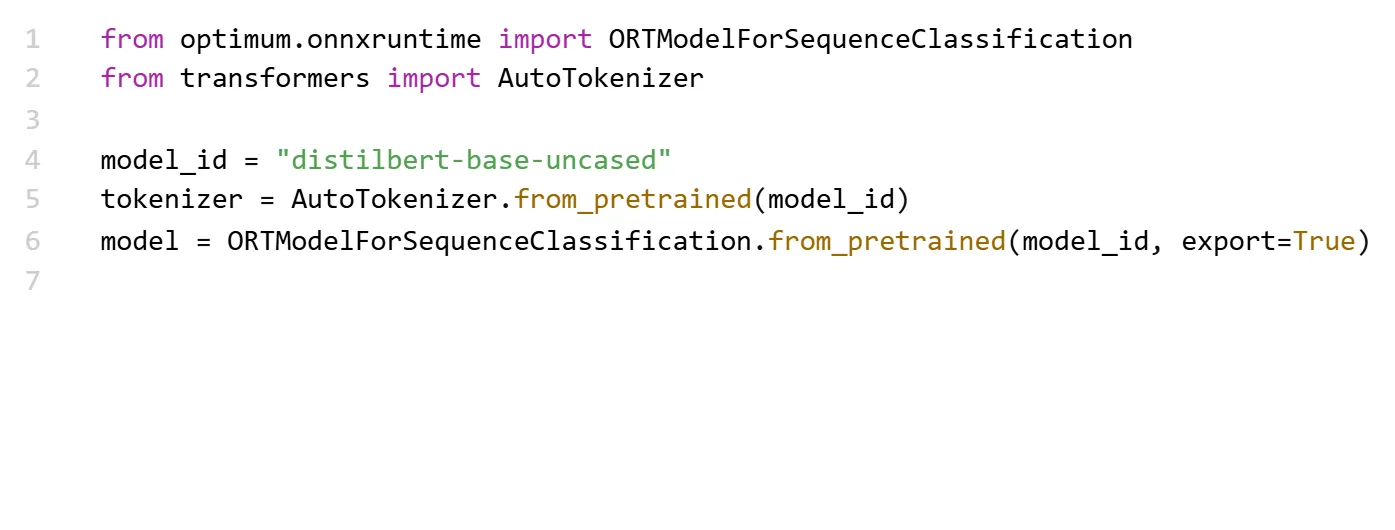
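To confirm the install landed in the environment you’re actually running, a quick standard-library check like the one below works. It is a minimal sketch: the tuple holds PyPI distribution names, and GPU or training builds of ONNX Runtime ship under different names (onnxruntime-gpu, onnxruntime-training), so adjust the tuple if you installed one of those. installed_versions is an illustrative helper name.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages=("transformers", "optimum", "onnxruntime")):
    """Return {distribution name: version string, or None if missing}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None  # not installed in this environment
    return found


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```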

Step 2: Convert Your Model to ONNX

You’ll need to export your model to the ONNX format. Optimum makes this straightforward:

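```python
from optimum.onnxruntime import ORTModelForSequenceClassification
from transformers import AutoTokenizer

model_id = "distilbert-base-uncased"

tokenizer = AutoTokenizer.from_pretrained(model_id)
# export=True converts the PyTorch checkpoint to ONNX on the fly and loads it
# into an ONNX Runtime session. This base checkpoint has no trained
# classification head, so its predictions are random until you fine-tune
# (or point model_id at an already fine-tuned classifier).
model = ORTModelForSequenceClassification.from_pretrained(model_id, export=True)
# Optional: save the exported model so later runs can skip the export step.
model.save_pretrained("onnx_model_directory")
```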

The export=True argument does the heavy lifting: behind the scenes, it converts the model to ONNX and loads it into an ONNX Runtime inference session. You don’t have to pick opset versions or trace the graph manually.

Step 3: Tokenize Your Data

No major detours here. Just use the tokenizer like you normally would:

```python
inputs = tokenizer("The future of model training is here.", return_tensors="pt")
```

This input will work seamlessly with your ONNX-ified model. No need to modify anything downstream.

Step 4: Run Inference or Fine-Tune

For inference:

```python
outputs = model(**inputs)
print(outputs.logits)  # raw, unnormalized scores, one per label
```
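The model returns raw logits, not probabilities. One way to turn a row of them into a label is a numerically stable softmax followed by an argmax. The sketch below does that in plain Python and assumes you pass it one row as a list (for example outputs.logits[0].tolist()) plus the model’s model.config.id2label mapping. logits_to_prediction is an illustrative helper name, and with the untrained head of the base checkpoint the labels will simply be LABEL_0 and LABEL_1, with meaningless scores.

```python
import math


def logits_to_prediction(logits, id2label):
    """Turn one row of classification logits into (label, probability)."""
    # Subtract the max before exponentiating so large logits can't overflow.
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return id2label[best], probs[best]


label, prob = logits_to_prediction([2.0, 0.0], {0: "NEGATIVE", 1: "POSITIVE"})
print(label, round(prob, 3))  # NEGATIVE 0.881
```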

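Fine-tuning works differently. The ORTModelFor… classes are inference-only, so you don’t hand them to a Trainer. Instead, Optimum provides ORTTrainer and ORTTrainingArguments, drop-in replacements for the Transformers Trainer and TrainingArguments. They take an ordinary PyTorch model (for example, one loaded with AutoModelForSequenceClassification) and run its forward and backward passes through ONNX Runtime’s training backend rather than the standard PyTorch engine. You keep all the training arguments you’re familiar with: learning rate, batch size, epochs, and so on. This path needs the onnxruntime-training package (installable via the optimum[onnxruntime-training] extra), and support for these training classes varies across Optimum releases, so check the documentation for the version you install.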

Step 5: Measure the Boost

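Don’t skip this. Run your model through both regular PyTorch and the ONNX Runtime path, on the same inputs and the same hardware. Figures like 2x faster inference or up to 40% shorter training time are sometimes quoted, but the real gain depends heavily on the model, sequence length, batch size, and hardware, so measure it rather than assume it.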

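For a quick benchmark, a short timing loop around each path is enough. Discard the first few calls, since they include one-time setup costs such as session initialization. For more systematic comparisons across backends and hardware, Hugging Face maintains a separate optimum-benchmark project.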
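A minimal timing harness using only the standard library might look like this. It warms each callable up, times a fixed number of calls with time.perf_counter, and compares medians, because the median is less sensitive than the mean to occasional slow outliers. median_latency and speedup are illustrative names, and the warmup and runs defaults are arbitrary assumptions, not recommended values.

```python
import statistics
import time


def median_latency(fn, *, warmup=3, runs=20):
    """Median wall-clock seconds per call of fn(), measured after warm-up calls."""
    for _ in range(warmup):
        fn()  # warm-up: lets lazy initialization and caches settle
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def speedup(baseline_fn, candidate_fn, **kwargs):
    """How many times faster candidate_fn is than baseline_fn (> 1 means faster)."""
    return median_latency(baseline_fn, **kwargs) / median_latency(candidate_fn, **kwargs)
```

To use it, wrap each path in a zero-argument callable, for example speedup(lambda: pytorch_model(**inputs), lambda: model(**inputs)), where pytorch_model is a hypothetical name for the same checkpoint loaded with AutoModelForSequenceClassification. For a fair comparison, run the PyTorch side under torch.no_grad(). On GPU, call torch.cuda.synchronize() inside the timed PyTorch callable, because CUDA kernels launch asynchronously and the wall-clock time would otherwise miss most of the work.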

Now you have real numbers, not just a hunch, about how much faster everything runs.

Why It’s Worth Your Time (and GPU Hours)

Let’s be honest: switching runtimes sounds like a pain. But with Optimum + ONNX Runtime, the transition is surprisingly painless. And the gains? They can be substantial. Faster inference is one thing, but when you’re pushing models into production, or training dozens in a research loop, those saved hours add up fast.

Here’s what this setup gives you without extra hoops:

- Better Throughput – On CPU or GPU, fused kernels and leaner memory use often mean faster runs and room for larger batch sizes before you hit memory limits.

- Stability – Fewer crashes, smoother logging, and less weird behavior mid-training.

- Portability – ONNX is an open, framework-neutral format, so an exported model can run on any runtime or hardware backend that supports it, which makes deployment to edge devices or cross-platform environments much simpler.

- Lower Costs – If you’re paying for compute by the minute (and who isn’t?), faster training directly means smaller bills.

You don’t have to commit to a massive infrastructure overhaul. You keep your Hugging Face workflow, plug in Optimum and ONNX, and watch the training logs tick by faster.

Where This Really Shines

There are plenty of cases where this combo quietly outperforms standard pipelines. For example:

- DistilBERT and TinyBERT models get a notable acceleration in inference-heavy tasks like Q&A systems and sentiment analysis APIs.

- Models quantized with dynamic or static post-training quantization (for example, through Optimum’s ORTQuantizer) run natively on ONNX Runtime, pushing both speed and memory efficiency further.

- Real-time applications—like chatbots or voice recognition—where latency is make-or-break, benefit from the snappier response ONNX provides.

All of this with no black-box mystery or proprietary lockdowns.
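To see what quantization actually trades away, here is a minimal sketch of asymmetric per-tensor uint8 quantization in plain Python. Floats are mapped to the range 0..255 through a scale and a zero point, and mapping them back introduces a rounding error of at most half a step. In dynamic quantization, weights are quantized ahead of time and activation parameters like these are computed at run time. ONNX Runtime’s real kernels are heavily optimized and support options such as per-channel scales that this sketch omits. quantize_uint8 and dequantize are illustrative names.

```python
def quantize_uint8(values):
    """Asymmetric per-tensor quantization of floats to uint8 (0..255).

    Returns (quantized, scale, zero_point). The range is widened to include
    0.0 so that zero is represented exactly.
    """
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 for an all-zero tensor
    zero_point = round(-lo / scale)
    quantized = [min(255, max(0, round(v / scale) + zero_point)) for v in values]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Map uint8 codes back to approximate float values."""
    return [(q - zero_point) * scale for q in quantized]


q, scale, zp = quantize_uint8([-1.0, -0.25, 0.0, 0.5, 2.0])
print(q, zp)
print(dequantize(q, scale, zp))
```

Each value now takes one byte instead of four, which is where the memory savings come from; the price is the small, bounded rounding error visible in the dequantized output.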

Wrapping It Up

Faster training and inference don’t have to come with trade-offs or headaches. With Hugging Face’s Optimum and ONNX Runtime working together, you get smoother performance, faster results, and less time staring at a terminal waiting for epochs to finish.

No rocket science. No cryptic configs. Just smarter use of the tools already at your fingertips. So if you’re tired of sluggish training cycles and want a quicker way to production—or just better use of your GPU—this setup is worth a look. Go ahead, give your training loop a breather. Let ONNX and Optimum do the heavy lifting.
