Introduction

At first glance, Space Invaders might seem far removed from artificial intelligence. This simple arcade game—a spaceship battling waves of aliens—provides an ideal testing ground for how AI can learn through interaction. Deep Q-Learning, which blends reinforcement learning with deep neural networks, offers a method for machines to acquire skills autonomously. Instead of receiving direct instructions, the AI plays, makes mistakes, and adjusts its approach. Teaching it to play Space Invaders isn’t just nostalgic—it’s a practical step toward more adaptive machine learning systems.

Understanding Deep Q-Learning

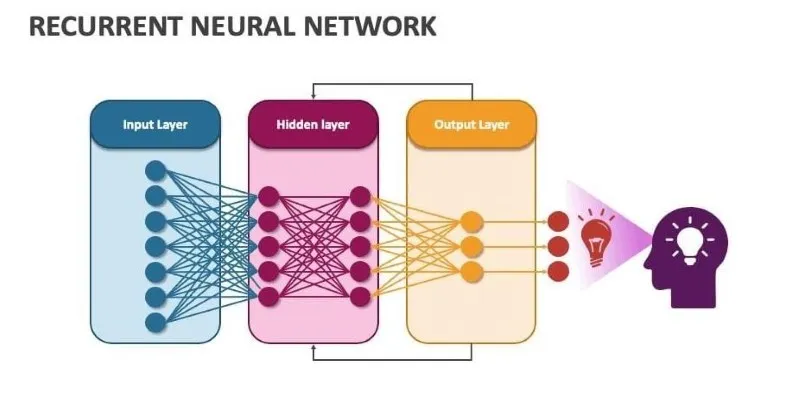

Deep Q-Learning combines the logic of Q-Learning with the pattern recognition strengths of deep neural networks. In basic Q-Learning, an agent learns the value of taking each action in each state and updates those estimates from the rewards it receives, usually storing them in a lookup table. This works well when the number of states is manageable. However, a game like Space Invaders is observed as raw pixels, so the number of distinct screen states is far too large to enumerate, and a simple table of values becomes impractical.
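The table-based update can be sketched in a few lines. This is a minimal illustration rather than a full agent: the learning rate `ALPHA`, the discount factor `GAMMA`, and the state labels `"s0"` and `"s1"` are assumed values chosen for the example.

```python
from collections import defaultdict

ALPHA = 0.1   # learning rate (assumed value)
GAMMA = 0.99  # discount factor (assumed value)

def q_update(q_table, state, action, reward, next_state, done, n_actions):
    """Apply one tabular Q-learning update and return the new Q(state, action)."""
    # Best value obtainable from the next state; zero if the episode ended.
    if done:
        best_next = 0.0
    else:
        best_next = max(q_table[(next_state, a)] for a in range(n_actions))
    td_target = reward + GAMMA * best_next
    # Move the current estimate a small step toward the target.
    q_table[(state, action)] += ALPHA * (td_target - q_table[(state, action)])
    return q_table[(state, action)]

q_table = defaultdict(float)  # unseen (state, action) pairs default to 0.0
new_value = q_update(q_table, state="s0", action=1, reward=1.0,
                     next_state="s1", done=False, n_actions=3)
```

The table needs one entry per state and action pair. That is the part that stops scaling once states are full game screens.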

Instead, a deep neural network takes game frames as input (typically reduced to grayscale and resized) and estimates the value of each possible action. These predicted values are known as Q-values. The agent usually picks the action with the highest Q-value but occasionally makes a random choice to explore new strategies. This balance between choosing the best-known action and trying something new is the exploration-exploitation trade-off, and handling it well matters for effective learning.
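A common way to implement this balance is an epsilon-greedy rule, sketched below. The function names and the annealing schedule (from 1.0 down to 0.1 over one million steps) are assumptions for illustration; the article does not specify them.

```python
import random

def select_action(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    # Exploit: index of the largest predicted Q-value.
    return max(range(len(q_values)), key=lambda a: q_values[a])

def linear_epsilon(step, start=1.0, end=0.1, decay_steps=1_000_000):
    """Anneal epsilon linearly from start to end over decay_steps, then hold."""
    fraction = min(step / decay_steps, 1.0)
    return start + fraction * (end - start)
```

Early in training epsilon is high, so the agent acts almost at random. As it decays, the agent relies more on what the network has learned.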

Applying Deep Q-Learning to Space Invaders

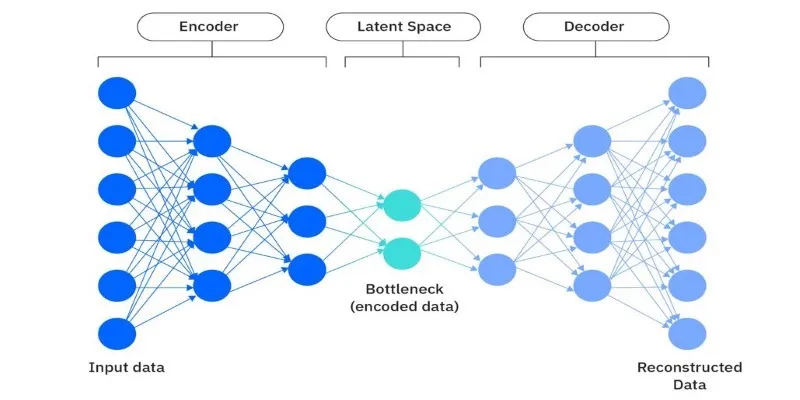

Space Invaders challenges players to shoot descending aliens while dodging their attacks, offering a fast-paced and unforgiving environment for training AI. Each frame is preprocessed into a simpler format—an 84x84 grayscale image, sometimes stacked with previous frames to infer motion.
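The preprocessing step might look roughly like the sketch below. It uses plain Python lists to stay dependency-free; in practice this is usually done with an array or image library. The stack depth of four and the nearest-neighbour resize are assumptions, and the raw frame is assumed to be a grid of `(r, g, b)` tuples.

```python
from collections import deque

SIZE = 84   # target width and height, as described above
STACK = 4   # number of frames kept together (assumed value)

def to_grayscale(frame):
    """Convert an H x W grid of (r, g, b) tuples to luminance values."""
    return [[int(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in frame]

def resize_nearest(gray, size=SIZE):
    """Downsample a 2D grid to size x size with nearest-neighbour sampling."""
    height, width = len(gray), len(gray[0])
    return [[gray[y * height // size][x * width // size] for x in range(size)]
            for y in range(size)]

class FrameStack:
    """Keep the most recent preprocessed frames as a single observation."""

    def __init__(self, k=STACK):
        self.frames = deque(maxlen=k)  # the oldest frame drops out automatically

    def push(self, frame):
        processed = resize_nearest(to_grayscale(frame))
        # On the first frame, pad with copies so the observation shape is fixed.
        while len(self.frames) < self.frames.maxlen - 1:
            self.frames.append(processed)
        self.frames.append(processed)
        return list(self.frames)
```

Stacking matters because a single still frame cannot show which way a shot or an alien is moving. Several consecutive frames can.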

The AI observes these images, chooses actions, and receives rewards based on outcomes. Shooting an alien earns points, which act as positive reward; losing a life can additionally be penalized, depending on how the reward signal is set up. These signals help the AI update its understanding of effective moves.

To stabilize training, two networks are used: one for selecting actions and another, updated less often, as a target for calculating value updates. This separation helps prevent unstable feedback loops, where the network would otherwise chase targets that shift with every update. An experience replay buffer is also used: the AI stores past transitions and learns from random samples of them rather than only the most recent ones, which breaks the correlation between consecutive frames and lets each experience be reused.
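Both ideas can be sketched without committing to a particular deep learning framework. In the example below, `target_q` is a hypothetical stand-in for the target network (any callable that returns a list of Q-values for a state), and `sync_target` stands in for copying weights from the online network. The names and the default discount factor are assumptions.

```python
import copy
import random
from collections import deque

class ReplayBuffer:
    """Fixed-size store of past transitions, sampled uniformly at random."""

    def __init__(self, capacity):
        self.memory = deque(maxlen=capacity)  # oldest transitions are evicted

    def add(self, state, action, reward, next_state, done):
        self.memory.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        return random.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)

def td_targets(batch, target_q, gamma=0.99):
    """Compute reward + gamma * max Q_target(next_state) for each transition."""
    targets = []
    for _state, _action, reward, next_state, done in batch:
        # No bootstrapping past the end of an episode.
        bootstrap = 0.0 if done else max(target_q(next_state))
        targets.append(reward + gamma * bootstrap)
    return targets

def sync_target(online_weights):
    """Return an independent copy of the online weights for the target network."""
    return copy.deepcopy(online_weights)
```

In a training loop, the online network would be fitted to these targets on every step, while `sync_target` would be called only every few thousand steps. That interval is a tunable choice and is not specified here.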

Challenges in Training and Performance

Training an AI with Deep Q-Learning isn’t straightforward. Rewards in Space Invaders arrive only when something scores, so the AI may go many steps without feedback, and the reward for a shot arrives well after the decision to fire. This makes progress hard to assess and raises the issue of credit assignment: determining which past actions contributed to success.
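One way to see how a delayed reward is credited to earlier actions is the discounted return, where each step's value is its own reward plus a discounted share of what follows. The sketch below assumes a single recorded episode and a discount factor of 0.5, chosen only to keep the arithmetic easy to follow.

```python
def discounted_returns(rewards, gamma=0.99):
    """Return G_t = r_t + gamma * G_{t+1} for every step of one episode."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step can reuse the return of the step after it.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns

# Three steps with no feedback, then a single reward on the last step.
returns = discounted_returns([0.0, 0.0, 0.0, 1.0], gamma=0.5)
# returns == [0.125, 0.25, 0.5, 1.0]
```

The earlier a step is, the smaller its share of the final reward. Deep Q-Learning does not compute these returns directly; it approximates the same quantity one step at a time through its bootstrapped targets.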

Overfitting can occur if the AI becomes too reliant on a single strategy, making it vulnerable to slight game variations. To mitigate this, randomness is added during training, encouraging diverse strategies and adaptability.

Despite these challenges, a well-trained AI can outperform human players, learning to time shots, anticipate alien movements, and avoid danger. These skills are not preprogrammed but result from extensive gameplay and gradual improvement.

Relevance of Space Invaders Today

Though Space Invaders is decades old, it remains a valuable experiment in training AI. It’s simple enough to run with limited resources yet complex enough to demand learning. The game’s visible reward system, clear objectives, and increasing difficulty make it ideal for testing AI capabilities.

Applying Deep Q-Learning to Space Invaders demonstrates how machines can develop behavior from scratch. The agent starts with random actions and frequent failures but gradually acts with purpose through trial and reward feedback. These learned behaviors stem from experience rather than hardcoded instructions.

The significance extends beyond games. The same learning principles apply to fields like robotics, navigation, and process optimization. The AI’s ability to make decisions, adapt to new situations, and learn from results can be leveraged in real-world systems. Training an AI to succeed at Space Invaders highlights how machines can evolve from trial to skill.

Conclusion

Deep Q-Learning with Space Invaders is more than a technical exercise. It’s a hands-on demonstration of how machines learn by doing—without instructions, scripts, or shortcuts. Through countless games and steady feedback, the AI refines its timing, aim, reaction, and strategy. This method reflects the broader concept of reinforcement learning: learning through interaction, adaptation, and long-term reward-based behavior. Watching a machine improve in a classic game may seem simple, but it opens the door to applications where machines learn from environments much more complex than pixels and aliens. This gives Space Invaders new relevance and lasting value in modern AI research.
