Python takes care of memory behind the scenes, making coding smoother. But have you ever wondered how it actually works? Every variable, function, and object you create has to live somewhere in memory, and Python handles this without making you think about it. Through a mix of dynamic memory allocation, reference counting, and garbage collection, Python keeps memory use efficient and guards against most memory leaks.

However, understanding how memory management operates can make your code more efficient and help avoid performance bottlenecks. Let’s demystify it so it finally makes sense.

How Does Python Allocate and Manage Memory?

Python uses dynamic memory allocation, meaning memory for an object is allocated at runtime, when the object is created, rather than being laid out before the program starts. In CPython, the reference implementation, small objects (512 bytes or less) are by default served from an internal allocator that carves memory out of larger pre-allocated regions, while larger requests are passed to the system allocator. This reduces fragmentation and speeds up execution by avoiding frequent requests to the operating system for memory.

Memory management in Python revolves around a private heap, where all objects and data structures are placed. Python’s memory manager controls this heap, allocating and freeing memory on the program’s behalf. Unlike low-level programming languages that let programmers work with memory addresses directly, ordinary Python code does not read or write raw heap memory, which reduces the risk of memory corruption.

CPython’s small-object allocator groups memory blocks by size class, which keeps allocation fast and reduces (though does not eliminate) fragmentation. CPython also reuses certain objects instead of creating new ones repeatedly: small integers (-5 through 256) are cached, and some strings, such as names used in code, are interned. This avoids repeated allocations for commonly used values, which helps in loops and repeated operations.
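To make the reuse of string objects concrete, here is a minimal sketch using the standard library's sys.intern. The helper name build and the sample text are made up for illustration, and which strings Python interns on its own is an implementation detail, so the example interns them explicitly.

```python
import sys


def build(text):
    # Assemble the string at runtime so it is not a compile-time constant.
    return "".join(list(text))


first = build("memory-management")
second = build("memory-management")
print(first == second)  # the two strings have equal contents

# sys.intern returns one canonical object for equal strings.
first_interned = sys.intern(first)
second_interned = sys.intern(second)
print(first_interned is second_interned)  # both names refer to one object
```

Interning trades a lookup in an internal table for fewer duplicate string objects, so it tends to pay off only when the same text appears many times.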

Understanding Reference Counting in Python

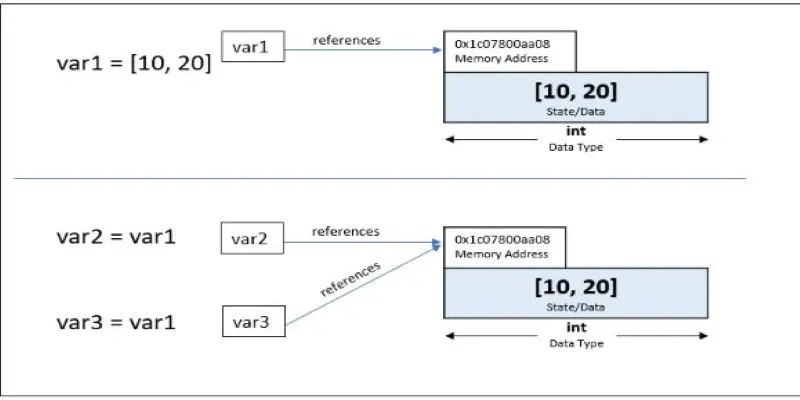

One of Python’s core memory management techniques is reference counting, which tracks the number of references to an object. Every object in Python has an associated reference count that increases when a new reference is assigned and decreases when a reference is removed. When an object’s reference count reaches zero, Python automatically removes it from memory.

For example:

a = [1, 2, 3]  # A list is created and assigned to variable 'a'
b = a          # 'b' now also references the same list, increasing the reference count
del a          # 'a' is deleted, but the object still exists because 'b' holds a reference
del b          # 'b' is deleted, the reference count reaches zero, and the list is removed from memory
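The same sequence can be observed at runtime. The sketch below assumes CPython, where an object is freed as soon as its count reaches zero. Payload is a hypothetical stand-in class (plain lists cannot be weakly referenced), and only the difference between two sys.getrefcount readings is used, because the absolute number includes temporary references.

```python
import sys
import weakref


class Payload:
    """Hypothetical placeholder object that supports weak references."""


def refcount_demo():
    a = Payload()
    probe = weakref.ref(a)  # a weak reference does not keep the object alive
    before = sys.getrefcount(a)
    b = a  # second strong reference to the same object
    after = sys.getrefcount(a)
    del a
    alive_after_first_del = probe() is not None  # 'b' still holds it
    del b
    alive_after_second_del = probe() is not None  # no references remain
    return after - before, alive_after_first_del, alive_after_second_del


delta, still_alive, alive_at_end = refcount_demo()
print(delta, still_alive, alive_at_end)
```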

While reference counting works well for most scenarios, it has a limitation: circular references. A circular reference occurs when objects refer to each other (or an object refers to itself), so their reference counts never reach zero even after the program can no longer reach them. Python solves this issue with garbage collection, which is designed to find and free such unreachable cycles.

How Does Garbage Collection Work in Python?

Python’s garbage collector supplements reference counting by detecting and cleaning up circular references. It tracks container objects (such as lists, dictionaries, and class instances) and groups them into generations based on how long they have lived. New objects start in the youngest generation, and each time they survive a collection of their generation, they move to the next older one.

The generational garbage collection process works as follows:

- Generation 0: New objects are created here. Since most objects in a program are temporary, this generation is frequently cleared.

- Generation 1: Objects that survive a generation 0 collection move here, as they are likely to persist longer.

- Generation 2: The oldest objects, which remain in memory for an extended period, are stored in this generation and are rarely collected.
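The generations listed above can be inspected from Python itself. This is a small sketch using gc.get_threshold and gc.get_count from the standard library; the helper name describe_generations is made up here, and the exact numbers (and how newer CPython versions interpret them) vary by version.

```python
import gc


def describe_generations():
    thresholds = gc.get_threshold()  # collection thresholds, one per generation
    counts = gc.get_count()          # current collection counters
    return list(zip(range(len(thresholds)), thresholds, counts))


for generation, threshold, count in describe_generations():
    print(f"generation {generation}: threshold={threshold}, count={count}")
```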

Python runs garbage collection automatically, but developers can manually control it using the gc module. For example, to trigger garbage collection manually, you can use:

import gc

gc.collect()  # Forces garbage collection
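To see a reference cycle being cleaned up, consider the sketch below. Node and collect_cycle are hypothetical names for illustration. Automatic collection is paused during the demo so that only the explicit gc.collect() call can free the cycle.

```python
import gc
import weakref


class Node:
    """Hypothetical class used only to build a reference cycle."""

    def __init__(self):
        self.partner = None


def collect_cycle():
    was_enabled = gc.isenabled()
    gc.disable()  # keep automatic collection from running mid-demo
    try:
        first, second = Node(), Node()
        first.partner, second.partner = second, first  # the cycle
        probe = weakref.ref(first)
        del first, second  # unreachable now, but the counts are not zero
        alive_before = probe() is not None
        gc.collect()  # the cycle detector frees both objects
        alive_after = probe() is not None
    finally:
        if was_enabled:
            gc.enable()
    return alive_before, alive_after


print(collect_cycle())
```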

While Python’s garbage collection system is generally efficient, excessive use of cyclic references can slow down performance. Developers should aim to write clean, well-structured code to minimize unnecessary memory consumption.

Best Practices for Optimizing Memory Usage in Python

Even though Python automates memory management, several techniques can optimize memory usage and improve program performance:

Use Generators Instead of Lists

Lists store all elements in memory, whereas generators produce values on demand. Using generators is more memory-efficient when working with large datasets.

def large_dataset():
    for i in range(1000000):
        yield i  # Generates values without storing them in memory
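The difference is easy to measure. In this sketch, squares_list and squares_gen are made-up helper names, and sys.getsizeof reports only the size of the container object itself, not the integers it refers to, so treat the numbers as a rough comparison.

```python
import sys


def squares_list(n):
    return [i * i for i in range(n)]  # every value is stored at once


def squares_gen(n):
    return (i * i for i in range(n))  # values are produced on demand


n = 100_000
as_list = squares_list(n)
as_gen = squares_gen(n)

print(sys.getsizeof(as_list))  # grows with n
print(sys.getsizeof(as_gen))   # small and independent of n
print(sum(as_gen) == sum(as_list))  # same values either way
```

Keep in mind that a generator can be consumed only once, so it suits single-pass processing rather than repeated lookups.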

Choose the Right Data Structures

Selecting efficient data types can reduce memory usage. For instance, a tuple typically consumes less memory than a list with the same elements: because a tuple is immutable, its size is fixed at creation and it needs no spare capacity for growth.
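A quick way to check the tuple versus list claim is sys.getsizeof. The sketch below assumes CPython; the exact byte counts differ between versions, so none are shown.

```python
import sys

as_list = [i for i in range(1_000)]  # built by appending, so it may hold spare capacity
as_tuple = tuple(as_list)            # fixed size, same elements

print(sys.getsizeof(as_list))
print(sys.getsizeof(as_tuple))
print(sys.getsizeof(as_tuple) < sys.getsizeof(as_list))
```

Both numbers cover only the container, since the elements are shared between the two objects.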

Avoid Unnecessary Object Creation

Creating multiple copies of the same object increases memory usage. To reduce memory allocation overhead, reuse existing objects where possible.
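As a rough illustration, the sketch below compares a list of 1,000 separate copies with a list of 1,000 references to one shared object. DEFAULTS and count_and_size are hypothetical names, and sharing is only safe when the shared object is treated as read-only, which is why a tuple is used here.

```python
import sys

DEFAULTS = tuple(range(100))  # hypothetical read-only settings


def count_and_size(items):
    # Deduplicate by identity, then add up the container sizes.
    unique = {id(obj): obj for obj in items}
    total = sum(sys.getsizeof(obj) for obj in unique.values())
    return len(unique), total


copies = [list(DEFAULTS) for _ in range(1_000)]  # 1,000 separate lists
shared = [DEFAULTS for _ in range(1_000)]        # 1,000 references to one tuple

print(count_and_size(copies))
print(count_and_size(shared))
```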

Profile and Monitor Memory Usage

Using third-party tools like memory_profiler and objgraph, developers can analyze how their programs consume memory and detect inefficiencies.

from memory_profiler import profile

@profile
def memory_intensive_function():
    data = [x for x in range(1000000)]  # Creates a large list in memory

memory_intensive_function()
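When installing a third-party package is not an option, the standard library's tracemalloc module gives a rough picture too. Note that this is a different tool from memory_profiler, with different output. The function name measure_list_build is made up for this sketch.

```python
import tracemalloc


def measure_list_build(n=100_000):
    tracemalloc.start()
    data = [x for x in range(n)]  # the allocation being measured
    current, peak = tracemalloc.get_traced_memory()  # bytes now, bytes at peak
    tracemalloc.stop()
    return len(data), current, peak


length, current, peak = measure_list_build()
print(f"{length} items, current={current} bytes, peak={peak} bytes")
```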

Manually Manage Garbage Collection When Necessary

Controlling garbage collection can improve efficiency in high-performance applications, for example by pausing the collector during allocation-heavy phases that create no reference cycles. Adjust the garbage collector’s behavior only after measuring, since the right settings depend on the application’s workload.
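One possible pattern, sketched under the assumption that the wrapped code creates no reference cycles, is to pause the cyclic collector for a short stretch of work. The names gc_paused and build_rows are hypothetical; only gc.isenabled, gc.disable, and gc.enable come from the standard library. Reference counting keeps working while the collector is paused.

```python
import gc
from contextlib import contextmanager


@contextmanager
def gc_paused():
    """Temporarily disable the cyclic collector, then restore its state."""
    was_enabled = gc.isenabled()
    gc.disable()
    try:
        yield
    finally:
        if was_enabled:
            gc.enable()


def build_rows(n):
    with gc_paused():
        paused = not gc.isenabled()
        rows = [{"id": i} for i in range(n)]  # many allocations, no cycles
    return rows, paused


rows, paused = build_rows(10_000)
print(len(rows), paused, gc.isenabled())
```

Whether this helps at all depends on the workload, so it is worth profiling before and after.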

Conclusion

Python’s memory management automates resource handling through dynamic memory allocation, reference counting, and garbage collection, which prevents most memory leaks and limits fragmentation. Developers can still reduce memory usage further by selecting appropriate data structures, using generators, avoiding unnecessary object creation, and profiling memory consumption. Understanding these mechanics makes it easier to write code that stays fast and responsive, even with large datasets.
