AI is everywhere—from guiding your music app recommendations to supporting medical diagnoses. But what happens to the data that trains these models? More importantly, how can we use AI without compromising privacy? Enter Substra.

Substra allows AI systems to learn from sensitive data—like medical records or financial transactions—without that data ever leaving its original location. It flips the usual data flow upside down and brings the model to the data instead.

How Substra Protects Your Data

Typically, AI systems gather and centralize data for training, which can risk privacy. With Substra, instead of pooling data, it keeps data at its source and sends the code to it. This is called federated learning.

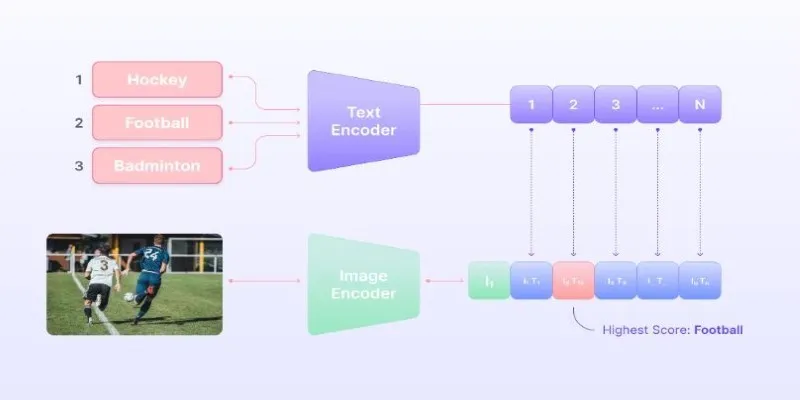

Understanding Federated Learning

In federated learning, the training algorithm travels to where the data lives—hospital systems, bank servers, etc. The model learns locally and only sends back updated weights, never personal records or raw files. Imagine having a private tutor visit your house; it’s personal and secure.

Substra in Action

In practice, Substra operates within controlled environments called nodes, representing different data owners. The AI model visits each node, trains on that data, and continues to the next.

Illustration of federated learning in action.

Illustration of federated learning in action.

Practical Example

- Hospital A has MRI scans and diagnoses.

- Hospital B holds CT scans and treatment responses.

Neither can share raw data, but both want a predictive model. Substra allows the model to train at each hospital without sharing data. Updates are aggregated securely, benefiting all without compromising privacy.

Building with Substra: Step-by-Step

Step 1: Define the Objective

Start with a clear objective compatible with distributed training, like predicting loan defaults or identifying image anomalies. Define metrics like accuracy or F1 score to evaluate progress.

Step 2: Package the Training Code

Prepare your training logic as Docker containers, ensuring code, dependencies, and environment are bundled. This includes an opener script to access data at each node without exposing it.

Step 3: Deploy Across Nodes

Each data-owning party runs a Substra node. Push your code to the network, and nodes execute training locally, sending only necessary updates back.

Step 4: Aggregate and Update

After rounds of training, Substra aggregates insights using an algorithm like FedAvg, enhancing the model without data exposure.

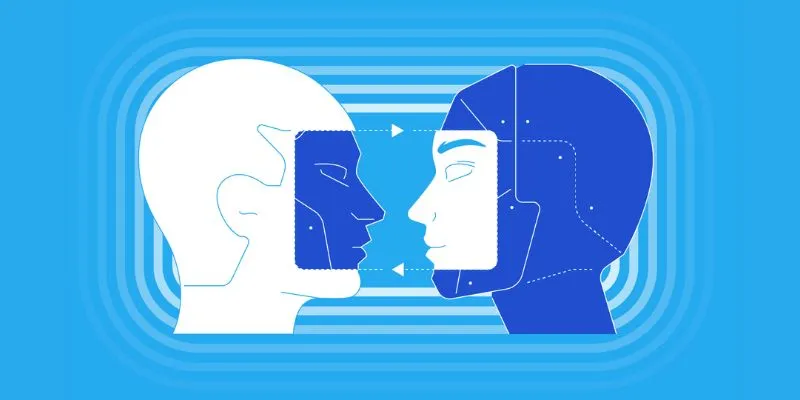

Example of an AI model training across different nodes.

Example of an AI model training across different nodes.

Tips for Smooth Integration

- Keep Code Lightweight: Ensure your training logic is clean and modular.

- Log Minimally: Track model metrics, not data details.

- Version Everything: Version control is essential with multiple collaborators.

- Test Locally First: Validate your setup before network-wide execution.

- Mind Compute Limits: Ensure designs accommodate varying hardware capacities.

Conclusion

Creating AI doesn’t have to sacrifice privacy. Substra enables smart, respectful systems by moving code—not data—while maintaining transparency. Whether with hospitals, banks, or other data-sensitive organizations, Substra facilitates collaborative, private AI development. That’s a win for everyone.

zfn9

zfn9