Artificial Intelligence is revolutionizing video creation. With NVIDIA COSMOS 1.0, generating videos that mimic real-life footage is now a reality. This advanced AI model utilizes diffusion technology to produce high-quality videos from simple inputs or text prompts. It’s fast, flexible, and surprisingly adept at understanding user intentions. This post will guide you through the workings of COSMOS 1.0, its significance, and how it stands out from other video generation tools.

What Is NVIDIA COSMOS 1.0?

NVIDIA COSMOS 1.0 is an AI-driven video generation model that converts textual descriptions or image inputs into high-quality, realistic video sequences. Developed by NVIDIA, this model uses a diffusion-based architecture, a technique gaining traction in AI for its ability to generate high-resolution content.

Instead of producing an entire video in one go, COSMOS 1.0 builds it incrementally through a series of steps that “denoise” random noise into coherent, photorealistic visuals. This gradual refinement helps the model maintain visual accuracy and continuity across frames.

Core Features of COSMOS 1.0

NVIDIA COSMOS 1.0 is packed with features designed to deliver professional-grade video content. From flexible input options to high-speed generation, the model caters to a wide range of use cases.

Some of its standout features include:

- Text-to-Video Generation: Users can provide natural language descriptions to generate matching video scenes.

- Multi-Modal Input Support: Accepts both text and images to guide the generation process.

- High Temporal Consistency: Maintains consistent colors, shapes, and lighting across video frames.

- Natural Motion Dynamics: Delivers smoother, more realistic movement than previous video generators.

- High Resolution: Outputs videos in HD quality, minimizing artifacts or blurring.

- GPU Optimization: Specifically designed to run efficiently on NVIDIA GPUs, ensuring faster processing times.

These features make COSMOS 1.0 a versatile solution for content creators, educators, game developers, and visual storytellers.

The Diffusion Model Behind COSMOS 1.0

At the heart of COSMOS 1.0 is a diffusion-based generation pipeline. During training, noise is added to the training data and the model learns to reverse that noising process to recover the original signal. During video generation, the model applies what it learned: it starts from pure noise and gradually refines it into coherent video frames.
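The training-time noising step can be illustrated with a small standard-library sketch. It uses the common closed-form recipe from the diffusion literature, x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps, with a linear noise schedule. The schedule values, the function names `make_alpha_bars` and `add_noise`, and the 16-value "signal" are all assumptions chosen for illustration; nothing here is taken from the COSMOS codebase.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal-retention factors for a linear noise (beta) schedule.

    The start/end values are common textbook defaults, assumed here.
    """
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        running *= 1.0 - beta  # fraction of the original signal still present
        alpha_bars.append(running)
    return alpha_bars


def add_noise(clean, alpha_bar, rng):
    """Return (noisy, noise) where noisy = sqrt(ab) * clean + sqrt(1 - ab) * noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in clean]
    keep = math.sqrt(alpha_bar)
    spread = math.sqrt(1.0 - alpha_bar)
    noisy = [keep * x + spread * e for x, e in zip(clean, noise)]
    return noisy, noise


if __name__ == "__main__":
    rng = random.Random(0)
    alpha_bars = make_alpha_bars(1000)
    clean = [math.sin(i / 4.0) for i in range(16)]  # stand-in for pixel values
    for t in (0, 499, 999):
        noisy, _ = add_noise(clean, alpha_bars[t], rng)
        print(f"t={t:4d}  signal weight={math.sqrt(alpha_bars[t]):.3f}")
```

During training, a network would be shown `noisy` and asked to predict `noise`; by the last timestep almost none of the original signal remains, which is why generation can begin from pure noise.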

Here’s how the process works in simple steps:

- Step 1 (Noise Initialization): The model begins with a noisy sequence representing random data.

- Step 2 (Prompt Conditioning): Text or image input guides the model’s understanding of what the video should contain.

- Step 3 (Denoising Iterations): Each step removes a bit of noise, revealing clearer content over time.

- Step 4 (Frame Assembly): Once all frames are generated, they are assembled to form a complete, smooth video.

This approach allows COSMOS to generate visuals with strong structural integrity, reducing flickering and improving motion realism.
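The four steps above can be sketched as a toy sampling loop. This is only an illustration of the control flow under heavy assumptions: the trained neural network is replaced by an "oracle" that already knows the clean answer, the prompt is turned into a target by a hypothetical helper (`target_from_prompt`), and the update rule is a deterministic DDIM-style step over a made-up linear schedule. None of these names or values come from COSMOS itself.

```python
import math
import random


def target_from_prompt(prompt, num_frames, pixels_per_frame):
    """HYPOTHETICAL stand-in for conditioning: map a prompt to a fixed 'clean video'."""
    rng = random.Random(sum(ord(c) for c in prompt))
    return [rng.uniform(-1.0, 1.0) for _ in range(num_frames * pixels_per_frame)]


def oracle_noise_prediction(x_t, target, alpha_bar):
    """Stand-in for the trained network: solve x_t = sqrt(ab)*x0 + sqrt(1-ab)*eps for eps."""
    keep, spread = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(x - keep * x0) / spread for x, x0 in zip(x_t, target)]


def generate(prompt, num_frames=4, pixels_per_frame=6, num_steps=20, seed=0):
    rng = random.Random(seed)
    # Assumed schedule: alpha_bar runs from 1.0 (clean) down to 0.001 (almost pure noise).
    alpha_bars = [1.0 - 0.999 * k / num_steps for k in range(num_steps + 1)]

    # Step 1: noise initialization
    x = [rng.gauss(0.0, 1.0) for _ in range(num_frames * pixels_per_frame)]

    # Step 2: prompt conditioning (here reduced to picking a target)
    target = target_from_prompt(prompt, num_frames, pixels_per_frame)

    # Step 3: denoising iterations (deterministic DDIM-style update)
    for k in range(num_steps, 0, -1):
        ab_t, ab_prev = alpha_bars[k], alpha_bars[k - 1]
        eps = oracle_noise_prediction(x, target, ab_t)
        x0_hat = [(xi - math.sqrt(1.0 - ab_t) * e) / math.sqrt(ab_t)
                  for xi, e in zip(x, eps)]
        x = [math.sqrt(ab_prev) * x0 + math.sqrt(1.0 - ab_prev) * e
             for x0, e in zip(x0_hat, eps)]

    # Step 4: frame assembly (split the flat sequence into frames)
    return [x[i * pixels_per_frame:(i + 1) * pixels_per_frame]
            for i in range(num_frames)]


if __name__ == "__main__":
    frames = generate("A city skyline at sunset with cars driving on the highway")
    for i, frame in enumerate(frames):
        print(i, [round(v, 2) for v in frame])
```

Because the oracle is exact, the loop recovers its target almost perfectly; a real model only approximates the noise at each step, which is why output quality depends on how well the network was trained and how many denoising steps are run.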

How COSMOS 1.0 Outperforms Other AI Video Tools

While there are several AI video generation tools available—such as Sora by OpenAI, Pika Labs, and Runway ML—COSMOS 1.0 sets itself apart in several important ways.

COSMOS offers a superior experience through:

- Better Scene Cohesion: Frames transition smoothly without visual jumps or inconsistencies.

- Faster Rendering: Optimized for NVIDIA hardware, COSMOS processes videos quicker than many cloud-based services.

- Flexible Input Handling: Combines different types of inputs to produce more nuanced results.

- More Accurate Prompt Interpretation: Understands and reflects complex prompts better than many competitors.

In side-by-side comparisons, COSMOS frequently delivers higher visual fidelity and more coherent motion, making it ideal for professional applications.

Real-World Applications of COSMOS 1.0

COSMOS 1.0 isn’t just a tech demo—it’s a highly functional model ready for use across multiple industries. Its ability to quickly transform ideas into visual content makes it a game-changer for professionals and creatives.

Common use cases include:

- Film and Animation: Generate concept videos, storyboards, or full scenes.

- Marketing and Advertising: Create eye-catching ads from product descriptions.

- Game Development: Design animated cutscenes or background environments.

- Education and E-Learning: Visualize complex topics or historical events.

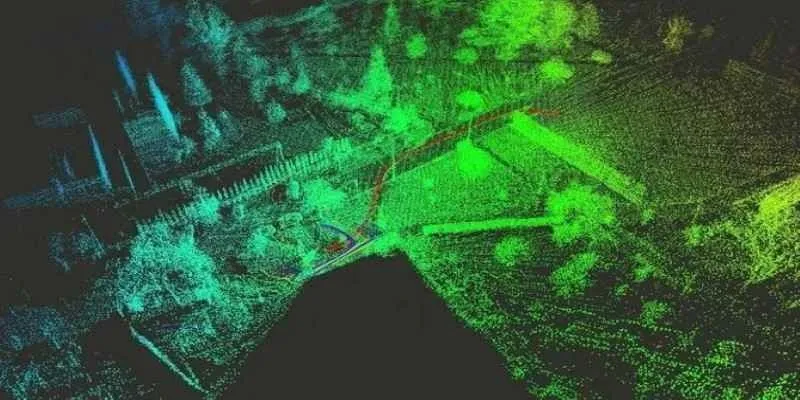

- Simulation and Training: Generate realistic environments for skill development.

Its user-friendly interface and fast generation time make it especially useful for teams that need to iterate quickly and stay visually consistent.

Challenges and Limitations

While COSMOS 1.0 is impressive, it is not without its challenges. Users must understand its limitations to make the most of its capabilities.

Some of the current limitations include:

- Hardware Dependency: The model requires a powerful NVIDIA GPU to function efficiently.

- Prompt Sensitivity: Vague or overly complex prompts may lead to undesired results.

- Motion Complexity: Extremely fast or unpredictable motion can still introduce artifacts.

- Limited Audio Support: COSMOS 1.0 currently focuses only on visuals; audio must be added separately.

As NVIDIA continues to refine the model, many of these limitations are expected to improve in future versions.

How to Start Using COSMOS 1.0

Access to COSMOS 1.0 is generally offered through NVIDIA’s research platforms or partnerships. Users interested in trying it out need to prepare their environment accordingly.

Basic requirements to get started:

- A compatible NVIDIA GPU (RTX 30-series or higher recommended)

- Installed drivers and CUDA toolkit

- Python environment and basic ML framework knowledge

- Access to COSMOS model weights (via GitHub or NVIDIA NGC)

- Sample prompts or test inputs for experimentation
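Before downloading any weights, it can help to confirm that the basics in this list are in place. The script below is a minimal sketch using only the Python standard library: it looks for the `nvidia-smi` and `nvcc` executables on the PATH, asks `nvidia-smi` for GPU names, and checks whether PyTorch is importable. Treating PyTorch as the ML framework is an assumption made for this example, and the function name `check_environment` is hypothetical.

```python
import importlib.util
import shutil
import subprocess
import sys


def check_environment():
    """Collect a small report on GPU driver, CUDA toolkit, and framework availability."""
    report = {
        "python_version": sys.version.split()[0],
        "nvidia_smi_found": shutil.which("nvidia-smi") is not None,  # driver tools
        "nvcc_found": shutil.which("nvcc") is not None,              # CUDA toolkit compiler
        "torch_installed": importlib.util.find_spec("torch") is not None,  # assumed framework
        "gpu_names": [],
    }
    if report["nvidia_smi_found"]:
        try:
            result = subprocess.run(
                ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
                capture_output=True, text=True, timeout=10, check=False,
            )
            if result.returncode == 0:
                report["gpu_names"] = [
                    line.strip() for line in result.stdout.splitlines() if line.strip()
                ]
        except (OSError, subprocess.TimeoutExpired):
            pass  # leave gpu_names empty if the query fails
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

An empty `gpu_names` list or a missing `nvcc` usually means the driver or CUDA toolkit still needs to be installed before the model can run locally.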

Once the setup is complete, users can generate videos by inputting descriptive text like “A city skyline at sunset with cars driving on the highway” or “A child blowing bubbles in a sunny park.”

The system then generates a video matching the input description with realistic animation and lighting.

Conclusion

NVIDIA COSMOS 1.0 is a significant advancement in AI-driven video generation. With its diffusion-based approach, it delivers realistic visuals, smooth motion, and versatile input handling. For anyone looking to explore the world of AI-generated content, COSMOS offers a practical and powerful entry point. By combining technical sophistication with creative flexibility, COSMOS 1.0 is set to transform how videos are imagined, designed, and produced. Whether it’s for education, marketing, gaming, or entertainment, COSMOS 1.0 is shaping the future of video—one realistic frame at a time.
